Are you following our On-Prem to the Cloud Series via the DevOps Lab on Channel 9? If not, you should be! In this week’s episode, number 8 in the series, we continue to build on the skills we have learned throughout each episode. So far we have moved our Mercury Health application from our on-prem server to Azure App Service, where it now runs as Platform as a Service (PaaS). Last week, Jay Gordon walked us through how to plan for database migration, and we now have our DB in Azure as well via Azure SQL Server.

This week, I walk Damian Brady through some of the considerations to weigh when you plan your journey towards a true cloud native architecture, especially if you’re curious about containers! If you missed it, you can watch it below!

Now, in this 20 minute episode we cover a broad range of considerations and review the pathway to migrate Mercury Health, which is currently written in ASP.NET 4.x, to a container. We review why you want your application running in a container, as well as the benefits containers offer.

This post will dive deeper into the technical specifics behind the overview we discuss in the video.

First, let’s recap the definition of what a container is – a container is not a real thing. It’s not. It’s an application delivery mechanism with process isolation. In fact, in other videos I have made on YouTube, I compare how a container is similar to a waffle, or even a glass of whiskey. If you’re new to containers, I highly recommend checking out my “Getting Started with Docker” video series available here.

Second, let’s simplify what a Dockerfile actually is – the TL;DR is it’s an instruction manual for the steps you need to either simply run, or build and run your application. That’s it. At its most basic level, it’s just a set of instructions for your app to run, which can include the ports it needs, the environment variables it can consume, the build arguments you can pass, and the working directories you will need.

Now, since a container’s sole goal is to deliver your application with only the processes your application needs to run, we can take that information and begin to think about our existing application architecture. In the case of Mercury Health, and many similar customers who are planning their migration path from on-prem to the cloud, we have a legacy application that is not currently architected for cross platform support – i.e., it only runs on Windows. That’s okay! Let’s talk about Windows Containers…

Windows Containers

Windows Containers are a great way to utilize the benefits containers offer (smaller footprint, faster startup time, better performance than a traditional VM, and easier to maintain than VM environments), and reduce the amount of time needed initially to refactor your application.

If you have a legacy ASP.NET application for example, and you’re currently using Visual Studio 2019, there is a very easy upgrade path available to add Docker and Container Orchestration support to your solution file.

Once you have the added Docker files (a Docker-Compose.yml to help with your local debugging experience is included) you should be able to run your ASP.NET application within a Windows Container on your system. Now, let’s break down how this Dockerfile is working.

Sample of a Dockerfile generated by Visual Studio 2019:

#Depending on the operating system of the host machines(s) that will build or run the containers, the image specified in the FROM statement may need to be changed.

#For more information, please see https://aka.ms/containercompat

FROM mcr.microsoft.com/dotnet/framework/aspnet:4.8-windowsservercore-ltsc2019

ARG source

WORKDIR /inetpub/wwwroot

COPY ${source:-obj/Docker/publish} .

We start with FROM, which sets the base image for your application. A container built from this base image shares a kernel with the host OS, and the image itself includes the dependencies needed to run (not build) our app. Windows Server containers do differ from Linux containers in this regard, because the kernel and user mode components cannot be separated as cleanly in Windows as they can in Linux. There’s a helpful doc here if you’d like to learn more about how this can affect host version and container image versions specifically when it comes to Windows containers. In this instance, we use a Windows Server 2019 (ltsc2019) image since we are using Windows 10 version 20H2 and ASP.NET 4.8. The shared kernel is also why you cannot run a Windows container on *nix operating systems.

Next, we declare a build argument named source – this is an argument we can set at build time through the use of the --build-arg flag. We follow this up by setting our working directory to /inetpub/wwwroot, which is the same path we would use if we were on a traditional IIS Windows Server.

Finally, we run a COPY command with what is called a variable substitution; basically, when this image is built, Docker will take the source argument (if it has been set) and copy that path to the previously set working directory of the container; if that value has not been set, it will copy the default path, obj/Docker/publish, to . (i.e., the working directory).
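The `${source:-obj/Docker/publish}` form follows the same "use a default when unset" rule as POSIX shell parameter expansion, so a quick way to get a feel for it is to try it in a shell. This is only a sketch of the substitution rule, not of what Visual Studio does internally, and the `bin/Release/Publish` path is a hypothetical value chosen for illustration.

```shell
# Case 1: 'source' is not set, so the default after ':-' is used.
unset -v source
default_path="${source:-obj/Docker/publish}"
echo "source unset -> $default_path"

# Case 2: 'source' is set (the Dockerfile equivalent would be passing
# --build-arg source=bin/Release/Publish), so its value is used instead.
# bin/Release/Publish is a hypothetical path for illustration only.
source="bin/Release/Publish"
custom_path="${source:-obj/Docker/publish}"
echo "source set   -> $custom_path"
```

In Docker terms, the second case corresponds to something like `docker build --build-arg source=bin/Release/Publish -t mercuryhealth:local .`, where the image tag is also just an example name.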

This Dockerfile assumes the msbuild of our .sln file has already happened and thus the /bin and /obj folders have already been created. This is perhaps the easiest way to get started with containers, but there are a few areas we can improve.

-

This Dockerfile along with our current application’s state, allows zero way to input a connection_string value upon run. We either have to hardcode the connection string in our web.config, or we can make some code changes to support something like configuration builders.

-

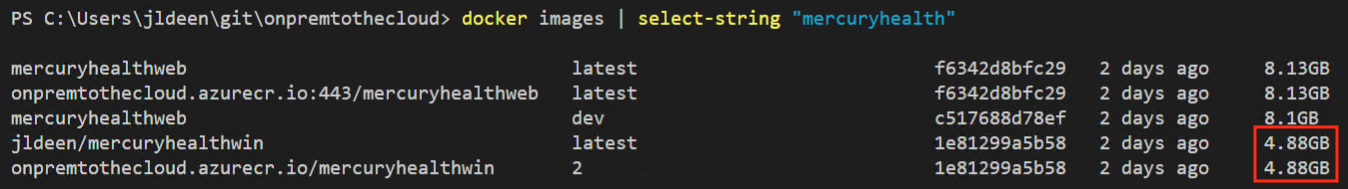

The image this Dockerfile builds is just under 10GB, coming in at 8.13GB. That’s quite large, and while it certainly reduces our VM footprint, it’s still too large to suggest we have gained much in terms of performance enhancements.

- We haven’t solved the “it works on my machine” issue since the build is still contingent on the host machine having both Visual Studio installed, and running msbuild prior to building your Docker image.

We can tackle these issues with a modified Dockerfile, which you can see below.

# escape=`

FROM mcr.microsoft.com/dotnet/framework/sdk:4.8-windowsservercore-ltsc2019 AS build

## Set Working Directory

WORKDIR /app

## Copy all working directory files to container path /app

COPY ./Web/src .

# COPY . .

## Nuget restore solution file

RUN nuget restore MercuryHealth.sln;

## MSBuild solutionfile

RUN msbuild 'MercuryHealth.sln' /p:configuration='Release' /p:platform='Any CPU' `
    /p:VisualStudioVersion=16.0

## 2nd stage begins

FROM jldeen/iis:2

## Set IIS Working Directory

WORKDIR /inetpub/wwwroot

## Copy files from previous stage

COPY --from=build /app/MercuryHealth.Web/. ./

## Expose port 80

EXPOSE 80

This updated Dockerfile is what we call a multistage Dockerfile. In it, we use nuget to restore our packages, and msbuild to build our solution file inside the container. Then we use a custom IIS image to copy the output from the msbuild task to this runtime container image, and we expose port 80, since that is the port our application listens on.

In multistage Dockerfiles, only the final stage is what ends up becoming a part of our image and ultimately running in our container. This means none of the dev dependencies needed (i.e., the ASP.NET 4.8 SDK) are passed to our final stage, and as a result, our custom IIS image is significantly smaller. Our custom image also has a PowerShell script it runs, which handles our connection_string dilemma. In fact, a good friend of mine, Anthony Chu, has a great blog post where he walks us through how to override your Web.config settings without any code changes.

These intentional changes and considerations have helped us to cut our existing Docker image size by roughly 40%. We are now at 4.9GB in size!

Again, this is a great improvement with very little rearchitecting needed on our part, or our developers’ part, BUT this is not sustainable. A nearly 5GB image is still too large to offer any true performance benefits, especially when, as we saw in our video, it takes almost 10 minutes to pull our image and start our container in Azure (whether that be in Azure App Service with Windows Containers, or Azure Container Instances – not recommended for long running applications).

This leads us into a true rearchitecting reality – Anthony’s solution for overriding Web.config values is genius, it really is, but at some point, we will have to make code changes. When the time comes to make those code changes, we really should evaluate the code as a whole – would it not make sense to rearchitect to something lighter, something faster, and something all developers could collaborate on with something cross platform like .NET 5.0?

In our example video, I took an older version of Mercury Health, which was written in .NET Core, and continued with our container journey. Let’s now talk about Linux Containers.

Xplat containers (aka Linux Containers)

Linux Containers, by the nature of *nix systems, are significantly smaller than Windows containers. You can have different “flavors” of Linux containers, but the two most popular are Debian based (think Ubuntu) and Alpine based (i.e., Alpine Linux). The latter is the smallest, but comes with a slight learning curve if you are unfamiliar with the differences between Debian and Alpine (apt vs apk for package manager commands, for example). Generally speaking, drilling into Linux itself is outside the scope of this blog post, but if you’re interested in learning more, drop a comment below and I can write a follow up blog post.

Let’s focus on the .NET Core Dockerfile we started with in our video:

#See https://aka.ms/containerfastmode to understand how Visual Studio uses this Dockerfile to build your images for faster debugging.

FROM mcr.microsoft.com/dotnet/core/sdk:2.2 AS base

# FROM mcr.microsoft.com/dotnet/core/aspnet:2.2-alpine AS base

WORKDIR /app

EXPOSE 80

EXPOSE 443

FROM mcr.microsoft.com/dotnet/core/sdk:2.2 AS build

WORKDIR /src

COPY ["MercuryHealthCore.csproj", "."]

RUN dotnet restore "./MercuryHealthCore.csproj"

COPY . .

WORKDIR "/src/."

RUN dotnet build "MercuryHealthCore.csproj" -c Release -o /app/build

FROM build AS publish

RUN dotnet publish "MercuryHealthCore.csproj" -c Release -o /app/publish

FROM base AS final

WORKDIR /app

COPY --from=publish /app/publish .

ENTRYPOINT ["dotnet", "MercuryHealthCore.dll"]

Similar to our last Dockerfile within Windows, this, too, is a multistage Dockerfile. In fact, we have a total of 4 stages – one for a base image, one to handle our build tasks (dotnet restore and dotnet build), one to publish our project with a release configuration, and then a final image to handle our runtime of the built application.

Right off the bat, since every stage, including the base used for our final image, is built on the SDK image, our final image will come in at 1.77GB (this was the “large” image we talked about in our show).

Now, this is a tremendous improvement over the 8.13GB we initially started with from Visual Studio 2019. However, we can do better in terms of size and performance.

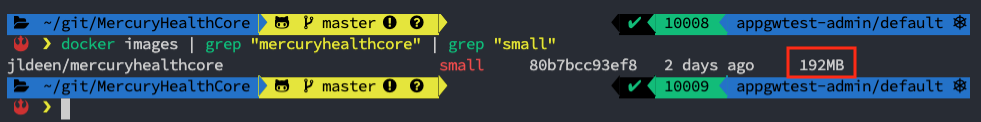

Just as I walked Damian through in our video, we can be very intentional about the processes needed for our application to run. There isn’t anything specific a Debian image offers us, which means we can reduce our footprint by first switching to an Alpine based image, and then separating our build and runtime processes (i.e., SDK for build, and runtime for final). When we made these small changes to our Dockerfile, or our application “instructions”, we were able to reduce our image size to 192MB!
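To make those two changes concrete, here is a minimal sketch that writes a "small" variant of the Dockerfile above to a file named Dockerfile.small (a hypothetical file name). It assumes the only change needed is swapping the base stage to the Alpine runtime image that is commented out in the original; the exact Dockerfile used in the video may differ, and I have not confirmed that this variant reproduces the 192MB figure.

```shell
# Write a hypothetical "small" variant of the Dockerfile to Dockerfile.small.
cat > Dockerfile.small <<'EOF'
# Runtime-only Alpine image for the base (the line commented out in the original).
FROM mcr.microsoft.com/dotnet/core/aspnet:2.2-alpine AS base
WORKDIR /app
EXPOSE 80
EXPOSE 443

# The SDK image is now used only to restore, build, and publish.
FROM mcr.microsoft.com/dotnet/core/sdk:2.2 AS build
WORKDIR /src
COPY ["MercuryHealthCore.csproj", "."]
RUN dotnet restore "./MercuryHealthCore.csproj"
COPY . .
RUN dotnet build "MercuryHealthCore.csproj" -c Release -o /app/build

FROM build AS publish
RUN dotnet publish "MercuryHealthCore.csproj" -c Release -o /app/publish

# Final image: Alpine runtime plus the published output, with no SDK layers.
FROM base AS final
WORKDIR /app
COPY --from=publish /app/publish .
ENTRYPOINT ["dotnet", "MercuryHealthCore.dll"]
EOF
```

From the project folder, something like `docker build -f Dockerfile.small -t mercuryhealthcore:small .` would then build it; the tag is an example name.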

That makes our image roughly 42 times smaller, a reduction of almost 98% in total image size from where we first started with the Windows and Visual Studio 2019 Dockerfile, and an even larger footprint decrease if we consider we previously needed an entire virtual machine to run this app!
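As a rough check on the sizes quoted in this post, the arithmetic can be sketched in a few lines of shell. The helper names `percent_smaller` and `times_smaller` are made up for this example, and 1GB is treated as 1000MB for simplicity, so the results are approximate.

```shell
# Percentage reduction from an original size to a new size (both in MB).
percent_smaller() {
  awk -v old="$1" -v new="$2" 'BEGIN { printf "%.1f", (old - new) / old * 100 }'
}

# How many times smaller the new size is than the original (both in MB).
times_smaller() {
  awk -v old="$1" -v new="$2" 'BEGIN { printf "%.1f", old / new }'
}

# Sizes quoted in this post, starting from the 8.13GB Visual Studio image.
echo "Windows multistage (4.9GB):  $(percent_smaller 8130 4900)% smaller"
echo ".NET Core on SDK (1.77GB):   $(percent_smaller 8130 1770)% smaller"
echo ".NET Core on Alpine (192MB): $(percent_smaller 8130 192)% smaller, $(times_smaller 8130 192)x"
```

Note that a percentage decrease can never exceed 100%, which is why the "times smaller" ratio is the more natural way to express very large reductions like this one.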

The footprint reduction is great, but what about passing our connection string variables? I’m so glad you asked! Luckily, overriding appsettings and connection strings is very easy in .NET Core (and now in .NET 5.0) since we have an appsettings.json file, whose values can be overridden by an environment variable simply by adding a -e "ConnectionStrings:DefaultConnection"="connection-string-here" flag to our docker run command (docker run -it --name mercuryhealthcore-local -p 8080:80 -e "ConnectionStrings:DefaultConnection"=$CONNSTR jldeen/mercuryhealthcore:small).
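As a variation on the command above, the override can also be kept in an env file rather than typed on the command line. This sketch is an assumption on my part rather than what we did in the video: the file name mercuryhealth.env and the connection string value are hypothetical placeholders. It uses the double underscore form of the key, which ASP.NET Core maps to the ":" separator and which is the form that works on every platform.

```shell
# Hypothetical placeholder value; replace it with your real connection string.
CONNSTR='Server=tcp:example.database.windows.net,1433;Database=MercuryHealth;'

# ASP.NET Core treats "__" in an environment variable name as the ":" separator,
# so this key maps to ConnectionStrings:DefaultConnection in appsettings.json.
printf 'ConnectionStrings__DefaultConnection=%s\n' "$CONNSTR" > mercuryhealth.env

# Show what will be handed to the container.
cat mercuryhealth.env
```

The file can then be passed with the `--env-file` flag, for example `docker run -it --name mercuryhealthcore-local -p 8080:80 --env-file mercuryhealth.env jldeen/mercuryhealthcore:small`. Since the file holds a secret, it should stay out of source control.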

Alternatively, if you run this container in Azure App Service for Linux Containers (recommended for production and long running applications) or even in Azure Container Instances (ACI) (if you’re playing with containers for the first time and working with dev/test scenarios), you can specify the connection string in the “Environment Variables” section of the respective Azure Resource.

Summary

All in all, there are a lot of ways you can continue your journey from on-prem to the cloud by taking advantage of container orchestration; some of those ways are easy and some require a little elbow grease. The reality of this situation is at some point in your journey, you will need to make code changes – whether that’s to make a few tweaks to your existing ASP.NET app so you can dynamically change environment variables as needed (and do so securely in the future with something like Azure Key Vault), or more than “a few tweaks” and fully invest in refactoring to a cross platform architecture.

Personally, I break it down like this – I’m going to have to refactor in some way no matter what – do I want to do it once and gain all the benefits I’m offered by setting myself up for scaling, or do I want to do it a little, only to do it a little again, and again, and again?

Whatever YOUR answer is, and especially if you’re new to this journey, we have resources to help. I strongly recommend checking out Microsoft Learn’s FREE modules and learning path resources to go hands on with everything you read here, and everything Damian and I covered in our chat today.
