Azure Pipelines YAML CD features now generally available

We’re excited to announce the general availability of the Azure Pipelines YAML CD features. We now offer a unified YAML experience to configure each of your pipelines to do CI, CD, or CI and CD together.

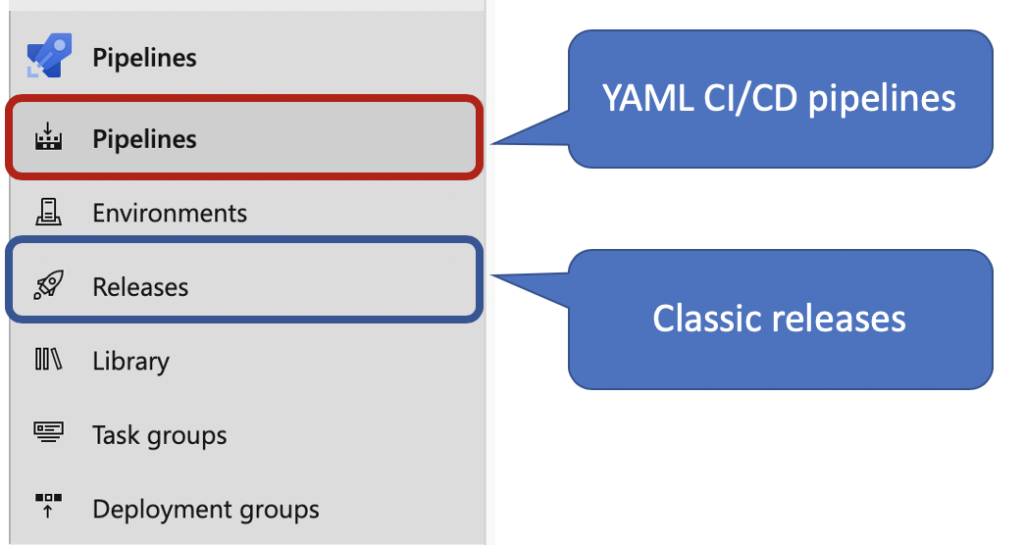

Releases vs. YAML pipelines

Azure Pipelines supports continuous integration (CI) and continuous delivery (CD) to test, build and ship your code to any target – repeatedly and consistently. You accomplish this by defining a pipeline. CD pipelines can be authored using the YAML syntax or through the visual user interface (Releases).

You can create and configure release pipelines in the web portal with the visual user interface editor (Releases). A release pipeline can consume and deploy the artifacts produced by your CI pipelines to your deployment targets. Alternatively, you can define your CI/CD workflows in a YAML file, azure-pipelines.yml. The pipeline file is versioned with your code and follows the same branching structure. As a result, changes to it are validated through code reviews in pull requests and branch build policies. Most importantly, because pipeline changes live in version control with the rest of the codebase, you can easily trace an issue back to the change that introduced it.

Highlights

The YAML CD release introduces several new features that are available to all organizations using multi-stage YAML pipelines. Some of the highlights include the following.

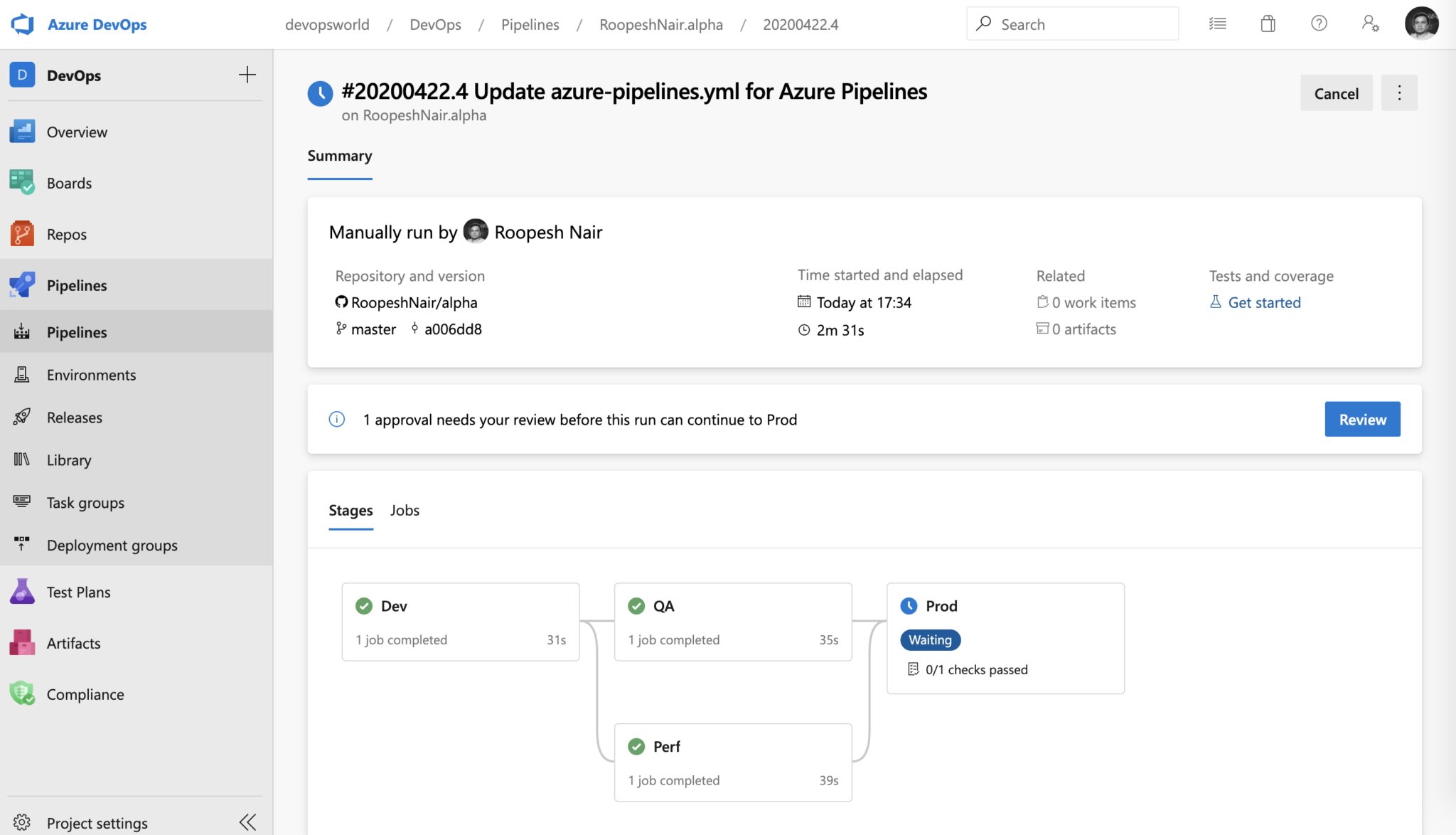

Multi-stage YAML pipelines (for CI and CD)

Stages are the major divisions in a pipeline: “Build app”, “Run tests”, and “Deploy to Prod” are good examples of stages. They are logical boundaries in your pipeline at which you can pause the pipeline and perform various checks. Stages can be arranged into a dependency graph, for example, “run the Dev stage before QA”.
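As a minimal sketch of stages and their dependencies, the pipeline below defines Build, Dev, and QA stages and uses `dependsOn` to express “run the Dev stage before QA”. The stage and job names, the `ubuntu-latest` hosted image, and the `echo` steps are placeholders, not something taken from this announcement.

```yaml
# azure-pipelines.yml: minimal multi-stage sketch (names are placeholders)
trigger:
- main

pool:
  vmImage: 'ubuntu-latest'

stages:
- stage: Build
  jobs:
  - job: BuildApp
    steps:
    - script: echo "Building the app"

- stage: Dev
  dependsOn: Build      # Dev starts only after Build succeeds
  jobs:
  - job: DeployDev
    steps:
    - script: echo "Deploying to Dev"

- stage: QA
  dependsOn: Dev        # "run the Dev stage before QA"
  jobs:
  - job: DeployQA
    steps:
    - script: echo "Deploying to QA"
```

Without an explicit `dependsOn`, stages run in the order they are listed, each waiting on the one before it; spelling out `dependsOn` makes the graph visible and is what you change to fan stages out or back in.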

Resources in YAML pipelines

A resource defines the type of artifact used by a pipeline. Resources provide full traceability of the artifacts consumed in your pipeline, including the artifact, its version, associated commits, and work items. Most importantly, you can fully automate your DevOps workflow by subscribing to trigger events on your resources. The supported resource types are pipelines, builds, repositories, containers, and packages.

```yaml
resources:
  pipelines: [ pipeline ]
  builds: [ build ]
  repositories: [ repository ]
  containers: [ container ]
  packages: [ package ]
```
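The outline above only names the resource types. As a rough sketch of how some of them fit together, the pipeline below declares a pipeline resource whose completion on `main` triggers a run, an Azure Repos Git repository resource, and a container resource, then consumes all three in one job. The aliases (`appci`, `templates`, `buildenv`), the source pipeline name `MyApp-CI`, and the repository `MyProject/pipeline-templates` are hypothetical placeholders.

```yaml
trigger: none                   # no CI trigger; runs are started by the pipeline resource

resources:
  pipelines:
  - pipeline: appci             # alias used to refer to this resource below
    source: 'MyApp-CI'          # hypothetical CI pipeline that publishes artifacts
    trigger:
      branches:
        include:
        - main                  # run when MyApp-CI completes on main
  repositories:
  - repository: templates       # alias
    type: git                   # Azure Repos Git repository
    name: MyProject/pipeline-templates   # hypothetical <project>/<repo>
  containers:
  - container: buildenv         # alias
    image: ubuntu:18.04

jobs:
- job: Consume
  pool:
    vmImage: 'ubuntu-latest'
  container: buildenv           # run the job's steps inside the container resource
  steps:
  - checkout: templates         # check out the repository resource
  - download: appci             # download artifacts published by the pipeline resource
  - script: echo "Triggered by run $(resources.pipeline.appci.runID)"
```

Because the run is triggered through the `appci` resource, its summary can link back to the upstream run, commits, and work items, which is the traceability described above.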

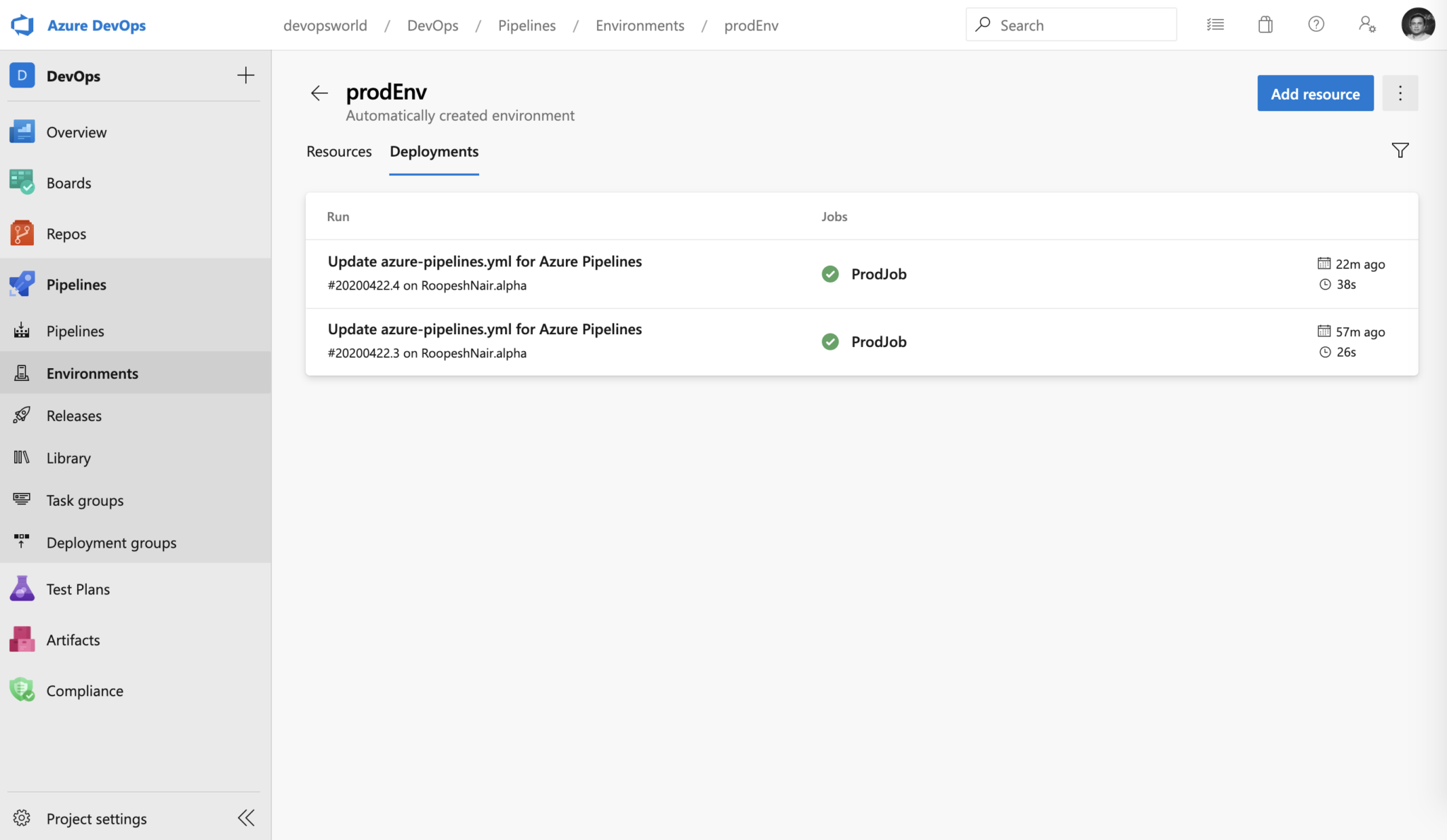

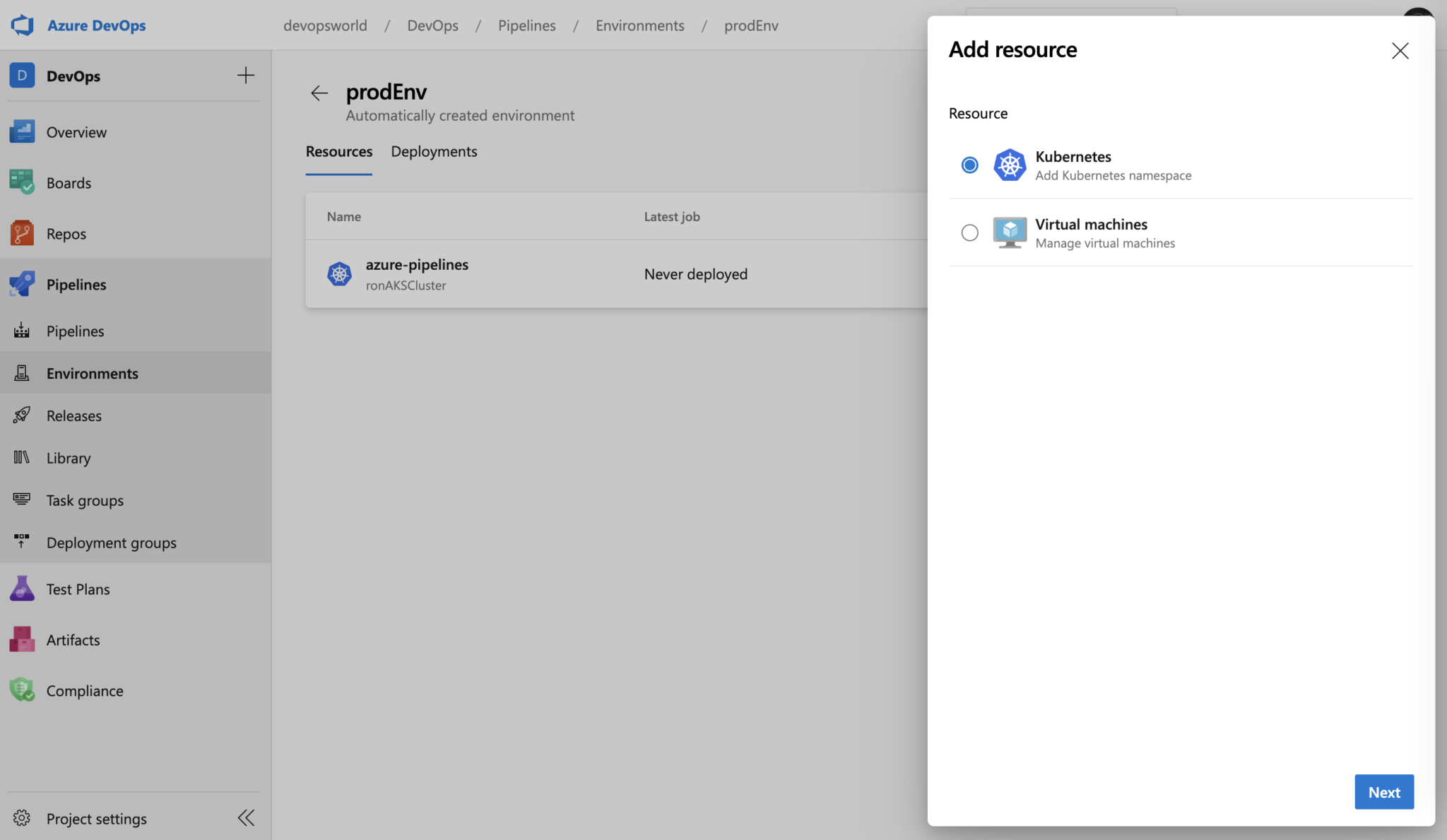

Environments and deployment strategies

An environment is a collection of resources that can be targeted for deployment from a pipeline. Environments can include Kubernetes clusters, Azure web apps, virtual machines, databases, and more. Typical environment names are Dev, Test, QA, Staging, and Production.
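A pipeline targets an environment through a deployment job. The sketch below assumes an environment named `Staging` (a placeholder) and uses the simplest strategy, `runOnce`, which runs the deploy steps a single time; the `echo` step stands in for real deployment tasks.

```yaml
stages:
- stage: DeployStaging
  jobs:
  - deployment: DeployWeb       # deployment job: its runs are recorded against the environment
    pool:
      vmImage: 'ubuntu-latest'
    environment: 'Staging'      # hypothetical environment name
    strategy:
      runOnce:                  # run the deploy steps once, with no staged rollout
        deploy:
          steps:
          - script: echo "Deploying to Staging"
```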

Kubernetes and virtual machine resources in environments

The Kubernetes resource view shows the status of objects within the mapped Kubernetes namespace. It overlays pipeline traceability on top, so you can trace back from a Kubernetes object to the pipeline and then to the commit. The virtual machine resource view lets you add VMs hosted on any cloud or on-premises, so you can roll out application updates to them and track those deployments.
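To illustrate a deployment strategy against VM resources, here is a sketch of a deployment job that targets only the VMs tagged `web` in a hypothetical `Production` environment and updates them with the `rolling` strategy, at most two machines at a time. It assumes the VMs have already been registered as resources in that environment, so the steps run on the agents installed on those machines rather than on a hosted pool.

```yaml
jobs:
- deployment: VMDeploy
  displayName: 'Deploy to web VMs'
  environment:
    name: 'Production'          # hypothetical environment with registered VM resources
    resourceType: VirtualMachine
    tags: web                   # only target VMs tagged "web"
  strategy:
    rolling:
      maxParallel: 2            # update at most two VMs at a time
      deploy:
        steps:
        - script: echo "Deploying to $(Agent.MachineName)"
```

For a Kubernetes resource, the job can instead reference the namespace directly as `environment: '<environment>.<resource>'` (for example `'Dev.bookings'`, both names hypothetical), which scopes the deployment history to that namespace resource.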

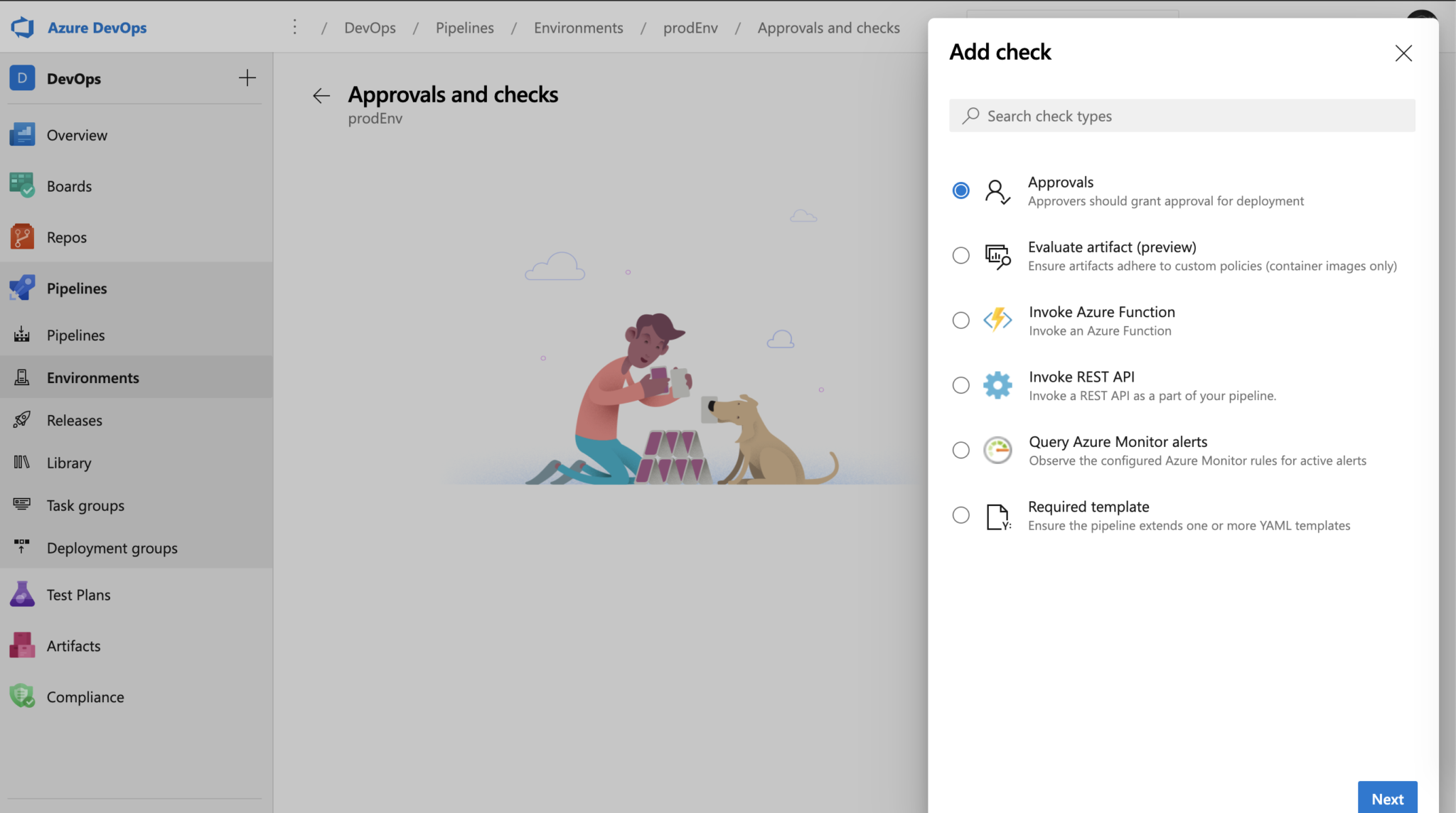

Approvals and checks on resources

Pipelines rely on resources such as environments, service connections, agent pools, and library items. Checks let the resource owner control whether a stage in a pipeline can consume the resource. As an owner, you can define the checks that must be satisfied before a stage that consumes the resource can start. For example, a manual approval check on an environment ensures that deployment happens only after the reviewers have signed off.

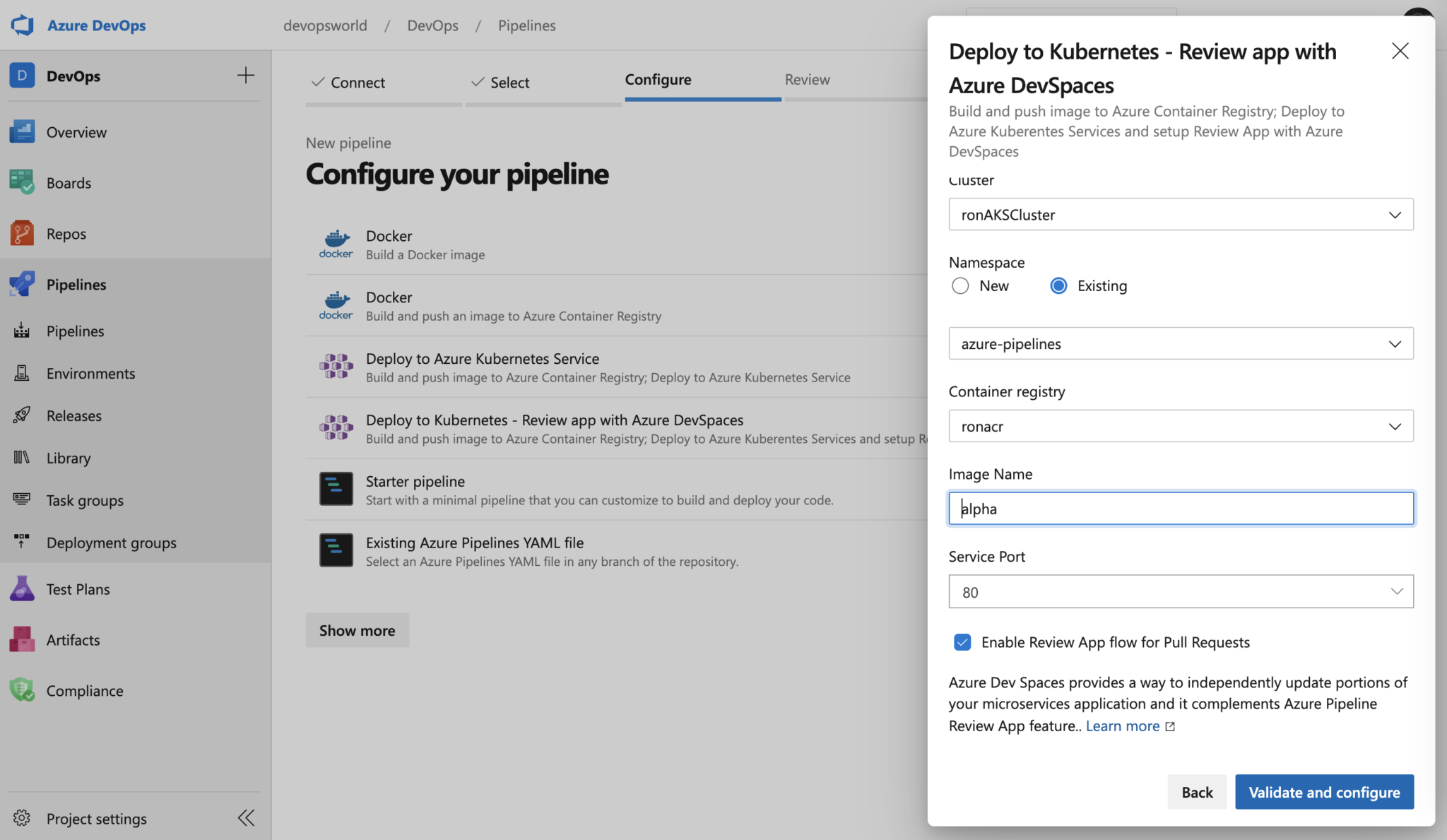

Review apps for collaboration

Review apps work by deploying every pull request from your Git repository to a dynamically created resource in an environment. The team can see the changes in the PR, as well as how they work with other dependent services, before they’re merged into the main branch. As a result, you can shift code quality left and improve productivity.
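A rough sketch of a review-app stage for Kubernetes follows. It runs only for pull request builds, creates a per-PR namespace, and registers it as a dynamic resource in an environment. The environment `Dev`, its existing namespace resource `main`, and the variable `k8sNamespaceForPR` are hypothetical; the `reviewApp` step keyword and the `Kubernetes@1` inputs are modeled on the review-app template Azure Pipelines generates for Kubernetes and should be checked against the current YAML schema before use.

```yaml
variables:
  # Hypothetical per-PR namespace name built from the pull request ID
  k8sNamespaceForPR: 'review-app-$(System.PullRequest.PullRequestId)'

stages:
- stage: DeployPullRequest
  # Run this stage only for pull request builds
  condition: and(succeeded(), startsWith(variables['Build.SourceBranch'], 'refs/pull/'))
  jobs:
  - deployment: DeployPullRequest
    pool:
      vmImage: 'ubuntu-latest'
    environment: 'Dev.$(k8sNamespaceForPR)'   # dynamic resource in the hypothetical "Dev" environment
    strategy:
      runOnce:
        deploy:
          steps:
          # Base the dynamic resource on the existing "main" namespace resource
          - reviewApp: main
          # Create the namespace that backs the dynamic resource
          - task: Kubernetes@1
            displayName: 'Create a namespace for the pull request'
            inputs:
              command: apply
              useConfigurationFile: true
              inline: '{ "kind": "Namespace", "apiVersion": "v1", "metadata": { "name": "$(k8sNamespaceForPR)" } }'
```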

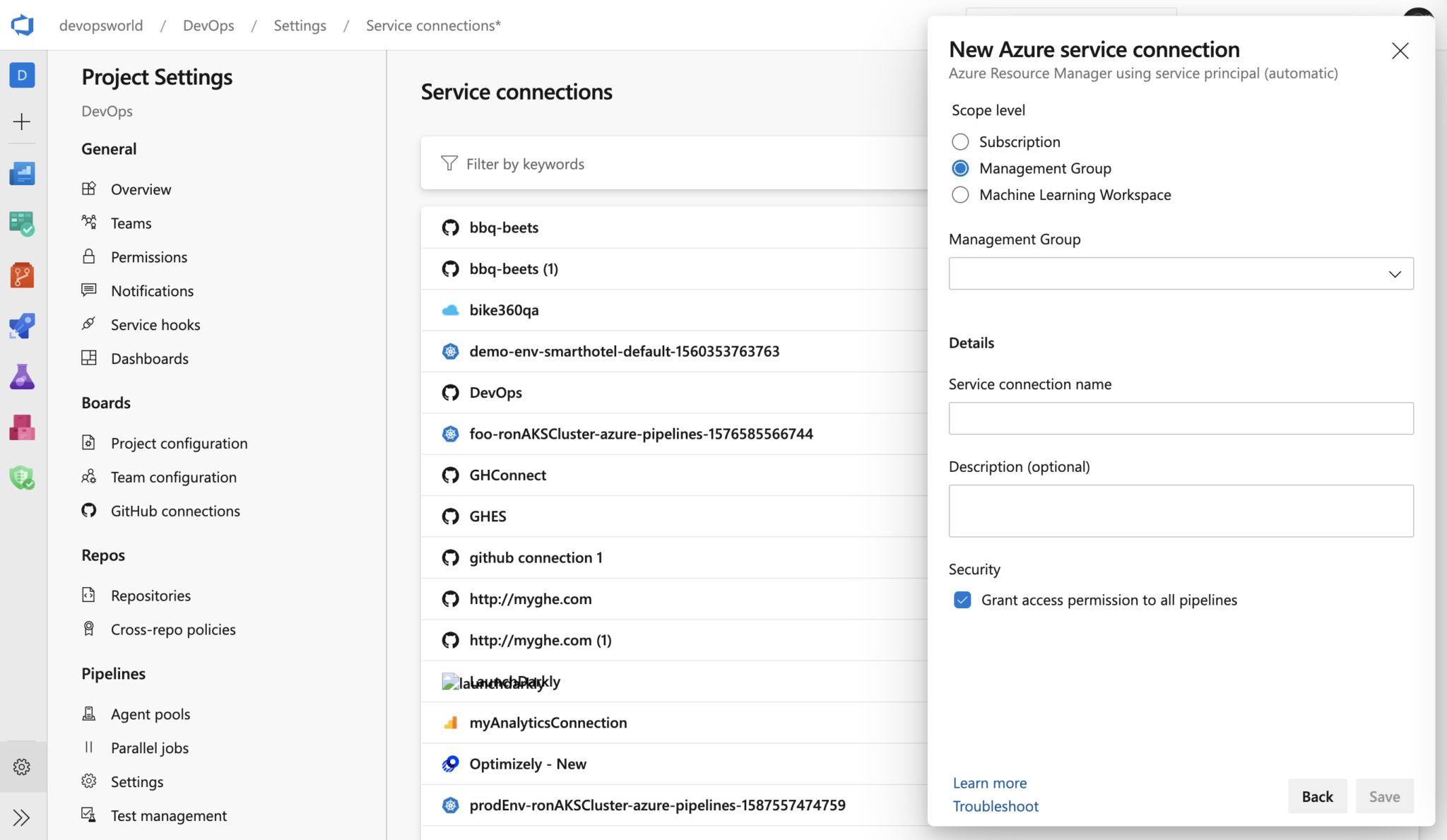

Refreshed UX for service connections

We have improved the service connection user experience and enabled approvals and checks on service connections. Approvals follow a segregation of roles between resource owners and developers: the owner of a service connection defines its checks, and any pipeline run that uses the connection pauses until those checks are satisfied.

Thank you

Lastly, we want to thank all our users who have adopted Azure Pipelines. If you’re new to Azure Pipelines, get started for free on our website. Learn what’s new and what you can do with Azure Pipelines. As always, we’d love to hear your feedback and comments. Feel free to comment on this post or tweet at @AzureDevOps with your thoughts.

Do the environments for VMs also create a deployment group?

Please provide the ability to copy/add an existing deployment pool as an environment.

I have dev, test and prod deployment pools, from which a deployment group for my project was created. Now, I want to create a dev environment but I do not want to have to register a second agent. I want to instead see a dropdown that says "select an existing deployment pool to use as your environment."

Otherwise, I have to create the environment manually, which creates a pool under the hood and connects the pool to my...

Presently there are only two options in Environments, i.e. Kubernetes and virtual machines. Can we add Azure App Service to Environments? Or is it on the roadmap?

What music to my ears! However, I’m not seeing the option for “multi-stage pipelines” under preview features. Do you have a suggestion on how to go about enabling the feature?

Thanks for sharing this information, it is very helpful to me.


Nice to know that YAML scripting for CD is now GA for Azure DevOps Services, but when are we going to get this functionality in Azure DevOps Server 2019? Any plans for the coming quarterly release?

Under the heading "Resources in YAML pipelines" a new resource has been listed: packages. However, no matter how much I bing/google, I can't find any reference or sample YAML showing what parameters it takes. The best I can find is: here, but while it states packages can be used to trigger off Azure Artifacts (good), it's also devoid of any sample structure.

I tried guessing/trial-and-error, but came up short. It seems the pipeline accepts the resource but I can't figure out what params it wants. Any hints would be wonderful, been waiting for this feature for a...

Hey Justin, I think I can help: try using the YAML schema to view the properties/params.

https://dev.azure.com/{OrgName}/_apis/distributedtask/yamlschema?api-version=5.1

I found these searching for packageResource:

"firstProperty": [ "package" ], "required": [ "package", "type", "connection", "name" ]

This is cool. However, we cannot use it because it does not exist in Azure DevOps Server 2019, i.e. the on-prem version. Can we move to the Services version? That is a complicated business, given the amount of code and processes we already have.

It is ironic that the most loyal customers of Azure DevOps who started using it when it was still TFS without a trace of presence in the cloud are now left behind with an obsolete technology.

The feeling is that we are screwed for being loyal.

Thank you very much for nothing.

Mark – appreciate your feedback. We bundle only GA features with Azure DevOps Server. I’m sure you understand the reasoning behind it.

Since the YAML pipeline features were in preview, they didn’t make the cut. But we will include them in the next available ship vehicle.

It doesn’t seem that the approval checks allow me to schedule the release for a later time, so the deployment happens immediately. Does this mean that I have to get up at midnight whenever I want to do all my PROD deployments? I am wondering if this is being worked on, and if so I’d appreciate a link to more information about when it might be released. Can’t really go whole hog on this just yet for many of our critical systems until this feature is available.

This is a great update and I like the direction this is heading.

Brilliant stuff! I actually thought this was already on “General Availability” as a month ago we moved away from Releases to full Yaml CD with shared templates that allow us to easily define a single deployment plan that gets reproduced on all environments!

I just wish the expression support on the yaml templates was better (specifically, parameter type checking, array length, better null support and handling)

Nice work @roopesh and team, it was great working with you all during the private preview 🙂 Matt D

Thank you @Matt for all the feedback and help during preview.