Introduction

In 2020, Microsoft CSE was involved in the process of a Smart Factory Transformation leveraging Microsoft technology, building an Industrial Internet of Things solution for factory automation. The customer was a global technology company that supplies systems for passenger cars, commercial vehicles, and industrial technology. Previously, their factories operated with a paper-based shop floor management process where production related data was documented manually with no analysis functionality. The purpose of the transformation was to improve the productivity of their plants and workers and improve the quality of the goods they produce.

Challenge and Objectives

The key goals of the project included:

- Monitor key performance indicators of machines running on the factory floor and configure which machines should be monitored to be able to react to any issues quickly before they affect the customer, while also enabling other monitoring benefits for shop floor management.

- Trace parts and batches end-to-end in their lifecycle with the ability to quantify waste in their value streams and identify issues across key steps.

- Leverage maintenance intelligence to reduce the downtime of machinery.

We started to transform their flagship factory as a lighthouse project to a digital platform to pave the way for the transformation of over 100 of their factories.

One dev crew from Microsoft worked on the connectivity part of the project for 15 months (organized into four project iterations). This part included collecting the OPC-UA based telemetry data on the edge within the plant, forwarding it to Azure for enrichment and storage. We also provided a rich set of APIs used by a custom user interface to view the data and manipulate the digital representation of the machines and signals in the cloud.

This article has the goal of outlining the project from the connectivity point of view.

Project Goals

- Empower a wider community to drive the digital transformation by connecting devices as an enabler.

- We have a proven set of steps for onboarding OPC-UA capable devices.

- Onboarding process is observable.

- Configuration process is automated for KepserverEX with drivers for Siemens S7, Modbus and Euromap devices.

- GUI is available for configuration.

- We can publish and enrich machine-generated data as messages that can be selectively subscribed/consumed by Factory Intelligence applications.

- Shortening the commissioning of machinery.

- Increase security and stability of configuration changes by reducing operational risk caused by configuration changes.

- Configuration history is available.

- Gap analysis using a threat Model process including a mitigation plan.

- RBAC model in place for controlling access to the solution.

- Increase continuous data availability and data quality.

- Successful device onboarding can be validated based on immediate feedback.

- Scale the connectivity solution to enable the onboarding of additional factory plants.

- Connectivity infrastructure supports stamp-based deployment architecture.

- Connectivity APIs are versioned.

- Exposed entities are uniquely identifiable.

Architecture

To reduce the complexity of the Industrial Internet of Things solution architecture, we first broke it down into two parts. One part runs on the factory floor and is used to collect machine data and configure on-site resources. The second part is deployed to the cloud and makes up the data processing, enrichment, monitoring and visualization part of the system.

The Industrial Internet of Things Solution on the Edge

Diagram of the Industrial Internet of Things solution architecture on the Edge / in the factory.

The IoT Edge device is the only portion of the solution that needs to reside on the factory floor. All other components run on Microsoft Azure. Its task is to collect signals (telemetry data) from OPC-UA and non-OPC-UA devices. Non-OPC-UA devices are managed by an OPC-UA gateway which collects telemetry data from them and outputs it as OPC-UA signals. Currently, KepServerEx is in used as the gateway.

The OPC Publisher edge module, which is part of the Industrial Internet of Things platform offered by Microsoft on GitHub, collects all data from the OPC-UA devices and gateways and sends them to IoT Hub. It is configured via a JSON file which gets pushed by the Pipeline Publisher as a cloud-to-device direct message through IoT Hub to a custom Edge module called the Configuration Controller. The task of the Configuration Controller is to monitor which configurations are supposed to be active, request them via the module Twin, receive them in compressed chunks – as a single cloud-to-device direct message can contain up to 256Kb of data – decompress, assemble, and write them to a shared filesystem in the JSON format expected by the OPC Publisher module. OPC Publisher detects changes to this file and updates its OPC UA node subscriptions accordingly.

The custom Gateway Configuration module is used by the Asset Registry to configure the gateway (KepServerEx) through its REST API, to onboard non-OPC-UA devices connected to the gateway. It can also connect to the gateway using opc.tcp and request sample metrics data from the newly configured devices to verify that they are working properly.

The OPC Twin edge module, also a part of the Industrial Internet of Things platform, is used to browse OPC UA devices and return their OPC UA nodes as signals they can emit to the Asset Registry. It is also controlled via cloud-to-device direct methods sent by the Asset Registry through IoT Hub.

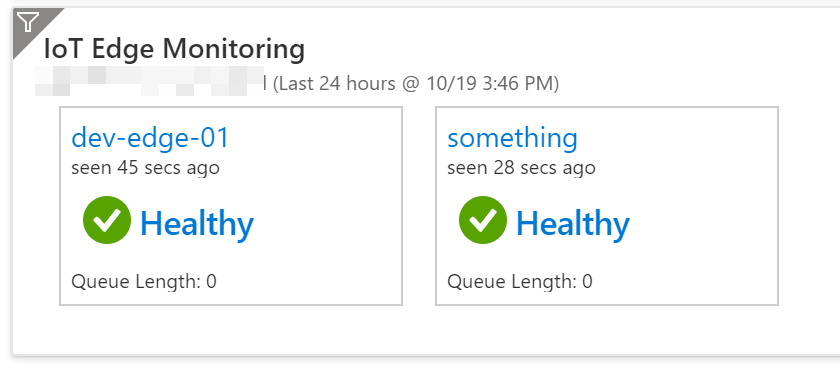

The Metrics Collector module, a new addition to the Industrial Internet of Things Platform, collects health metrics from all Edge modules that publish data on a Prometheus endpoint and forwards them to a Log Analytics workspace. From there they are displayed in workbooks in IoT Hub, which are provided by Microsoft, in a custom dashboard that monitors the entire solution. Custom alerts are then triggered if the Edge devices are deemed unhealthy based on available disk space or system memory, device to cloud message queue length and the last time the Metrics Collector module has successfully transmitted metrics data.

IoT Hub Edge device metrics workbook: Edge module details and health.

IoT Edge device health overview in the solution’s custom health dashboard.

The shared filesystem also hosts certificates and a chain of trust used by the OPC Twin and OPC Publisher modules to securely communicate with the OPC-UA gateway.

The Edge devices are automatically configured by IoT Hub with the proper Edge modules based on IoT Edge deployments in IoT Hub, as part of the release process.

The Industrial Internet of Things Solution in the Cloud

Diagram of the Industrial Internet of Things solution architecture in the cloud.

The services in the cloud serve two purposes: First, the Asset Registry and Pipeline Configuration act as digital representation of all the assets in the factory that the system interacts with. Much of its functionality is exposed as a REST API which makes it a control panel of the entire system that the UI uses. Second, the Machine Data Enrichment pipeline makes the signal data from the factory, ingested as telemetry to IoT Hub or read from files by the File Signal Processor, available to the other participants in an enriched, cleaned, and understandable form.

The Asset Registry is the source of truth for the entire system. It tracks all assets and their relationships, such as plants, devices, signals, gateways, and IoT Edge devices. Any change must go through the Asset Registry, and if necessary, it will synchronize this change to the external components. For example, if the user adds a gateway device to Asset Registry, it will go ahead and configure this device on KepServerEx through the Gateway Configuration Edge module on the factory floor.

Internally, it’s implemented as a shared Azure SQL database with several APIs:

- Asset Registry API: a simple CRUD API that gives access to most entities in a uniform fashion.

- Pipeline Configuration API and Pipeline Publisher API: APIs for signal pipeline management. We don’t just configure individual signals, we allow users to bundle them in “pipeline configurations”, version them and activate all the signals in a given bundle at once. We also keep a history of active pipeline configurations, so the users can roll back to a previous pipeline configuration in case of an error, for example.

- Frontend API – manually optimized, complex, read-only SQL queries to display data in the UI when CRUD operations aren’t enough.

The Industrial Internet of Things solution Asset Registry Data Model.

Machine Data Enrichment is a data pipeline that transforms a stream of “raw” telemetry signals coming from the factory into the form usable by the other internal teams / applications.

We found Stream Analytics the ideal Azure service for this goal. It can enrich a stream of events with the reference data obtained from the Asset Registry SQL database. It then can filter or replicate the stream to multiple destinations. All signals get forwarded automatically to the hot path, an Azure Event Hub. Signals requiring long retention times also get sent to the cold path, an Azure Data Lake. Signals that couldn’t be properly enriched are added to a file in Azure Storage for easy review. All of this happens with minimal configuration effort and with very little added latency.

Consumers of signal data can then choose which data source to use. For example, to assess signal quality, we save all signals from the Hot Path to Azure Data Explorer and built a Dashboard on top to visualize it.

In the middle of the engagement, we got a requirement to support a new, second source of signal data, namely CSV based. These signals are collected from devices and uploaded as files, and the Azure Data Explorer enrichment pipeline schema proved quite flexible to support this. We had two choices: add a second input to the existing Stream Analytics job or create a new Stream Analytics job to handle this new input stream. Ultimately, we chose the second option because the new event stream had a different event schema and would also arrive in large batches every few hours.

Another feature that the Machine Data Enrichment pipeline enables is tagging signals based on the use case they enable, identified by specialist that understands both the asset and the scenario. Tags are an array of predefined strings that include information about the signal in context such as: “Fault of type X” or “Relevant for end-to-end traceability”. Signals can then later be queried based on their tags.

The solution also consists of a web-based User Interface which allows the customer to interact with and configure the Asset Registry and Pipeline Configuration.

Architectural Design Choices

Why not use IoT Central?

Even though IoT Central offers a quick path for creating powerful IoT solutions in Azure with a PaaS offering, we have decided not to use it. The solution did not meet some of our key customer requirements: integration with AAD, device level authorization, extensibility, and geo-presence. Moreover, much of the value added by IoT Central such as visualization and reporting were already covered by a framework that the customer has in place.

Why OPC-UA and not MQTT?

Some gateways support forwarding events into MQTT. Leveraging MQTT would facilitate the work in publishing data to the cloud as we would be able to connect it directly to Edge Hub or Azure IoT Hub. However, the vision of the client, backed by the Azure Industrial Internet of Things architecture, is that by standardizing on OPC-UA, we would be able to eliminate the requirement of using a gateway when the shop floor machinery is updated. Moreover, OPC-UA offers features such as discoverability which enable scenarios that MQTT alone wouldn’t.

Why use KepServerEx and not another gateway?

We decided to rely on partners to translate proprietary protocols instead of relying on adding custom components from each vendor. When choosing a partner gateway, we decided with the customer to start with KepServerEx as it supports most protocols the customer has in all its worldwide factories. The client has experience in using the product, and it exposes the configuration through a REST API which was used in the project to remotely configure the gateway.

Service Bus vs Event Grid

The first use case for Service Bus is to consume Twin changes from Azure IoT Hub to determine and coordinate data pipeline configurations being pushed to the edge.

Service Bus was our choice as it fulfils our requirements which include competing consumers, pub/sub, and ordering of messages as well as alignment with the overall system architecture. We did not want to add an additional Azure service to solve a single problem.

SQL Server vs Cosmos Db vs Azure Digital Twin

Our requirements from a database point of view are to store the following information:

- Machine semantic model and mapping to OPC-UA resources (servers, endpoints, and nodes).

- Data pipeline configuration (signals, transformations, and enrichments)

We believe that Azure Digital Twins would be a good fit to solve the family tree problem (enterprises, divisions, factories, production lines, work center, etc.). The family tree data, however, is not owned by this project, only machine data is. Therefore, the project wouldn’t be using many of Azure Digital Twins’ features such as feeding telemetry into twins, getting twin statuses or alerts. For this reason, we have decided not to use it.

The requirements we have for a database match what a relational database offers:

- Relationship and integrity (machines -> devices -> signals -> signal type)

- Temporal data (Example: this device was assigned to this machine until 2 days ago)

- Integration with Stream Analytics: One of the options for data enrichment is to use Stream Analytics to augment the telemetry data with machine metadata.

We decided to use SQL Server as it supports the requirements and is a product that the customer already had experience with.

Data Destination Storage Options – Azure Data Explorer vs Time Series Insights, SQL Server or Cosmos DB

A single source for enriched machine generated data as queryable database for the solution was also required. The technologies considered for this were: Azure Data Explorer, Time Series Insights, SQL Server and Cosmos DB.

We have chosen Azure Data Explorer because we require an append-only, low latency analytical database. ADX also offers an out of the box solution for data retention, clean-up and cost optimization with a flexible caching policy. And, as this technology was already being used in the project, we were able to reuse the existing cluster and no additional expertise or monitoring was required.

Azure Data Explorer has good support for PowerBI for quick visualization of the data, but in the end, we have used Azure Data Explorer Dashboards as it was the easiest and fastest solution for our visualization requirements.

Testability

The architecture allows us to run end-to-end tests entirely automated and in the cloud. The Edge device can run in a virtual machine with an added Edge module, the opc-plc server. This is a stand-alone, easy to configure OPC-UA server that can act as the gateways or OPC-UA devices without the need of any additional infrastructure. The Edge modules can connect to it in the same way they do in the factory, just using a different address.

As the cloud services all expose REST APIs, the end-to-end tests can use them to provision a blank database with all the data needed, create pipeline configurations, deploy them to the Edge and monitor the connected IoT Hub’s Event Hub compatible endpoint to see that the expected data is being received. With only minor changes, the same end-to-end tests can also trigger the File Based Signal processor to pick up a pre-defined file from a blob store and make sure it processes the file-based signals correctly.

Observability

We use several features from the Azure Monitor suite to build robust observability of the whole fleet:

- Standard Metrics that all Azure services export. They are especially useful for monitoring the Machine Data Enrichment pipeline that consists exclusively of Azure services.

- Custom Metrics that we export from our custom C# applications to Azure Monitor. We use them to measure among other things business logic error rates and throughput of the File Based Signal processor.

- Metrics Collector module that exports module metrics from IoT Edge running on prem to Azure Monitor in the cloud.

- We set up several Custom Dashboards where we display selected, important metrics. There is one global dashboard that describes the overall service health, and multiple specific dashboards showing detailed information on topics like the signal enrichment pipeline health or signal quality.

- We have Alerts for the key metrics and get notified when any part of the service is unhealthy or is likely to become unhealthy soon. We have also tried to limit the number of alerts to avoid alert fatigue.

- Distributed Tracing gives us visibility into how our services interact with each other, which is especially useful when troubleshooting requests that failed or are too slow. The Application Insights .NET SDK collects distributed traces automatically for all synchronous calls between our custom code and Azure services. We also extended this to work with asynchronous calls by explicitly passing the trace ID along with any asynchronous request and continue the trace with this ID on the recipient’s side.

- Log Analytics was used extensively to create log queries with data in Azure Monitor Logs. These queries surface as visuals in the custom dashboard or as the triggers for alerts.

We found it beneficial to add the Application Insights SDK to all our custom C# apps early in their development. It automatically collects distributed traces and exceptions from the application, which helps with both local and remote troubleshooting.

Tracing the browsing of OPC-UA devices between the Asset Registry and the Edge in Application Insights.

Conclusion

By the end of the engagement, the customer learned a lot about building industrial Internet of Things solutions using Microsoft Azure services. We from the Microsoft team learned about the challenges of running software on the Edge in a factory and interfacing with various OPC-UA servers and gateways. The project goals were all met.

Some lessons learned from us include that we benefited from using a domain-driven design to develop the models and build a ubiquitous language and a mutual understanding of the domain together with the customer. Also, documenting the principles with code samples has helped to educate and align the developers.

We chose to approach our problems in a pragmatic way and focus on simplicity while resisting the urge to over-engineer the solution and practice Conference Driven Development.

Consistently starting the development of new components with architectural design reviews, writing the Markdown files together using Visual Studio Live share and gathering know-how by doing short spikes to test the viability of ideas proved valuable. This ensured we included everyone from the team in the envisioning phase and allowed us to detect potential gaps or issues in our approaches that could lead to problems or dead ends later.

Finally, spending some initial time discussing and deciding on the project structure, naming conventions and adding linters and code styling rules early to the project helped everyone to work together very quickly. This was especially important as we were pair-programming between developers from different companies. This was further helped by constantly keeping Teams chats open that could be joined at any time by peers working on the same part of the solution.

Acknowledgements

Contributors to the solution from the Microsoft team listed in alphabetical order by last name: Francisco Beltrao, Mikhail Chatillon, Sascha Corti, Laura Damian, Konstantin Gukov, Greta Jocyte, Anaig Marechal, Martin Weber. We had great support from Bill Berry, Ian Davis, and Larry Lieberman from the CSE IoT SA Team who built a generator for a KEPServerEX configuration API library and received great feedback and support from the Microsoft IoT Team in Germany, namely Vitaly Slepakov and Hans Gschoßmann.

Resources

Azure Industrial Intrnet o Things Platform Overview: https://docs.microsoft.com/azure/industrial-iot/overview-what-is-industrial-iot

Azure Industrial Internet of Things Platform Repository: https://github.com/Azure/Industrial-IoT

OPC Publisher Edge Module: https://azure.github.io/Industrial-IoT/modules/publisher.html

OPC Twin Edge Module: https://azure.github.io/Industrial-IoT/modules/twin.html

KepServerEx: https://www.kepware.com/en-us/products/kepserverex

knowing the solution from the inside: very well described!

Thank you guys for the policies, design reviews, linters and DDD you setup.

regarding digital twin: I really like the way you argumented on usage sql itstead.

great work!