Testing Reverse Engineering Tools/Framework

Hello, I am Manish Vasani, a Software Design Engineer in Test on the Phoenix Analysis and Tools team. In this post, I’ll be giving a brief overview of the Phoenix Pereader-based Analysis tools with an emphasis on the difficulties traditionally involved in testing these types of tools and how Phoenix makes things much easier. For those not familiar with Pereader, it’s a Phoenix component that reads Portable Executable (PE) files and converts them to Intermediate Representation (IR). You can find more information on Pereader in the Phoenix SDK Documentation/Phoenix Technical Overview Article.

Overview of Pereader based Analysis Tools

It was during the third year of my bachelor’s degree that I first heard the term “Decompiler”. A couple of my close friends had implemented an i386 to C transformation tool for their main project. Not surprisingly, this was the most talked about project in the department, and rightly so. Reverse engineering is just so amazingly cool! You need the same expertise as a traditional compiler developer to understand the different compiler optimizations and codegen outputs in order to reverse engineer them. Additionally, a huge challenge lies in ensuring robustness and correctness of your tool against a vast and dynamic input matrix.

Quite often the term Reverse Engineering gets associated with the notion of applying algorithms or heuristics to map certain patterns in lower level constructs to higher level constructs. One needs to be clear that there are two distinct steps involved in this process: (a) using the binary (and the pdb for native binaries) as a repository of information and converting it to IR, and (b) applying algorithms that identify patterns in the IR and convert them to higher level constructs. One of the most widely used features of the Phoenix framework is raising managed/native binaries to an intermediate code representation using Pereader (step a). Once the binary has been raised to Phoenix IR, a Pereader client can do various types of analysis on the IR, such as call dependency analysis (to build a call graph), security analysis (to identify security vulnerabilities in code), etc. Our test team’s job is to test the Pereader framework for these tools.

Testing Reverse Engineering Tools

Testing a reverse engineering tool has two main challenges:

1) The primary challenge, as I mentioned earlier, is the test matrix. The difficulty lies in the fact that different compilers (or even the same compiler used with different switches) produce different codegen output for the same piece of code. Your implementation will work fine for a subset of constructs or code patterns built with a selected set of compilers. But as soon as you expose it to a wider test matrix, you find the need to modify your algorithm to account for the new patterns that you encounter in the input. Everything might work fine for binaries built with the c2 shipped with VS2005, but a cool new optimization switch added in the VS2008 c2, or brand new machine instructions added by the hardware vendors, might render your tool useless for these binaries. The test team needs to track such changes and continuously add to its test matrix.

2) Another challenge in testing reverse engineering tools is verifying that you read/raised the binary correctly. The possible techniques that come to mind are:

a. Adding asserts and trace during different phases of the raise. This solution is suitable neither for automated scenario testing nor for release builds, so I won’t dwell on this technique here.

b. Verifying semantic correctness of the raise: This can be done by lowering the raised IR back to binary level to generate a cloned binary and then verifying the clone by either:

1) Executing the original and cloned binary and comparing the execution results

2) Comparing the original and cloned binary. For managed assemblies this can be done through metadata comparison, but there is no trivial technique for comparing native binaries and pdbs.

c. Dumping the raised IR for manual validation/comparison with IR dump during compile. This technique is suitable only if you have the IR dump during compile, against which you wish to compare.

None of these can individually provide a complete testing strategy. Strategy (b) seems appropriate for breadth-wise and robustness testing against Real World binaries, but it does not guarantee 100% correctness: a piece of code which wasn’t raised correctly might lie inside a conditional statement and never get hit during normal execution flow. On the other hand, strategy (c) seems more appropriate for depth-wise testing against targeted test code samples. But comparing IR dumps for huge Real World binaries will be non-trivial, as you would expect some amount of noise in the dumps. Hence a hybrid of all these is normally required.
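To make the first variant of strategy (b) concrete, here is a minimal sketch in plain C++ of running the original and the cloned binary and comparing what they print and how they exit. It is not part of Phoenix: the names `RunResult`, `runAndCapture` and `sameObservableBehavior` are hypothetical, and it assumes a platform that provides `popen`/`pclose` (or `_popen`/`_pclose` on Windows).

```cpp
#include <stdio.h>
#include <stdexcept>
#include <string>

#ifdef _WIN32
#define TEST_POPEN _popen
#define TEST_PCLOSE _pclose
#else
#define TEST_POPEN popen
#define TEST_PCLOSE pclose
#endif

// Observable result of one run: everything written to stdout plus the raw
// status value returned when the pipe is closed.
struct RunResult {
    std::string output;
    int status;
};

// Runs a command line through the platform shell and captures its stdout.
RunResult runAndCapture(const std::string& command) {
    FILE* pipe = TEST_POPEN(command.c_str(), "r");
    if (!pipe) {
        throw std::runtime_error("could not start: " + command);
    }
    RunResult result{"", 0};
    char buffer[4096];
    size_t bytesRead = 0;
    while ((bytesRead = fread(buffer, 1, sizeof buffer, pipe)) > 0) {
        result.output.append(buffer, bytesRead);
    }
    result.status = TEST_PCLOSE(pipe);
    return result;
}

// The clone passes only if both stdout and the exit status match the original.
bool sameObservableBehavior(const std::string& originalCommand,
                            const std::string& cloneCommand) {
    const RunResult original = runAndCapture(originalCommand);
    const RunResult clone = runAndCapture(cloneCommand);
    return original.status == clone.status && original.output == clone.output;
}
```

A check like this only covers the code paths that the chosen inputs actually exercise, which is exactly the limitation of strategy (b) described above.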

How Phoenix makes it easier to write tests for these tools

Now that I have given you some background on different testing strategies for Reverse engineering tools, I would like to explain how Phoenix aids us in implementing tests for each of these strategies. The modular and extensible architecture of Phoenix provides plug-in points for adding tests at various phases of the tool. I will mainly concentrate on testing strategy b (cloning the binary) and strategy c (correctness test through dumps).

Cloning Binaries through Pewriter

The Phoenix Framework provides Pewriter APIs which enable the client to generate binaries from IR. Using these APIs we generate a clone of the original binary for our “Verifying semantic correctness” test. Once the clone has been generated, the exact technique to compare it with the original binary is for the client to decide. You can find more information about Pewriter in the Phoenix SDK Documentation.

Correctness Test through dumps

A basic correctness test for a Pereader based tool might dump out the IR and any other information relevant to the analysis tool, and then compare it to a previously generated baseline dump or an expected output. In a more advanced automated test, you would want to have the baseline dump generated by a tool during compilation of the source, and have a compare tool which compares these dumps.

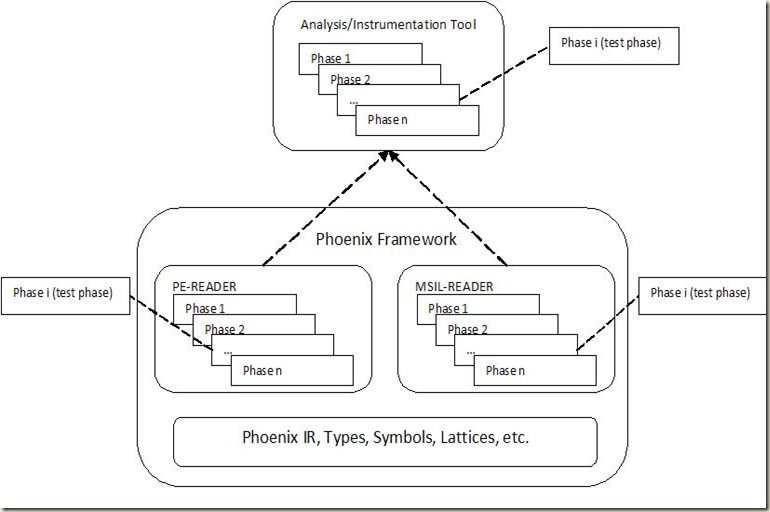

Let us consider the basic test first. Figure below demonstrates how you would design it using Phoenix:

The above figure shows how each of the Phoenix Components (e.g. the Pereader framework to raise binaries) can be represented as a list of Phoenix Phases. Our test can be implemented as a modular Test phase which plugs into the PhaseList for the client tool and is executed when the client triggers its PhaseList execution. Let us consider an example scenario to understand this well.
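Before looking at the Phoenix scenario, the plug-in idea itself can be shown in a few lines of plain C++. This is only an illustrative analogy and does not use the Phoenix API: the `Unit`, `Phase`, `RaisePhase`, `DumpTestPhase` and `PhaseList` types and the `buildPhaseList` function are all hypothetical stand-ins.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Stand-in for the IR of one unit; here it only records which phases ran.
struct Unit {
    std::string name;
    std::vector<std::string> log;
};

// Every phase exposes the same entry point, so the list can run any of them.
class Phase {
public:
    virtual ~Phase() = default;
    virtual void execute(Unit& unit) = 0;
};

// Hypothetical stand-in for the phases that raise a binary to IR.
class RaisePhase : public Phase {
public:
    void execute(Unit& unit) override { unit.log.push_back("raised " + unit.name); }
};

// Hypothetical test phase that would dump the IR for later comparison.
class DumpTestPhase : public Phase {
public:
    void execute(Unit& unit) override { unit.log.push_back("dumped " + unit.name); }
};

// Ordered list of phases, executed front to back on a unit.
class PhaseList {
public:
    void append(std::unique_ptr<Phase> phase) { phases_.push_back(std::move(phase)); }
    void run(Unit& unit) {
        for (auto& phase : phases_) {
            phase->execute(unit);
        }
    }
    std::size_t size() const { return phases_.size(); }

private:
    std::vector<std::unique_ptr<Phase>> phases_;
};

// The tool builds its normal list and appends the test phase only on request.
PhaseList buildPhaseList(bool enableTest) {
    PhaseList list;
    list.append(std::make_unique<RaisePhase>());
    if (enableTest) {
        list.append(std::make_unique<DumpTestPhase>());
    }
    return list;
}
```

The point of the design is that the tool's own phases do not change: the test is just one more entry in the list, switched on or off by a flag.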

Consider an analysis tool which uses the Pereader Phases to raise the binary and runs static analysis using the Phoenix lattice framework (if you are unfamiliar with the lattice framework you may ignore the specifics of the test). A test for this tool could be implemented as a LatticeTestPhase which runs lattice simulation on the raised IR and then dumps out the relevant lattice cell properties. The following code snippet shows how we can implement and plug in the Lattice Test Phase into the tool.

//Implementation of Lattice Test Phase
namespace TestPhases
{
    public ref class LatticeTestPhase : public Phx::Phases::Phase
    {
    public:
        // Factory method: creates the test phase for the given configuration
        static TestPhases::LatticeTestPhase ^
        New
        (
            Phx::Phases::PhaseConfiguration ^ configuration
        );

        static void StaticInitialize();

    protected:
        // Entry point invoked when the PhaseList reaches this phase
        virtual void
        Execute
        (
            Phx::Unit ^ unit
        ) override;

        // Helpers that dump the lattice cell properties to the test output
        void OutputConstantCellProperties
        (
            Phx::Lattices::ConstantCell ^ constantCell
        );

        void OutputDeadCellProperties
        (
            Phx::Lattices::ConstantCell ^ deadCell
        );

        …

        // Runs lattice simulation on one function in the given direction and mode
        void
        RunSimulation
        (
            Phx::FunctionUnit ^ functionUnit,
            Phx::Simulators::RegionSimulator ^ simulator,
            Phx::Collections::LatticeList ^ latticeList,
            Phx::Lifetime ^ currentLifetime,
            Phx::Simulators::SimulatorDirection directionToTest,
            Phx::Simulators::SimulatorMode modeToTest
        );
    };
}

//Append the lattice test phase to the list of phases to be executed for the Pereader based tool
Phx::Phases::Phase ^
PEReaderBasedTool::BuildFunctionPhaseList
(
    Phx::Phases::PhaseConfiguration ^ configuration
)
{
    Phx::Phases::PhaseList ^ unitList;

    // Append RaiseIR phase and other pereader phases
    …

    if (this->doLatticeTest)
    {
        // Plug in the Lattice test phase into Tool’s PhaseList
        unitList->AppendPhase(TestPhases::LatticeTestPhase::New(configuration));
    }

    …
}

We can enable the lattice test by just turning on a switch for our client application. The actual validation of the lattice output will be done against a manually generated expected output file.

Automating the Correctness test

The next logical extension to our test is to automate it end-to-end. This can be done by using a Phoenix based c2 during the compile phase. Just as we plugged the LatticeTestPhase into the PhaseList for the Pereader based analysis tool, we can write a Phoenix c2 plug-in to insert the same LatticeTestPhase into the c2 PhaseList. The LatticeTestPhase will then be executed during compile as well as after raise. A simple comparison tool can compare the two outputs and provide an end-to-end automated test.
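As a rough idea of what such a comparison tool could look like, here is a minimal sketch in plain C++. It makes two assumptions that are not from Phoenix: the dumps are plain text compared line by line, and the only noise is hexadecimal values such as addresses, which are replaced with a placeholder before comparing. The names `normalizeLine`, `normalizedLines` and `firstMismatch` are hypothetical.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Replaces every 0x... literal with a fixed token and trims trailing
// whitespace, so run-specific values do not show up as differences.
std::string normalizeLine(const std::string& line) {
    std::string out;
    std::size_t i = 0;
    while (i < line.size()) {
        const bool hexStart =
            line[i] == '0' && i + 2 < line.size() &&
            (line[i + 1] == 'x' || line[i + 1] == 'X') &&
            std::isxdigit(static_cast<unsigned char>(line[i + 2]));
        if (hexStart) {
            i += 2;
            while (i < line.size() &&
                   std::isxdigit(static_cast<unsigned char>(line[i]))) {
                ++i;
            }
            out += "<addr>";
        } else {
            out += line[i++];
        }
    }
    while (!out.empty() && std::isspace(static_cast<unsigned char>(out.back()))) {
        out.pop_back();
    }
    return out;
}

// Splits a dump into lines and normalizes each one.
std::vector<std::string> normalizedLines(const std::string& dump) {
    std::vector<std::string> lines;
    std::istringstream in(dump);
    std::string line;
    while (std::getline(in, line)) {
        lines.push_back(normalizeLine(line));
    }
    return lines;
}

// Returns the 1-based number of the first differing line, or 0 when the two
// dumps are identical after normalization.
std::size_t firstMismatch(const std::string& compileDump,
                          const std::string& raiseDump) {
    const std::vector<std::string> a = normalizedLines(compileDump);
    const std::vector<std::string> b = normalizedLines(raiseDump);
    const std::size_t common = std::min(a.size(), b.size());
    for (std::size_t i = 0; i < common; ++i) {
        if (a[i] != b[i]) {
            return i + 1;
        }
    }
    return a.size() == b.size() ? 0 : common + 1;
}
```

Masking every hexadecimal literal is a blunt filter and would also hide a genuinely wrong constant, so a real tool would want a narrower rule for what counts as noise.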

Conclusion

Compare this with having to implement the test for a non-Phoenix based tool. The points to consider are:

1) Does your compiler support adding plug-ins? If the answer is yes,

a. Can we re-use the same plug-in after raise?

b. Does the plug-in need to be statically linked? (With Phoenix, the plug-in can lie in a different dll)

2) Does the test plug-in logically fit into your overall architecture or do you require providing some dirty hooks to plug it in?

This is what makes Phoenix such a cool framework. Just when you feel you have identified all the places where you can put it to use, up comes a new one :-). Hopefully you have enjoyed reading this, and if you find another new way in which Phoenix can be used, please do share it with us.

Terminology:

c2: VC++ compiler backend

Binary Raising: Process of reverse engineering PE files into IR.

Phoenix Simulation based optimization and lattices: Phoenix Simulation-Based Optimization (SBO) is a framework for analyzing a function by simulating the execution of Intermediate Representation (IR) in an abstract interpreter called a lattice. Lattices can be used as a foundation to determine correct transformations in an optimizing compiler.
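For readers new to lattices, the textbook constant-propagation lattice gives a feel for what a lattice cell holds. The sketch below is plain C++ and is not the Phoenix ConstantCell API: the `Kind` and `Cell` types and the `meet` function are hypothetical names chosen for illustration.

```cpp
// Classic three-level constant-propagation lattice:
//   Top      = nothing known yet about the value
//   Constant = the value is known to be one specific constant
//   Bottom   = the value is known not to be a single constant
enum class Kind { Top, Constant, Bottom };

struct Cell {
    Kind kind;
    long value;  // meaningful only when kind == Kind::Constant
};

inline Cell makeTop() { return {Kind::Top, 0}; }
inline Cell makeConstant(long v) { return {Kind::Constant, v}; }
inline Cell makeBottom() { return {Kind::Bottom, 0}; }

inline bool operator==(const Cell& a, const Cell& b) {
    if (a.kind != b.kind) {
        return false;
    }
    return a.kind != Kind::Constant || a.value == b.value;
}

// Combines the facts arriving from two control-flow paths. The result can
// only move down the lattice (Top -> Constant -> Bottom), which is what
// guarantees that the simulation terminates.
Cell meet(const Cell& a, const Cell& b) {
    if (a.kind == Kind::Top) {
        return b;
    }
    if (b.kind == Kind::Top) {
        return a;
    }
    if (a.kind == Kind::Constant && b.kind == Kind::Constant &&
        a.value == b.value) {
        return a;
    }
    return makeBottom();
}
```

If both branches of a conditional assign 5 to a variable, the meet is still the constant 5; if one assigns 5 and the other 7, the meet drops to Bottom and no constant can be assumed after the join.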
