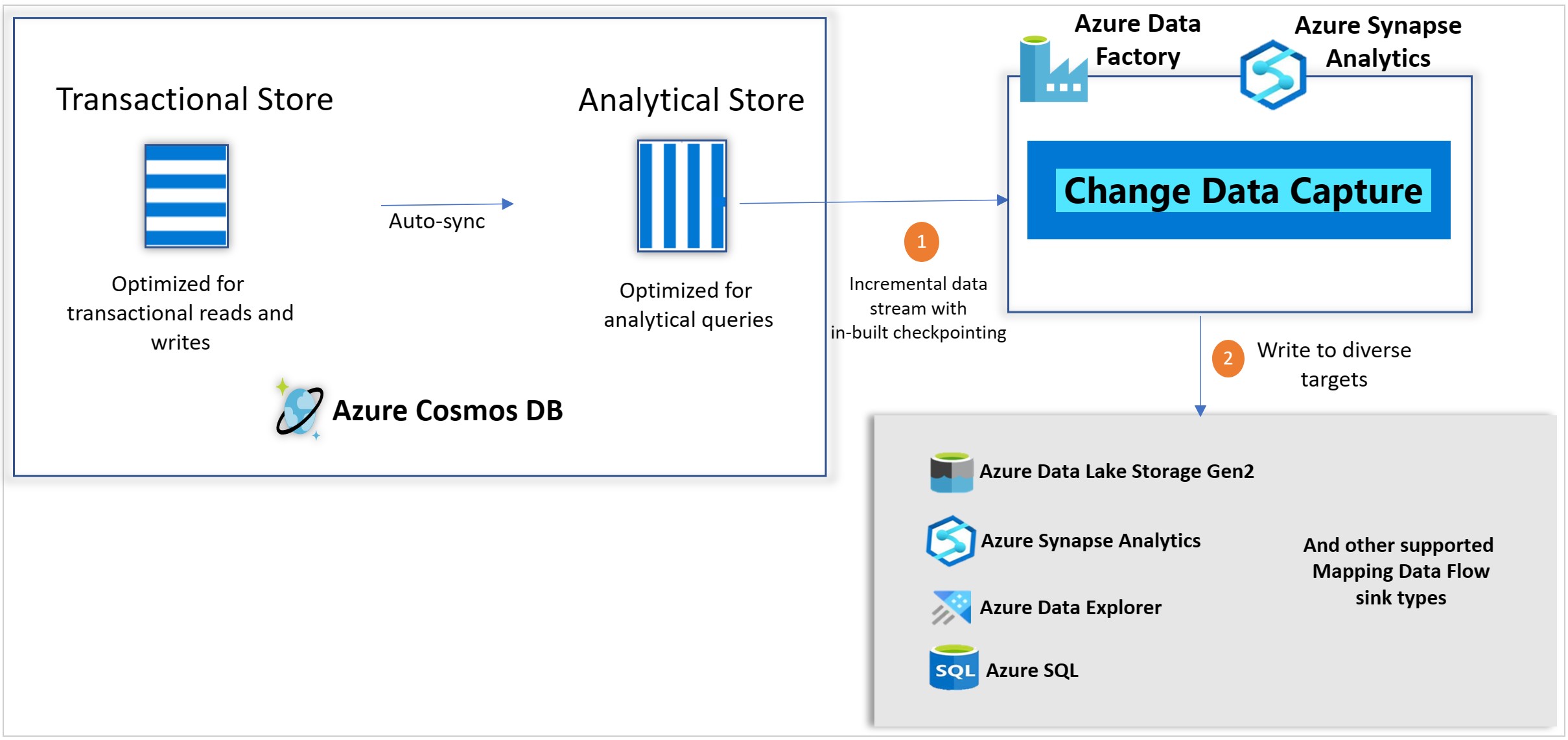

Azure Cosmos DB analytical store now supports Change Data Capture (CDC), for Azure Cosmos DB API for NoSQL and Azure Cosmos DB API for MongoDB. This capability, available in public preview, allows you to efficiently consume a continuous and incremental feed of changed (inserted, updated, and deleted) data from analytical store. CDC is seamlessly integrated with Azure Synapse and Azure Data Factory, providing a scalable no-code experience for high data volume. As CDC is based on analytical store, it does not consume provisioned RUs, does not affect the performance of your transactional workloads, provides lower latency and has lower TCO.

For the supported sink types, see the Mapping Data Flow documentation.

Capabilities

In addition to providing incremental data feed from analytical store to diverse targets, CDC supports the following capabilities.

- Support for applying filters, projections, and transformations on the change feed via source query

You can optionally use a source query to specify filters, projections, and transformations, all of which are pushed down to the analytical store. Below is a sample source query that captures only incremental records with Category = 'Urban', projects only a subset of fields, and applies a simple transformation.

    select ProductId, Product, Segment,
           concat(Manufacturer, '-', Category) as ManufactCategory
    from c
    where Category = 'Urban'

- Support for capturing deletes and intermediate updates

Analytical store CDC captures deleted records and intermediate updates. The captured deletes and updates can be applied on sinks that support delete and update operations. The {_rid} value uniquely identifies each record, so specifying {_rid} as the key column on the sink side ensures that updates and deletes are reflected on the sink.
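To illustrate why a unique key such as {_rid} is enough for a sink to stay in step with inserts, updates, and deletes, here is a minimal Python sketch. It is not how Azure Data Factory or Synapse implement this. The `op` field, its values, and the in-memory dictionary standing in for the sink are all assumptions made for the example.

```python
from typing import Any

# Hypothetical change records. The "op" field and its values are illustrative
# names only, not the actual CDC record format.
changes = [
    {"op": "insert", "_rid": "r1", "Product": "Bike", "Price": 100},
    {"op": "update", "_rid": "r1", "Product": "Bike", "Price": 120},
    {"op": "insert", "_rid": "r2", "Product": "Helmet", "Price": 30},
    {"op": "delete", "_rid": "r2"},
]


def apply_changes(sink: dict[str, dict[str, Any]], feed: list[dict[str, Any]]) -> None:
    """Apply a change feed, in order, to a sink keyed by _rid."""
    for change in feed:
        key = change["_rid"]
        if change["op"] == "delete":
            # A delete removes the row with the matching key, if present.
            sink.pop(key, None)
        else:
            # Inserts and updates both become an upsert on the key.
            sink[key] = {k: v for k, v in change.items() if k != "op"}


sink: dict[str, dict[str, Any]] = {}
apply_changes(sink, changes)
print(sink)
```

After the feed is applied, the sink holds only the latest version of `r1`, and `r2` is gone, because every operation was matched to its row through the key.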

- Filter change feed for a specific type of operation (Insert | Update | Delete | TTL)

You can filter the CDC feed for a specific type of operation. For example, you can capture only the insert and update operations, ignoring the user-delete and TTL-delete operations.

- Support for schema alterations, flattening, row modifier transformations and partitioning

In addition to specifying filters, projections and transformations via source query, you can also perform advanced schema operations such as flattening, applying advanced row modifier operations and dynamically partitioning the data based on the given key.

- Efficient incremental data capture with internally managed checkpoints

Each change in a Cosmos DB container appears exactly once in the CDC feed, and the checkpoints are managed internally for you. This addresses the following disadvantages of the common pattern of using custom checkpoints based on the “_ts” value:

- The “_ts” filter is applied against the data files, which does not always guarantee a minimal data scan. The internally managed GLSN-based checkpoints in the new CDC capability identify incremental data from metadata alone, which guarantees minimal data scanning in each stream.

- The analytical store sync process does not guarantee “_ts”-based ordering, so an incremental record’s “_ts” can be lower than the last checkpointed “_ts”, and that record would be missed in the incremental stream. The new CDC does not rely on “_ts” to identify incremental records and thus guarantees that none are missed.

With CDC, changes are not limited to a fixed data retention period. Multiple change feeds on the same container can be consumed simultaneously. Changes can be synchronized “from the beginning”, “from a given timestamp”, or “from now”.
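The missed-record risk of “_ts”-based checkpoints described above can be shown with a small Python toy model. It does not model the actual GLSN mechanism, whose internals are not described here. The `seq` field is a hypothetical stand-in for any checkpoint value that increases in the order records are synced, and the record values are made up.

```python
# Toy model: records are listed in the order they are synced to analytical
# store. "_ts" is the write timestamp; "seq" is a hypothetical, monotonically
# increasing sync sequence number used only for this illustration.
synced = [
    {"id": "a", "_ts": 100, "seq": 1},
    {"id": "b", "_ts": 105, "seq": 2},
    {"id": "c", "_ts": 103, "seq": 3},  # written before "b" but synced after it
    {"id": "d", "_ts": 110, "seq": 4},
]


def read_since(records, field, checkpoint):
    """Return records whose `field` exceeds the checkpoint, plus the new checkpoint."""
    batch = [r for r in records if r[field] > checkpoint]
    new_checkpoint = max((r[field] for r in batch), default=checkpoint)
    return batch, new_checkpoint


# First read: only "a" and "b" have been synced so far.
_, ts_checkpoint = read_since(synced[:2], "_ts", 0)
_, seq_checkpoint = read_since(synced[:2], "seq", 0)

# Second read: all four records are now visible.
ts_batch, _ = read_since(synced, "_ts", ts_checkpoint)
seq_batch, _ = read_since(synced, "seq", seq_checkpoint)

ts_ids = [r["id"] for r in ts_batch]
seq_ids = [r["id"] for r in seq_batch]
print("_ts checkpoint picks up:", ts_ids)
print("seq checkpoint picks up:", seq_ids)
```

In this model, the “_ts” checkpoint has advanced to 105 after the first read, so record `c` (with “_ts” 103) is skipped on the second read. The sequence-based checkpoint picks up both `c` and `d`.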

- Throughput isolation, lower latency and lower TCO

Operations on Cosmos DB analytical store do not consume provisioned RUs and so do not impact your transactional workloads. CDC with analytical store also has lower latency and lower TCO than using ChangeFeed on the transactional store. The lower latency comes from the better parallelism that analytical store enables for data processing, which also reduces the overall TCO and helps you drive cost efficiencies.

- No-code, low-touch, non-intrusive end-to-end integration

The seamless, native integration of analytical store CDC with Azure Synapse and Azure Data Factory provides a no-code, low-touch experience.

Scenarios

- Consuming incremental data from Cosmos DB

Consider using analytical store CDC if you are currently using, or planning to use, any of the following:

- Incremental data capture using Azure Data Factory Data Flow or Copy activity

- One time batch processing using Azure Data Factory

- Streaming Cosmos DB data

- Capturing deletes, intermediate changes, applying filters or projections or transformations on Cosmos DB Data

- Analytical store sync latency

Note that analytical store can take up to 2 minutes to sync data from the transactional store.

- Incremental feed to analytical platform of your choice

The change data capture capability enables an end-to-end analytical story, giving you the flexibility to write Cosmos DB data to any of the supported sink types. It also lets you bring Cosmos DB data into a centralized data lake and join it with data from diverse data sources. You can flatten the data, partition it, and apply more transformations in either Azure Synapse or Azure Data Factory.

Setting up CDC in analytical store

You can consume incremental analytical store data from a Cosmos DB container using either Azure Synapse or Azure Data Factory, once the Cosmos DB account has been enabled for Synapse Link and analytical store has been enabled on a new container or an existing container.

In the Azure Synapse data flow or the Azure Data Factory data flow, choose “Azure Cosmos DB for NoSQL” as the Inline dataset type and “Analytical” as the Store type.

Please note that the linked service interface for Azure Cosmos DB for MongoDB API is not yet available in Dataflow. However, you can use your account’s document endpoint with the “Azure Cosmos DB for NoSQL” linked service interface as a workaround until the Mongo linked service is supported.

For example, on a NoSQL linked service, choose “Enter Manually” to provide the Cosmos DB account info, and use the account’s document endpoint (e.g. https://<accturi>.documents.azure.com:443/) instead of the Mongo endpoint (e.g. mongodb://<accturi>.mongo.cosmos.azure.com:10255/).
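As a convenience, the endpoint substitution described above can be sketched in Python. This is only a string transformation based on the two example URL patterns given here. It assumes the account name is the leading host label in both endpoints, and `myaccount` is a hypothetical account name. Verify the resulting endpoint against your account before using it.

```python
from urllib.parse import urlparse

MONGO_SUFFIX = ".mongo.cosmos.azure.com"


def document_endpoint_from_mongo(mongo_endpoint: str) -> str:
    """Derive the document endpoint from a Mongo endpoint of the same account.

    Assumes the host has the form <account>.mongo.cosmos.azure.com, as in the
    example above; any other host is rejected rather than guessed at.
    """
    host = urlparse(mongo_endpoint).hostname
    if host is None or not host.endswith(MONGO_SUFFIX):
        raise ValueError(f"Unexpected Mongo endpoint host: {host!r}")
    account = host[: -len(MONGO_SUFFIX)]
    return f"https://{account}.documents.azure.com:443/"


# "myaccount" is a placeholder account name.
endpoint = document_endpoint_from_mongo(
    "mongodb://myaccount.mongo.cosmos.azure.com:10255/"
)
print(endpoint)
```

The resulting value is what you would paste into the “Enter Manually” field of the NoSQL linked service.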

In regards to:

> Please note that the linked service interface for Azure Cosmos DB for MongoDB API is not available on Dataflow yet. However, you would be able to use your account’s document endpoint with the “Azure Cosmos DB for NoSQL” linked service interface as a work around until the Mongo linked service is supported.

I am not able to see how I can connect my "source=Azure Cosmos DB for MongoDB API" using the method explained for Data Factory and configure MongoDB Atlas as a target.

When I add the CDC from ADF>> Change Data Capture (preview) >> "New CDC...

Hello!

– MongoDB Atlas is not a supported sink in mapping data flow.

– Cosmos DB AS-CDC can currently be accessed through Dataflow, and we are still working on enabling it under the “top level CDC resource” in ADF. Based on your description, you are trying the top level CDC resource route in ADF.

Tks

Hello!

Looks interesting.

Regarding the following two statements

* “Analytical store CDC captures deleted records and intermediate updates.”

* “Changes can be synchronized from “the Beginning””

Does that mean that the entire history for all document versions of all documents is retained?

Correct.

Is it possible to consume that Change Data Capture with a Spark connector?

Hello Thiago. No, it is not. We support ADF and Synapse data flows. Tks!

Got it.

Do you know if this feature is on the roadmap?

Not for now. Maybe in the future.

Hi Revin, Anitha, Rodrigo,

Thanks for the post. Very interesting.

Just a few words to mention that the first link to “Azure Cosmos DB analytical store” is broken. Nothing important as the right link can be guessed but I thought you probably wanted to know.

Thank you for your help. It’s fixed now!!