In today’s digital world, customers expect applications to be feature-rich, tailored to their needs, and delivered quickly. For a modern business to survive and thrive, its applications need to evolve quickly, scale fast, and be highly resilient. Businesses need swift innovation through cloud-native architectures to meet these growing customer expectations.

Challenges Developers Face

- Monolithic Architecture: Tightly coupled components across layers make changes expensive and time consuming. Even minor changes require the full application to be retested and redeployed.

- Deployment process riddled with compatibility issues: During development, testing, and deployment, DevOps teams often spend hours or even days trying to find and fix problems with the environment. Inconsistent environments are the number one bottleneck slowing the flow of pipelines.

Modern Application Development Approach

Modern application development enables developers to innovate rapidly by using cloud-native architectures built on microservices. It is the method of choice for building and running large, complex applications and services. The microservice architecture arranges an application as a collection of loosely coupled services, each of which can be developed, tested, deployed, and versioned independently.

Containerization

Today, organizations are using containerization to reduce deployment complexity and increase release velocity. Containerization bundles the application’s code together with its configuration files, libraries, and dependencies. By eliminating the dependency on the underlying environment, containerization removes a critical bottleneck and lets applications run consistently across environments.

Docker is an open-source project for automating the deployment of applications as portable, self-sufficient containers that can run in the cloud or on-premises. Docker is now the de facto standard for containerization and is supported by the most significant vendors in the Windows and Linux ecosystems.

Container Orchestration

Containers do a great job of increasing portability and simplifying DevOps for organizations. However, when deployed in large numbers, they create their own set of problems. Containers still need to be managed. They have to be deployed in host environments, monitored, made fault tolerant, and scaled up or down based on demand. Just as Docker has become the de facto standard for containerization, Kubernetes has become the equivalent for container orchestration.

Kubernetes manages container-based applications and their associated networking and storage components. Kubernetes focuses on the application workloads and provides a declarative approach to deployments, backed by a robust set of APIs for management operations.
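
To make the declarative approach more concrete, here is a minimal sketch that builds a Kubernetes Deployment manifest in Python and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The workload name `todo-web` and the image reference are hypothetical placeholders, not values taken from our sample.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 80) -> dict:
    """Describe the desired state of a workload; Kubernetes works to match it."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # How many identical pods should be running at any time.
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical name and image, used only for illustration.
    manifest = deployment_manifest("todo-web", "example.azurecr.io/todo-web:1.0")
    print(json.dumps(manifest, indent=2))
```

The manifest states what should exist rather than the steps to create it. Changing `replicas` and applying the file again updates the same Deployment instead of creating a second one.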

Continuous Delivery

Imperative deployment methods, where each step is scripted or run by hand, work well for small deployments but become unmanageable as environments scale. Infrastructure as Code (IaC) is a key DevOps practice and a component of continuous delivery. With IaC, DevOps teams can work together with a unified set of practices and tools to deliver applications and their supporting infrastructure rapidly and reliably at scale.
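
The difference between scripting individual steps and describing infrastructure as code can be illustrated with a toy model. The sketch below compares a desired state with the actual state and derives the steps needed to reconcile them. It is only an illustration of the idea, not how Azure Resource Manager or Kubernetes implement it, and the resource names and settings are hypothetical.

```python
def plan(desired: dict, actual: dict) -> list:
    """Return the (action, name) steps needed to move `actual` to `desired`."""
    steps = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            steps.append(("create", name))
        elif actual[name] != spec:
            steps.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            steps.append(("delete", name))
    return steps


def converge(desired: dict, actual: dict) -> dict:
    """Apply the plan to an in-memory copy of the environment."""
    result = dict(actual)
    for action, name in plan(desired, actual):
        if action == "delete":
            del result[name]
        else:
            result[name] = desired[name]
    return result


if __name__ == "__main__":
    # Hypothetical resources, used only for illustration.
    desired = {"aks": {"nodes": 3}, "cosmos": {"consistency": "Session"}}
    actual = {"aks": {"nodes": 2}, "old-vm": {"size": "small"}}
    print(plan(desired, actual))
    # Once converged, running the plan again yields no further steps.
    print(plan(desired, converge(desired, actual)))
```

Because the description is the source of truth, running the same definition twice is safe: the second run finds nothing to change. That property is what makes a declarative definition easier to manage at scale than a sequence of one-off commands.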

Introducing our AKS + Cosmos DB Reference Sample

The approach and technologies used today for building and delivering modern applications solve many problems and help reduce complexity. However, these technologies can themselves introduce complexity and often require developers to learn new concepts and tools.

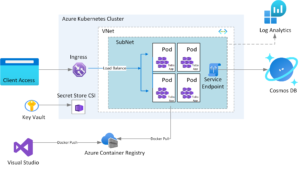

Deploying modern applications in an Azure environment requires developers to learn and understand a large number of services. These can include Azure Kubernetes Service (AKS), Managed Identity with Azure Active Directory, Azure Container Registry, Azure Networking, Azure Key Vault, Azure Log Analytics, and many more.

To help developers using Azure Cosmos DB become familiar with the concepts and services required to support cloud-native application development, we’ve built a comprehensive sample. It demonstrates how to containerize the simple To-Do application from our Quickstarts and deploy it into AKS with a host of Azure services properly configured for secure enterprise usage. This includes using managed identities for AKS and Azure Cosmos DB with role-based access control (RBAC) to control authorization to the data.
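
As a rough illustration of what identity-based access looks like from application code, here is a minimal Python sketch. It is not code from the sample. It assumes the `azure-identity` and `azure-cosmos` packages are installed, that the account lives in the public Azure cloud, and that the identity the code runs under has already been granted a Cosmos DB data-plane role. The account name is a hypothetical placeholder.

```python
def cosmos_endpoint(account_name: str) -> str:
    """Build the endpoint URL for a Cosmos DB account in the public Azure cloud."""
    return f"https://{account_name}.documents.azure.com:443/"


def make_client(account_name: str):
    """Create a Cosmos DB client that authenticates with a token instead of an account key."""
    # Imported lazily so cosmos_endpoint() works without the Azure SDK installed.
    from azure.cosmos import CosmosClient
    from azure.identity import DefaultAzureCredential

    # DefaultAzureCredential tries several credential sources in turn, including
    # a managed identity when running in Azure and a developer login locally.
    credential = DefaultAzureCredential()
    return CosmosClient(cosmos_endpoint(account_name), credential=credential)


if __name__ == "__main__":
    # "my-cosmos-account" is a hypothetical account name.
    print(cosmos_endpoint("my-cosmos-account"))
```

No key or connection string appears in the code or its configuration. Which operations succeed is decided by the RBAC role assignments on the account rather than by possession of a secret.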

Two choices for deployment

Azure Kubernetes Service provides a means for Kubernetes to provision and manage Azure services through the Azure Service Operator (ASO). ASO consists of custom resource definitions (CRDs) that define the Azure services to be managed. Users familiar with Azure will already know Azure Resource Manager (ARM), and may also know Bicep, a new domain-specific language (DSL) that provides a clean and concise means of provisioning and managing services in Azure.

To better demonstrate using these with Azure Cosmos DB, we’ve created two nearly identical samples. The first uses Bicep to deploy all the resources, and the second demonstrates how to use ASO for some of the Azure services in our sample.

Get started

To get started with our samples, visit our Azure Cosmos DB sample ToDo App on AKS Cluster GitHub repository. From there you can fork it to your own repository or clone it locally. Then follow the step-by-step guidance to containerize a web application, provision and configure all the required Azure services, configure AKS, and finally deploy our ToDo application.

Conclusion

Building modern applications in a cloud-native environment requires learning and understanding new concepts and new technologies. These can sometimes be daunting. However, once understood, they will allow you to respond to changing needs faster and run resilient and scalable applications in the cloud. We hope you enjoy this sample and find others like it helpful. We’d love your feedback. Feel free to let us know here in the comments or in the Issues list for our repo.
