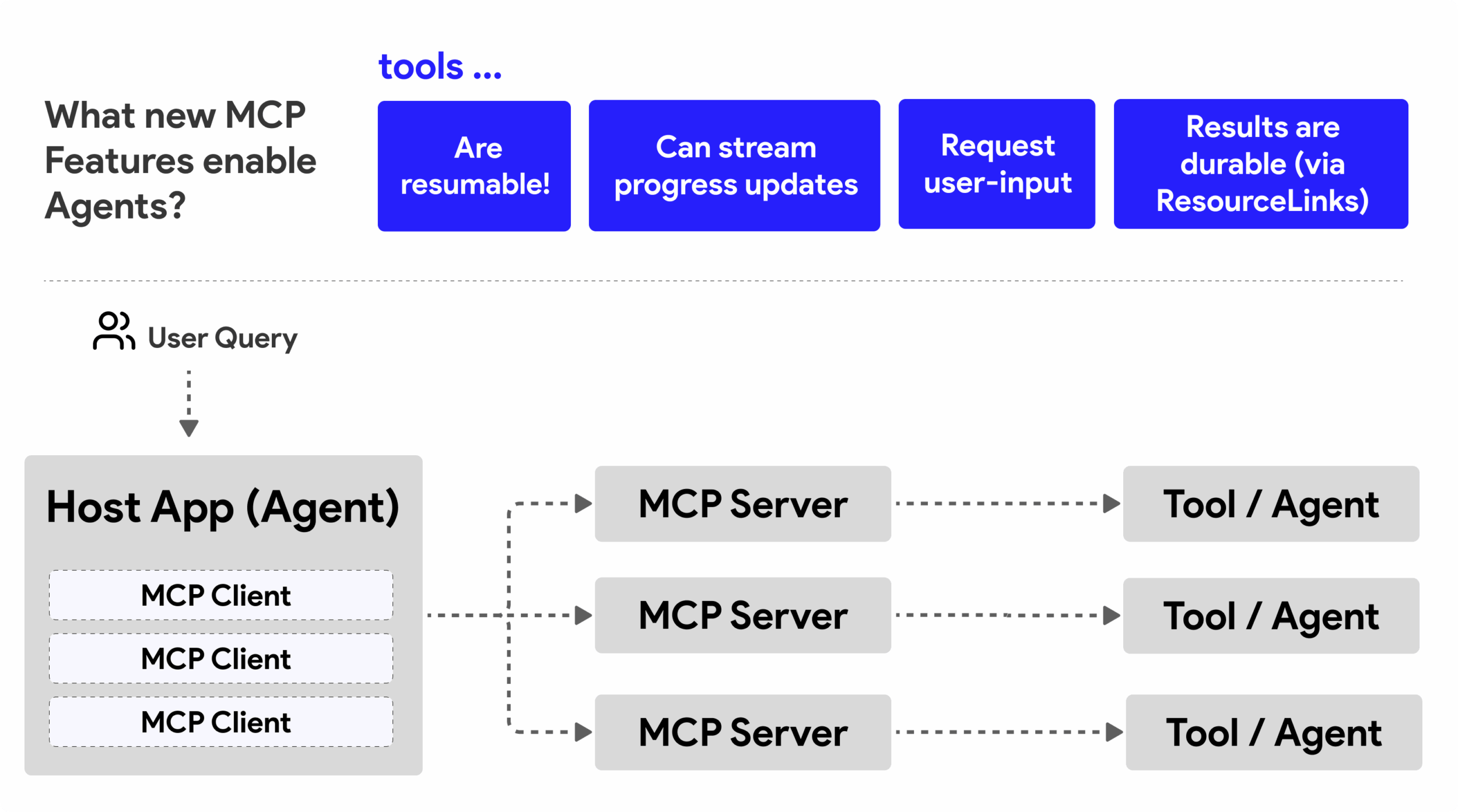

MCP has evolved significantly beyond its original goal of “providing context to LLMs.” With recent enhancements including resumable streams, elicitation, sampling, and notifications (progress and resources), MCP now provides a robust foundation for building complex agent-to-agent communication systems.

In this article, you’ll learn:

- How to build agent-to-agent communication on MCP, where both MCP hosts and tools act as intelligent agents

- Four key capabilities that make MCP tools “agentic” – streaming, resumability, durability, and multi-turn interactions

- How to build long-running agents with a complete Python implementation (an MCP server with a travel agent and a research agent that stream updates, request input, and are resumable)

TL;DR: Can You Build Agent-to-Agent Communication on MCP?

Yes – you can compose features such as resumable streams (for session continuity), elicitation (for requesting user input), sampling (for requesting AI assistance), and progress notifications (for real-time updates) to build complex agent interactions! Check out the full code used for this article on GitHub.

The Agent/Tool Misconception

As more developers explore tools with agentic behaviors (running for long periods, requiring additional input mid-execution, etc.), a common misconception is that MCP is unsuitable for them, largely because early examples of its tools primitive focused on simple request-response patterns.

This perception is outdated. The MCP specification has been significantly enhanced over the past few months with capabilities that narrow the gap for building long-running agentic behavior:

- Streaming & Partial Results: Real-time progress updates during execution (with open proposals for partial results). See docs on progress updates.

- Resumability: Clients can reconnect and continue after disconnection. See docs on resumability and redelivery on the Streamable HTTP transport.

- Durability: Results survive server restarts. Tools can now return Resource Links, which clients can poll or subscribe to. See docs on Resource Links.

- Multi-turn: Interactive input mid-execution via elicitation (requesting user input) and sampling (requesting LLM completions from the client/host application). See docs on elicitation and sampling.

These features can be composed to build complex agentic and multi-agent applications, all on top of the MCP protocol.

For simplicity, in this article we will refer to an “agent” as a tool with certain enhanced capabilities, rather than introducing an entirely new concept. These agents are tools available on MCP servers and can be invoked by host applications through standard MCP client connections. Importantly, the host applications themselves are also agents – they coordinate tasks, maintain state, and make intelligent routing decisions. This creates true agent-to-agent communication: orchestrator agents (hosts) communicating with specialist agents (tools). What makes these tools “agentic” is their ability to satisfy the infrastructure requirements outlined below.

What Makes an MCP Tool “Agentic”?

We define an agent as an entity that can operate autonomously over extended periods, handling complex tasks that may require multiple interactions or adjustments based on real-time feedback. To support these behaviors, MCP tools need four key infrastructure capabilities. Each is discussed below, along with a use case and how MCP supports it:

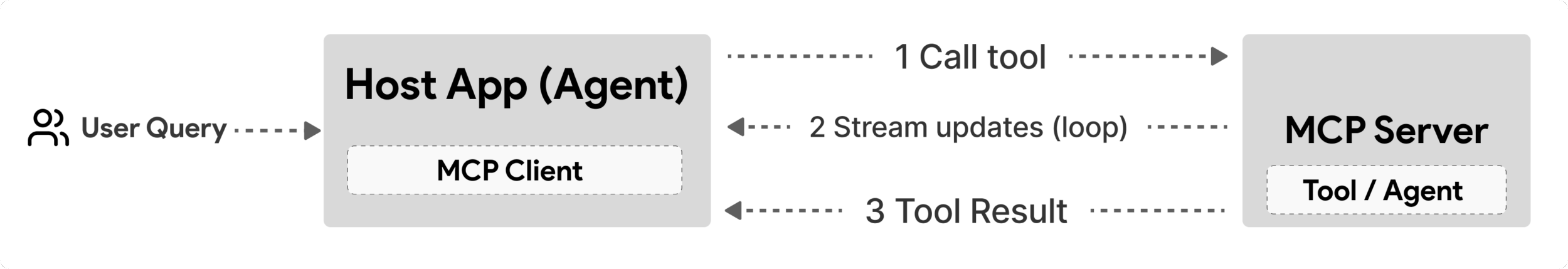

1. Streaming & Partial Results

Agents need mechanisms for real-time progress updates to keep users informed (observability, debugging, trust building) and for streaming intermediate results that allow systems to react and adjust execution based on partial outputs. MCP supports this through progress notifications that stream status updates in real time, with proposals underway to enhance partial result streaming.

| Feature | Use Case | MCP Support |
| --- | --- | --- |
| Real-time Progress Updates | User requests a codebase migration task. The agent streams progress: “10% – Analyzing dependencies… 25% – Converting TypeScript files… 50% – Updating imports…” | ✅ Progress notifications |
| Partial Results | “Generate a book” task streams partial results, e.g., 1) Story arc outline, 2) Chapter list, 3) Each chapter as completed. Host can inspect, cancel, or redirect at any stage. | ⚠️ Notifications can be “extended” to include partial results, e.g., by including them in the message payload. There are open proposals to improve on this: #383, #776 |
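To make the partial-results workaround concrete, here is a minimal sketch that builds `notifications/progress` JSON-RPC messages as plain dictionaries. The standard params are `progressToken`, `progress`, `total`, and `message`; carrying a partial result under the params' `_meta` field, and the `partialResult` key itself, are assumptions for illustration, not something the spec defines.

```python
from __future__ import annotations

import json
from typing import Any


def progress_notification(
    token: str | int,
    progress: float,
    total: float | None = None,
    message: str | None = None,
    partial_result: Any = None,
) -> dict[str, Any]:
    """Build a notifications/progress message (a JSON-RPC notification has no "id")."""
    params: dict[str, Any] = {"progressToken": token, "progress": progress}
    if total is not None:
        params["total"] = total
    if message is not None:
        params["message"] = message
    if partial_result is not None:
        # Hypothetical extension: the spec has no partial-result field,
        # so this sketch tucks the payload under _meta with a made-up key.
        params["_meta"] = {"partialResult": partial_result}
    return {"jsonrpc": "2.0", "method": "notifications/progress", "params": params}


# A "generate a book" task streaming its outline, chapter list, then chapters.
stages = [
    ("Story arc outline", {"outline": "Hero leaves home, returns changed"}),
    ("Chapter list", {"chapters": ["Departure", "Trials", "Return"]}),
    ("Chapter 1 complete", {"chapter": 1, "text": "..."}),
]
notifications = [
    progress_notification("book-42", i + 1, len(stages), label, result)
    for i, (label, result) in enumerate(stages)
]
print(json.dumps(notifications[1]["params"]))
```

A host that understands the extra key can render each stage as it arrives; a host that does not simply sees ordinary progress updates.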

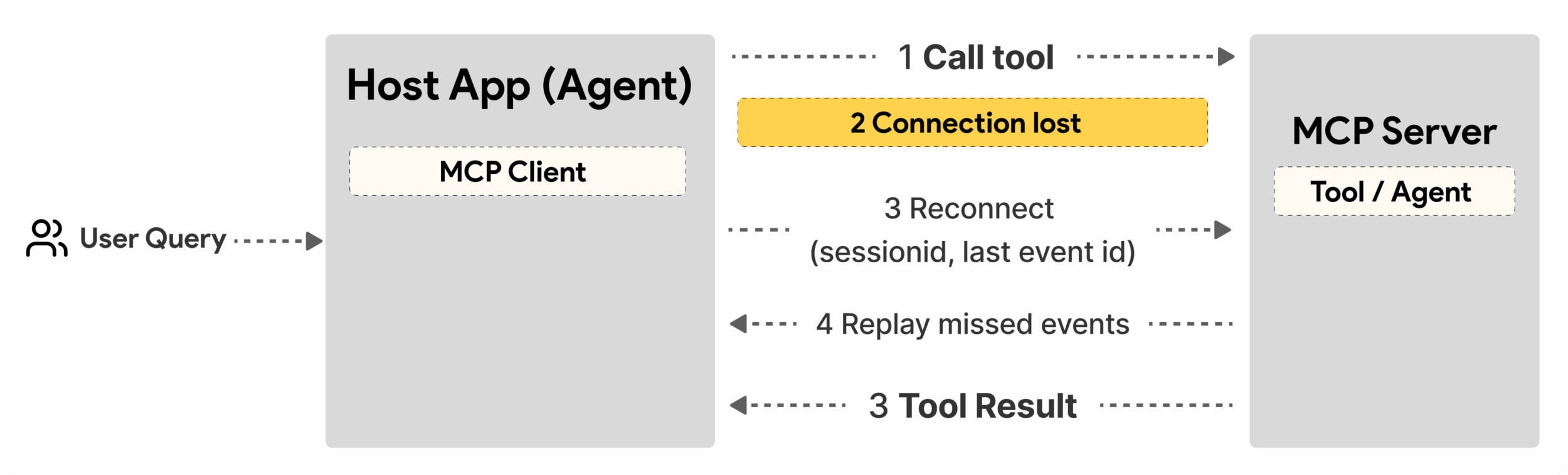

2. Resumability

Long-running agents benefit from maintaining task continuity across network interruptions, allowing clients to reconnect and resume where they left off rather than losing progress or restarting complex operations. The MCP StreamableHTTP transport already supports session resumption and message redelivery, which enable this capability.

Implementation Note

To enable session resumption, servers must implement an EventStore to enable event replays on client reconnection. The community is also exploring transport-agnostic resumable streams (see PR #975) to extend this capability beyond StreamableHTTP.

| Feature | Use Case | MCP Support |
| --- | --- | --- |
| Resumability | Client disconnects during long-running tool calls. Upon reconnection, session resumes with missed events replayed, continuing seamlessly from where it left off. | ✅ StreamableHTTP transport with session IDs, event replay, and EventStore |
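On the wire, resumption over StreamableHTTP relies on Server-Sent Events IDs: the server tags each event with an `id`, and a reconnecting client issues an HTTP GET carrying the last ID it saw in a `Last-Event-ID` header, alongside its `Mcp-Session-Id`. The standard-library sketch below makes no network calls; it parses an SSE payload, remembers the last event ID, and builds the headers for the resume request. The function names and the session ID value are illustrative, and the parser skips SSE details such as `event:` and `retry:` fields.

```python
from __future__ import annotations

import json


def _field_value(line: str, name: str) -> str:
    # "data: x" and "data:x" are equivalent; SSE strips one leading space.
    value = line[len(name) + 1:]
    return value[1:] if value.startswith(" ") else value


def parse_sse(payload: str) -> list[tuple[str | None, dict]]:
    """Split an SSE payload into (last event ID, parsed JSON data) pairs."""
    events: list[tuple[str | None, dict]] = []
    last_event_id: str | None = None  # persists across events, as in SSE
    data_lines: list[str] = []
    for line in payload.splitlines():
        if line == "":
            # A blank line dispatches the event accumulated so far.
            if data_lines:
                events.append((last_event_id, json.loads("\n".join(data_lines))))
            data_lines = []
        elif line.startswith("id:"):
            last_event_id = _field_value(line, "id")
        elif line.startswith("data:"):
            data_lines.append(_field_value(line, "data"))
        # Other fields (event:, retry:) and comment lines (":") are ignored.
    return events


def resume_headers(session_id: str, last_event_id: str) -> dict[str, str]:
    """Headers for the HTTP GET that reopens the stream after a disconnect."""
    return {
        "Accept": "text/event-stream",
        "Mcp-Session-Id": session_id,
        "Last-Event-ID": last_event_id,
    }


stream = (
    "id: 7\n"
    'data: {"jsonrpc": "2.0", "method": "notifications/progress", '
    '"params": {"progressToken": 1, "progress": 25}}\n'
    "\n"
    "id: 8\n"
    'data: {"jsonrpc": "2.0", "method": "notifications/progress", '
    '"params": {"progressToken": 1, "progress": 50}}\n'
    "\n"
)
events = parse_sse(stream)
last_seen = events[-1][0]  # persist this; it must survive the dropped connection
headers = resume_headers("session-abc", last_seen)
```

The server-side counterpart is the EventStore: given `Last-Event-ID: 8`, it replays whatever it stored after event 8 on that stream.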

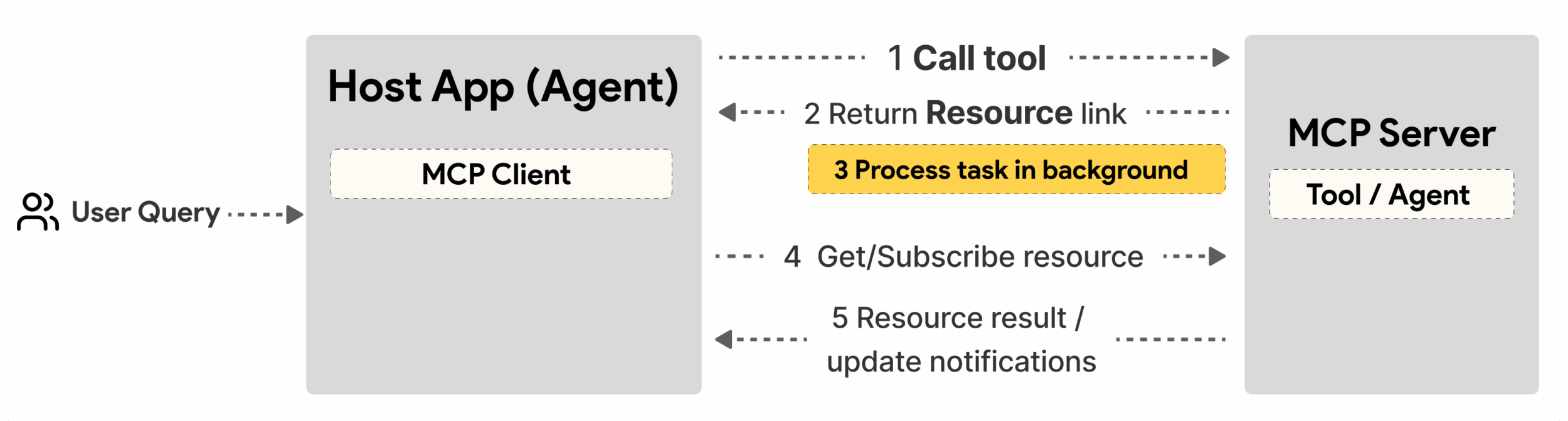

3. Durability

Long-running agents need persistent state that survives server restarts and enables out-of-band status/result checking, allowing progress tracking across sessions. This can be implemented with MCP through Resource links — in response to a tool call, tools can create a resource, immediately return its link, then continue processing in the background while updating the associated resource. Clients can poll or subscribe to the returned resource for status updates and results.

Scalability Consideration

Polling resources or subscribing for updates can consume significant resources at scale. The MCP community is exploring webhook and trigger mechanisms (including #992) that would allow servers to proactively notify clients of updates, reducing resource consumption in high-volume scenarios.

| Feature | Use Case | MCP Support |
| --- | --- | --- |
| Durability | Client makes a tool call for a data migration task. Server returns a resource link immediately and updates the resource (tied to a database) as the task progresses in the background. Client can check the task's status by polling the resource or subscribing to resource updates. | ✅ Resource links with persistent storage and status notifications |
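The durability pattern can be sketched with the standard library alone: the tool handler records a task in SQLite, returns a link right away, and keeps working in the background while the client polls. The returned dictionary mirrors the shape of the spec's `resource_link` content type; the `tasks://` URI scheme, `TaskStore`, and the handler names are hypothetical, and a real server would expose `read_resource` through its `resources/read` handler.

```python
from __future__ import annotations

import asyncio
import json
import sqlite3
import uuid


class TaskStore:
    """Task state kept in SQLite so it can outlive a connection or process."""

    def __init__(self, path: str = ":memory:") -> None:
        # Use a file path (e.g. "tasks.db") for real durability; ":memory:" is for the demo.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS tasks "
            "(id TEXT PRIMARY KEY, status TEXT, progress INTEGER, result TEXT)"
        )

    def create(self) -> str:
        task_id = uuid.uuid4().hex
        self.db.execute("INSERT INTO tasks VALUES (?, 'running', 0, NULL)", (task_id,))
        self.db.commit()
        return task_id

    def update(self, task_id: str, status: str, progress: int, result: str | None = None) -> None:
        self.db.execute(
            "UPDATE tasks SET status = ?, progress = ?, result = ? WHERE id = ?",
            (status, progress, result, task_id),
        )
        self.db.commit()

    def read(self, task_id: str) -> dict:
        status, progress, result = self.db.execute(
            "SELECT status, progress, result FROM tasks WHERE id = ?", (task_id,)
        ).fetchone()
        return {"status": status, "progress": progress, "result": result}


_background: set[asyncio.Task] = set()  # keep references so tasks are not garbage-collected


async def migrate_data(store: TaskStore) -> dict:
    """Tool handler: start the work, return a resource link immediately."""
    task_id = store.create()
    task = asyncio.create_task(_run_migration(store, task_id))
    _background.add(task)
    task.add_done_callback(_background.discard)
    return {
        "type": "resource_link",
        "uri": f"tasks://{task_id}",  # hypothetical URI scheme
        "name": f"migration-{task_id[:8]}",
        "mimeType": "application/json",
    }


async def _run_migration(store: TaskStore, task_id: str) -> None:
    for progress in (25, 50, 75):
        await asyncio.sleep(0.01)  # simulate a batch of work
        store.update(task_id, "running", progress)
    store.update(task_id, "completed", 100, result="done")


def read_resource(store: TaskStore, uri: str) -> str:
    """What a resources/read handler might return for a tasks:// URI."""
    return json.dumps(store.read(uri.removeprefix("tasks://")))


async def main() -> dict:
    store = TaskStore()
    link = await migrate_data(store)
    # Client side: poll the linked resource until the task finishes.
    while True:
        state = json.loads(read_resource(store, link["uri"]))
        if state["status"] == "completed":
            return state
        await asyncio.sleep(0.005)


final_state = asyncio.run(main())
```

A subscribing client would instead call `resources/subscribe` on the link's URI and re-read the resource whenever it receives `notifications/resources/updated`.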

4. Multi-Turn Interactions

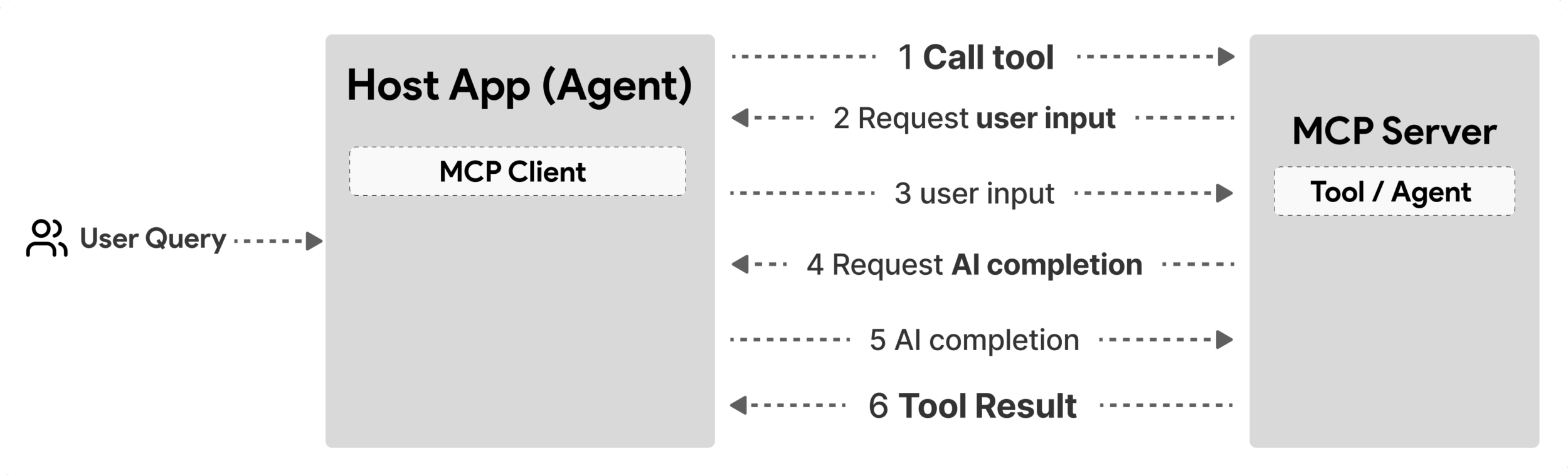

Agents may need additional input mid-execution—human clarification or approval for critical decisions, and AI-generated content or completions for complex subtasks. MCP fully supports these interactions through elicitation (requesting human input) and sampling (requesting LLM completions through the client), enabling agents to dynamically gather information needed during a tool call.

| Feature | Use Case | MCP Support |
| --- | --- | --- |
| Multi-Turn Interactions | Travel booking agent requests price confirmation from user, then requests AI completions to summarize travel data before completing a booking transaction. | ✅ Elicitation for human input, sampling for AI input |
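Stripped of transport details, a multi-turn tool is a coroutine that pauses twice on the client: once for the human (elicitation) and once for the model (sampling). The framework-agnostic sketch below passes both as injected async callables; `book_trip`, `elicit`, and `sample` are hypothetical names, and the fake callbacks stand in for what an MCP client would provide.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable

Elicit = Callable[[str], Awaitable[dict]]
Sample = Callable[[str], Awaitable[str]]


async def book_trip(destination: str, price: int, elicit: Elicit, sample: Sample) -> str:
    # Turn 1: ask the human to confirm the price before committing.
    answer = await elicit(f"Confirm the estimated price of ${price} for {destination}?")
    if answer.get("action") != "accept" or not answer.get("content", {}).get("confirm"):
        return "Booking cancelled"
    # Turn 2: ask the model to summarize the trip before finishing.
    summary = await sample(f"Summarize a {destination} trip costing ${price}")
    return f"Booked {destination}: {summary}"


async def fake_accept(message: str) -> dict:
    return {"action": "accept", "content": {"confirm": True}}


async def fake_decline(message: str) -> dict:
    return {"action": "decline"}


async def fake_sample(prompt: str) -> str:
    return "3 nights, flights included"


accepted = asyncio.run(book_trip("Lisbon", 1200, fake_accept, fake_sample))
declined = asyncio.run(book_trip("Lisbon", 1200, fake_decline, fake_sample))
```

Because the tool only sees two awaitables, the same logic can be tested offline and then wired to `ctx.session.elicit` and `ctx.session.create_message` on a real server.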

Implementing Long-Running Agents on MCP – Code Overview

As part of this article, we provide a code repository that contains a complete implementation of long-running agents using the MCP Python SDK with StreamableHTTP transport for session resumption and message redelivery. The implementation demonstrates how MCP capabilities can be composed to enable sophisticated agent-like behaviors.

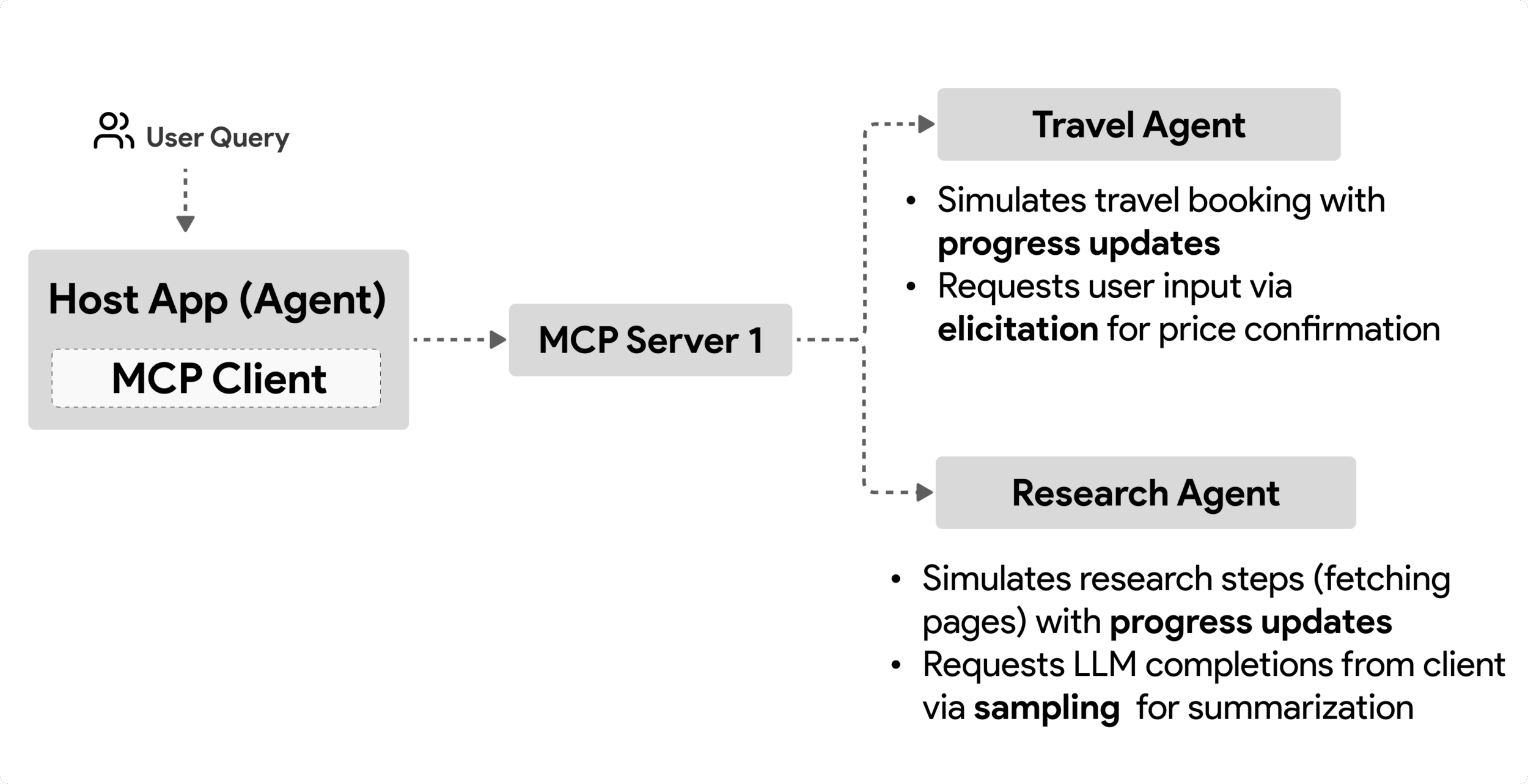

Specifically, we implement a server with two primary agent tools:

- Travel Agent – Simulates a travel booking service with price confirmation via elicitation

- Research Agent – Performs research tasks with AI-assisted summaries via sampling

Both agents demonstrate real-time progress updates, interactive confirmations, and full session resumption capabilities.

Project structure:

- server/server.py – Resumable MCP server with travel and research agents that demonstrate elicitation, sampling, and progress updates

- client/client.py – Interactive host application with resumption support, callback handlers, and token management

- server/event_store.py – Event store implementation enabling session resumption and message redelivery

Key Implementation Concepts

Streaming & Progress Updates – Real-time Task Status

Streaming enables agents to provide real-time progress updates during long-running tasks, keeping users informed of task status and intermediate results.
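The MCP specification requires the `progress` value to increase with every notification for a given token, even when the total is unknown. A small helper can enforce that rule and make sure the last update reports completion. This is a framework-agnostic sketch: `ProgressTracker` is a hypothetical name, and `send` stands in for something like `ctx.session.send_progress_notification`.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable

Send = Callable[[float, float, str], Awaitable[None]]


class ProgressTracker:
    """Emits strictly increasing progress values for one progress token."""

    def __init__(self, total_steps: int, send: Send) -> None:
        self.total_steps = total_steps
        self.send = send
        self.last = -1.0

    async def step_done(self, index: int, message: str) -> None:
        # Progress after finishing step `index` (0-based), on a 0-100 scale,
        # so the final step always reports 100.
        progress = (index + 1) * 100 / self.total_steps
        if progress <= self.last:
            raise ValueError("progress must increase with each notification")
        self.last = progress
        await self.send(progress, 100, message)


sent: list[tuple[float, float, str]] = []


async def record(progress: float, total: float, message: str) -> None:
    sent.append((progress, total, message))


async def run_agent() -> None:
    steps = ["Checking flights", "Comparing hotels", "Holding seats", "Preparing itinerary"]
    tracker = ProgressTracker(len(steps), record)
    for i, step in enumerate(steps):
        await asyncio.sleep(0)  # real work would happen here
        await tracker.step_done(i, step)


asyncio.run(run_agent())
```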

Server Implementation (agent sends progress notifications):

```python
# From server/server.py - Travel agent sending progress updates
# (inside an async tool handler: `ctx` is the request context, `steps` a list of status messages)
import anyio

for i, step in enumerate(steps):
    await ctx.session.send_progress_notification(
        progress_token=ctx.request_id,  # the sample reuses the request ID as the progress token
        progress=i * 25,
        total=100,
        message=step,
        related_request_id=str(ctx.request_id),
    )

    # Alternative: Log messages for detailed step-by-step updates
    await ctx.session.send_log_message(
        level="info",
        data=f"Processing step {i + 1}/{len(steps)} ({i * 25}%)",
        logger="long_running_agent",
        related_request_id=str(ctx.request_id),
    )

    await anyio.sleep(2)  # Simulate work
```

Client Implementation (host receives progress updates):

```python
# From client/client.py - Client handling real-time notifications
from mcp import ClientSession, types
from rich.console import Console

console = Console()


async def message_handler(message) -> None:
    # The session forwards incoming requests, notifications, and exceptions here
    if isinstance(message, types.ServerNotification):
        if isinstance(message.root, types.LoggingMessageNotification):
            console.print(f"📡 [dim]{message.root.params.data}[/dim]")
        elif isinstance(message.root, types.ProgressNotification):
            progress = message.root.params
            console.print(f"🔄 [yellow]{progress.message} ({progress.progress}/{progress.total})[/yellow]")


# Register message handler when creating session
async with ClientSession(
    read_stream, write_stream,
    message_handler=message_handler,
) as session:
    await session.initialize()
```

Elicitation – Requesting User Input

Elicitation enables agents to request user input mid-execution. This is essential for confirmations, clarifications, or approvals during long-running tasks.
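Under the hood, `ctx.session.elicit` sends an `elicitation/create` request, and the specification limits its `requestedSchema` to a flat object with primitive-typed properties. The standard-library sketch below builds such a request and checks an accepted response against it before a tool proceeds. The schema fields (`confirm`, `notes`) mirror the content returned by the client callback in this article; `elicitation_request` and `validate_content` are hypothetical helpers, not a full JSON Schema implementation.

```python
from __future__ import annotations

PRIMITIVES = {"string": str, "boolean": bool, "integer": int, "number": (int, float)}

price_schema = {
    "type": "object",
    "properties": {
        "confirm": {"type": "boolean", "description": "Confirm the price"},
        "notes": {"type": "string", "description": "Optional notes"},
    },
    "required": ["confirm"],
}


def elicitation_request(request_id: int, message: str, schema: dict) -> dict:
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "elicitation/create",
        "params": {"message": message, "requestedSchema": schema},
    }


def validate_content(content: dict, schema: dict) -> list[str]:
    """Return a list of problems; an empty list means the content matches."""
    problems = [f"missing {key}" for key in schema.get("required", []) if key not in content]
    for key, value in content.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected {key}")
            continue
        expected = PRIMITIVES[spec["type"]]
        # bool is a subclass of int in Python, so reject it for numeric fields.
        if not isinstance(value, expected) or (isinstance(value, bool) and spec["type"] != "boolean"):
            problems.append(f"{key} should be {spec['type']}")
    return problems


request = elicitation_request(7, "Please confirm the estimated price of $1200", price_schema)
ok = validate_content({"confirm": True, "notes": "Confirmed by user"}, price_schema)
bad = validate_content({"notes": 5}, price_schema)
```

Checking the content server-side is a cheap safeguard, since the client is free to return whatever the user typed.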

Server Implementation (agent requests confirmation):

```python
# From server/server.py - Travel agent requesting price confirmation
# (PriceConfirmationSchema is a Pydantic model defined in server.py)
elicit_result = await ctx.session.elicit(
    message=f"Please confirm the estimated price of $1200 for your trip to {destination}",
    requestedSchema=PriceConfirmationSchema.model_json_schema(),
    related_request_id=ctx.request_id,
)

if elicit_result and elicit_result.action == "accept":
    # Continue with booking
    logger.info(f"User confirmed price: {elicit_result.content}")
elif elicit_result and elicit_result.action == "decline":
    # Cancel the booking
    booking_cancelled = True
# A "cancel" action (user dismissed the prompt) is not handled in this excerpt
```

Client Implementation (host provides elicitation callback):

```python
# From client/client.py - Client handling elicitation requests
async def elicitation_callback(context, params):
    console.print("💬 Server is asking for confirmation:")
    console.print(f" {params.message}")
    # Blocking console input is acceptable for a CLI demo
    response = console.input("Do you accept? (y/n): ").strip().lower()
    if response in ["y", "yes"]:
        return types.ElicitResult(
            action="accept",
            content={"confirm": True, "notes": "Confirmed by user"},
        )
    else:
        return types.ElicitResult(
            action="decline",
            content={"confirm": False, "notes": "Declined by user"},
        )


# Register the callback when creating the session
async with ClientSession(
    read_stream, write_stream,
    elicitation_callback=elicitation_callback,
) as session:
    await session.initialize()
```

Sampling – Requesting AI Assistance

Sampling allows agents to request LLM assistance for complex decisions or content generation during execution. This enables hybrid human-AI workflows.
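On the client side, the sampling handler is the natural place for a human-in-the-loop check, which the MCP specification recommends for sampling requests. The framework-agnostic sketch below uses plain dictionaries shaped like `sampling/createMessage` params and results; `handle_sampling`, `approve`, `call_llm`, and the model name are hypothetical hooks you would wire to your own UI and LLM provider.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable

Approve = Callable[[str], bool]
CallLLM = Callable[[str, int], Awaitable[str]]


async def handle_sampling(params: dict, approve: Approve, call_llm: CallLLM) -> dict:
    # Flatten the text parts of the requested messages into one prompt.
    prompt = "\n".join(
        m["content"]["text"] for m in params["messages"] if m["content"]["type"] == "text"
    )
    if not approve(prompt):
        # Mirrors the spec's example error for a rejected sampling request.
        return {"error": {"code": -1, "message": "User rejected sampling request"}}
    text = await call_llm(prompt, params["maxTokens"])
    return {
        "role": "assistant",
        "content": {"type": "text", "text": text},
        "model": "example-model",  # hypothetical model name
        "stopReason": "endTurn",
    }


async def fake_llm(prompt: str, max_tokens: int) -> str:
    return f"Summary ({max_tokens} tokens max): {prompt[:20]}"


request_params = {
    "messages": [
        {"role": "user", "content": {"type": "text", "text": "Summarize research on MCP"}}
    ],
    "maxTokens": 100,
}
approved = asyncio.run(handle_sampling(request_params, lambda p: True, fake_llm))
rejected = asyncio.run(handle_sampling(request_params, lambda p: False, fake_llm))
```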

Server Implementation (agent requests AI assistance):

```python
# From server/server.py - Research agent requesting AI summary
from mcp.types import SamplingMessage, TextContent

sampling_result = await ctx.session.create_message(
    messages=[
        SamplingMessage(
            role="user",
            content=TextContent(type="text", text=f"Please summarize the key findings for research on: {topic}"),
        )
    ],
    max_tokens=100,
    related_request_id=ctx.request_id,
)

# The client may reply with non-text content, so check the type before reading .text
if sampling_result and sampling_result.content:
    if sampling_result.content.type == "text":
        sampling_summary = sampling_result.content.text
        logger.info(f"Received sampling summary: {sampling_summary}")
```

Client Implementation (host provides sampling callback):

```python
# From client/client.py - Client handling sampling requests
async def sampling_callback(context, params):
    # Non-text content (e.g. images) has no .text attribute, so fall back safely
    message_text = (
        getattr(params.messages[0].content, "text", "No text content") if params.messages else "No message"
    )
    console.print(f"🧠 Server requested sampling: {message_text}")

    # In a real application, this could call an LLM API
    # For demo purposes, we provide a mock response
    mock_response = "Based on current research, MCP has evolved significantly..."
    return types.CreateMessageResult(
        role="assistant",
        content=types.TextContent(type="text", text=mock_response),
        model="interactive-client",
        stopReason="endTurn",
    )


# Register the callback when creating the session
async with ClientSession(
    read_stream, write_stream,
    sampling_callback=sampling_callback,
    elicitation_callback=elicitation_callback,
) as session:
    await session.initialize()
```

Resumability – Session Continuity Across Disconnections

Resumability ensures that long-running agent tasks can survive client disconnections and continue seamlessly upon reconnection. This is implemented through event stores and resumption tokens (the ID of the last event the client received).

Event Store Implementation (server holds session state):

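```python
# From server/event_store.py - Simple in-memory event store
from mcp.server.streamable_http import EventCallback, EventId, EventMessage, EventStore, StreamId
from mcp.types import JSONRPCMessage


class SimpleEventStore(EventStore):
    # In-memory only: stored events are lost if the server process restarts
    def __init__(self):
        self._events: list[tuple[StreamId, EventId, JSONRPCMessage]] = []
        self._event_id_counter = 0

    async def store_event(self, stream_id: StreamId, message: JSONRPCMessage) -> EventId:
        """Store an event and return its ID."""
        self._event_id_counter += 1
        event_id = str(self._event_id_counter)
        self._events.append((stream_id, event_id, message))
        return event_id

    async def replay_events_after(self, last_event_id: EventId, send_callback: EventCallback) -> StreamId | None:
        """Replay events after the specified ID for resumption."""
        # Find the last event the client saw, and the stream it belongs to
        for index, (stream_id, event_id, _) in enumerate(self._events):
            if event_id == last_event_id:
                break
        else:
            return None  # Unknown event ID: nothing to replay
        # Replay only later events from the same stream
        for sid, event_id, message in self._events[index + 1:]:
            if sid == stream_id:
                await send_callback(EventMessage(message, event_id))
        return stream_id
```

```python
# From server/server.py - Passing event store to session manager
import contextlib

from mcp.server.streamable_http_manager import StreamableHTTPSessionManager
from starlette.applications import Starlette
from starlette.routing import Mount


def create_server_app(event_store: EventStore | None = None) -> Starlette:
    server = ResumableServer()  # defined elsewhere in server.py

    # Create session manager with event store for resumption
    session_manager = StreamableHTTPSessionManager(
        app=server,
        event_store=event_store,  # Event store enables session resumption
        json_response=False,
        security_settings=security_settings,  # defined elsewhere in server.py
    )

    # run() starts the manager's task group, which must be active while serving requests
    @contextlib.asynccontextmanager
    async def lifespan(app):
        async with session_manager.run():
            yield

    return Starlette(routes=[Mount("/mcp", app=session_manager.handle_request)], lifespan=lifespan)


# Usage: Initialize with event store
event_store = SimpleEventStore()
app = create_server_app(event_store)
```

Client Metadata with Resumption Token (client reconnects using stored state):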

```python
# From client/client.py - Client resumption with metadata
from mcp import types
from mcp.shared.message import ClientMessageMetadata

if existing_tokens and existing_tokens.get("resumption_token"):
    # Use existing resumption token to continue where we left off
    metadata = ClientMessageMetadata(
        resumption_token=existing_tokens["resumption_token"],
    )
else:
    # Create callback to save resumption token when received
    # (the transport awaits this callback, so it is defined as a coroutine function)
    async def enhanced_callback(token: str) -> None:
        protocol_version = getattr(session, "protocol_version", None)
        token_manager.save_tokens(session_id, token, protocol_version, command, args)

    metadata = ClientMessageMetadata(
        on_resumption_token_update=enhanced_callback,
    )

# Send request with resumption metadata
result = await session.send_request(
    types.ClientRequest(
        types.CallToolRequest(
            method="tools/call",
            params=types.CallToolRequestParams(name=command, arguments=args),
        )
    ),
    types.CallToolResult,
    metadata=metadata,
)
```

The host application maintains session IDs and resumption tokens locally, enabling it to reconnect to existing sessions without losing progress or state.
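The client above delegates this bookkeeping to a `token_manager` whose code lives in the repository. As an illustration only, a minimal stand-in could persist the session ID, the latest resumption token, and the pending tool call in a JSON file. `TokenStore`, its methods, the file layout, and the tool name in the usage lines are hypothetical.

```python
from __future__ import annotations

import json
import tempfile
from pathlib import Path


class TokenStore:
    """Persist resumption state so a restarted host can resume an in-flight call."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def save(self, session_id: str, token: str, command: str, args: dict) -> None:
        state = {"session_id": session_id, "resumption_token": token,
                 "command": command, "args": args}
        # Write to a temp file, then rename, so a crash never leaves a half-written file.
        tmp = self.path.with_suffix(".tmp")
        tmp.write_text(json.dumps(state))
        tmp.replace(self.path)

    def load(self) -> dict | None:
        if not self.path.exists():
            return None
        return json.loads(self.path.read_text())

    def clear(self) -> None:
        # Call once the tool result arrives; there is nothing left to resume.
        self.path.unlink(missing_ok=True)


with tempfile.TemporaryDirectory() as tmp_dir:
    store = TokenStore(Path(tmp_dir) / "resume.json")
    store.save("session-abc", "42", "travel_agent", {"destination": "Paris"})
    restored = store.load()  # e.g. after the host restarts
    store.clear()
    after_clear = store.load()
```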

The video below shows an MCP host application UI (AutoGen Studio MCP Playground) connecting to our server, listing the agents, and calling them, with interactions covering streaming updates, user input, and sampling. See the code repository on GitHub for instructions on running a local command-line host application.

Extending to Multi-Agent Communication on MCP

Architecture Note

Our implementation already demonstrates agent-to-agent communication. The host application acts as an “Orchestrator Agent” that interfaces with users and routes requests to specialist agents (travel agent, research agent) on the MCP server. While our example connects to a single server for simplicity, the same orchestrator agent can coordinate tasks across multiple MCP servers, with each server exposing different specialist agents.

To scale this orchestrator-to-remote-agent pattern across multiple servers, the host agent (orchestrator) can be enhanced with:

- Intelligent Task Decomposition: Analyze complex user requests and break them into subtasks for different specialized agents

- Multi-Server Coordination: Maintain connections to multiple MCP servers, each exposing different agent capabilities

- Task State Management: Track progress across multiple concurrent agent tasks, handle dependencies and manage in-flight requests

- User Context Preservation: Maintain interaction context while coordinating between specialist agents

- Resilience & Retries: Handle failures, implement retry logic, and reroute tasks when agents become unavailable

- Result Synthesis: Combine outputs from multiple agents into coherent responses
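Several of these capabilities (multi-server coordination, concurrent task tracking, retries with rerouting, and result synthesis) can be sketched with plain `asyncio`. Here each specialist agent is modeled as an async callable; in a real host it would wrap `session.call_tool(...)` on the appropriate server's `ClientSession`. `Orchestrator`, the agent functions, and the retry policy are assumptions for illustration.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable

Agent = Callable[[str], Awaitable[str]]


class Orchestrator:
    def __init__(self, agents: dict[str, list[Agent]], retries: int = 2) -> None:
        # Each capability maps to one or more agents, e.g. on different servers.
        self.agents = agents
        self.retries = retries

    async def _run(self, capability: str, task: str) -> str:
        last_error: Exception | None = None
        for agent in self.agents[capability]:  # reroute to the next agent on failure
            for attempt in range(self.retries):
                try:
                    return await agent(task)
                except ConnectionError as exc:
                    last_error = exc
                    await asyncio.sleep(0.01 * 2**attempt)  # simple exponential backoff
        raise RuntimeError(f"all {capability} agents failed") from last_error

    async def handle(self, subtasks: dict[str, str]) -> str:
        # Fan the subtasks out concurrently, then synthesize one response.
        names = list(subtasks)
        results = await asyncio.gather(*(self._run(n, subtasks[n]) for n in names))
        return "\n".join(f"{n}: {r}" for n, r in zip(names, results))


async def flaky_travel(task: str) -> str:
    raise ConnectionError("server A unavailable")


async def travel(task: str) -> str:
    return f"booked ({task})"


async def research(task: str) -> str:
    return f"summary ({task})"


orchestrator = Orchestrator({"travel": [flaky_travel, travel], "research": [research]})
answer = asyncio.run(orchestrator.handle({"travel": "Paris, May", "research": "Paris museums"}))
```

Task decomposition (turning a user request into the `subtasks` dictionary) is left out here; in practice the orchestrator's own LLM would typically produce it.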

With these capabilities, a single orchestrator agent can coordinate multiple specialist agents across different servers using the same MCP protocol primitives demonstrated in our single-server example.

Conclusion

MCP’s enhanced capabilities – resource notifications, elicitation/sampling, resumable streams, and persistent resources – enable complex agent-to-agent interactions while maintaining protocol simplicity. See the code repository for more information.

Overall, the MCP protocol spec is evolving rapidly; the reader is encouraged to review the official documentation website for the most recent updates – https://modelcontextprotocol.io/introduction

Acknowledgement: Huge thanks to Caitie McCaffrey, Marius de Vogel, Donald Thompson, Adam Kaplan, Toby Padilla, Marc Baiza, Harald Kirschner and many others for discussions, feedback and insights while writing this article.
