IoT with Azure SQL

IoT workloads can be characterized by high rates of input data, on both steady and burst streams, to be ingested from devices. A common design pattern for relational database systems involves a “landing zone” (or staging area) which is optimized for “absorbing the shock” of this high and spikey input rate, before data will be flowing into their its final destination (usually one or more tables) which instead is optimized for persistence and analysis.

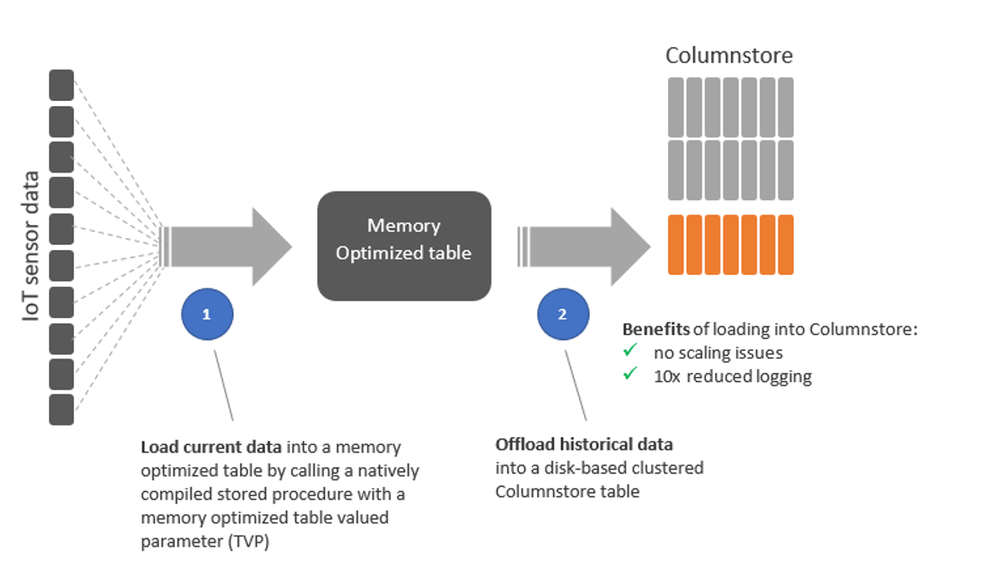

There are few knobs we can use to optimize our data models for these very different purposes, where likely the most evident involves different indexing approaches (a light one to privilege higher ingestion rate versus a more comprehensive to favor query and retrieval), but Azure SQL also provides very specialized capabilities like In-memory OLTP tables and Columnstore Indexes that can be used together to implement shock absorber patters in IoT solutions:

In-memory OLTP

By leveraging In-memory OLTP tables (only available on Premium/Business Critical databases) as a landing zone for incoming streams of events and messages from IoT devices, we can largely eliminate latching and locking contention that can affect regular tables (subject to things like last page insert scenarios). Another benefit of this approach is the ability to reduce the overhead of transaction log operations by minimizing IOs for SCHEMA_ONLY tables (where the risk of losing some events in case of a failure is acceptable) or reducing the impact of log updates for tables with schema and data persistence. By combining these capabilities with natively compiled stored procedures, we can also improve execution performance for all critical data management operations on ingested data. A common solution architecture in this space would likely include an event store (something like Azure IoT Hub or EventHub) where events will flow from devices, and an event processor pulling events from the store and using a memory optimized table valued parameter, batching 100s or 1000s of incoming invents into a single data structure, greatly reducing the number of database roundtrips and improving throughputs to potentially millions of events per second.

Columnstore Indexes

With billions of events potentially ingested per day, max In-memory OLTP tables size (which is proportional to Azure SQL compute size utilized, 52GB on BC_Gen5_40 database as an example) will easily become a limit, so a very common approach is to leverage those for the “hot” portion of the dataset (basically, newly ingested data), while offloading events to disk-based tables, optimized for persistence and analytics, through a bulk loading process to a Columnstore-based table that can, for example, be triggered by time or data volume.

By picking up the right batch size (e.g. between 102,400 and 1,048,576, depending on our events generation rate and logic) we can maximize efficiency by eliminating the need for moving new data rows into Columnstore’s delta rowgroup first, waiting for the Tuple Mover to kick in and compress them, and going instead straight to a compressed rowgroup reducing logging and increasing overall throughput easily by 10x, while also achieving similar level of data compression (which is a significant side effect while you’re dealing with 100s of billion events).

Once offloaded to a Columnstore-based table, events can be efficiently analyzed by running aggregations and time-series analytical workloads without impacting the ingestion process.

Benefits

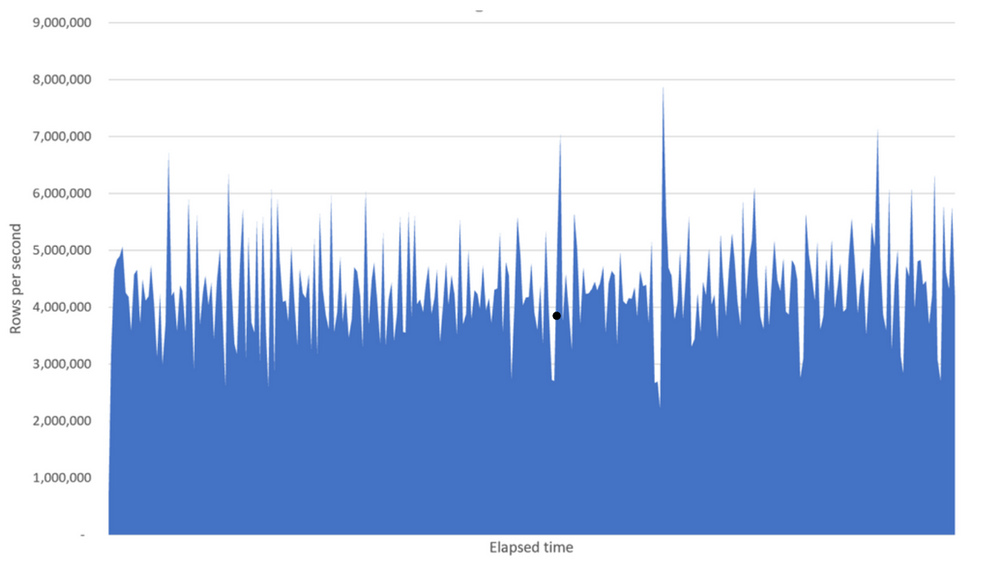

By adopting this design pattern for your IoT solutions, you can leverage a familiar relational engine environment while scaling to billions of events ingested per day and terabytes of compressed historical data stored. As a reference, you can start by looking at this packaged sample where we achieved a sustained 4.2 million events per second rate on average on a M-series 128 cores database.

For other articles in this series see:

Very impressive ingest rate. I am wondering for how long the DB can keep up? We know IoT data can become very voluminous (new metrics added, explosion of # of monitored devices, etc.). Even with an elastic pool, the MaxSize of all DBs last time I checked was 4TB. Performance in an initially empty DB is completely different from one that is almost full, say close to the 1-2TB MaxSize. Would you spin up new DBs as the current one gets full and move the older DB to a lower SKU machine so you still have access to older historical...

Hi Ferenc, even if 4TB of compressed columnstore data means a lot of stored events :) i agree that you may need more headroom for future growth. Spinning up a new "hot" DB to accomodate new data when previous one is full (also known as "coke bottling" pattern) is definitely an option, and there are also tools available to manage time-based sharding from an application perspective (https://docs.microsoft.com/en-us/azure/azure-sql/database/elastic-scale-introduction). Another option, if you don't need the millions of events/sec that "shock absorber" approach enables, would be to use Azure SQL DB Hyperscale service tier instead (https://docs.microsoft.com/en-us/azure/azure-sql/database/service-tier-hyperscale) where you're compromising a bit ingestion...