Are you tired of spending countless hours building APIs from scratch? With Data API Builder (DAB), you can create your API in just minutes! All you need to do is create a JSON configuration file to describe your database entities (tables, views, stored procedures, or collections) from your Azure SQL Database, SQL Server, Cosmos DB, PostgreSQL, or MySQL database. In this blog series, I will share a few examples of how to build your APIs with DAB using containers!

Using containers to build your APIs with DAB provides a consistent, isolated, portable, and lightweight local development experience that makes team collaboration easier.

Once the local development is completed, one possible next step is to deploy the same solution consistently to Azure services such as Azure Container Apps and Azure SQL Database.

Getting started with DAB

DAB, an open-source tool, simplifies the process of developing applications that require database interaction by automatically generating CRUD (Create, Read, Update, Delete) operations. It achieves this by reading entities directly from your database. It provides you with multiple ways to run the DAB Engine, offering flexibility to suit various preferences.

Command Line Interface (CLI)

Providing a straightforward and efficient means of running the engine.

DAB Source Code

Alternatively, for those who prefer more hands-on control, running the DAB Engine using the source code is an option, allowing customization and detailed configuration.

Container

Lastly, if convenience is a priority, employing a container offers a portable and encapsulated environment, simplifying the deployment and management of the DAB Engine. With these options, users can choose the method that aligns best with their workflow and requirements.

DAB’s workflow

The traditional DAB workflow requires to install it first. Once installed, you need to initialize DAB’s engine with a JSON configuration file, add your entities, and finally start DAB’s engine to get up and running. It’s a simple process that can be completed with just a few commands:

dab init –-database-type mssql –-connection-string "server=localhost;Database-Library;" dab add Book --source dbo.Book --permissions "anonymous:*" dab start

As mentioned before, the DAB workflow is simple enough. However, containers further simplify this process by eliminating the need for tool installations (except for the container runtime) and going through each individual step. This not only reduces errors but also enhances reproducibility in the workflow.

This portability facilitates seamless deployment on various platforms, fostering collaboration and accelerating development processes. Overall, containerization aligns with modern DevOps practices, providing efficiency and scalability in software delivery.

Let’s have a look at the first example of using DAB with containers.

Library demo

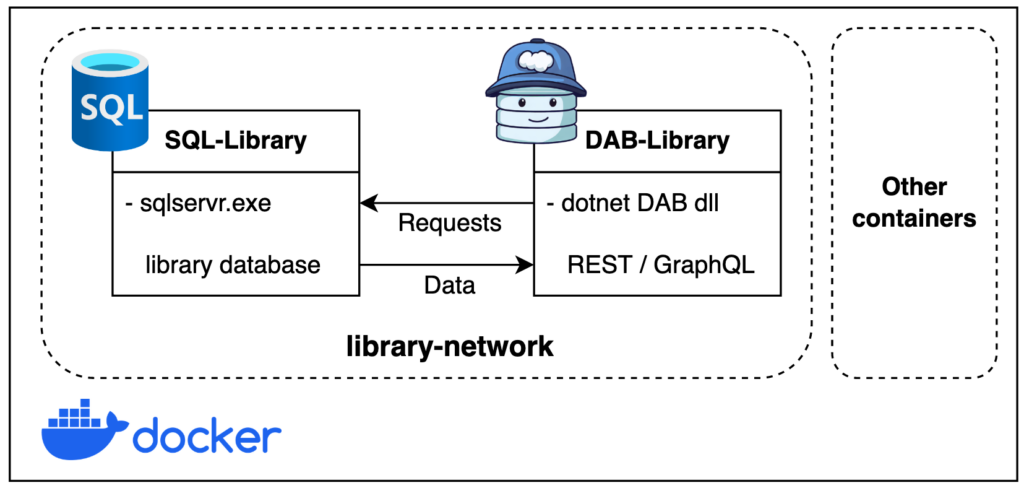

The following diagram represents the container architecture for this demo. The SQL-Library container manages data securely with Microsoft SQL Server 2022, while the DAB-Library container, powered by Data API Builder, offers REST and GraphQL endpoints that will make requests to our database.

This agile setup ensures efficiency and scalability for modern applications.

Prerequisites

These are the some required and option prerequisites to run DAB and SQL Server on containers:

- Docker / Podman

- GitHub (optional)

- Visual Studio Code / Azure Data Studio (optional)

Creating the container network

I will start this container exercise by creating Docker network. This virtual network will help the SQL Server container and DAB container talk to each other using the container hostname. It will also provide isolation and a private channel for our containers in this example.

# Create docker network docker network create library-network

Build the SQL Server container

Many documentation articles and examples exist for creating a SQL Server container using Docker. You can check the latest version of SQLCmd (Go), which allows you to create a SQL Server container with a single command following this Quickstart article. Or, if you are not familiar with SQL Server containers check this other Quickstart article that provides greater details.

The following docker run command will create an SQL Server container. It takes “SQL-Library” as the container name and the hostname.

It configures the container with essential environment variables, including the SQL Server System Administrator (SA) password set to P@ssw0rd! for security. For connectivity purposes, it maps the host port 1401 to container port 1433 for external access while connecting it to the previously created network library-network.

Finally, it runs the container in the background using Microsoft SQL Server 2022 latest container image available for Ubuntu 20.04.

# SQL Container docker run \ --name SQL-Library \ --hostname SQL-Library \ --env 'ACCEPT_EULA=Y' \ --env 'MSSQL_SA_PASSWORD=P@ssw0rd!' \ --publish 1401:1433 \ --network library-network \ --detach mcr.microsoft.com/mssql/server:2022-latest

Creating the sample database

You can use your preferred tool to execute the SQL script to create the sample database. However, I recommend using Azure Data Studio. If you are unfamiliar with Azure Data Studio, you can learn more you can learn more in Azure Data Studio here: aka.ms/azuredatastudio

Connect to your SQL Server container using localhost as server name, 1401 as port, and SA user and password. Then, execute the library.azure-sql.sql script included in the demo repository, to create the library database and all its objects.

This script initializes a library database with tables for books, authors, and their associations. It establishes a sequence for unique identifiers and includes sample data. Additionally, it creates a view (vw_books_details) for book details with aggregated author information. Finally, a stored procedure (stp_get_all_cowritten_books_by_author) fetches books co-authored by a specified author, supporting both partial and exact searches.

Build the DAB container

Now that our Library database is ready, it is time to start working on the API side using DAB. The DAB container image requires only one parameter the JSON configuration file where you need to define the connection string and the database entities.

Because we are working in this containerized environment, you don’t need to go through the usual DAB workflow (dab init, dab add, dab start). Once the container is started through the docker run command, the DAB engine will be listening on the defined port.

Utilizing Environment Variables in DAB with Containers

Starting from DAB v0.8.49, you can use environment variables to store sensitive data, for example a database connection string. These environment variables cat be set directly in the system or creating an .env file containing a list of different variables (key-value pairs). For more information check this previous blog post: Data API Builder Now Supports ENV Files.

This repository uses the following folder structure to store DAB’s configuration files:

├── DAB-Config ├── .env └── dab-config.json

With the flexibility that Docker provides in leveraging environment variables, I will be utilizing the .env file included on the demo repository to securely store sensitive information such as my database connection string. By defining the necessary variables in the .env file, like the example below:

CONN_STRING=Server=SQL-Library;Database=library;User ID=SA;Password=P@ssw0rd!;TrustServerCertificate=true

The data source section of the JSON configuration file is where you define the database type and connection string. Notice how this configuration file dynamically incorporates the securely stored connection string by referencing the CONN_STRING environment variable using the @env( ) syntax:

"$schema": "https://github.com/Azure/data-api-builder/releases/download/v0.9.7/dab.draft.schema.json",

"data-source": {

"database-type": "mssql",

"connection-string": "@env('CONN_STRING')"

},

"mssql": {

"set-session-context": true

}

Runtime and Entity Configurations

The runtime section encompasses configurations for operational aspects. Defining settings for REST and GraphQL endpoints like host mode and authentication providers. In the entities section, data entities like Author and Book tables are defined with specific configurations and permissions.

This last section enables seamless integration and exposure to your data entities via Data API Builder, aligning with your application requirements.

You can check the complete JSON file in the demo respository.

Setting Up DAB-Library Container

The following docker run command establishes a container named DAB-Library using the latest version of Microsoft’s Data API Builder:

# DAB Container docker run \ --name DAB-Library \ --volume "./DAB-Config:/App/configs" \ --publish 5001:5000 \ --env-file "./DAB-Config/.env" \ --network library-network \ --detach mcr.microsoft.com/azure-databases/data-api-builder:latest \ --ConfigFileName /App/configs/dab-config.json

This container leverages Docker’s functionality to mount the local folder ./DAB-Config as a volume inside the container at /App/configs. This is the default path used by DAB’s engine to read the configuration file, that will be pass to this container in runtime. The host port 5001 is mapped to container port 5000 for external access while connecting it to the previously created network library-network.

The environment file flag points to the environment file at ./DAB-Config/.env, managing sensitive information in this case SQL Server’s connection string. Networking is handled by –network library-network, connecting the container to the specified network.

Finally, it runs the container in the background using Data API Builder’s latest container image while setting the configuration file.

Testing REST and GraphQL endpoints

To ensure the smooth functionality of your Data API Builder (DAB) container, a series of tests can be conducted once the container is up and running on your localhost. You can initiate a health check using the following command:

curl -v http://localhost:5001

This curl command will return a verbose response, confirming the health status of your DAB container listening on port 5001.

For testing the DAB runtime REST endpoints, consider using the following commands to retrieve information about Book and Author entities:

curl -s http://localhost:5001/api/Book | jq curl -s http://localhost:5001/api/Author | jq

Additionally, you can leverage the power of jq to filter and display specific data. For example:

curl -s http://localhost:5001/api/Book?$first=2 | jq '.value[] | {id, title}'

curl -s http://localhost:5001/api/Author | jq '.value[1] | {id, first_name, last_name}'

The first curl command retrieves the first two records from the Book entity using the $first=2 query parameter, then uses jq to format and display specific fields, id and title.

The second curl command fetches data from the Author entity and utilizes jq to extract and present specific fields, focusing on the second record’s id, first_name, and last_name.

When working with GraphQL, you can use tools like curl or browser based GraphQL playgrounds. For example, using curl:

curl -X POST \

-H "Content-Type: application/json" \

-d '{"query": "{ books(first: 2, orderBy: {id: ASC}) { items { id title } } }"}' \

http://localhost:5001/graphql | jq

And this is how you can build your APIs with DAB using containers.

Conclusion

This first instance of blog series showcased the seamless integration of Data API Builder (DAB) with containerization for rapid API development. By encapsulating SQL Server and DAB in Docker containers, developers benefit from a consistent, isolated, and lightweight local environment.

Future topics will delve into advanced container options as Docker compose, also deployment strategies on Azure, offering insights for both seasoned developers and those new to containerized workflows. Stay tuned for more practical guidance on how to build your APIs with DAB using containers.

0 comments