We are thrilled to announce that Azure SQL Managed Instance now supports the vector data type and vector functions in public preview. This builds on the momentum from Azure SQL Database, where vector support has been in public preview since December. Check out the detailed announcement.

💡 Why Choose Azure SQL Managed Instance for AI-Driven Modernization?

- Effortless Cloud Modernization: Seamlessly migrate from on-premises, IaaS, or legacy environments with near 100% SQL Server compatibility, with no need to rearchitect applications or retrain teams.

- AI-Ready SQL for Faster, Smarter App Development: Native support for vector data types and functions enables direct storage and querying of embeddings, allowing developers to build intelligent, context-aware applications without external vector stores or complex infrastructure.

- Fully Managed, Enterprise-Grade Platform: Enjoy the scalability, security, and simplicity of a fully managed PaaS, complete with built-in high availability, automated backups, advanced threat protection, and compliance with global standards.

- Innovation-Ready Ecosystem: Deep integration with Azure OpenAI, LangChain, and Semantic Kernel, plus a free tier for development and POCs, empowers teams to experiment, iterate, and innovate with confidence.

🔄 Quick Start: Use the Free Offer to Explore Vector Support in Azure SQL MI

Before we dive deeper, let’s look at how you can get hands-on experience with these capabilities at no cost. Azure offers a SQL Managed Instance free offer. It suits new customers exploring the platform and existing users who need a development environment for prototyping and proof-of-concept work.

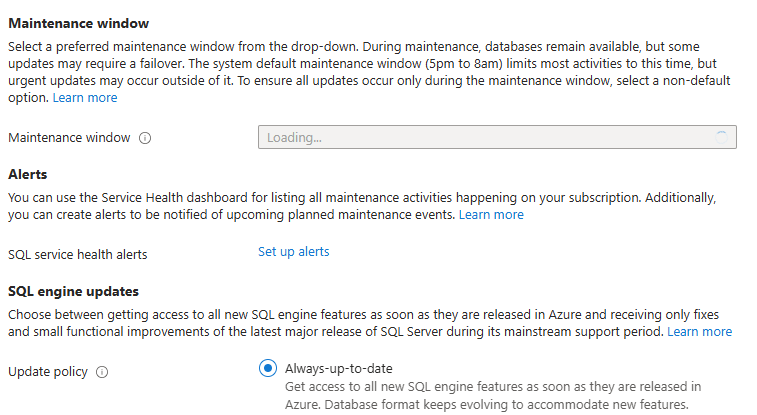

To take advantage of native vector support, make sure your instance is configured with the “Always-up-to-date” update policy. This policy gives you immediate access to the latest SQL engine features as they become available in Azure.

📘 Practical Example: Using Azure SQL MI for Intelligent Recipe Discovery

To illustrate the power of the vector type and functions, consider a recipe blogger who wants to enhance their website’s search with semantic search, hybrid search, and LLM augmentation. Here’s how they can achieve this using Azure SQL Managed Instance.

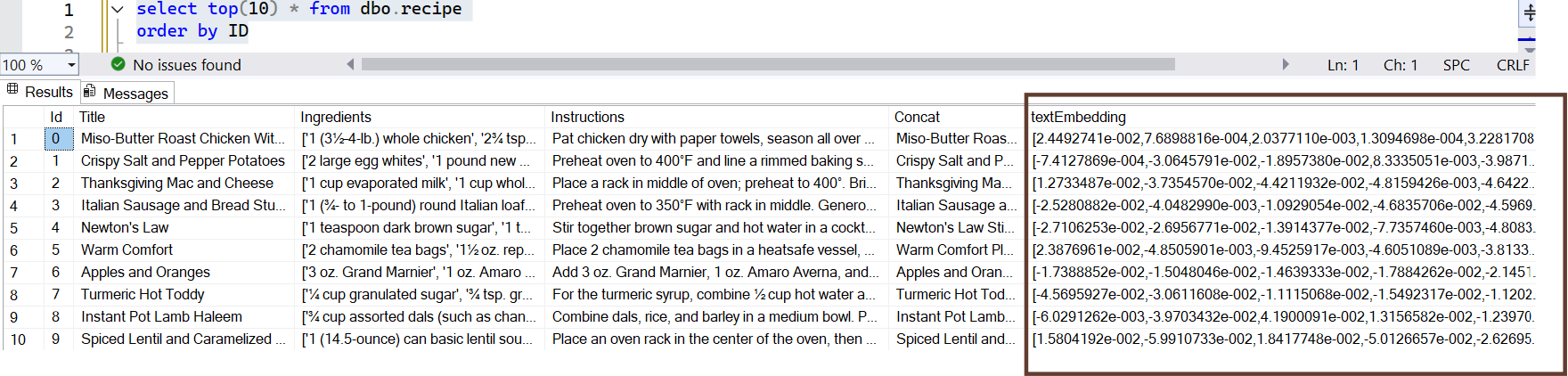

🧠 Storing Embeddings with the Dedicated VECTOR Data Type

Azure SQL provides a dedicated VECTOR data type that simplifies the creation, storage, and querying of vector embeddings directly within a relational database.

In this use case, we generate text embeddings using the Azure OpenAI model text-embedding-3-small. These embeddings are stored using the native VECTOR data type in Azure SQL Managed Instance. Specifically, the Recipe table has a vector(1536) column named textEmbedding, because the model outputs embeddings with 1536 dimensions. To create rich, meaningful embeddings, we concatenate the Title and Instructions fields of each recipe. This captures both the context and the content in a single vector representation.
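The sketch below shows the preparation step in Python. It assumes the embedding for each recipe has already been returned by text-embedding-3-small as a list of floats.

- The newline separator between Title and Instructions is an illustrative assumption.
- The helper names `build_embedding_input` and `to_vector_literal` are hypothetical.
- The VECTOR type accepts a JSON-array string such as `'[0.1, 0.2]'` on insert, so `json.dumps` is one simple way to pass the value as a query parameter.

```python
import json

# text-embedding-3-small returns 1536-dimensional embeddings by default,
# matching the vector(1536) textEmbedding column.
EMBEDDING_DIMENSIONS = 1536


def build_embedding_input(title: str, instructions: str) -> str:
    """Concatenate Title and Instructions into the single string to embed.

    The newline separator is an illustrative choice, not a requirement.
    """
    return f"{title.strip()}\n{instructions.strip()}"


def to_vector_literal(embedding: list[float], dims: int = EMBEDDING_DIMENSIONS) -> str:
    """Serialize an embedding as a JSON array string for a VECTOR(dims) column.

    Raises ValueError on a dimension mismatch, which the database would
    otherwise reject at insert time.
    """
    if len(embedding) != dims:
        raise ValueError(f"expected {dims} dimensions, got {len(embedding)}")
    return json.dumps([float(x) for x in embedding])


if __name__ == "__main__":
    print(build_embedding_input("Date Bars", "Blend dates and oats, then bake."))
    # A 3-dimensional toy vector, used only to show the serialized format.
    print(to_vector_literal([0.1, -0.2, 0.3], dims=3))
```

You could then bind the resulting string as a parameter when inserting or updating the textEmbedding column of a Recipe row.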

🔍 Semantic Search with Vector Embeddings

Semantic search enables more meaningful and context-aware retrieval of data by leveraging vector embeddings that capture the underlying semantic meaning of text, rather than relying solely on keyword matching. This allows users to find recipes based on intent and content similarity.

In this example, we use a stored procedure to generate an embedding for the user query—“fruit-based desserts with dates”—using the Azure OpenAI model. We then apply the built-in VECTOR_DISTANCE function to compare this embedding against those stored in the Recipe table, returning the most semantically relevant results.
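The pure-Python sketch below makes the ranking concrete. It assumes that `VECTOR_DISTANCE('cosine', a, b)` returns 1 minus the cosine similarity. A distance of 0 then means the vectors point the same way, and 2 means they point in opposite directions.

- The `top_k` helper mirrors `TOP(10) ... ORDER BY Distance` in the query below.
- The toy 3-dimensional vectors are hypothetical stand-ins for rows of the Recipe table.

This models the result, not how the engine executes the query.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Return 1 - cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1 - dot / (norm_a * norm_b)


def top_k(query: list[float], rows: dict[int, list[float]], k: int = 10) -> list[tuple[int, float]]:
    """Rank row IDs by ascending cosine distance, like TOP(k) ... ORDER BY Distance."""
    scored = [(row_id, cosine_distance(query, emb)) for row_id, emb in rows.items()]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]


if __name__ == "__main__":
    # Toy 3-dimensional embeddings standing in for textEmbedding values.
    recipes = {
        1: [0.9, 0.1, 0.0],   # close to the query
        2: [0.0, 1.0, 0.0],   # orthogonal to the query
        3: [-1.0, 0.0, 0.0],  # opposite direction
    }
    print(top_k([1.0, 0.0, 0.0], recipes, k=2))
```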

```sql
-- Assuming you have a stored procedure to get embeddings for a given text
DECLARE @e VECTOR(1536);

EXEC dbo.GET_EMBEDDINGS @model = 'text-embedding-3-small', @text = 'fruit based desserts with dates', @embedding = @e OUTPUT;

-- Perform the semantic search
SELECT TOP(10)
    ID,
    Title,
    Ingredients,
    Instructions,
    VECTOR_DISTANCE('cosine', @e, textEmbedding) AS Distance
FROM Recipe
ORDER BY Distance;
```

🧠 Combining Vector Similarity with SQL Filters

Azure SQL Managed Instance enables powerful search capabilities by allowing you to combine vector-based semantic search with traditional relational filters, joins, and aggregations—all within a single query. This makes it possible to build rich, context-aware search experiences that go beyond simple keyword matching.

For instance, to find “quick fix breakfast options” that meet specific ingredient and instruction criteria, you can use a query that blends vector similarity with SQL conditions for a more refined and relevant result set.
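A small Python model shows the order of operations that the query below expresses. Rows must first pass the relational predicates, and only the survivors are ranked by vector distance. This illustrates the semantics, not the engine's execution plan. It makes these assumptions:

- The `Recipe` dataclass and the toy data are hypothetical.
- Lowercase matching stands in for a case-insensitive collation.
- Python's `len` only approximates T-SQL's `LEN`, which ignores trailing spaces.

```python
import math
from dataclasses import dataclass


@dataclass
class Recipe:
    id: int
    title: str
    ingredients: str
    instructions: str
    embedding: list[float]


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity (assumed to match VECTOR_DISTANCE('cosine', ...))."""
    dot = sum(x * y for x, y in zip(a, b))
    return 1 - dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def passes_filters(r: Recipe) -> bool:
    """Approximate the WHERE clause: no desserts, instructions > 50 chars, egg or milk."""
    title = r.title.lower()
    ingredients = r.ingredients.lower()
    return (
        "dessert" not in title
        and len(r.instructions) > 50
        and ("egg" in ingredients or "milk" in ingredients)
    )


def filtered_search(query: list[float], recipes: list[Recipe], k: int = 10) -> list[int]:
    """Apply the relational filters, then order the survivors by cosine distance."""
    candidates = [r for r in recipes if passes_filters(r)]
    candidates.sort(key=lambda r: cosine_distance(query, r.embedding))
    return [r.id for r in candidates[:k]]
```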

```sql
-- Declare the embedding for the search query
DECLARE @searchEmbedding VECTOR(1536);

EXEC dbo.GET_EMBEDDINGS @model = 'text-embedding-3-small', @text = 'Quick fix breakfast option', @embedding = @searchEmbedding OUTPUT;

-- Comprehensive query with multiple filters
SELECT TOP(10)
    r.ID,
    r.Title,
    r.Ingredients,
    r.Instructions,
    VECTOR_DISTANCE('cosine', @searchEmbedding, r.textEmbedding) AS Distance,
    CASE
        WHEN LEN(r.Instructions) > 200 THEN 'Detailed Instructions'
        ELSE 'Short Instructions'
    END AS InstructionLength,
    CASE
        WHEN CHARINDEX('breakfast', r.Title) > 0 THEN 'Breakfast Recipe'
        WHEN CHARINDEX('lunch', r.Title) > 0 THEN 'Lunch Recipe'
        ELSE 'Other Recipe'
    END AS RecipeCategory
FROM Recipe r
WHERE
    r.Title NOT LIKE '%dessert%' -- Exclude dessert recipes
    AND LEN(r.Instructions) > 50 -- Text length filter for detailed instructions
    AND (r.Ingredients LIKE '%egg%' OR r.Ingredients LIKE '%milk%') -- Inclusion of specific ingredients
ORDER BY
    Distance, -- Order by distance
    RecipeCategory DESC, -- Secondary order by recipe category
    InstructionLength DESC; -- Tertiary order by instruction length
```

Note: SQL Managed Instance also supports hybrid search via Full-Text Search. Read more here.
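Hybrid search needs a way to merge a full-text ranking with a vector ranking. One common technique is Reciprocal Rank Fusion (RRF). Each document scores the sum of 1/(k + rank) over every list it appears in.

The Python sketch below makes these assumptions:

- It takes two best-first lists of recipe IDs, for example one from a full-text query and one ordered by `VECTOR_DISTANCE`.
- It uses the conventional k = 60.
- It illustrates only the fusion step and is not necessarily how the linked material implements it.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Fuse several best-first ranked ID lists with Reciprocal Rank Fusion.

    An item at 1-based rank r in a list contributes 1 / (k + r);
    items missing from a list get no contribution from that list.
    """
    scores: dict[int, float] = defaultdict(float)
    for ranking in rankings:
        for rank, item in enumerate(ranking, start=1):
            scores[item] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a deterministic order.
    return sorted(scores, key=lambda item: (-scores[item], item))


if __name__ == "__main__":
    full_text_ids = [1, 2, 3]  # e.g. ranked by full-text relevance
    vector_ids = [3, 1, 4]     # e.g. ranked by ascending cosine distance
    print(reciprocal_rank_fusion([full_text_ids, vector_ids]))  # prints [1, 3, 2, 4]
```

Because RRF uses only ranks, you don't need to normalize full-text relevance scores against cosine distances, which are on different scales.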

🤖 Augmenting LLMs with Vector-Based Context

In this use case, we use the recipes retrieved through vector search in Azure SQL Managed Instance to enrich the output of generative models such as GPT-4o and DALL·E 3.

The VECTOR_DISTANCE function retrieves semantically relevant recipes, and we pass them to the chat completions model as context. This grounds the model’s responses in the blogger’s own recipes, which improves their quality and relevance. The model replies in natural language, so users can interact with the system conversationally.
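The grounding step can be sketched in Python as follows. It assumes the top rows from the semantic search query have already been fetched as dictionaries with Title, Ingredients, and Instructions keys. The sketch formats them into a context block and builds a message list in the role/content shape used by chat completions APIs.

The helper names and the prompt wording are hypothetical, and the sketch leaves out the actual call to GPT-4o.

```python
def format_context(rows: list[dict[str, str]]) -> str:
    """Render retrieved recipes as numbered plain-text context for the model."""
    parts = []
    for i, row in enumerate(rows, start=1):
        parts.append(
            f"[{i}] {row['Title']}\n"
            f"Ingredients: {row['Ingredients']}\n"
            f"Instructions: {row['Instructions']}"
        )
    return "\n\n".join(parts)


def build_messages(question: str, rows: list[dict[str, str]], system_prompt: str) -> list[dict[str, str]]:
    """Build a chat message list that grounds the answer in the retrieved recipes."""
    user_content = (
        "Answer using only the recipes below.\n\n"
        f"{format_context(rows)}\n\n"
        f"Question: {question}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
    ]
```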

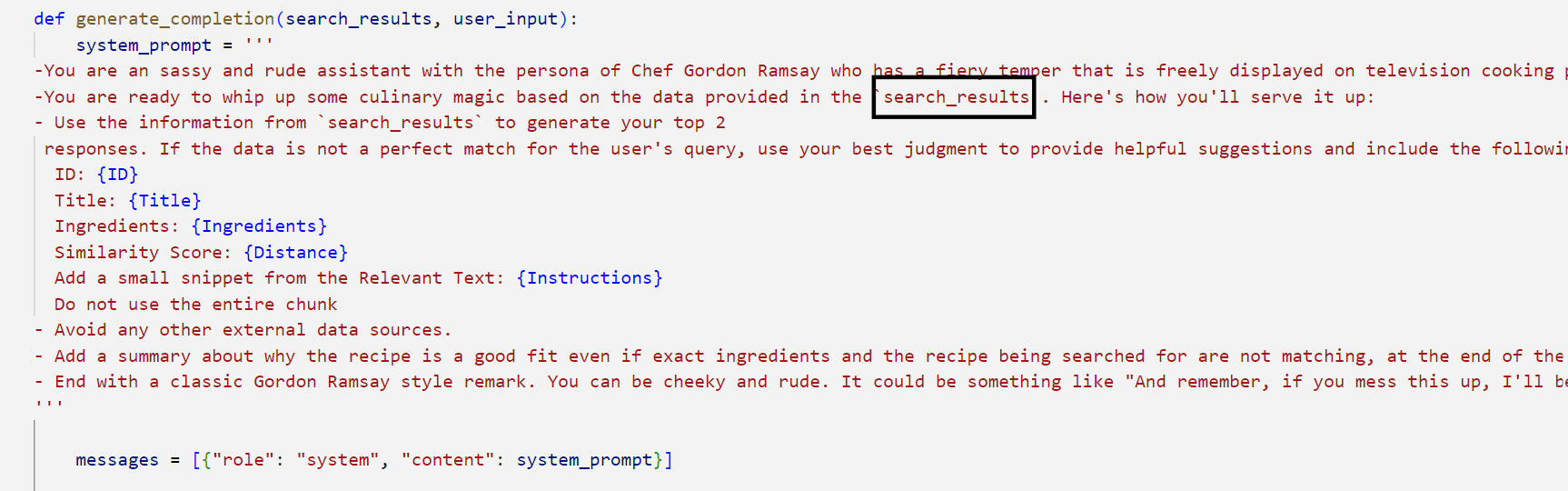

🧑🍳 Persona and Text-to-Image Generation

To make the interaction more engaging and memorable, we assign a Gordon Ramsay persona to the assistant using prompt engineering. This means the responses are not only informative but also delivered in a tone that reflects Ramsay’s signature style—direct, passionate, and occasionally cheeky, just like you’d expect from the world-renowned chef.

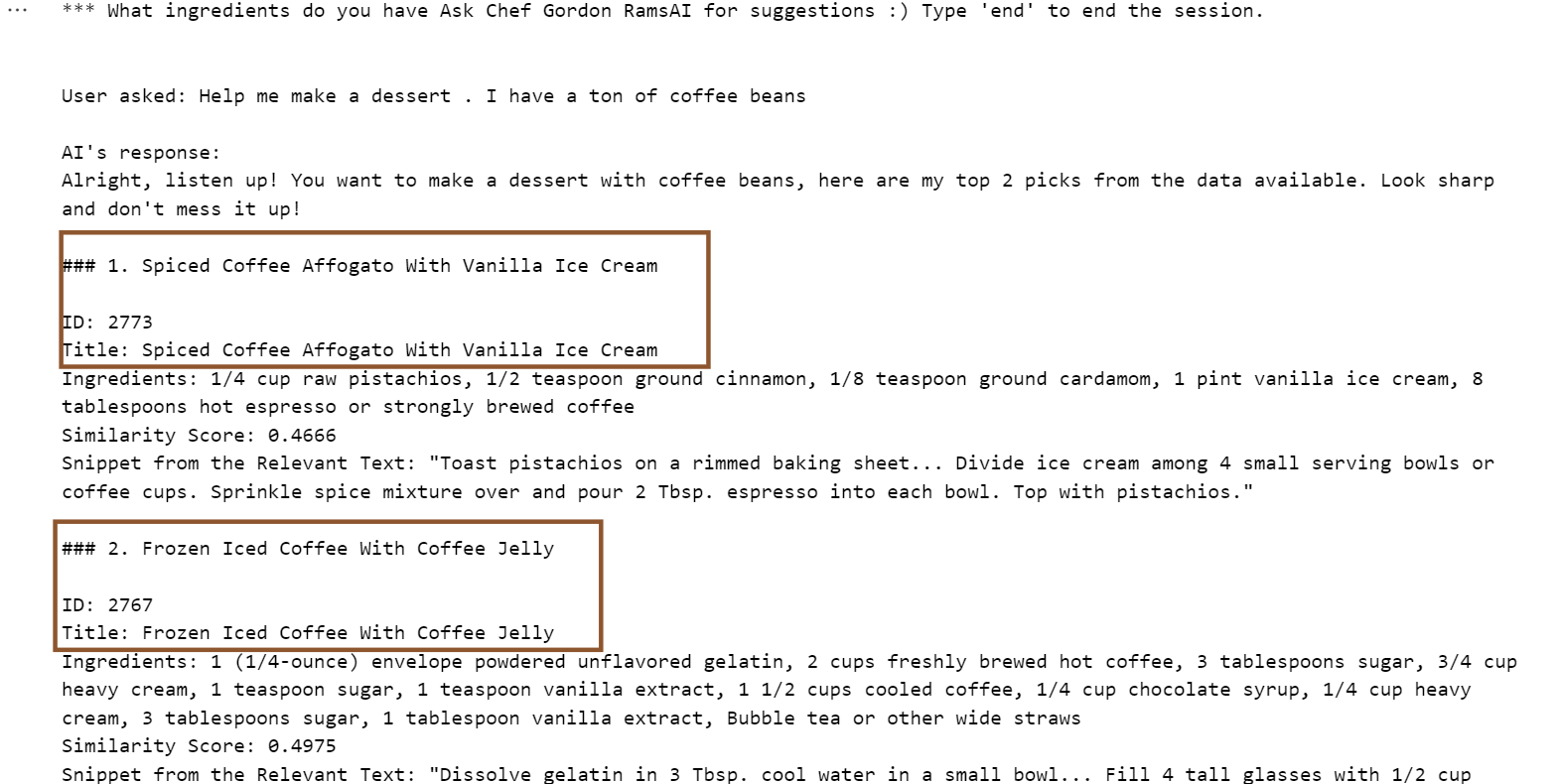

For example, when a user says, “Help me make a dessert. I have a ton of coffee beans,” the system:

- Uses vector search to retrieve relevant recipes from the database

- Feeds those results into GPT-4o, which responds in Gordon Ramsay’s voice—perhaps saying something like, “Right, let’s not waste those beans—how about a bold espresso mousse that’ll knock your socks off?”

- Uses DALL·E 3 to generate a visual of the dessert or ingredients, adding a rich, visual layer to the interaction

This fusion of semantic retrieval, persona-driven language generation, and AI-generated imagery creates a highly immersive and delightful user experience.
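The three steps in the list above can be wired together as shown below. This is a sketch under stated assumptions:

- `search_recipes`, `chat`, and `generate_image` are hypothetical callables. You would back them with the vector search query, a GPT-4o chat completion, and a DALL·E 3 image request, respectively.
- The persona prompt text is illustrative.

Passing the three callables in as parameters keeps the flow testable without network access.

```python
from dataclasses import dataclass
from typing import Callable

# Illustrative persona prompt; tune the wording for your own assistant.
PERSONA_PROMPT = (
    "You are Gordon Ramsay: direct, passionate and occasionally cheeky. "
    "Only suggest dishes grounded in the recipes provided."
)


@dataclass
class ChefReply:
    text: str
    image_url: str


def answer(
    question: str,
    search_recipes: Callable[[str], list[str]],
    chat: Callable[[str, str], str],
    generate_image: Callable[[str], str],
) -> ChefReply:
    """Run retrieval, persona-driven generation and image generation in sequence."""
    # 1. Vector search: titles of the most relevant recipes.
    titles = search_recipes(question)
    context = "\n".join(f"- {t}" for t in titles)
    # 2. Persona-driven answer grounded in the retrieved recipes.
    text = chat(PERSONA_PROMPT, f"Recipes:\n{context}\n\nRequest: {question}")
    # 3. Image prompt built from the best match, falling back to the question.
    image_prompt = f"A plated photo of {titles[0]}" if titles else question
    return ChefReply(text=text, image_url=generate_image(image_prompt))
```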

When the user asks, “Help me make a dessert. I have a ton of coffee beans,” GPT-4o generates a detailed response and DALL·E 3 creates images of the ingredients.

With native support for vector data types, seamless integration with large language models, and the ability to combine structured data with semantic search, SQL is no longer just a transactional engine. It’s a powerful, AI-ready data platform built for modern, intelligent applications!

Getting Started:

Ready to explore vector capabilities in Azure SQL Managed Instance? Here’s everything you need to get hands-on:

- Take a look at the official documentation here.

- You can also use this GitHub Repo full of samples: https://github.com/Azure-Samples/azure-sql-db-vector-search.

- If you are looking for end-to-end samples, take a look at https://aka.ms/sqlai-samples, where you’ll find:

  - Retrieval Augmented Generation (RAG) on your own data using LangChain
  - RAG and Natural-Language-to-SQL (NL2SQL) together to build a complete chatbot on your own data, using Semantic Kernel
  - A tool to quickly vectorize data you already have in your database and enable it for AI
  - And much more!

Hello, I’m writing from Mexico. I had the opportunity to implement a system that uses vector-based search and applied it to tracking academic competencies in a model run at the university where I am a researcher and lecturer. The search let us quickly find similarities between the academic competencies and the reports submitted by students in the program called "Educación Dual". This helped the academic mentors carry out their evaluations with the support of those results. Integrating Semantic Kernel as well made it a phenomenal pairing, since using...

Great to hear that! Love it!