In a previous post, Introducing Form Recognizer client library: AI-powered document extraction, we introduced the Form Recognizer client libraries as powerful tools that let you extract data from documents and leverage the document understanding technology offered through Azure Cognitive Services. In this blog, we'll explore some of the new features and take a closer look at what you can do with them using some Python code.

Highlighted Features

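The new beta Form Recognizer client libraries target the 2.1-preview version of the service and include many new features and quality improvements (see What's new in Form Recognizer? for a full list). In this blog, we will highlight the following features:

- Checkbox/selection mark detection
- Extracting fields from business cards using a prebuilt model
- Extracting fields from invoices using a prebuilt model
- Model compose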

To try out these new features in the Python client library, run the following command to install the library:

```bash
pip install azure-ai-formrecognizer --pre
```
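Every sample in this post passes "<endpoint>" and "<api-key>" placeholders straight to the client. To avoid hard-coding secrets, one option is to read both values from environment variables. The sketch below is our own helper, not part of the library, and the variable names AZURE_FORM_RECOGNIZER_ENDPOINT and AZURE_FORM_RECOGNIZER_KEY are assumptions you can rename.

```python
import os
from typing import Tuple

# Hypothetical variable names; rename them to match your environment
ENDPOINT_VAR = "AZURE_FORM_RECOGNIZER_ENDPOINT"
KEY_VAR = "AZURE_FORM_RECOGNIZER_KEY"


def load_form_recognizer_settings() -> Tuple[str, str]:
    """Return (endpoint, api_key) from environment variables, failing fast if either is unset."""
    endpoint = os.environ.get(ENDPOINT_VAR, "")
    api_key = os.environ.get(KEY_VAR, "")
    missing = [name for name, value in ((ENDPOINT_VAR, endpoint), (KEY_VAR, api_key)) if not value]
    if missing:
        raise RuntimeError("Missing environment variables: {}".format(", ".join(missing)))
    return endpoint, api_key
```

You would then build the client as in the samples, for example FormRecognizerClient(endpoint=endpoint, credential=AzureKeyCredential(api_key)).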

Checkbox/Selection Mark Detection

The Form Recognizer client libraries previously let you extract text, field values, and tables from forms. They now also extract selection marks, such as checkboxes and radio buttons, and tell you whether each one has been selected. For every selection mark, the library reports its state (selected or unselected), its bounding box coordinates, and a confidence score.

Selection marks are returned by the client library when:

- Recognizing content (OCR): the client library returns all selection marks found on each page. If the keyword argument include_field_elements=True is passed to a client recognize method (this is the default for begin_recognize_content), selection marks are also returned as a fundamental form element type.
- Recognizing fields from custom forms: you can train models with or without labeled data. When training with labels, a selection mark can be labeled as a field on the form and is recognized as a label/selection mark pair.
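Because each selection mark comes with a bounding box, you can also reason about where it sits on the page, which is a first step toward pairing a checkbox with nearby text when it is not inside a table. The sketch below assumes the shape used by the beta library: each mark has state, confidence, and bounding_box, a list of corner points exposing x and y. The helper names are our own, and SimpleNamespace stand-ins replace real results so it runs without calling the service.

```python
from types import SimpleNamespace
from typing import Iterable, List, Tuple


def mark_center(bounding_box) -> Tuple[float, float]:
    """Average the corner points of a bounding box (objects with .x and .y)."""
    xs = [point.x for point in bounding_box]
    ys = [point.y for point in bounding_box]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def summarize_selection_marks(marks: Iterable) -> List[dict]:
    """Describe each selection mark by its state, confidence, and center point."""
    return [
        {"state": mark.state, "confidence": mark.confidence, "center": mark_center(mark.bounding_box)}
        for mark in marks
    ]


# Stand-in objects that mimic the attributes we assume the SDK exposes
P = SimpleNamespace
fake_mark = SimpleNamespace(
    state="selected",
    confidence=0.99,
    bounding_box=[P(x=10, y=20), P(x=30, y=20), P(x=30, y=40), P(x=10, y=40)],
)
print(summarize_selection_marks([fake_mark]))
# [{'state': 'selected', 'confidence': 0.99, 'center': (20.0, 30.0)}]
```

With a real result from begin_recognize_content, you would pass a recognized page's selection marks (assumed to be exposed as page.selection_marks in the beta) instead of the fake list. Coordinates are in the page's own unit, so compare marks only against elements from the same page.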

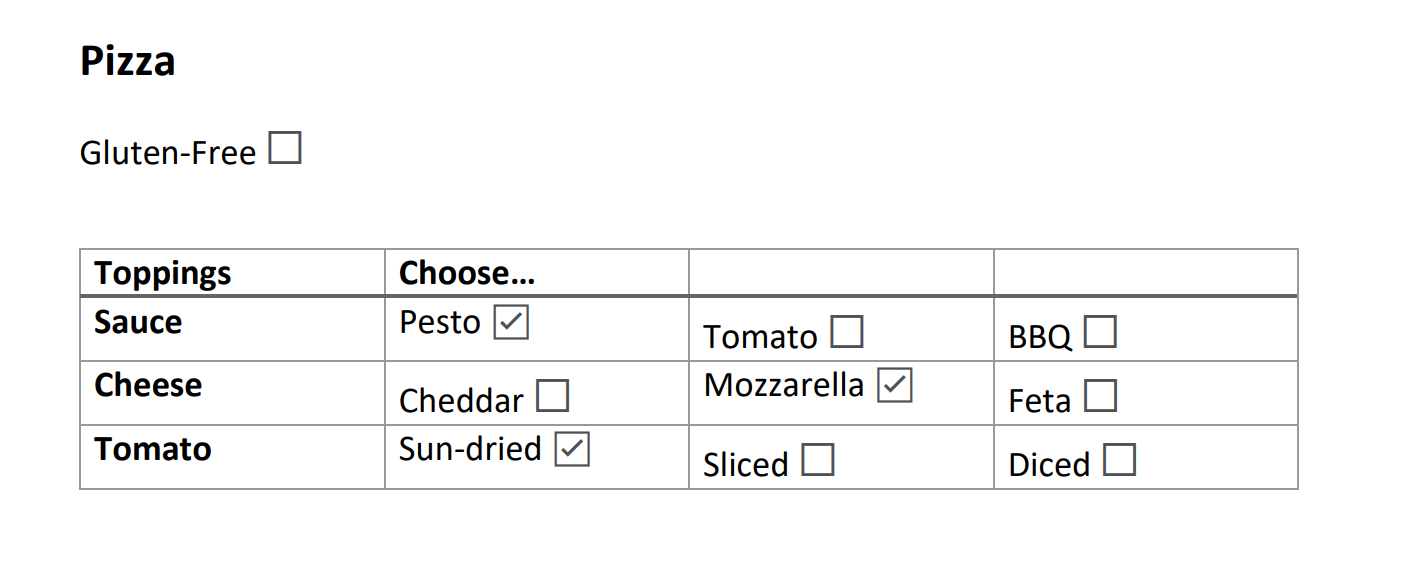

We’ll look at an example that demonstrates selection mark detection when recognizing content from a form. Here we have a simplified order form for a pizza. The customer can choose their desired toppings by checking the boxes next to what they want on their pizza:

We can use the begin_recognize_content method on FormRecognizerClient to recognize all the content on the page, including text, tables, and selection marks. In the code below, we check the field elements in each table cell for any element of kind "selectionMark". From there, we can determine which checkboxes are selected and which toppings should be added to the pizza.

```python
from azure.core.credentials import AzureKeyCredential
from azure.ai.formrecognizer import FormRecognizerClient

form_recognizer_client = FormRecognizerClient(endpoint="<endpoint>", credential=AzureKeyCredential("<api-key>"))

with open("pizza.png", "rb") as source:
    # Starts a long-running operation; field elements are included by default for content
    poller = form_recognizer_client.begin_recognize_content(form=source)
form_pages = poller.result()

pizza_toppings = []
page = form_pages[0]
for table in page.tables:
    for cell in table.cells:
        print("Cell[{}][{}] has text '{}'".format(
            cell.row_index,
            cell.column_index,
            cell.text
        ))
        if cell.field_elements:
            for element in cell.field_elements:
                # Each field element has a kind, such as "word" or "selectionMark"
                if element.kind == "selectionMark":
                    print("...Checkbox in cell is '{}' and has confidence of {}".format(
                        element.state, element.confidence
                    ))
                    # Only count marks the service is reasonably sure are selected
                    if element.state == "selected" and element.confidence > 0.8:
                        pizza_toppings.append(cell.text)

print("\nPizza toppings chosen: {}".format(pizza_toppings))
```

Output:

```
Cell[0][0] has text 'Toppings'
Cell[0][1] has text 'Choose ...'
Cell[0][2] has text ''
Cell[0][3] has text ''
Cell[1][0] has text 'Sauce'
Cell[1][1] has text 'Pesto'
...Checkbox in cell is 'selected' and has confidence of 0.992
Cell[1][2] has text 'Tomato'
...Checkbox in cell is 'unselected' and has confidence of 0.988
Cell[1][3] has text 'BBQ'
...Checkbox in cell is 'unselected' and has confidence of 0.718
Cell[2][0] has text 'Cheese'
Cell[2][1] has text 'Cheddar'
...Checkbox in cell is 'unselected' and has confidence of 0.988
Cell[2][2] has text 'Mozzarella'
...Checkbox in cell is 'selected' and has confidence of 0.994
Cell[2][3] has text 'Feta'
...Checkbox in cell is 'unselected' and has confidence of 0.983
Cell[3][0] has text 'Tomato'
Cell[3][1] has text 'Sun-dried'
...Checkbox in cell is 'selected' and has confidence of 0.985
Cell[3][2] has text 'Sliced'
...Checkbox in cell is 'unselected' and has confidence of 0.972
Cell[3][3] has text 'Diced'
...Checkbox in cell is 'unselected' and has confidence of 0.894

Pizza toppings chosen: ['Pesto', 'Mozzarella', 'Sun-dried']
```
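In the output above, the BBQ checkbox was read as unselected with a confidence of only 0.718. The sample acts only on selected marks above 0.8, so an uncertain mark is silently treated as unselected. A minimal sketch of an alternative, using our own helper name and the same 0.8 threshold, sorts each mark into selected, unselected, or needs-review buckets so a person can check the uncertain ones. The input tuples are copied from the sample output.

```python
from typing import Dict, Iterable, List, Tuple


def triage_selection_marks(
    marks: Iterable[Tuple[str, str, float]], threshold: float = 0.8
) -> Dict[str, List[str]]:
    """Split (label, state, confidence) tuples into selected, unselected, and review lists."""
    buckets: Dict[str, List[str]] = {"selected": [], "unselected": [], "review": []}
    for label, state, confidence in marks:
        if confidence < threshold:
            buckets["review"].append(label)  # too uncertain to trust either way
        else:
            buckets[state].append(label)
    return buckets


# (cell text, state, confidence) values taken from the sample output above
pizza_marks = [
    ("Pesto", "selected", 0.992),
    ("Tomato", "unselected", 0.988),
    ("BBQ", "unselected", 0.718),
    ("Cheddar", "unselected", 0.988),
    ("Mozzarella", "selected", 0.994),
    ("Feta", "unselected", 0.983),
    ("Sun-dried", "selected", 0.985),
    ("Sliced", "unselected", 0.972),
    ("Diced", "unselected", 0.894),
]
print(triage_selection_marks(pizza_marks))
```

Here BBQ lands in the review bucket instead of being dropped; where to set the threshold is a judgment call for your own forms.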

Extract fields from business cards using a prebuilt model

Prebuilt models let you extract fields from a form without having to train a model. In this version of the client library, Form Recognizer has expanded its prebuilt models to include business cards and invoices.

If you go to a meeting or conference where you collect a large number of business cards, manually entering the contact information from each card can be tedious. The prebuilt business card model handles this task for you by extracting common fields from each card, such as personal contact info, company name, job title, and more. See all the fields returned for a business card in the service documentation.

In our example, we will extract the contact name, company name, job title, and email from the business card in the image below and write the extracted information to a database.

Note that the example reads a local file; if you prefer to extract fields from an image at a publicly accessible URL, use the begin_recognize_business_cards_from_url method instead.

```python
from azure.core.credentials import AzureKeyCredential
from azure.ai.formrecognizer import FormRecognizerClient


class BusinessCardContact:
    """Hypothetical record type that stands in for your own data model."""

    def __init__(self):
        self.info = []  # (value, confidence) pairs


def write_to_database(record):
    """Hypothetical placeholder: replace with your own persistence logic."""
    print(record.info)


form_recognizer_client = FormRecognizerClient(endpoint="<endpoint>", credential=AzureKeyCredential("<api-key>"))

with open("business-card.png", "rb") as source:
    poller = form_recognizer_client.begin_recognize_business_cards(source)
business_cards = poller.result()

for card in business_cards:
    contact = BusinessCardContact()  # create record to hold contact info

    # ContactNames is a list; each item's value is a dict with FirstName and LastName fields
    contact_names = card.fields.get("ContactNames")
    if contact_names:
        for contact_name in contact_names.value:
            contact.info.append((contact_name.value["FirstName"].value, contact_name.value["FirstName"].confidence))
            contact.info.append((contact_name.value["LastName"].value, contact_name.value["LastName"].confidence))

    # CompanyNames, JobTitles, and Emails are lists of plain string fields
    company_names = card.fields.get("CompanyNames")
    if company_names:
        for company_name in company_names.value:
            contact.info.append((company_name.value, company_name.confidence))

    job_titles = card.fields.get("JobTitles")
    if job_titles:
        for job_title in job_titles.value:
            contact.info.append((job_title.value, job_title.confidence))

    emails = card.fields.get("Emails")
    if emails:
        for email in emails.value:
            contact.info.append((email.value, email.confidence))

    write_to_database(contact)
```
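The business card result nests fields: ContactNames is a list whose items are dictionaries of FirstName and LastName fields. If you would rather store plain Python values than SDK objects, a small recursive converter helps. This is our own sketch, not an SDK helper: it duck-types on the .value attribute of FormField-like objects, and it assumes date fields (such as InvoiceDate in the next example) arrive as datetime.date objects, which it turns into ISO strings.

```python
import datetime
from types import SimpleNamespace


def to_plain(field):
    """Recursively unwrap FormField-like objects (anything with a .value) into plain data."""
    if field is None:
        return None
    value = field.value
    if isinstance(value, list):
        return [to_plain(item) for item in value]
    if isinstance(value, dict):
        return {name: to_plain(sub_field) for name, sub_field in value.items()}
    if isinstance(value, (datetime.date, datetime.time)):
        return value.isoformat()  # keep dates friendly for JSON and databases
    return value


# Fake field shaped like a business card's "ContactNames" result
F = SimpleNamespace
contact_names = F(value=[F(value={"FirstName": F(value="Avery"), "LastName": F(value="Smith")})])
print(to_plain(contact_names))  # [{'FirstName': 'Avery', 'LastName': 'Smith'}]
```

With a real result you could call to_plain(card.fields.get("ContactNames")); note that this drops the confidence scores, so keep them separately if you need them.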

Extract fields from invoices using a prebuilt model

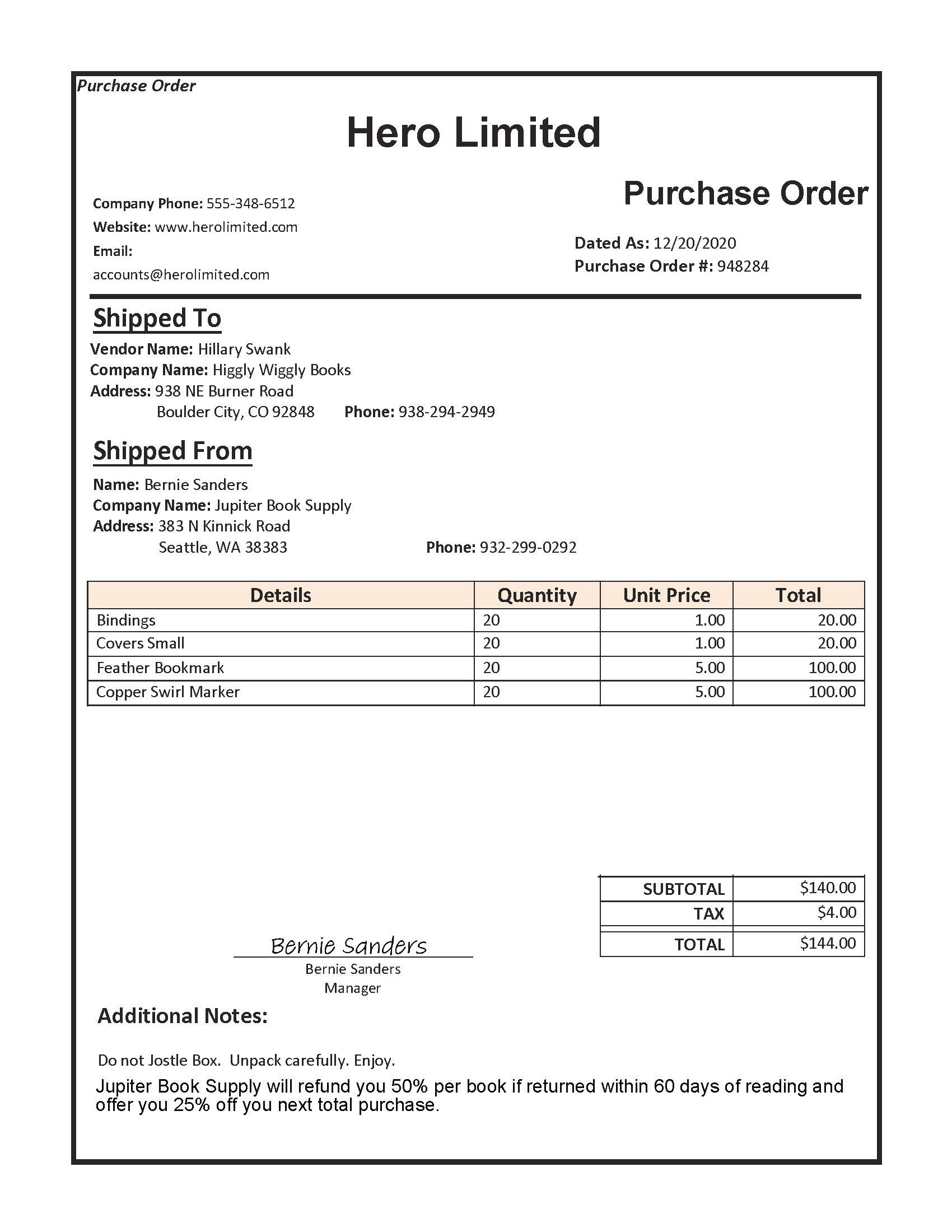

In addition to receipts and business cards, Form Recognizer can now analyze and extract information from invoices using its prebuilt invoice model. The prebuilt model can extract invoice text, tables, and information such as customer, vendor, invoice ID, invoice due date, total, invoice amount due, tax amount, ship to, bill to, and more. See all the fields and their types returned for an invoice in the service documentation.

Imagine your company needs to process a large number of invoices. Manually entering each relevant field value into a database would be time-consuming, so let's automate it! In our example, we will extract the customer name, invoice ID, invoice date, shipping address, and total from the invoice below and write the information to our database.

Note that the example reads a local file; if you prefer to extract fields from an image at a publicly accessible URL, use the begin_recognize_invoices_from_url method instead.

```python
from azure.core.credentials import AzureKeyCredential
from azure.ai.formrecognizer import FormRecognizerClient


class InvoiceInformation:
    """Hypothetical record type that stands in for your own data model."""

    def __init__(self):
        self.info = []  # (value, confidence) pairs


def write_to_database(record):
    """Hypothetical placeholder: replace with your own persistence logic."""
    print(record.info)


form_recognizer_client = FormRecognizerClient(endpoint="<endpoint>", credential=AzureKeyCredential("<api-key>"))

with open("invoice.png", "rb") as source:
    poller = form_recognizer_client.begin_recognize_invoices(source)
invoices = poller.result()

for form in invoices:
    invoice = InvoiceInformation()  # create record to hold invoice information

    # Any field may be missing from a given invoice, so look each one up with .get()
    for field_name in ("CustomerName", "InvoiceId", "InvoiceDate", "ShippingAddress", "InvoiceTotal"):
        field = form.fields.get(field_name)
        if field:
            invoice.info.append((field.value, field.confidence))

    write_to_database(invoice)
```
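Both prebuilt examples hand their results to write_to_database, which the post leaves to you. As one possible implementation, here is a sketch using Python's built-in sqlite3 module. The two-table layout and the write_record name are our own assumptions, and values are stored as text for simplicity; you could call write_record(conn, "invoice", invoice.info) in place of write_to_database(invoice).

```python
import sqlite3
from typing import Iterable, Optional, Tuple


def write_record(conn: sqlite3.Connection, record_type: str,
                 info: Iterable[Tuple[object, Optional[float]]]) -> int:
    """Store one extracted record and its (value, confidence) pairs; return the new record's ID."""
    conn.execute("CREATE TABLE IF NOT EXISTS records (id INTEGER PRIMARY KEY, record_type TEXT)")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS extracted_values ("
        "record_id INTEGER REFERENCES records(id), value TEXT, confidence REAL)"
    )
    with conn:  # commit both inserts together, or roll back on error
        cursor = conn.execute("INSERT INTO records (record_type) VALUES (?)", (record_type,))
        record_id = cursor.lastrowid
        conn.executemany(
            "INSERT INTO extracted_values (record_id, value, confidence) VALUES (?, ?, ?)",
            [(record_id, None if value is None else str(value), confidence) for value, confidence in info],
        )
    return record_id


conn = sqlite3.connect(":memory:")
invoice_record_id = write_record(conn, "invoice", [("Contoso", 0.95), (110.0, 0.99)])
print(conn.execute(
    "SELECT value, confidence FROM extracted_values WHERE record_id = ? ORDER BY rowid",
    (invoice_record_id,),
).fetchall())  # [('Contoso', 0.95), ('110.0', 0.99)]
```

Storing the confidence next to each value keeps the option of reviewing low-confidence extractions later.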

Model Compose

Form Recognizer provides you with prebuilt models and also allows you to create custom models. In earlier versions, each custom model corresponded to a single form type, and you had to write logic to choose which model to use to read data out of a given form.
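For illustration, that application-side routing might look like the sketch below, assuming the app already knows each document's form type (for example, from which upload button the user pressed). The form type names and model ID placeholders are hypothetical.

```python
from typing import Dict

# Hypothetical mapping maintained by the application: one custom model per form type
MODEL_IDS_BY_FORM_TYPE: Dict[str, str] = {
    "order-form": "<order-form-model-id>",
    "return-form": "<return-form-model-id>",
    "registration": "<registration-model-id>",
}


def pick_model_id(form_type: str) -> str:
    """Return the custom model ID for a known form type, or fail loudly."""
    try:
        return MODEL_IDS_BY_FORM_TYPE[form_type]
    except KeyError:
        raise ValueError("No custom model registered for form type {!r}".format(form_type)) from None
```

Every new form type means another entry in this table and another way for users to pick the wrong one, which is the bookkeeping composed models take off your hands.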

With composed models, you can combine multiple custom models into a single composed model and call it with one model ID. When a document is submitted to be recognized with the composed model, the service performs a classification step that routes it to the correct custom model. Let's look at an example.

When arriving at the doctor’s office, a patient has to fill out a number of different forms as part of the check-in process. These forms might consist of a patient history form, HIPAA disclosure, insurance information form, and an emergency contact form. The doctor’s office has a great IT system that uses Form Recognizer to quickly extract information from these forms.

To set this up, the system developer trains a model with labels for each type of form (see how to train a model with labels in the article Train a Form Recognizer model with labels using the sample labeling tool) and creates a simple UI where the forms can be submitted and analyzed. Instead of requiring users to match each scanned form to the correct model ID, the developer creates a composed model and lets the service do the matching.

In the code snippet below, FormTrainingClient exposes the begin_create_composed_model method, which takes a list of model IDs and an optional model name to associate with the composed model. Creating a composed model is a long-running operation, and the client library polls the service until the result is ready. The result is a CustomFormModel, which contains information about the model, including data on each of the submodels that make it up.
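The poller hides the polling loop from you. Conceptually, a poller checks the operation's status, waits, and repeats until the operation reaches a terminal state. The generic sketch below illustrates that pattern with a made-up get_status callable and assumed status strings; it is not the azure-core implementation.

```python
import time
from typing import Callable, Iterable


def wait_for_completion(
    get_status: Callable[[], str],
    interval: float = 1.0,
    timeout: float = 60.0,
    terminal_states: Iterable[str] = ("succeeded", "failed"),
) -> str:
    """Poll get_status() until it returns a terminal state or the timeout elapses."""
    terminal = set(terminal_states)
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status in terminal:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError("Operation still '{}' after {} seconds".format(status, timeout))
        time.sleep(interval)  # back off before asking again


# Simulated operation that reports "running" twice before succeeding
statuses = iter(["running", "running", "succeeded"])
print(wait_for_completion(lambda: next(statuses), interval=0.01))  # succeeded
```

In the real client you simply call poller.result(), which blocks until the service finishes.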

```python
from azure.core.credentials import AzureKeyCredential
from azure.ai.formrecognizer import FormTrainingClient

form_training_client = FormTrainingClient(endpoint="<endpoint>", credential=AzureKeyCredential("<api-key>"))

# IDs of the custom models trained with labels, one per form type
patient_check_in_form_models = [
    "<patient-history-form-model-id>",
    "<HIPAA-form-model-id>",
    "<insurance-form-model-id>",
    "<emergency-contact-form-model-id>"
]

poller = form_training_client.begin_create_composed_model(
    patient_check_in_form_models, model_name="Patient Check-in"
)
composed_model = poller.result()

print("Patient Check-in Forms Model Info:")
print("Model ID: {}".format(composed_model.model_id))
print("Model name: {}".format(composed_model.model_name))
print("Is this a composed model?: {}".format(composed_model.properties.is_composed_model))
print("Status: {}".format(composed_model.status))

print("\nRecognized fields in each submodel of the composed model:")
for submodel in composed_model.submodels:
    print("The submodel has model ID: {}".format(submodel.model_id))
    print("The submodel with form type {} has an average accuracy '{}'".format(
        submodel.form_type, submodel.accuracy
    ))
    for name, field in submodel.fields.items():
        print("...The model found the field '{}' with an accuracy of {}".format(
            name, field.accuracy
        ))
```
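Before pointing the UI at the composed model, you might sanity-check that every model ID you passed in shows up among its submodels. The helper below is our own sketch; it assumes each submodel exposes model_id, as printed above, and uses stand-in objects so it runs without the service.

```python
from types import SimpleNamespace
from typing import Iterable, Set


def missing_submodels(composed_model, expected_model_ids: Iterable[str]) -> Set[str]:
    """Return the expected model IDs that do not appear among the composed model's submodels."""
    found = {submodel.model_id for submodel in composed_model.submodels}
    return set(expected_model_ids) - found


fake_composed = SimpleNamespace(
    submodels=[SimpleNamespace(model_id="id-1"), SimpleNamespace(model_id="id-2")]
)
print(missing_submodels(fake_composed, ["id-1", "id-2", "id-3"]))  # {'id-3'}
```

With the real objects, missing_submodels(composed_model, patient_check_in_form_models) should return an empty set.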

The composed model can now recognize any of the check-in forms, letting the service choose the correct model for analysis. Each RecognizedForm includes a form_type_confidence value that indicates how confident the service is in the model it chose for the submitted form. The system developer can use this value to handle cases where the composed model cannot reliably predict the form type, for example by asking the user to specify the form type manually.

```python
from azure.core.credentials import AzureKeyCredential
from azure.ai.formrecognizer import FormRecognizerClient

form_recognizer_client = FormRecognizerClient(endpoint="<endpoint>", credential=AzureKeyCredential("<api-key>"))

with open("<patient-history-form>", "rb") as fd:
    patient_history_form = fd.read()

# composed_model comes from the previous snippet
poller = form_recognizer_client.begin_recognize_custom_forms(
    model_id=composed_model.model_id, form=patient_history_form
)
result = poller.result()

for form in result:
    print("Form has type {}".format(form.form_type))
    print("Predicted form type confidence {}".format(form.form_type_confidence))
    print("Form was analyzed with model with ID {}".format(form.model_id))
    for name, field in form.fields.items():
        print("...Label '{}' has value '{}' with a confidence score of {}".format(
            name, field.value, field.confidence
        ))
```
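One way to express the fallback described above is to split recognized forms by form_type_confidence. The sketch below uses an arbitrary 0.7 threshold, our own helper name, and stand-in objects with made-up form types; tune the threshold against your own forms.

```python
from types import SimpleNamespace
from typing import List, Tuple


def split_by_form_type_confidence(forms, threshold: float = 0.7) -> Tuple[List, List]:
    """Separate recognized forms into (trusted, needs_manual_form_type) lists."""
    trusted, needs_review = [], []
    for form in forms:
        confidence = form.form_type_confidence
        if confidence is not None and confidence >= threshold:
            trusted.append(form)
        else:
            needs_review.append(form)  # ask the user to pick the form type
    return trusted, needs_review


forms = [
    SimpleNamespace(form_type="patient-history", form_type_confidence=0.95),
    SimpleNamespace(form_type="insurance", form_type_confidence=0.42),
]
trusted, needs_review = split_by_form_type_confidence(forms)
print([f.form_type for f in trusted], [f.form_type for f in needs_review])
# ['patient-history'] ['insurance']
```

Treating a missing confidence as "needs review" errs on the side of asking the user.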

Note: Model compose is available only for models trained with labels. However, models trained without labels already provide similar functionality: they first classify a form and then extract data from it.

In this blog, we looked at some new features of Form Recognizer that expand the set of out-of-the-box forms you can recognize and the types of fields you can recognize in those forms, and that simplify the development of applications that use custom form types. The service continues to evolve, so keep an eye out for more exciting new features in the future!

Further Documentation

- Form Recognizer Service Documentation

- Python Reference Documentation, Readme

- .NET Reference Documentation, Readme

- JavaScript/TypeScript Reference Documentation, Readme

- Java Reference Documentation, Readme

Azure SDK Blog Contributions

Thank you for reading this Azure SDK blog post! We hope that you learned something new and welcome you to share this post. We are open to Azure SDK blog contributions. Please contact us at azsdkblog@microsoft.com with your topic and we’ll get you set up as a guest blogger.

Azure SDK Links

- Azure SDK Website: aka.ms/azsdk

- Azure SDK Intro (3 minute video): aka.ms/azsdk/intro

- Azure SDK Intro Deck (PowerPoint deck): aka.ms/azsdk/intro/deck

- Azure SDK Releases: aka.ms/azsdk/releases

- Azure SDK Blog: aka.ms/azsdk/blog

- Azure SDK Twitter: twitter.com/AzureSDK

- Azure SDK Design Guidelines: aka.ms/azsdk/guide

- Azure SDKs & Tools: azure.microsoft.com/downloads

- Azure SDK Central Repository: github.com/azure/azure-sdk

- Azure SDK for .NET: github.com/azure/azure-sdk-for-net

- Azure SDK for Java: github.com/azure/azure-sdk-for-java

- Azure SDK for Python: github.com/azure/azure-sdk-for-python

- Azure SDK for JavaScript/TypeScript: github.com/azure/azure-sdk-for-js

- Azure SDK for Android: github.com/Azure/azure-sdk-for-android

- Azure SDK for iOS: github.com/Azure/azure-sdk-for-ios

- Azure SDK for Go: github.com/Azure/azure-sdk-for-go

- Azure SDK for C: github.com/Azure/azure-sdk-for-c

- Azure SDK for C++: github.com/Azure/azure-sdk-for-cpp
