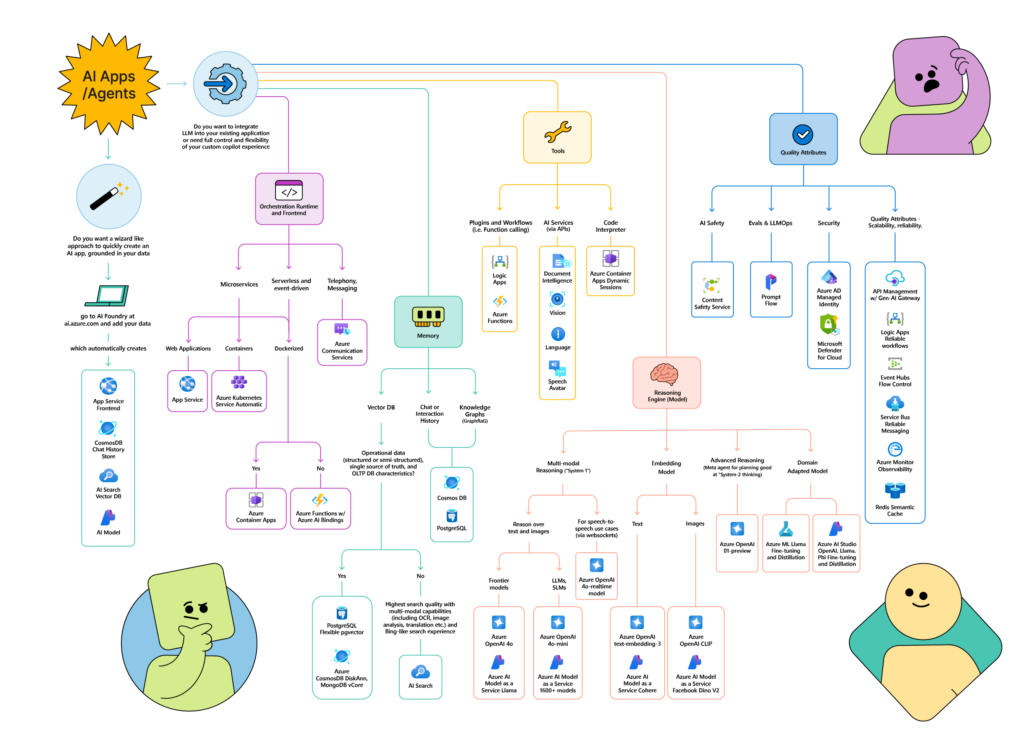

As organizations explore new AI-powered experiences and automated workflows, there’s a growing need to move beyond experiments and proofs-of-concept to production-ready applications. This guide walks you through the essential steps and decisions for building robust AI applications in Azure, focusing on reliability, security, and enterprise-grade quality.

Why Choose Azure’s Managed Services?

It’s easy to experiment with generative AI models and create proof-of-concept demos, but building production-ready applications that scale reliably is a different challenge entirely. When deploying AI-powered applications for real business use, you need infrastructure that provides consistent performance, robust security, and reliable operations. Did you know that OpenAI’s ChatGPT, GitHub Copilot, and Microsoft’s Copilots are all deployed on Azure’s managed services? Managed services reduce the operational uncertainty of deploying AI agents with specific goals and guardrails, which makes production AI accessible to organizations of all sizes.

In this article, I’ll provide a visual map to help you decide which Azure AI service best fits your use case. Let’s get started.

Need a Quick Start?

The quickest way to get started is by going to Azure AI Foundry at https://ai.azure.com, which serves as your central hub for AI development, offering:

- Playgrounds: Add your data and ground models in your content through managed RAG (Retrieval-Augmented Generation) in just a few clicks, then deploy production-ready chat experiences without complex setup.

- Prompt Flow: Supports evaluation-driven development, prompt tuning, and tool integration, with built-in observability and troubleshooting.

- Agent Service: Build secure, scalable, single-purpose agents with managed RAG, managed function calling, and bring-your-own customization options, and integrate them with enterprise systems.
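The Playgrounds and Agent Service run RAG for you, but it helps to see the pattern underneath: retrieve the passages most relevant to a question, then inject them into the prompt so the model answers from your content. Here’s a minimal sketch of that pattern, assuming naive keyword overlap in place of a real index such as Azure AI Search; the corpus and the helper names `retrieve` and `build_grounded_prompt` are hypothetical, and the actual model call is left out.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for a real search index or embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of up to k documents that share the most tokens with the query."""
    q = tokenize(query)
    hits = [(len(q & tokenize(text)), doc_id) for doc_id, text in corpus.items()]
    hits = [hit for hit in hits if hit[0] > 0]    # drop documents with no overlap
    hits.sort(key=lambda hit: (-hit[0], hit[1]))  # best score first, ties broken by id
    return [doc_id for _, doc_id in hits[:k]]


def build_grounded_prompt(query: str, corpus: dict[str, str], k: int = 2) -> str:
    """Inject the retrieved passages into the prompt so the model answers from them."""
    sources = retrieve(query, corpus, k)
    context = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in sources)
    return (
        "Answer using only the sources below and cite them by id. "
        "If the answer is not in the sources, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base standing in for your own documents.
corpus = {
    "returns": "Items can be returned within 30 days with a receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "Electronics carry a one year limited warranty.",
}
question = "How many days do I have to return items with a receipt?"
prompt = build_grounded_prompt(question, corpus)
print(prompt)
```

Only the two best-matching passages reach the prompt, and the instruction to cite sources by id is what makes answers traceable back to your data.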

Pro Tip: Start Your AI Journey with Azure AI Foundry

Azure AI Foundry provides everything you need to kickstart your AI application development journey. It offers an intuitive platform with built-in development tools, essential AI capabilities, and more than 1,800 ready-to-use models. As your needs grow, you can integrate additional Azure managed services to extend your AI solutions. This makes it an ideal starting point for both beginners and experienced developers.

Need More Control? Let’s Build Your Stack

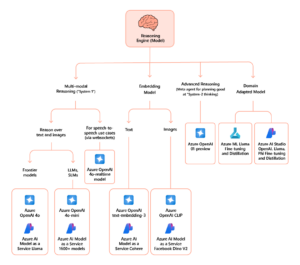

What Kind of Model Do You Need?

Selecting the right AI model is a critical decision that impacts your application’s capabilities, performance, and cost-effectiveness. Azure offers a comprehensive range of models to address different requirements, from multimodal reasoning to specialized tasks. Here’s a guide to help you choose the most suitable model for your specific needs:

| Requirement | Options |
| --- | --- |
| Multimodal reasoning (text + images) | |
| Sensitive to latency and cost | |
| Embeddings for search or classification | |
| Working with images | |
| Advanced reasoning (System-2) | |
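For the “Embeddings for search or classification” requirement, an embedding model maps text to a vector, and search or classification then compares vectors, most commonly by cosine similarity. The sketch below assumes toy 3-dimensional vectors hard-coded in place of real embedding output (which typically has hundreds or thousands of dimensions), and a hypothetical classifier that represents each label by a single vector and picks the most similar one.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify(vector: list[float], label_vectors: dict[str, list[float]]) -> str:
    """Return the label whose vector is most similar to the input vector."""
    return max(label_vectors, key=lambda label: cosine_similarity(vector, label_vectors[label]))


# Toy vectors standing in for the output of an embedding model (hypothetical values).
label_vectors = {
    "billing": [0.9, 0.1, 0.0],
    "technical": [0.1, 0.9, 0.2],
}
ticket = [0.8, 0.2, 0.1]
print(classify(ticket, label_vectors))
```

Semantic search uses the same comparison, except you rank stored document vectors against a query vector instead of picking a label.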

Pro Tip: Choosing the Right Model

How Will Your Agent Remember Things?

When building AI applications, choosing the right storage solution is crucial for managing different types of data effectively. Here’s a guide to help you select the appropriate memory solution for your needs:

| Requirement | Options |
| --- | --- |
| Search Capabilities | |
| Frequently Accessed Knowledge | |
| Episodic Memory (interaction history) and Knowledge Graphs | |
| Operational Data with Semantic Retrieval | |
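For “Frequently Accessed Knowledge”, a common way to combine a fast cache with a durable database is the cache-aside pattern: read from the cache, fall back to the database on a miss, then populate the cache with an expiry. Here’s a minimal sketch, assuming a dict-backed `TtlCache` standing in for Azure Cache for Redis and a plain dict standing in for Cosmos DB; `TtlCache` and `get_profile` are hypothetical names, not SDK APIs.

```python
import time
from typing import Callable, Optional


class TtlCache:
    """Dict-backed stand-in for a Redis cache: entries expire ttl_seconds after being set."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._items: dict[str, tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._items[key]  # expired: evict and report a miss
            return None
        return value

    def set(self, key: str, value: str) -> None:
        self._items[key] = (self.clock() + self.ttl, value)


def get_profile(user_id: str, cache: TtlCache, store: dict[str, str], stats: dict[str, int]) -> str:
    """Cache-aside read: try the cache, fall back to the durable store, then refill the cache."""
    cached = cache.get(user_id)
    if cached is not None:
        stats["hits"] += 1
        return cached
    stats["misses"] += 1
    value = store[user_id]  # stand-in for a point read from Cosmos DB
    cache.set(user_id, value)
    return value


now = [0.0]  # fake clock so expiry can be shown without sleeping
cache = TtlCache(ttl_seconds=60, clock=lambda: now[0])
store = {"u1": "prefers concise answers"}
stats = {"hits": 0, "misses": 0}
get_profile("u1", cache, store, stats)  # miss: read from the store, then cached
get_profile("u1", cache, store, stats)  # hit: served from the cache
now[0] = 61.0
get_profile("u1", cache, store, stats)  # entry expired: miss again
print(stats)  # {'hits': 1, 'misses': 2}
```

Injecting the clock keeps the expiry logic testable; the TTL bounds how stale a cached value can get before the durable store is consulted again.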

Pro Tip: Choosing the Right Memory Solution

Start by evaluating your search needs: Azure AI Search provides comprehensive multimodal search capabilities with built-in integration with Azure AI services. For frequently accessed data, consider pairing Azure Cache for Redis for performance-critical operations with a persistent store such as Cosmos DB. When building knowledge graphs, use the GraphRAG solution accelerators available for both Cosmos DB and PostgreSQL to simplify implementation.

Where Will You Run Your Application?

Choosing the right runtime environment and frontend infrastructure is crucial for your AI application’s performance, scalability, and maintainability. Azure offers various options to match your specific deployment needs, from simple web apps to complex containerized solutions. Here’s a guide to help you select the most appropriate runtime configuration:

| Requirement | Options |
| --- | --- |
| Web Applications | |
| Serverless and Event-Driven | |
| Container Orchestration | |
| Communication Features | |

Pro Tip: Choosing the Right Runtime Environment

How Will Your AI Agent Take Action?

When building AI applications that need to interact with the real world, you’ll need tools that enable your agents to take actions, process information, and integrate with enterprise systems. Azure provides a comprehensive set of tools that let your AI agents create real-world impact while maintaining security and control. With the AI Agent Service in Azure AI Foundry, integrating these tools has become even more streamlined. Here’s a guide to help you choose the right tools for your AI application:

| Requirement | Options |
| --- | --- |
| Plugins and Workflows (Function Calling) | |
| AI Services (via APIs) | |
| Code Interpreter | |
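Function calling works by having the model return the name of a tool plus JSON-encoded arguments; your application, not the model, executes the call and returns the result. The sketch below assumes a tool-call shape of `{"name": ..., "arguments": "<JSON string>"}`, similar to what chat-completion APIs return; the `get_order_status` tool and its data are hypothetical. Agent Service’s managed function calling is intended to take this plumbing off your hands, so treat this as an illustration of what happens underneath.

```python
import json
from typing import Callable

# Registry of callable tools: acts as an allowlist of what the model may trigger.
TOOLS: dict[str, Callable[..., dict]] = {}


def tool(fn: Callable[..., dict]) -> Callable[..., dict]:
    """Decorator that registers a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_order_status(order_id: str) -> dict:
    # Hypothetical enterprise lookup; a real tool would call your order system.
    orders = {"A100": "shipped", "A101": "processing"}
    return {"order_id": order_id, "status": orders.get(order_id, "unknown")}


def dispatch(tool_call: dict) -> str:
    """Run a model-requested tool call and return a JSON result the model can read."""
    name = tool_call["name"]
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(tool_call["arguments"])
        result = TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:  # malformed JSON or wrong arguments
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


call = {"name": "get_order_status", "arguments": '{"order_id": "A100"}'}
print(dispatch(call))
```

Returning errors as JSON rather than raising lets the model see what went wrong and retry, and the registry ensures it can only invoke functions you explicitly exposed.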

Pro Tip: Leveraging AI Agent Service for Tool Integration

How Will You Ensure Quality and Safety?

Enterprise AI applications require comprehensive quality controls across safety, evaluation, security, and reliability dimensions. Azure provides integrated services to help you build AI applications that meet the highest quality standards. Here’s a guide to help you implement the right quality attributes:

| Requirement | Options |
| --- | --- |
| Quality Attributes & Reliability | |
| AI Safety | |
| Evaluation & LLMOps | |
| Security | |
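Evaluation-driven development means every change to a prompt, model, or retrieval setting is checked against a fixed test set before it ships. Here’s a minimal sketch of such a regression gate, assuming a simple “answer must contain these phrases” metric; managed evaluation tooling such as Prompt Flow is far richer, and `EvalCase`, `evaluate`, and `fake_app` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    question: str
    must_contain: list[str]  # phrases a correct answer is expected to include


def evaluate(app: Callable[[str], str], cases: list[EvalCase], threshold: float = 0.8) -> tuple[float, bool]:
    """Return (pass rate, whether it meets the threshold) for a fixed test set."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = 0
    for case in cases:
        answer = app(case.question).lower()
        if all(term.lower() in answer for term in case.must_contain):
            passed += 1
    score = passed / len(cases)
    return score, score >= threshold


def fake_app(question: str) -> str:
    # Stand-in for a call to your deployed model or agent.
    canned = {
        "What is the return window?": "You can return items within 30 days.",
        "How long is shipping?": "Shipping usually takes a week.",
    }
    return canned.get(question, "I don't know.")


cases = [
    EvalCase("What is the return window?", ["30 days"]),
    EvalCase("How long is shipping?", ["3 to 5 business days"]),
]
score, ok = evaluate(fake_app, cases)
print(score, ok)  # 0.5 False
```

Wired into CI, a failing gate like this one blocks a deployment until the prompt or grounding data is fixed.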

Pro Tip: Building Enterprise-Grade AI Applications

Need Additional Development Support?

When building AI applications, you can accelerate your development by leveraging battle-tested frameworks that provide abstracted design patterns and pre-built integrations with the above managed services:

| Framework | Capabilities |
| --- | --- |
| Semantic Kernel | Microsoft’s open-source SDK that integrates LLMs with conventional programming languages (C#, Python, Java). Ideal for enterprise applications requiring tight integration with existing code. |
| AutoGen | Framework for building multi-agent applications, enabling sophisticated agent-to-agent interactions and complex task completion. |
| LangChain | Popular framework for building LLM applications with ready-to-use components for common patterns like RAG, agents, and chains. |
| LlamaIndex | Data framework specialized in connecting custom data with LLMs, offering advanced RAG capabilities and data connectors. |

Ready to Start Building?

Choose your path based on your needs:

- Want the quickest start? Head to Azure AI Foundry for a guided experience with built-in best practices and patterns.

- Need more control? Start with AI App templates for common patterns, or build your stack from scratch by selecting your models, memory solutions, and deployment options from the choices above.

- Looking for development frameworks? Use battle-tested frameworks like Semantic Kernel, AutoGen, or LangChain that provide abstracted design patterns and pre-built integrations for rapid development.

Remember: major players like ChatGPT, GitHub Copilot, and Microsoft’s Copilots all run on these same services, so you’re building on proven infrastructure. To accelerate your development:

- Landing Zone Reference Architectures: Ready-to-deploy infrastructure templates that follow best practices for security, scaling, and governance

- AI App Templates: Quickly customize existing AI applications for your specific business needs using production-tested patterns

Prerequisites to Get Started

Before you begin, ensure you have:

- An Azure subscription

- A user account with the Azure AI Developer role

- The Azure AI Inference Deployment Operator role (if models aren’t already deployed)

This guide will continue to evolve as Azure’s AI capabilities expand. Start building today and transform your AI experiments into production-ready applications!
