The new Windows MIDI Services – Spring 2023 Update

In November, I introduced the new Windows MIDI Services. Since then, we’ve been busy with development, prototyping, events, and more. We’re coming up on an important milestone, so it seems like a good time for another update. First, a bit about MIDI 2.0 in general, and then I’ll update you on Windows MIDI Services.

MIDI is about Collaboration, not competition

I love this headline from Athan (President of the MIDI Association), so I am totally stealing it here. The context of that post is the joint meeting with AMEI in Japan (more on that in a moment), but it generally applies to MIDI all-up.

The MIDI Association is made up of hardware and software companies and more, all interested in MIDI. AMEI is the equivalent organization in Japan. Between the two, most companies making MIDI equipment, and all of the major operating system companies, are represented and able to participate in standards development and approval. The products of those discussions are kept within the two organizations, but only until they are approved for publication. In this way, there is broad participation from companies with real interest in the standards, but also protection to help prevent unfinished standards from leaking and resulting in a fragmented ecosystem of apps and devices. The end result is a better experience for musicians and other users of MIDI.

The MIDI Association includes member-driven working groups as well as the Technical Standards Board (TSB). The TSB is the governing body for standards which are worked on in the working groups and deals with publication of work created by the different working groups. I am a contributor in the Network MIDI 2.0 Transport working group, and also the OS API Working Group (more on that in a moment). I am also a member of the MIDI 2.0 umbrella working group.

In addition to the technical groups, the MIDI Association is governed by the Executive Board (EB). The EB includes members from a number of member companies, Athan Billias (President) and me, the Chair of the Executive Board. The TSB and EB are both elected by the voting membership of the MIDI Association.

All of the working groups and boards inside the MIDI Association are made up of corporate/organizational dues-paying members. Those members, no matter what size company they represent, all get a single equal vote.

Where the Standards are Today

The initial MIDI 2.0 standards were published in 2020 and have been available online, for free, since then. They are a solid core, with contributions by many hardware and software companies. But as some companies began actual implementations, they found some aspects cumbersome, difficult, or unnecessarily complex. There were also some gaps in the feature set. That’s not a statement about lack of participation or “developing behind closed doors”, as has been suggested online (OS companies, hardware companies, and software companies were all involved in the MIDI 2.0 specifications development). It’s just the reality of developing a new specification from scratch during a time when the industry continues to move forward. So the revision process began.

The MIDI Association voting members approved the updated MIDI 2.0 specifications in March at the joint meeting with AMEI in Tokyo. AMEI is nearly complete with their own review and final vote (any day now) on the updated UMP specification. This is important to us, because the new features covered in that spec, like Function Blocks, and the deprecation of a couple of features that were just cumbersome in practice, have been under NDA with The MIDI Association and AMEI. We’re implementing these updated features and have code referencing them in our repo. That means that until those features are published, we can’t open this repo to the public. Luckily, publication is coming very soon (days or weeks at most), so we’ll open the repo shortly after.

As an example of one important specification change: Microsoft is not implementing the (now deprecated) Protocol Negotiation aspect from MIDI CI. Instead, when a MIDI 2.0 USB device is plugged into a Windows PC with the new Windows MIDI Services, we treat it as a MIDI 2.0 device. But we provide the backwards compatibility with MIDI 1.0 APIs through our services. When combined with changes to Function Blocks, this approach is cleaner and just makes more sense. (The devices themselves, when plugged into a PC that doesn’t support MIDI 2.0 UMP, will be seen as a MIDI 1.0 byte stream device). The device manufacturers we’ve spoken with are all on-board with our approach here.

OS API Working Group

Different developers can implement a good standard in completely different ways. When you’re looking at something like an API for that standard, implementing in different ways can cause no end of headaches for developers, especially if they create cross-platform applications.

In the MIDI Association, we have an OS API Working Group that has been meeting since last fall. This is something I had suggested to the MIDI 2 Working Group to help keep us all in sync. That WG has participation from all of the major operating systems companies (Apple, Google, Microsoft, and Linux/ALSA), and also MIDI SMEs and some hardware and DAW companies. It’s where we come together to agree on implementations where there’s wiggle room in the spec, so we’re not doing anything radically different from OS to OS. We’ve focused on things like naming, Function Block handling, Function Blocks vs USB Group Terminal Blocks, UMP Endpoints vs Ports, and much more.

MIDI 2.0 Implementation Guide

One of the outputs of the OS API Working Group will be a MIDI 2.0 implementation guide which helps cover things that shouldn’t be in a specification, but are necessary to understand. Across standards organizations, there’s a somewhat fuzzy but agreed-upon line between what a standards organization is supposed to put in a spec (the “what”) and the “how” and best practices, which have to live outside the specification. It can seem a little cumbersome at times, but it’s how standards work. Personally, I’d prefer to see standards be strongly opinionated about most things they present, but that’s not really how it works. Additionally, a specification can’t really reference specific operating system differences, for obvious reasons. The spec has to live outside today’s implementations, and be valid for decades to come.

So the OS API Working Group is drafting a living implementation guide which will include details from the different operating systems, best practices, and more. We expect to release a draft of that in the next few months.

Cross-company Cooperation and the Joint AMEI Meeting in Tokyo

Back at the end of March, AMEI and some MIDI Association members, including major OS companies from the OS API Working Group, got together in Tokyo to collaborate on MIDI 2.0. Thanks to Torrey Walker from Apple for conceiving this and making it happen. Hardware companies in Japan brought their new and prototype MIDI 2.0 devices, and tested them with in-progress drivers and apps on macOS, Android, and Windows. The 15 hours in an airplane seat was rough on the back :), but the trip was absolutely worth it. Bonus: I love visiting Tokyo.

In this pic from the event, you can see Robert Wu from Google, Torrey Walker from Apple, me from Microsoft, and in the background, Dr Gerhard Lengeling from Apple Logic.

The three days were spent testing and ensuring that we’re all doing things the same way (or generally so) so we can have the best user experience possible, no matter what computer or app or device you use.

One of the big things we discussed at the meeting is naming of MIDI entities. There’s much more to naming in MIDI 2.0 than there ever was in MIDI 1.0. That’s a good thing, because it provides a better level of customization for everyone and improves clarity. But it can get confusing quickly if the hardware and OS companies don’t follow similar naming patterns.

(Aside: other than when presenting, I was actually masked up almost the entire time of that event, despite what the pics show. Gladly, I made it through this meeting, the flights, the NAMM show and the MVP summit without a repeat of last year’s nasty COVID infection. 😛 )

Another thing we discussed was the importance of unique identification numbers (USB iSerial) for USB devices to help us better support user customization scenarios in the future. Without that, operating systems have to make a lot of assumptions about whether a device you just plugged in is the same one it saw yesterday. This is a bit of a bugbear of mine, so I’ll write another blog post about that in the future.

The final (but not least important!) note from the meeting is that the MIDI Association formally approved the updated MIDI 2.0 specifications at the event, with the final vote happening in-room. That paved the way for the AMEI review and for us to get closer to being able to share our work with you. This was a historic moment for MIDI!

After the event, Microsoft and AMEI met separately to discuss the USB driver project status, answer questions, and discuss fine points of the Windows implementation plans. That went well, and they are as excited as we are about what’s coming.

The collaboration between members of the MIDI Association is so important to help ensure that you all have the best possible developer and user experiences for MIDI 2.0. We really want to get this right.

MIDI 2.0 at the NAMM Show in Anaheim

Following just over a week after the Tokyo meeting, the NAMM show in Anaheim, California was important for MIDI because it celebrated both the 40th anniversary of MIDI and the 50th anniversary of hip-hop. MIDI and electronic music are joined at the hip because they grew up together and greatly influenced each other. The closing concert put on by the MIDI Association was absolutely amazing.

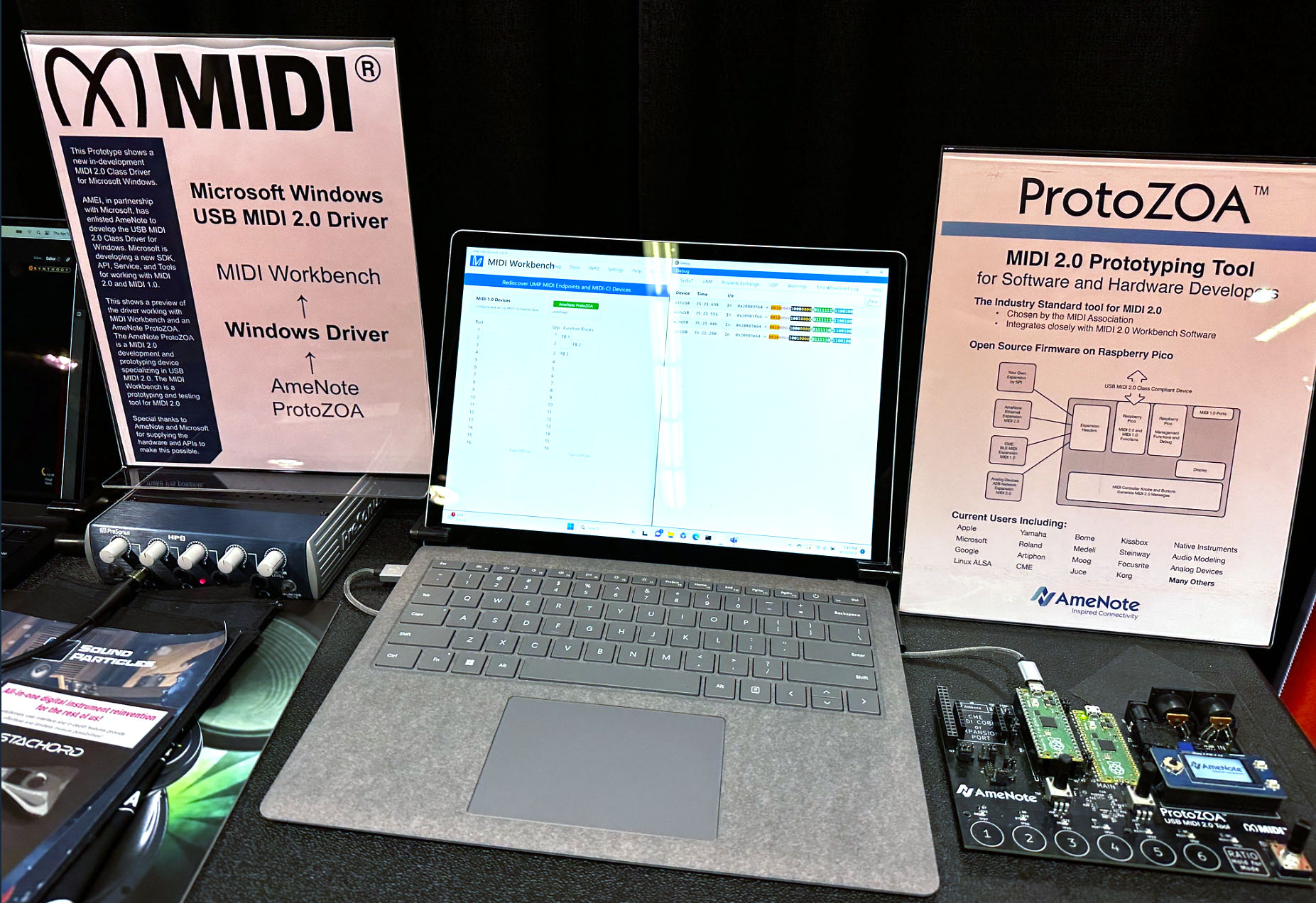

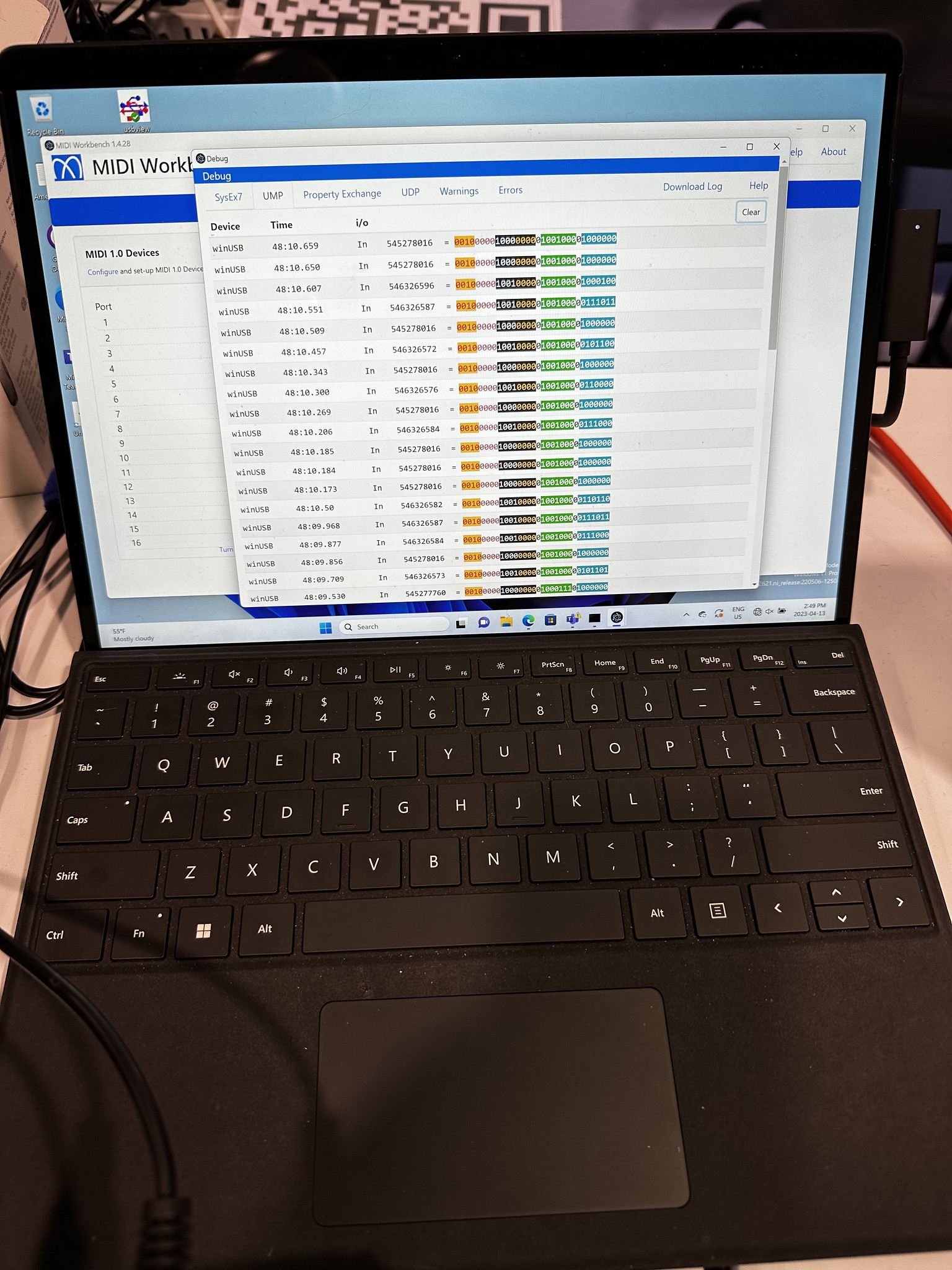

Microsoft had the work-in-progress MIDI 2.0 driver on display in the MIDI Association and AmeNote booths, running on both the i5 Surface Laptop as an x64 binary, and the Arm64 Surface Pro as an Arm64 binary.

Here’s the MIDI driver, being used via a user-mode shim from the MIDI Association’s MIDI Workbench Electron app. It’s connected to an AmeNote ProtoZOA MIDI 2.0 development device, and is shown receiving MIDI UMP messages.

And here it is on an Arm64 device, again connected to a ProtoZOA. In this case, the messages are coming from another prototype MIDI 2.0 transport connected to the ProtoZOA.

Apple, Linux (ALSA), and Android also had their products on display in the MIDI Association booth. It was great to see us all together in the booth, showing that MIDI 2.0 is really here.

More of my personal photos from the NAMM show here.

Finally, for MIDI Association members, several companies, including Microsoft, showed their working Network MIDI 2.0 prototypes at the prototyping meeting on Sunday morning after the NAMM show. I only attended the first hour, just to show the network MIDI work, and then had to run to the airport to head to Redmond for our MVP Summit event. The prototypes were all implementing the protocol as it currently stands. Although they were not yet production code (for example, the Microsoft code doesn’t have any of the error correction in place yet), they were functional for MIDI use. For Microsoft, we expect to be able to ship Network MIDI 2.0 within a short time after the protocol is finalized, approved, and published.

That’s it for important events. Let’s cover a bit about MIDI 2.0 itself.

Some Important Concepts

There are just a few key concepts that folks need to grok to understand our MIDI 2.0 implementation and the rest of what I cover here.

Data Formats

MIDI 1.0 used a stream of bytes as its data format, with most messages taking up to three bytes, all optimized for serial communications as it existed in 1983. MIDI 2.0 uses the Universal MIDI Packet (UMP) as its data format. Whereas the bytes in MIDI 1.0 were just that continuous stream (still packetized into 32 bit packets on USB, but acted like a stream), the packet format in MIDI 2.0 has discrete sized messages, including SysEx, that range from 32 bits to 128 bits in size. This fundamentally changes API design and message handling.
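To make the "discrete sized messages" point concrete, here's a minimal sketch of how a receiver can tell how long a UMP is just by looking at its first 32-bit word. This is illustration only, not code from Windows MIDI Services: the function name `ump_word_count` is made up, and the size table reflects my reading of the Message Type field in the UMP specification, so treat the spec itself as authoritative.

```cpp
#include <cstdint>

// Hypothetical helper (not part of any Windows MIDI Services API).
// Returns the number of 32-bit words in a UMP, based on the Message
// Type (MT) field held in the top 4 bits of the first word.
constexpr int ump_word_count(std::uint32_t first_word) {
    switch (first_word >> 28) {
        case 0x0: case 0x1: case 0x2: case 0x6: case 0x7:
            return 1;  // 32 bits, e.g. MT 0x2 = MIDI 1.0 channel voice
        case 0x3: case 0x4: case 0x8: case 0x9: case 0xA:
            return 2;  // 64 bits, e.g. MT 0x3 = 7-bit SysEx, MT 0x4 = MIDI 2.0 channel voice
        case 0xB: case 0xC:
            return 3;  // 96 bits (reserved message types)
        default:
            return 4;  // 128 bits, e.g. MT 0x5 = 8-bit data messages
    }
}

// A MIDI 1.0 note-on carried in a UMP is a single word.
static_assert(ump_word_count(0x20903C64u) == 1, "MT 0x2 is 32 bits");
// A MIDI 2.0 note-on takes two words.
static_assert(ump_word_count(0x40903C00u) == 2, "MT 0x4 is 64 bits");
```

Because the size is known up front, a parser never has to scan for a terminating byte the way a byte stream parser does for SysEx.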

There are documented rules for converting between the MIDI 1.0 byte stream data format and MIDI 2.0 UMPs and between MIDI 2.0 protocol messages and MIDI 1.0 protocol messages. We’re following those, and even using code from the MIDI Association in our own implementation to ensure we stay compatible. That code is currently available to members, and will be public when the specifications are published.

Protocol vs Data Format

The term “MIDI 2.0” is contextually overloaded as it covers a related suite of technologies. At the highest level, it refers to a body of work including MIDI CI, the MIDI 2.0 Protocol over UMP, and MIDI 1.0 Protocol over UMP (for backwards compatibility). But we want to clarify here that there’s a difference between the protocol and the data format.

- MIDI 1.0 byte stream data format can only carry MIDI 1.0 protocol messages

- MIDI 2.0 UMP data format can carry MIDI 2.0 protocol as well as MIDI 1.0 protocol messages, repackaged as UMPs. The UMP specification defines both MIDI 1.0 and MIDI 2.0 protocol UMP messages. For the most part, when it comes to the OS implementation, it’s just easier to think of MIDI 2.0 as being the new umbrella that covers everything including MIDI 1.0.

Windows MIDI Services uses UMP as our data format regardless of the origin of the data. When a MIDI 1.0 data format device is connected to Windows, we convert that stream to/from UMP to surface it to the API. In this way, we have uniform handling of all data (which also makes plugin development easier) and new applications only need to deal with one data format.

This is possible because the MIDI 2.0 UMP Specification calls out both the support for existing MIDI 1.0 protocol messages in UMP, as well as rules by which they are converted.
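As a rough illustration of what that repackaging looks like for the simplest case, here's a sketch that wraps one MIDI 1.0 channel voice message in a 32-bit UMP. The function name is hypothetical, and this is not the MIDI Association conversion code mentioned above. It assumes the message has already been parsed out of the byte stream (running status resolved, SysEx handled elsewhere) and only shows the bit layout as I understand it from the UMP specification.

```cpp
#include <cstdint>

// Hypothetical helper: packs a complete MIDI 1.0 channel voice message
// into a single UMP word with Message Type 0x2.
// Layout (most significant bits first):
//   [MT = 0x2 : 4][group : 4][status : 8][data1 : 8][data2 : 8]
// For two-byte messages (program change, channel pressure) pass 0 as data2.
constexpr std::uint32_t midi1_channel_voice_to_ump(std::uint8_t group,
                                                   std::uint8_t status,
                                                   std::uint8_t data1,
                                                   std::uint8_t data2) {
    return (0x2u << 28)
         | ((group & 0x0Fu) << 24)         // groups are 0..15
         | (std::uint32_t{status} << 16)   // status byte, channel included
         | ((data1 & 0x7Fu) << 8)          // 7-bit data bytes
         | (data2 & 0x7Fu);
}

// Note-on, channel 1, middle C (60), velocity 100, on the first group.
static_assert(midi1_channel_voice_to_ump(0, 0x90, 60, 100) == 0x20903C64u,
              "note-on becomes one UMP word");
```

Going the other way for this message type is just the reverse: drop the top byte and emit the remaining bytes in order.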

UMP Endpoint vs Port

Much of the communication in MIDI 2.0 is bidirectional, not just unidirectional. So, whenever you see “UMP Endpoint” here, think “two MIDI ports making an inseparable in/out pair”, if you want to easily understand what we’re saying here. That’s not an exact equivalence, but it’s conceptually close enough.

- A UMP Endpoint is a bi-directional (usually) stream for sending/receiving UMP messages to a single device. A UMP Endpoint may have 16 groups (16 in and 16 out max) of data, each of which can have 16 channels of information.

- A Port is a MIDI 1.0 uni-directional connection supplied by a single device. It can handle, at most, 16 channels of information in a single direction. It’s common for a device to offer more than one MIDI 1.0 Port.

Behind the scenes, USB MIDI 1.0 and USB MIDI 2.0 aren’t quite as different as you may imagine from the above. On Windows, USB MIDI 1.0 Cable numbers became logical Ports. Those cable numbers are quite similar to group numbers in MIDI 2.0. When we send a packet of USB MIDI 1.0 information to a USB MIDI 1.0 device, we have to chunk it into USB packets, each of which includes the cable number. In MIDI 2.0, the group is now an explicit part of the message, so it will work the same way across any transport. Because the group is part of the UMP itself, it doesn’t make sense to move that out into a port construct, and deal with the potential of conflicting group numbers in the UMPs. This approach is one of the big things the API working group discussed with the manufacturers in Japan, in fact.
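Here's a small sketch of that similarity. In a USB MIDI 1.0 event packet, the cable number travels in the 4-byte USB packet header, outside the MIDI message. In a UMP, the group is a field of the message itself. The helper names are made up for illustration, and the bit positions are my reading of the USB MIDI 1.0 and UMP specifications.

```cpp
#include <cstdint>

// Hypothetical helpers, for illustration only.

// USB MIDI 1.0: byte 0 of each 4-byte event packet holds the cable
// number in its high nibble and the Code Index Number in its low nibble.
constexpr unsigned usb_midi1_cable_number(std::uint8_t packet_byte0) {
    return packet_byte0 >> 4;
}

// UMP: the group lives in bits 24..27 of the first word of the message.
// Assumption: the caller only passes message types that carry a group
// (some utility-style message types do not use this field).
constexpr unsigned ump_group(std::uint32_t first_word) {
    return (first_word >> 24) & 0x0Fu;
}

// The same note-on addressed to "cable 2" and to "group 2".
static_assert(usb_midi1_cable_number(0x29) == 2, "cable comes from the USB header");
static_assert(ump_group(0x22903C64u) == 2, "group comes from the message itself");
```

Since the group rides inside the message, nothing changes when the same UMP goes over a different transport.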

Where that becomes more interesting is how we deal with backwards compatibility with our existing WinMM and WinRT APIs. If you connect a MIDI 2.0 device to Windows and want to access it through a MIDI 1.0 API for backwards compatibility:

- We treat the MIDI 2.0 group like a Cable number in MIDI 1.0, and map each group to a MIDI 1.0 port. So a single UMP endpoint with 5 active input groups and 5 active output groups will appear as 10 different MIDI 1.0 ports (5 inputs, 5 outputs) to the WinMM and WinRT APIs, as expected.

- We translate to/from the MIDI 1.0 data format at the different abstraction layers around the service. For a MIDI 1.0 device used by a MIDI 1.0 API, there’s an additional transformation in the abstraction design so we can ensure the service deals only with UMPs (especially important for plugins and more). Given the additional speed afforded by the new buffer approach, this should be fine in practice. For a MIDI 2.0 device, there’s only the single translation at the abstraction layer connected to the WinMM and WinRT APIs.
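The group-to-port mapping in the first bullet can be sketched like this. It's a simplified model, not the service's actual code: the idea of describing active groups as 16-bit masks and all of the names here are assumptions made for the example.

```cpp
#include <cstdint>

// Hypothetical model: bit N of a mask is set when group N is active.

// Counts active groups, which is the number of MIDI 1.0 ports
// a legacy API would see for that direction.
constexpr int legacy_port_count(std::uint16_t active_groups) {
    int count = 0;
    while (active_groups != 0) {
        count += active_groups & 1;
        active_groups >>= 1;
    }
    return count;
}

// Returns the group behind the Nth legacy port (0-based), or -1 if
// there is no such port.
constexpr int group_for_legacy_port(std::uint16_t active_groups, int port_index) {
    for (int group = 0; group < 16; ++group) {
        if ((active_groups >> group) & 1) {
            if (port_index == 0) return group;
            --port_index;
        }
    }
    return -1;
}

// One endpoint with 5 active input groups and 5 active output groups
// shows up as 10 MIDI 1.0 ports.
static_assert(legacy_port_count(0x001F) + legacy_port_count(0x001F) == 10,
              "5 inputs + 5 outputs");
```

The active groups don't need to be contiguous. With groups 0, 2, and 4 active (mask `0x0015`), the second legacy port maps to group 2.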

Other than when required for byte stream/UMP translation due to the device type, MIDI 1.0 <-> MIDI 2.0 protocol message translation is optional and handled through the SDK with explicit calls from the app, rather than enforced in the service. This is another topic we discussed in the Tokyo meeting. We will revisit this decision in the future if needed, but we’re erring on the side of app control here. This comes into play when a MIDI 2.0 device has a block which indicates MIDI 1.0 level functionality, requiring MIDI 1.0 UMP messages instead of MIDI 2.0 UMP messages.
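To give a feel for what protocol translation involves, here's a sketch of one piece of it: widening a 7-bit MIDI 1.0 value (such as velocity) to the 16-bit resolution used in MIDI 2.0 messages. This follows the min-center-max style of bit-repeat scaling as I recall it from the MIDI 2.0 translation rules. It is not the SDK's implementation, the function name is hypothetical, and the published specification is the authority on the exact rules (including special cases such as velocity 0).

```cpp
#include <cstdint>

// Hypothetical helper: scales src_value from src_bits of resolution up
// to dst_bits so that minimum maps to minimum, center to center, and
// maximum to maximum. Assumes 2 <= src_bits < dst_bits <= 32.
constexpr std::uint32_t scale_up(std::uint32_t src_value,
                                 unsigned src_bits,
                                 unsigned dst_bits) {
    const unsigned scale_bits = dst_bits - src_bits;
    std::uint32_t result = src_value << scale_bits;
    const std::uint32_t src_center = 1u << (src_bits - 1);
    if (src_value <= src_center) {
        return result;  // lower half and center: a plain shift is enough
    }
    // Upper half: repeat the low bits of the source into the bits the
    // shift left empty, so the maximum input reaches the maximum output.
    const unsigned repeat_bits = src_bits - 1;
    std::uint32_t repeat_value = src_value & ((1u << repeat_bits) - 1);
    if (scale_bits > repeat_bits) {
        repeat_value <<= (scale_bits - repeat_bits);
    } else {
        repeat_value >>= (repeat_bits - scale_bits);
    }
    while (repeat_value != 0) {
        result |= repeat_value;
        repeat_value >>= repeat_bits;
    }
    return result;
}

static_assert(scale_up(0, 7, 16) == 0x0000u, "minimum stays minimum");
static_assert(scale_up(64, 7, 16) == 0x8000u, "center stays center");
static_assert(scale_up(127, 7, 16) == 0xFFFFu, "maximum reaches maximum");
```

A plain left shift would turn 127 into `0xFE00`, which never reaches full scale. That kind of detail is why an app may want to control when and how translation happens.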

Of course, we’ll let applications know about the device they’re talking with, the block information, the protocol, and the transport, so they can make intelligent decisions about any translation.

Windows MIDI Services Update

Now that we’ve covered what’s happening with MIDI 2.0 all-up, and covered a few key concepts, let’s talk more about the Windows implementation.

What we’ve been up to

As mentioned above, the repo is still private until the final AMEI vote and approval, and then the publishing of the specifications on midi.org. But we haven’t been sitting still. We’ve been working on various aspects of Windows MIDI Services. Much of that work is internal infrastructure decisions and changes to support the driver. Other work is on the driver itself, the services, API and SDK, tools, and prototypes.

Let’s set expectations: There are no usable builds for developers or users just yet. That will come later this summer and fall. When you look at the repo today, you’ll see some code, but not enough to really dive into yourself. Don’t be disappointed. Being at this stage on the public code is good, because it means we are still early enough in the implementation to be more open to feedback and suggestions.

The Team

I saw it suggested in a YouTube video that I am the only person working on this project. I’m flattered, but it’s incorrect. I just happen to make myself visible, because I’ve been in a lot of community-focused roles at Microsoft. There’s much more work here than any one person can do, especially when it comes to infrastructure and design. Here’s a bit more information about the team.

The AmeNote team is working on the USB MIDI driver which handles USB MIDI 1.0 and USB MIDI 2.0 devices. They are an independent team, but working closely with us. We have weekly sync meetings as well as other touchpoints as needed. We’re also in the same working groups in the MIDI Association.

The SiGMa (Silicon, Graphics, and Media) team in Windows is working on the infrastructure for the driver, service, configuration, and more, and on the Windows Service implementation based on their expertise with the Audio service. That includes the buffer mechanisms for speaking to the driver, and then the cross-process buffer implementation for communication with the API. This work isn’t as visible, but it couldn’t possibly be more important. It’s the heart of Windows MIDI Services. The prototyping and measuring of the high-performance buffers (with a lot of testing to prove them out) for MIDI is also key to project success. This all builds upon expertise and best practices that team brings to the project.

I am working on tools, SDK, and API, and with the developer and musician communities. That may sound like a lot, but the API is really just a pipe into the service, and the SDK is the app-focused friendly entry point into the API. The SDK itself is using lessons learned from the bazillion MIDI 2.0 prototypes I’ve built since 2019. I’m also working on some new prototypes, like Network MIDI 2.0. The tools are just the settings app right now, and are super fun to work on. I’ve had a request from the DAW companies to provide a command-line tool for a couple key settings app features (like a dump of the system for support), and so will add that as well as part of the command-line app complement to the settings app.

Development Styles and Approaches

We (the sub-teams) have different development approaches, which is why you’ll see different styles of activity in GitHub. Each team is encouraged to work in the way that works best for them. For example, the SiGMa team has internal development, build, validation, and testing infrastructure that is specifically designed to keep a high quality bar for anything that will ship in-box in Windows. So the Service and related abstraction layers and components are developed there, and snapshots are then put on GitHub. We’ve discussed accepting PRs and other details, and I’m confident the team will be agile there. For the SDK, tools, and more, I prefer to work directly with the GitHub repo as that suits my style of work, and will also make open collaboration with others in the community even easier. So you’ll see more activity there. For AmeNote, they are working on the USB driver code in their own environment and also putting snapshots on GitHub. But once the driver code meets certain milestones, it will be a GitHub-first project. Finally, prototype work that is covered under MIDI Association NDA because the specification is not yet published (for example, the Network MIDI 2.0 prototype work) is not in the repo.

You’ll also see different styles of coding in the repo for different component parts. Within any given subproject, we’ll stick to what is more efficient and standard for that project. For example, the service code follows patterns long-established for Windows Services inside Microsoft, and also builds on regular COM rather than WinRT, because that’s the right approach for the service and is in-line with our internal guidelines. The driver code follows its own approaches, dictated largely by the constraints of kernel mode development (for example, only a small part of the standard library is available in kernel mode). This is all good. We’re not dictating a single coding style across the entire Windows MIDI Services.

In the end, the only code we expect to remain closed-source is the work we’ve had to do to run all this on Windows (USB stack, DDI, etc.). Everything else is intentionally open source and permissively licensed, including the work that is being mirrored from our internal repo. We are open by default on this project, and everything in the repo is buildable using available tools.

Language and Framework

New general purpose APIs in Windows are broadly required to be provided as WinRT, to maximize the languages they are available to. This doesn’t mean they are restricted to Store apps, or are sandboxed in some way. WinRT is a richer and modern version of COM, with rules around it for how it works with applications. It is neither garbage collected, nor does it rely on a runtime like .NET, so it is appropriate for performance-sensitive APIs and languages/frameworks which wouldn’t normally carry a runtime with their deployment. One nice thing about WinRT is that we can provide projections to support C++, C#/.NET, JavaScript, Rust, and more.

C++/WinRT is not C++/CX

Anyone who worked with WinRT from C++ just a few years ago may recall the language extensions like the caret. C++ developers used to working within standards, with tools and build environments set up for that, preferred a more standards-based approach. C++/WinRT is a header-based standard approach for C++ 17+. The documentation is under the “UWP” folder in MS Learn due to how it started, but it’s available to desktop apps as well.

Although I am reasonably competent in C++ and C++/WinRT (and always learning, so when you see something we can do better, be sure to constructively let us know and/or submit a PR), and despite C++ being the first language I ever developed anything in professionally back in the early 90s, I’ve spent the last two decades+ working primarily in C#.

I also wanted to ensure our healthy C# development community could participate in the project, and that we could build upon the wealth of tools, libraries, and more available to C# developers. So tools and apps are all C# and follow the coding styles I’ve used there. Plus, my team is good friends with the WinUI team, and actually includes the Community Toolkit leads, so we have a ton of expertise there.

The API and SDK are both C++/WinRT, but you can smell a bit of C# in how they are structured and formatted. As much as I love C#, C++/WinRT is the right language there because of the required Arm64EC support, and how it doesn’t carry any real runtime dependency.

What about Rust? I investigated using Rust (and rs/WinRT) for creating the SDK and API. It’s a great language and our WinRT support now enables authoring components. The one place where it falls down right now is there’s no direct support for Arm64EC. Arm64EC is important for our music application developers, and is only implemented in C++ today. That said, we’ll ensure application developers using Rust can use the API and SDK. We’re just not authoring in Rust for this project.

MIDI Services, API, and SDK

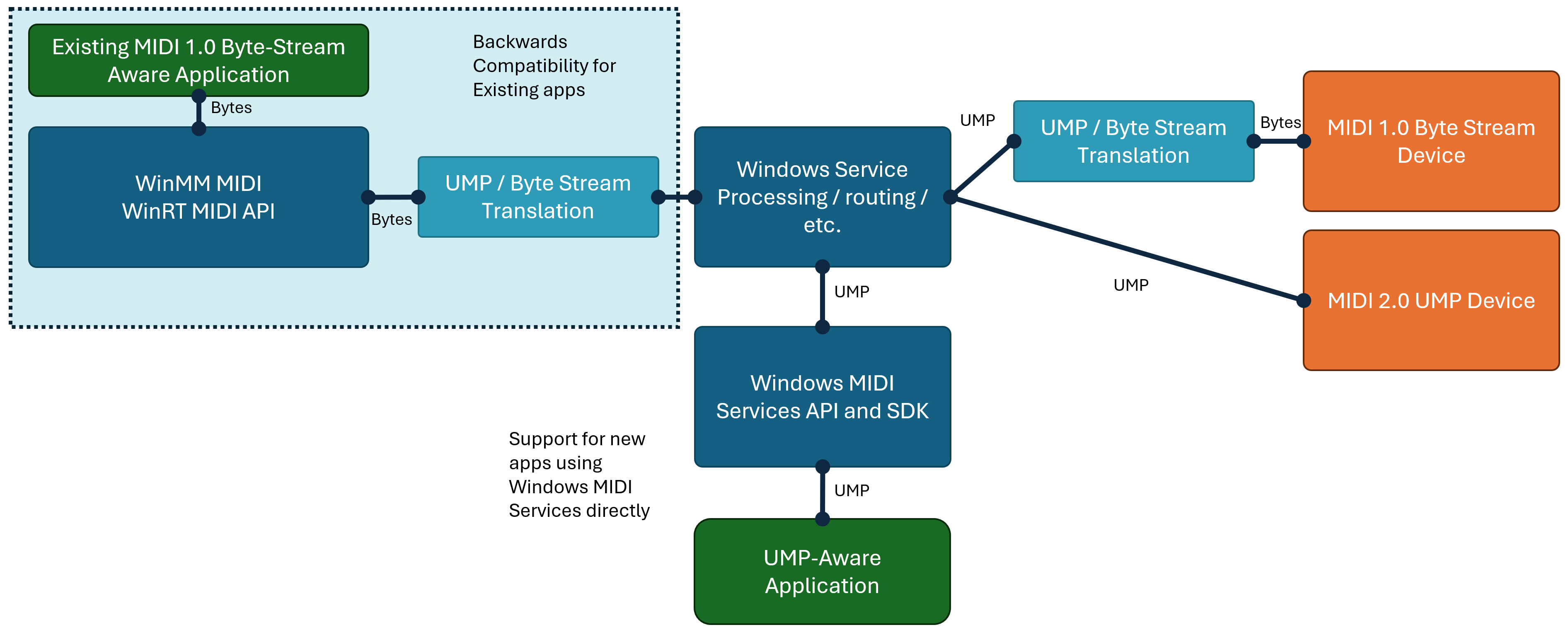

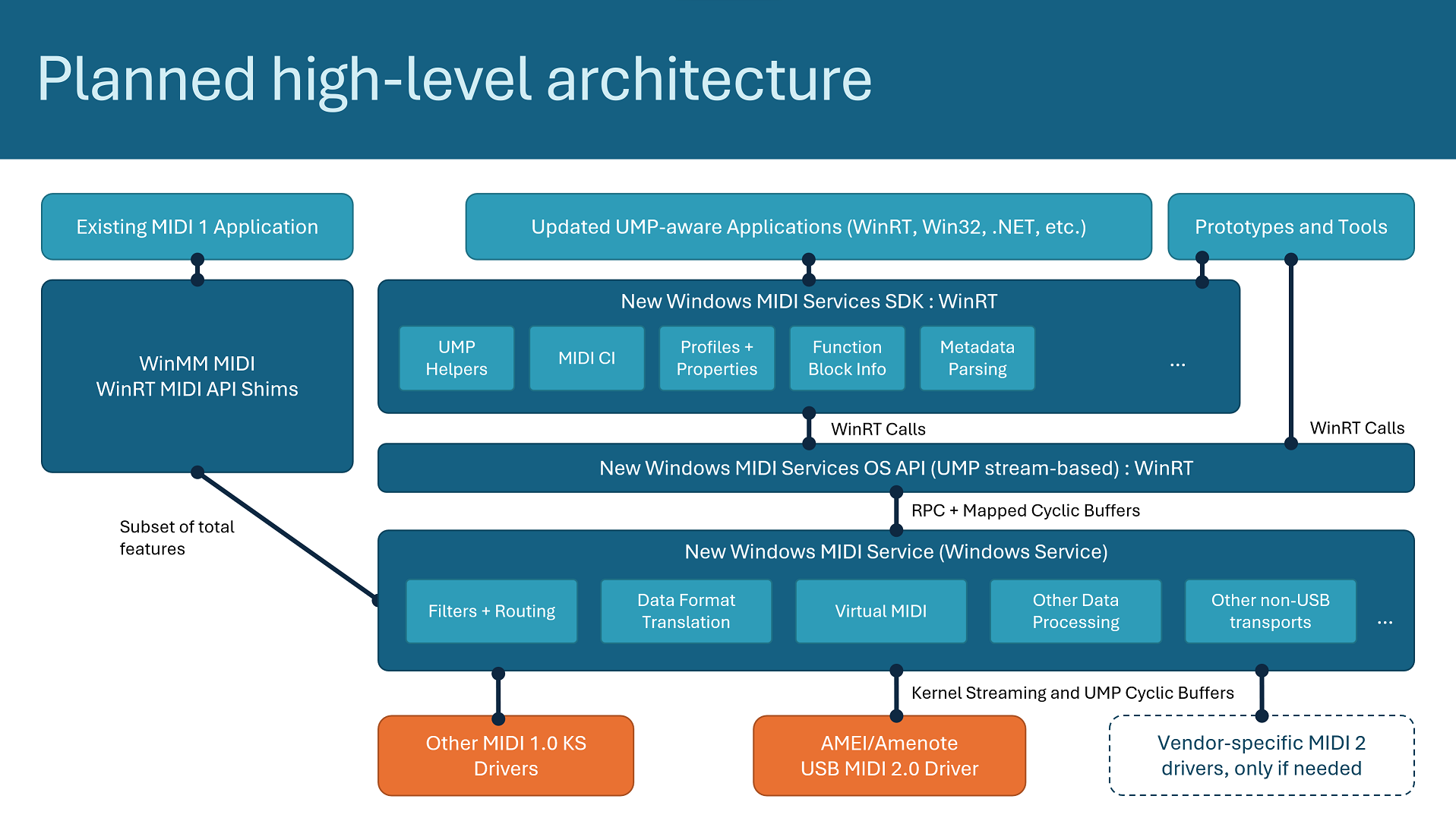

MIDI 1.0 on Windows today is essentially a thin API over the drivers. It’s difficult to expand or offer any new features without creating a new kernel mode driver for each thing, and even then, the constraints are significant. The new version is based on a Windows service, and uses transport and feature plugins when no driver is technically required. (When you consider USB, Network, BLE, Virtual, and even Serial, the only one which technically requires a kernel driver is USB. The others are drivers today due to constraints in our existing design.) Here’s the current design we’re working towards:

Here are just a few important points about the design:

- Not everything here will be delivered in the first release. We are focusing on performance and compatibility, with USB as the first transport.

- The API, service, driver, etc. will not ship in Windows right away. We want to give it a little shake-out time to make sure that it is compatible, stable, and performant before we ship it in-box. We will work closely with our app and hardware partners to help ensure that process is easy to navigate, friendly to users, and provides a great experience. We’ll also work to get this all in-box as soon as it’s ready and we’re sure we have everything in the API that is needed without having to break compatibility in an update.

- When Windows MIDI Services is installed on a PC, we replace parts of the existing API infrastructure to route WinMM and WinRT MIDI 1.0 through the new service and driver. This provides MIDI 1.0-level access to devices for backwards compatibility with existing apps. It also requires a lot of testing in real-world scenarios.

- For developers, the new API/SDK is the best way to have full MIDI 1.0 and MIDI 2.0 functionality in an app. The new Windows MIDI Services provides everything the old APIs provide, and much more.

- We recommend that all apps use the app SDK, and not the API directly. The API is designed to not break with changes to the MIDI 2.0 standard updates (new messages, etc.), and new transports, so tends to be very loosely typed and not super friendly in spots. This is by design so we can grow and not be stuck in place for 30 years. The SDK wraps all that, and moves with the speed of MIDI. The API will eventually ship with Windows, and the SDK will be available separately.

- The new MIDI 2.0 API/SDK is multi-client, meaning multiple applications can connect to a single device. This is one of the benefits of a service-based approach. This will also bring multi-client to the WinMM and WinRT APIs over time, but not necessarily on day one.

- Apps will be able to use virtual devices/UMP Endpoints to talk to each other

- The transport and other plugin APIs will be open source, available to third-parties to develop and prototype

- Our MIDI Abstraction Layer will be able to interface with the existing kernel-mode MIDI 1.0 drivers that WinMM and WinRT MIDI are able to use today.

- For support, we’re currently targeting the last public release of Windows 10 for as long as it is supported, and current supported public releases of Windows 11. This is a bit of extra work because we have to have some of the USB and related changes put into our servicing channels, but we’re working on it. That said, please use Windows 11. 🙂

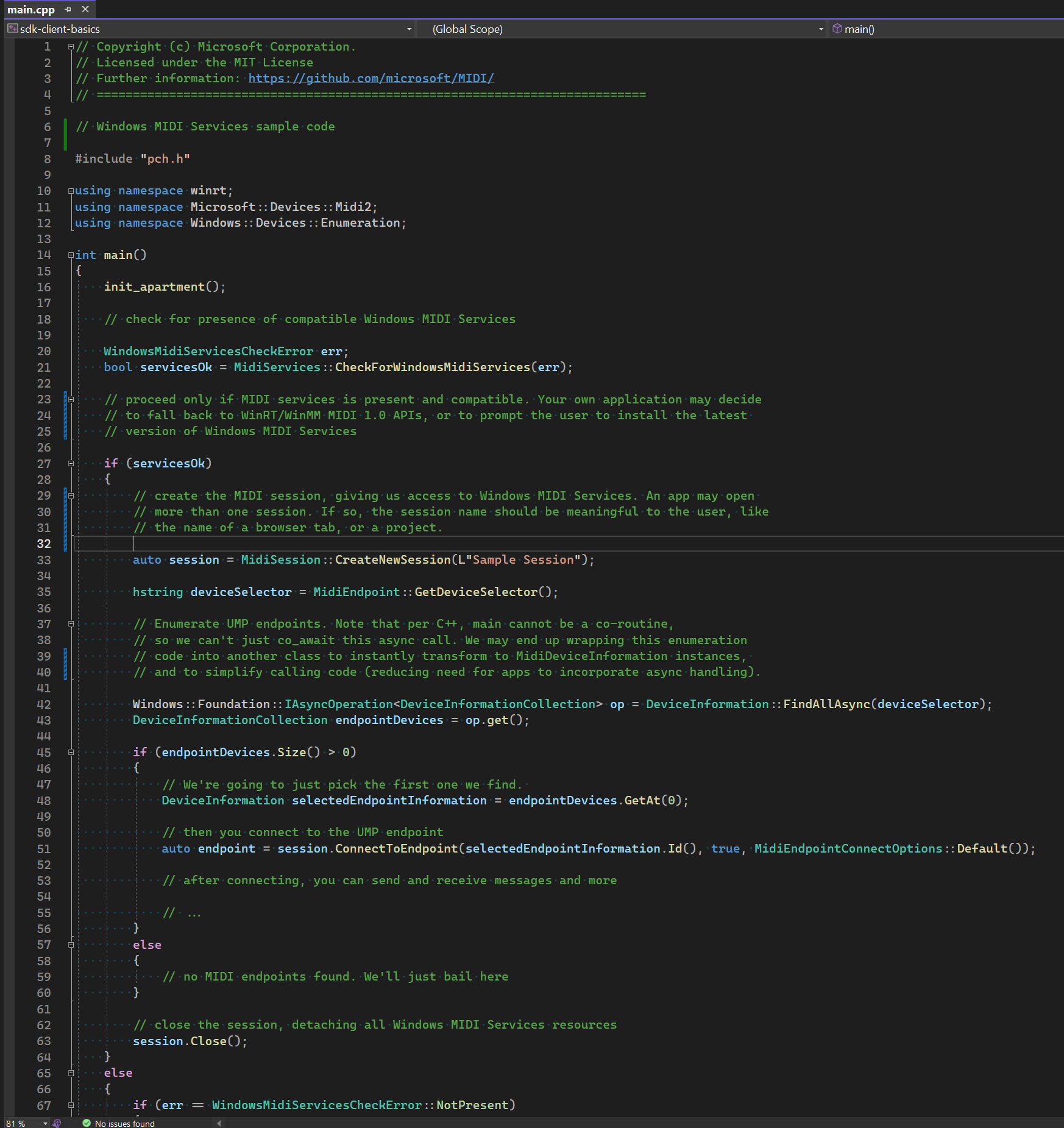

Along with the SDK we are providing sample code. Initially this will be for C++ and possibly C#. Here’s an example of the type of samples we’ll have:

This, and the related documentation, will eventually be published in Microsoft Learn, where our other APIs and concepts are documented. But we’re prioritizing sample code to get developers started.

USB MIDI 2.0 Driver

At the AMEI meeting in Tokyo, we had an early development version of our USB MIDI 2.0 class driver which we used to verify that different devices were able to connect to Windows and be identified correctly as MIDI 2.0 devices. This was a big milestone for us because we had some challenges getting this ready in Windows without breaking anything else out there today. Part of that was because our MIDI 1.0 driver is actually part of our USB Audio 1.0 driver, and we’re only replacing the MIDI 1.0 functionality in that with the new driver. Another is that our USB Audio 2.0 driver incorrectly claims MIDI 2.0 for itself in the descriptors, following the pattern of the USB Audio 1.0 / MIDI 1.0 driver. This is a bug that wasn’t discovered until this project, so we’ve had to make some changes there. Finally, MIDI 2.0 requires changes to the device driver interface because of both the bi-directional endpoint nature, and the change in data format from a byte stream to the Universal MIDI Packet (UMP). Most of those changes are things we’ve had to bake into Windows.

The released version of the kernel-mode driver is planned to use our ACX (audio class extensions), with changes to handle MIDI, and the high speed buffer implementation from SiGMa. Together, they enable better MIDI throughput, easier debugging, and better power management than we’ve had in the past.

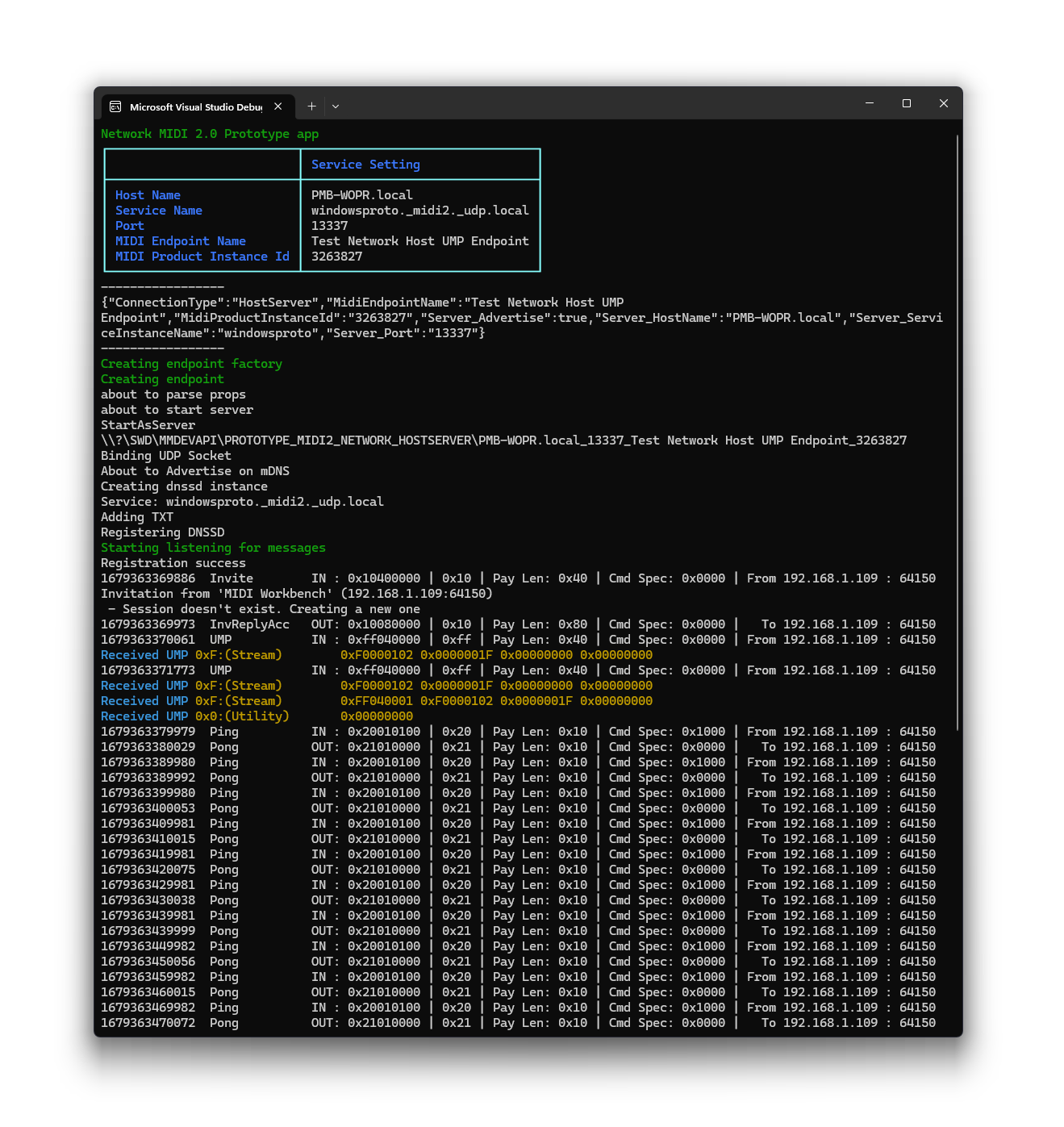

Network MIDI 2.0 Prototype and the Settings app

As much effort as we need to spend on USB, I’m more excited for Network MIDI 2.0. Networking has come a long way since the rtpMIDI days, as has Windows support for everything that’s required to make it work.

I spent much of the first half of this year working on the Network MIDI 2.0 prototype. My goals here were three-fold:

- Ensure that the specification we’re working on in the MIDI Association will function on Windows without third-party applications or drivers. In this case, it’s all user-mode code, and we are able to use mDNS natively on Windows.

- Provide a second transport to help validate some of the service abstraction layers.

- Provide a source of MIDI 2.0 data that can be used to build out the settings app traffic monitor

The initial prototype of the Network MIDI Transport was C#/WinRT, then moved to a C# console app driving a C++/WinRT Component.

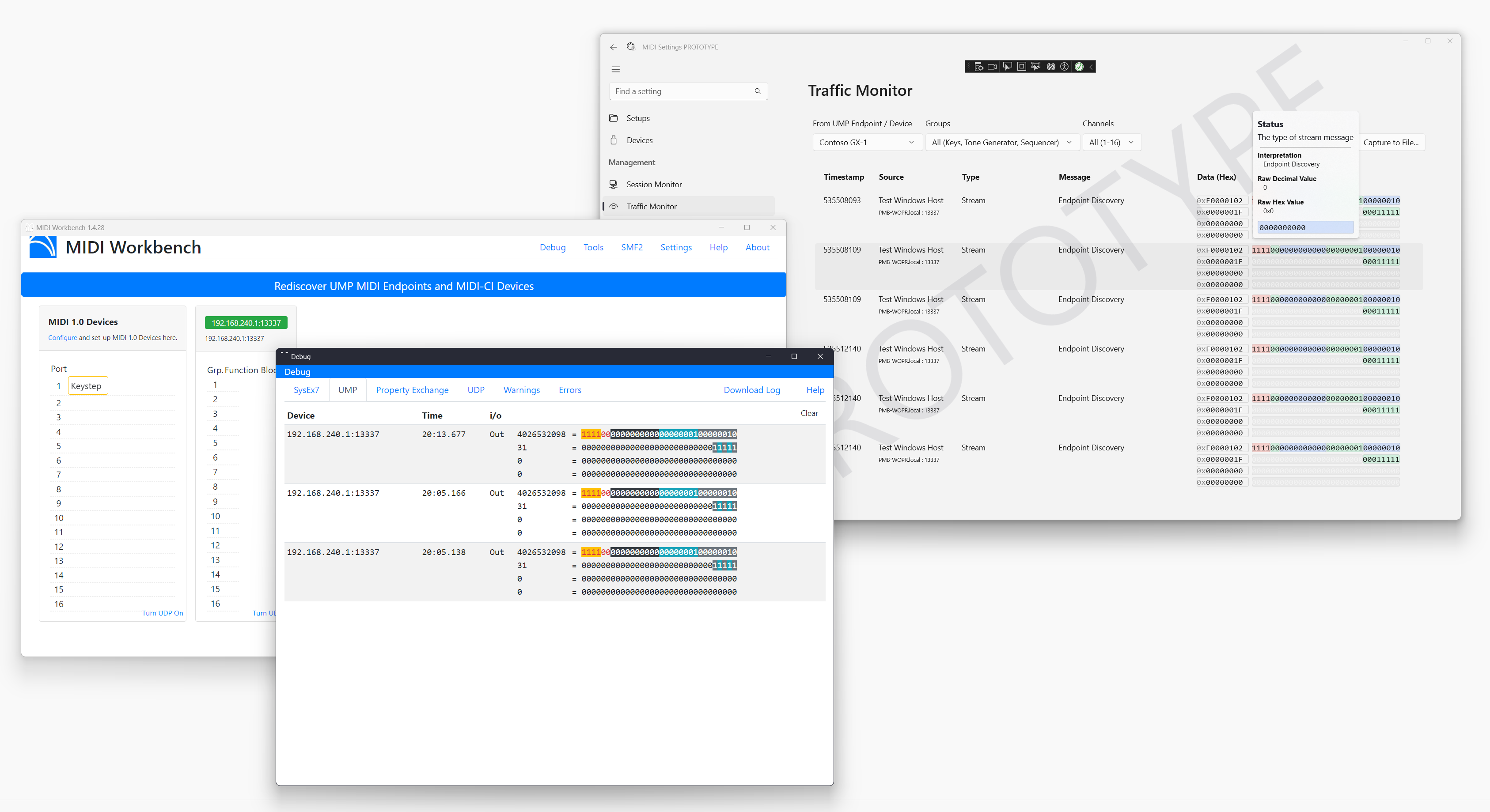

From there, it progressed, for more compelling demo purposes and to also exercise aspects of the settings app, into using the C++/WinRT component directly from the Settings prototype app, without any service or other infrastructure. (This is why it was refactored to C++/WinRT anyway).

In that shot, you can see the settings app at the top right, talking to the network MIDI transport. In this case, it’s communicating with the MIDI Workbench app on the same PC, although I also tested it across other PCs on the network.

A good chunk of this C++ code will make it into the Transport Plugin we’ll integrate with the Windows service once the Network MIDI transport spec is finalized. The Network MIDI 2.0 standard is a really good one, and is simpler than RTP MIDI, so we’re looking forward to having that as part of the platform.

BLE MIDI 1.0

I’ve been in communication with some folks in the community who have pointed out some issues with our Bluetooth MIDI 1.0 implementation. We have some bugs there, and the BLE MIDI 1.0 spec is actually quite lean on details, so there are simply some incompatibilities we need to sort through.

Given that our BLE MIDI 1.0 implementation in Windows is tightly integrated into the WinRT MIDI stack today, and has a number of bugs, the approach we’re going to take going forward here is twofold:

- Existing MIDI 1.0 BLE drivers from third parties are expected to work with Windows MIDI Services, but are not the long-term solution. We love these products and the developers who create them. We just want to make it as easy as possible for our customers going forward, and also build upon this code if/when a BLE MIDI 2.0 transport standard comes out.

- We’re implementing a new clean-room version of BLE MIDI 1.0 in the repo, in the open, after our initial Windows MIDI Services release. This will be a user-mode transport plugin like the others, with no need for a driver.

Why are we re-implementing it? Well, we have additional BLE APIs available to us which weren’t available back then, and the overall structure will be different given that this will be a plugin rather than code inside WinRT MIDI 1.0. There’s also redundant code in our existing implementation that is now common, in our service, across all transports. Given those changes, it just doesn’t make sense for us to go through all the reviews and changes required to open source the existing internal codebase. Stay tuned for that work to start showing up in the repo once our initial work on the new Windows MIDI Services is stable.

Note that there is no BLE MIDI 2.0 specification at this time.

Virtual MIDI 2.0

Virtual MIDI for Windows MIDI Services will be coming after the initial release as well. I’m particularly excited about this one because app-to-app MIDI on Windows, without requiring an additional driver, is something we’ve really wanted to do for a while now.

Edit: Based on developer feedback (Thanks Giel!), I’m working on getting a simple MIDI loopback device in here for release so it can be used for testing. It will be a pair of bi-directional UMP Endpoints wired together so they can be used to test your own send/receive MIDI 2.0 capabilities in your apps. Can’t promise 100% just yet, but I’ve bumped it up in the priority list. It will be useful to many of us in any case.
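
As a rough picture of what “a pair of bi-directional UMP Endpoints wired together” means, here is a small in-memory sketch. Everything in it (the `LoopbackEndpoint` class, its methods, and the example function) is hypothetical and only illustrates the wiring idea; it is not the Windows MIDI Services API, which has not been published yet.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical in-memory endpoint used only to illustrate loopback wiring.
class LoopbackEndpoint
{
public:
    // A UMP is one to four 32-bit words; a vector keeps the sketch simple.
    using Handler = std::function<void(const std::vector<std::uint32_t>&)>;

    // Registers the callback invoked when the peer endpoint sends a packet.
    void OnReceive(Handler handler) { m_handler = std::move(handler); }

    // Sends one packet; it arrives on the peer's receive callback, if any.
    void Send(const std::vector<std::uint32_t>& words)
    {
        if (m_peer != nullptr && m_peer->m_handler)
        {
            m_peer->m_handler(words);
        }
    }

    // Wires two endpoints together so each one's output is the other's input.
    static void Connect(LoopbackEndpoint& a, LoopbackEndpoint& b)
    {
        a.m_peer = &b;
        b.m_peer = &a;
    }

private:
    LoopbackEndpoint* m_peer = nullptr;
    Handler m_handler;
};

// Example: whatever is sent on endpoint A shows up on endpoint B, and not on A.
inline void LoopbackExample()
{
    LoopbackEndpoint a;
    LoopbackEndpoint b;
    LoopbackEndpoint::Connect(a, b);

    std::vector<std::uint32_t> receivedOnA;
    std::vector<std::uint32_t> receivedOnB;
    a.OnReceive([&](const std::vector<std::uint32_t>& w) { receivedOnA = w; });
    b.OnReceive([&](const std::vector<std::uint32_t>& w) { receivedOnB = w; });

    a.Send({0x20903C64u}); // a MIDI 1.0 Note On wrapped in a single UMP word

    assert(receivedOnB.size() == 1 && receivedOnB[0] == 0x20903C64u);
    assert(receivedOnA.empty());
}
```

An app under test would open one endpoint of the pair for sending and the other for receiving, which lets it exercise its own send and receive paths without any hardware attached.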

Others

We’ll work with Web MIDI folks, middleware developers like JUCE, and others to incorporate the changes here and make it easy for everyone to use with their existing tools and frameworks.

What Next?

The next big step is the repo opening up to the public once the specifications are published. After that, we’ll continue to be hyper-focused on a stable initial version of the new Class Driver, Service, API, and SDK. Initial versions of the tools, additional transports as discussed, and more will start to flow out after that point as well. Our initial vision and planned feature set haven’t changed.

This may read like a stock blog post “generate engagement/interaction CTA” footer, but I’m truly interested in what you all are interested in learning more about. MIDI 2.0 is new to most people, and I know there are questions. Are there aspects of MIDI 2.0 and/or Windows MIDI Services that you are particularly interested in hearing more about? Something you want a deeper dive on? Let me know in the comments.

We’re really excited about what this will bring to musicians and other MIDI users on Windows. Thanks for joining us as we continue on our way to a more open, agile, and feature-rich MIDI stack on Windows.

I can’t wait for the midi 2.0 revolution! Let’s get a new synth engine for the playback of midi2 files!

How will legacy C applications be able to link with the MIDI 2.0 API/services? Will tools and/or a manual be included in the SDK?

C++ apps can use C++/WinRT, a header-only C++ interop approach that works for both UWP and Desktop applications, to talk to the API. C apps will need to incorporate some C++ to do this.

You can learn more here:

https://learn.microsoft.com/windows/uwp/cpp-and-winrt-apis/intro-to-using-cpp-with-winrt

Pete

I really have nothing to add here, but I wanted to say how much I love those pictures! ^_^