MIDI @ 40 and the new Windows MIDI Services

The 40th anniversary of MIDI is coming up, and here at Microsoft, we’re working on our MIDI 2.0 implementation and stack updates with some help from AMEI and AmeNote. With all of this in progress, it’s a good time to take a look back at some personal history with MIDI, and prepare for what the future will bring.

Warning: The first half of this post is going to be more of a personal retrospective than a product or project focus. If you want to skip all that, feel free to head down to the bottom of the post, where there’s a brief intro to the new Windows MIDI Services and a pointer to the ADC session. Or just wait for the next posts, where I’ll share more technical details. :)

My personal history with MIDI

Back in the second half of the 80s, I got my first “real” synthesizer: a Roland HS-60. The HS-60 was a Juno 106 inside, with all the same electronics, but with speakers and front panel ink designed more for the home organ market. This was affordable by a teenager working for minimum wage at a grocery store because no one wanted analog synths back in the era of the Roland D-50 and Yamaha DX-7 (and shortly after, the Korg M1). The speakers, and the audio input on the HS-60, were especially useful because I didn’t have anything more than a cheap cassette radio boom box for audio in my room.

Within a year either direction, a few other key things happened:

- I got my first computer, a Commodore 128 (ok, it was for “the whole family” but I was the only one really interested in it, and it wasn’t long before I completely bogarted it and then moved it out of the family room and into my own room). This was the biggest Christmas present my parents had ever purchased, and I am forever grateful for the sacrifices they made to make that happen. They even knew I was a present snoop, so my dad kept it at my Nana’s place on the Cape and drove the 3 hours each way on Christmas Eve to go get it.

- As a result of the HS-60 purchase, I landed a job at a small music store in my town, tuning guitars, selling Roland, Korg, and Casio keyboards, and minding the register.

- Finally, I was able to borrow (yes, legitimately borrow) from my small high school, during breaks, a Yamaha DX100, a SIEL EX-600 expander, and an Akai 12-bit sampler with a Quick Disk drive. That, and a DX-7, was pretty much all they had for electronic music, and almost no one was into anything other than the DX-7. Rather than let them sit unused, the music director was happy to let me borrow them.

That all led me to discover the power of MIDI. First to link together the different synthesizers to stack the sound, for the ubiquitous “percussive plus pad” sound that was so popular back then, and later, through my at-cost purchasing power from working at the music store, to sequence through a Passport MIDI interface and Master Tracks running on the Commodore 128.

I don’t have many photos from the time (hey, cameras were expensive, and so was film and developing), but this is one of them showing the three borrowed devices and just off on the left, my HS-60.

And yes, I was a huge Boston fan. There was a bit of Massachusetts pride involved, but also, I just loved the sound. I still have my Rockman Soloist, although the 80’s spring-steel headphones are long gone. The first Apple II program I wrote in school drew the Boston logo using vector graphics. Took me forever, and I was quite pleased with the results, until I printed it out and found it was stretched 200% or so because of printer vs display aspect ratios. 🙂

I could only afford one synth of my own at first, but between borrowing from the school music department over breaks, and then bringing home new synths from the store to learn them overnight, I always had a lot of MIDI devices kicking around. Later, I bought a Roland MT-32 and then a Sound Canvas for even more MIDI goodness.

I’m pretty sure I still have some 50′ MIDI cables from back then, complete with beige masking tape labels on the ends. 🙂

As a freshman in college, I remember my roommate being profoundly disappointed that I hogged a whole wall with my Commodore, a couple synths, and a bunch of cabling. I was being selfish, but I fixed this later not by being less selfish, but by rooming with music majors, who totally understood. This is not to say I could ever play well, mind you. I just liked playing with the synthesizers and sound modules. 🙂

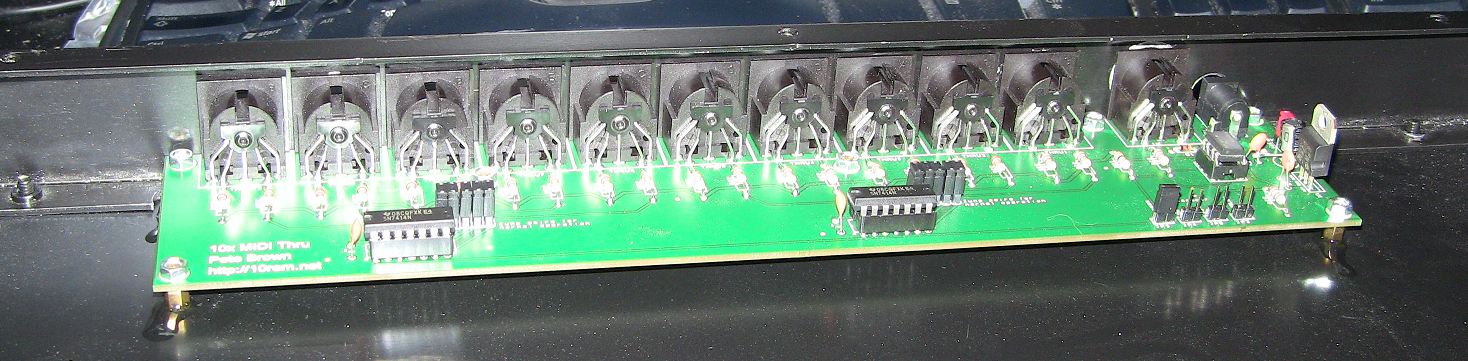

Fast-forward to slightly more recent times, and I not only continued to use MIDI with my PCs, but also created a hardware MIDI Thru box which wouldn’t suffer the latency that a long thru-chain of devices would. That was really my first time creating my own MIDI hardware, and the first PCB I had ever sent out to be manufactured. These days, it’s quite easy to get a PCB made from any number of inexpensive prototyping services. But at the time I created that board, I think it cost me just over $100 to have it made.

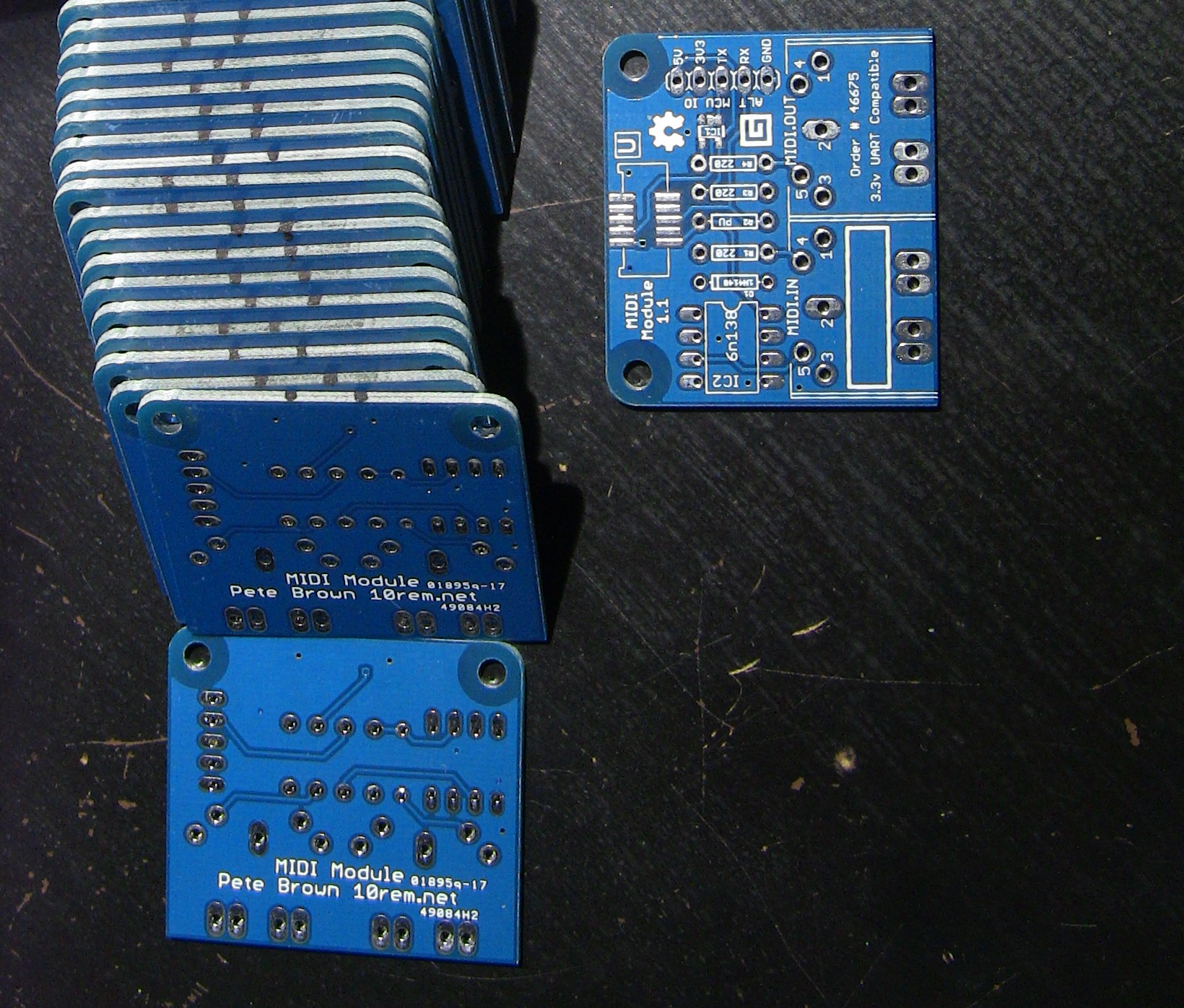

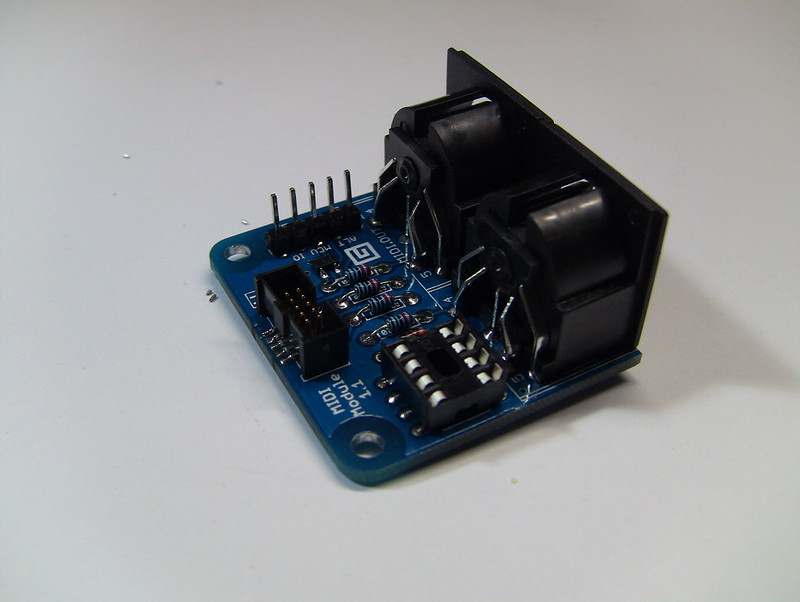

Later, I created a MIDI 1.0 interface (and related API) for the .NET Gadgeteer embedded devices. These are small microcontroller devices which ran a lightweight version of the .NET Framework. I also wrote a compatible library in C for STM8 and also STM ARM microcontrollers.

I did a lot more MIDI hobby work at the time, including different software, a MIDI library for UWP apps, a PowerShell library to automate MIDI functions and a MIDI SysEx transfer app in the Microsoft Store on Windows.

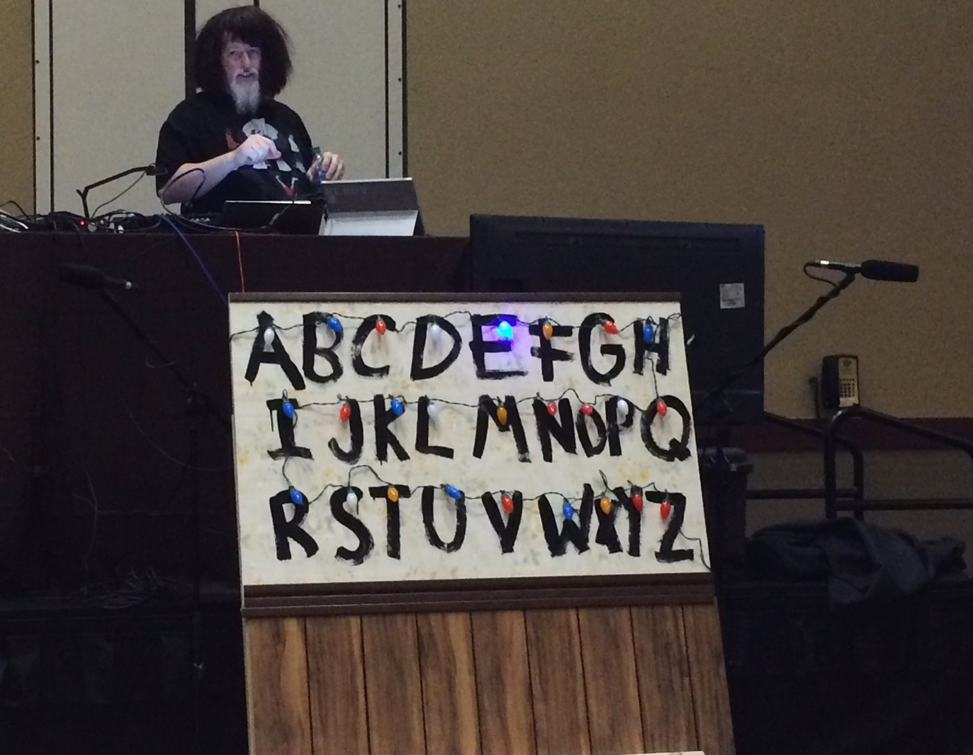

To keep things interesting, and somewhat unique, I’d work MIDI into demos I’d do at various developer events. At one point, I made a Stranger Things Christmas light wall which used Cognitive Services and a UWP app to recognize voice and then display text on the wall while playing the Stranger Things theme through MIDI on an Analog Four.

At another time, I even managed to control a drone on stage in Belgium (or maybe it was Amsterdam?) and later in Colombia, using a MIDI controller, WinRT MIDI, and the DJI quadcopter SDK.

In recent years, I’ve also been the Microsoft representative to the MIDI Association, and later voted in to serve on the MIDI Association Executive Board, where I’m currently chair.

That’s all just to say that I’ve had a lot of interest in MIDI over the years.

And for as long as I’ve worked at Microsoft, I’ve thought we could do more with MIDI to better serve the folks who use it most: musicians. There are a number of like-minded people here — both users and engineers, on the audio team, on the driver teams, and more. Some of those same people are the ones who created WinRT MIDI and also added BLE MIDI 1.0 support to it.

About MIDI 1.0

MIDI 1.0 was released in 1983. Since then, there have been additions to MIDI (like MPE), building on the core foundation of MIDI 1.0: 7-bit data, transmitted in 8-bit bytes. The high bit of every data byte is zero, which makes it easy to tell data apart from status bytes, whether a status byte starts a new message or is a real-time message interjected in the middle of another one. But the core protocol has been mostly unchanged.
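To make the role of that zeroed high bit concrete, here is a minimal Python sketch of a receiver that assembles channel voice messages from a byte stream while letting System Real-Time bytes (0xF8 and above) pass straight through mid-message. The function names are hypothetical, and the sketch deliberately ignores running status, SysEx, and system common messages; it is an illustration, not a complete MIDI parser.

```python
def data_length(status: int) -> int:
    """Data bytes that follow a channel voice status byte (0x80-0xEF)."""
    if (status & 0xF0) in (0xC0, 0xD0):  # Program Change, Channel Pressure
        return 1
    return 2  # Note Off/On, Poly Pressure, Control Change, Pitch Bend


def parse(stream: bytes) -> list:
    """Split a MIDI 1.0 byte stream into channel voice and real-time messages."""
    messages = []
    current = []
    for byte in stream:
        if byte >= 0xF8:
            # System Real-Time (e.g. 0xF8 Timing Clock) may be interjected
            # anywhere; emit it and keep the in-progress message intact.
            messages.append((byte,))
        elif byte >= 0xF0:
            # SysEx and system common messages are out of scope here.
            current = []
        elif byte & 0x80:
            # High bit set: a status byte, so a new message starts.
            current = [byte]
        elif current:
            # High bit clear: a data byte for the current message.
            current.append(byte)
            if len(current) == 1 + data_length(current[0]):
                messages.append(tuple(current))
                current = []
        # Data bytes with no pending status (running status) are dropped.
    return messages
```

For example, `parse(bytes([0x90, 0x3C, 0xF8, 0x64]))` yields the clock tick first and then the complete Note On, even though the clock byte arrived in the middle of it.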

MIDI 1.0 channel voice messages look like this:

| What | Bits |
|---|---|
| Op Code | 4 bits (high bit always set, so values 8-15; 8-14 are channel voice messages) |
| Channel | 4 bits (values 0-15, represented as 1-16 in software/hardware) |
| Data Byte 1 | Lower 7 bits are data. High bit is zero. Values 0-127 |
| Data Byte 2 (if used) | Lower 7 bits are data. High bit is zero. Values 0-127 |

You can see the full list of MIDI 1.0 messages here.

If you’ve ever wondered why so many synthesizers have parameter values in the range of 0 to 127, like envelope steps, it’s mostly because of the MIDI 1.0 standard. Not all values fit in a single 7-bit data byte (pitch bend, for example, uses 14 bits by combining both data bytes), but most do, including the note numbers themselves.
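Here is a short Python sketch of how those 7-bit limits show up when building messages by hand: a Note On is three bytes with 7-bit note and velocity values, and a 14-bit pitch bend value is split across both data bytes, least significant 7 bits first. The helper names are illustrative only.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """3-byte Note On; channel is 0-15 on the wire (shown to users as 1-16)."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def pitch_bend(channel: int, value: int) -> bytes:
    """Pitch bend: a 14-bit value (0-16383, center 8192) sent as LSB, then MSB."""
    if not (0 <= channel <= 15 and 0 <= value <= 0x3FFF):
        raise ValueError("channel must be 0-15; value must be 0-16383")
    return bytes([0xE0 | channel, value & 0x7F, (value >> 7) & 0x7F])
```

The "no bend" center value of 8192 encodes as an LSB of 0x00 and an MSB of 0x40, which is why a pitch wheel at rest shows up as `E0 00 40` on channel 1.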

Many synthesizers, especially more recent ones, handle mapping to and from internal higher-resolution values, or offer the ability to use more complex registered parameters or even SysEx to set higher-resolution values, all while staying with the MIDI 1.0 byte stream protocol.

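There’s some clever use of the first two fields to allow for more than 16 possible messages: when the op code nibble is 0xF, the channel nibble is reused to pick one of the system messages (0xF0 for SysEx, 0xF8 for Timing Clock, and so on). Even so, the total set of different message types is still quite limited in MIDI 1.0.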
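A small Python sketch of that decoding: for op codes 0x8 through 0xE the low nibble is a channel, while for 0xF the whole byte selects a system message. The function and table names are illustrative; status bytes without a defined meaning in MIDI 1.0 (0xF4, 0xF5, 0xF9, 0xFD) are reported as undefined.

```python
CHANNEL_VOICE = {
    0x8: "Note Off", 0x9: "Note On", 0xA: "Polyphonic Key Pressure",
    0xB: "Control Change", 0xC: "Program Change",
    0xD: "Channel Pressure", 0xE: "Pitch Bend",
}

SYSTEM = {
    0xF0: "System Exclusive", 0xF1: "MTC Quarter Frame",
    0xF2: "Song Position Pointer", 0xF3: "Song Select",
    0xF6: "Tune Request", 0xF7: "End of Exclusive",
    0xF8: "Timing Clock", 0xFA: "Start", 0xFB: "Continue",
    0xFC: "Stop", 0xFE: "Active Sensing", 0xFF: "System Reset",
}


def describe(status: int) -> str:
    """Name a MIDI 1.0 status byte, showing channels as 1-16."""
    if not 0x80 <= status <= 0xFF:
        raise ValueError("not a status byte (high bit is clear)")
    op_code, low = status >> 4, status & 0x0F
    if op_code != 0xF:
        return f"{CHANNEL_VOICE[op_code]}, channel {low + 1}"
    # Op code 0xF: the "channel" nibble selects the system message instead.
    return SYSTEM.get(status, "Undefined")
```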

MIDI 1.0 originally supported only a 5-pin DIN serial connection at 31,250 bits per second. Transport standards were later added for MIDI over USB (in 1999), MIDI over the network (RTP MIDI), and, more recently, MIDI over Bluetooth LE (BLE MIDI). These standards adapted the MIDI 1.0 byte stream, in some cases to packetized transport protocols, and added some transport-specific restrictions, like fitting SysEx messages into BLE packets.

The MIDI 1.0 standard was and is a great universal standard that is easily understood and implemented by low-cost 1980s-class microcontrollers. It has been around long enough to prove out what customers and developers want and need, and to start showing a few cracks here and there.

MIDI 1.0 Performance Characteristics

31,250 bits per second is an interesting speed. With the serial protocol using 10 bits for each byte (a start bit, 8 data bits, and a stop bit), and most common MIDI 1.0 messages taking 3 bytes (like note on/off), it takes 30 bits to turn on a note (and then another 30 bits to turn the note off).

From that, you can calculate that it takes just about 1ms (0.96ms) to send a DIN MIDI note-on message, all other possible overhead ignored. So if you hold down a big two-handed chord of 6 notes, the notes will turn on at approximately 1ms intervals, with the last note-on message fully received nearly 6ms after the first one started transmitting. These numbers grow when you consider channel pressure (aftertouch) and pitch bend, which often accompany these messages.
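The wire-time arithmetic is easy to check with a few lines of Python. This sketch assumes full 3-byte messages sent back to back (no running status) and ignores any processing overhead in the sending or receiving device, so it gives a lower bound rather than a real-world measurement.

```python
BIT_RATE = 31_250   # DIN MIDI, bits per second
BITS_PER_BYTE = 10  # 1 start bit + 8 data bits + 1 stop bit


def transmit_ms(num_bytes: int) -> float:
    """Wire time in milliseconds for num_bytes on a DIN MIDI cable."""
    return num_bytes * BITS_PER_BYTE / BIT_RATE * 1000


def chord_arrival_ms(notes: int, bytes_per_message: int = 3) -> list:
    """Time at which each note-on of a chord has fully arrived."""
    per_message = transmit_ms(bytes_per_message)
    return [per_message * (i + 1) for i in range(notes)]
```

With these assumptions, `transmit_ms(3)` is 0.96ms, and the sixth note-on of a six-note chord finishes arriving at about 5.76ms.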

We’re only talking about the DIN wire protocol speed here. That’s ignoring overhead in the processors of MIDI devices, which often adds more latency. In reality, it tends to be closer to 2ms per message, depending on how many are grouped together at once. In early synthesizers from the 80s, it can be quite a bit higher than 2ms.

MIDI 2.0

Of course, computers and electronic instruments have changed a lot since the early 1980s. There’s a desire for faster message transmission, more per-note expressivity without taking up all the available channels, better support for non-keyboard instruments and different scales (like exact pitch notes instead of just the existing discrete note numbers), more efficient device firmware update payloads, jitter reduction, and more.

Additionally, there’s been real desire for instruments to be able to learn about each other, and to automatically map parameters and controls based on an agreed-upon instrument profile. All those manual mapping steps, or third-party wrapper apps, we’ve had to use for our instrument plugins would no longer be necessary once they implement the new protocol.

This, and the desire for backwards compatibility with MIDI 1.0, led to the development of the MIDI 2.0 standard, MIDI Capability Inquiry (MIDI-CI), the Universal MIDI Packet (UMP), the USB MIDI 2.0 transport (others are coming, but USB is first), and the USB MIDI 2.0 device class specification.

MIDI-CI is built upon SysEx, compatible with MIDI 1.0. As such, it can run on any MIDI 1.0 or MIDI 2.0 connection, as long as that connection is bi-directional (USB, for example, or even two paired DIN cables). It is technically part of the MIDI 2.0 suite of updates, but it’s the most backwards-compatible addition.
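Because MIDI-CI rides on SysEx, every byte between 0xF0 and 0xF7 must keep its high bit clear, so multi-byte fields are split into 7-bit chunks. The Python sketch below frames a message using the Universal SysEx header bytes as we understand them from the MIDI-CI specification (0x7E non-real-time, device ID 0x7F, Sub-ID#1 0x0D) and encodes the 28-bit MUIDs least significant byte first. The helper names are hypothetical, and the Sub-ID#2 and version values in the usage are placeholders; check the current spec for exact message layouts.

```python
UNIVERSAL_NON_REALTIME = 0x7E
DEVICE_ID_PORT = 0x7F      # assumption: addresses the whole port/endpoint
MIDI_CI_SUB_ID_1 = 0x0D    # Sub-ID#1 used for MIDI-CI messages


def muid_bytes(muid: int) -> list:
    """Encode a 28-bit MUID as four 7-bit bytes, least significant first."""
    if not 0 <= muid <= 0x0FFFFFFF:
        raise ValueError("MUID must fit in 28 bits")
    return [(muid >> (7 * i)) & 0x7F for i in range(4)]


def ci_message(sub_id_2: int, version: int, source_muid: int,
               dest_muid: int, payload=()) -> bytes:
    """Wrap a MIDI-CI header and payload in a SysEx frame (F0 ... F7)."""
    body = [UNIVERSAL_NON_REALTIME, DEVICE_ID_PORT, MIDI_CI_SUB_ID_1,
            sub_id_2, version]
    body += muid_bytes(source_muid) + muid_bytes(dest_muid) + list(payload)
    if any(not 0 <= b <= 0x7F for b in body):
        raise ValueError("SysEx data bytes must keep the high bit clear")
    return bytes([0xF0, *body, 0xF7])
```

A call such as `ci_message(0x70, 0x01, 0x12345, 0x0FFFFFFF)` produces a 15-byte SysEx message that any bi-directional MIDI 1.0 connection can carry unchanged.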

You can learn more about MIDI-CI and MIDI 2.0 here, in the official specifications (requires login). Note that these are the first versions of the specs; there are updates in progress which change MIDI-CI and MIDI 2.0 for the simpler and better, and also add several additional messages. Those are in review at the time of this writing, and should be voted on by MIDI Association members and adopted as official specifications soon.

MIDI 2.0 is built upon the UMP rather than the byte stream MIDI 1.0 uses. There are four different sizes of UMP (32, 64, 96, and 128 bits), each built from 32-bit words which follow prescribed formats, packing data into nibbles, bytes, shorts, or words as appropriate, and transmitted as fast as the transport protocol will allow.
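Because a UMP’s size is determined by the 4-bit Message Type at the top of its first word, a receiver can split a stream of 32-bit words into packets without any other framing. The size table in this Python sketch reflects our reading of the UMP specification, including the reserved Message Types; treat it as an assumption to verify against the current spec. Function names are illustrative.

```python
# Number of 32-bit words per UMP, indexed by Message Type (0x0-0xF).
UMP_WORDS = [1, 1, 1, 2, 2, 4, 1, 1, 2, 2, 2, 3, 3, 4, 4, 4]


def ump_word_count(first_word: int) -> int:
    """Size of a UMP in words, from the Message Type in bits 31-28."""
    return UMP_WORDS[(first_word >> 28) & 0xF]


def split_umps(words: list) -> list:
    """Group a flat list of 32-bit words into individual UMPs."""
    packets, i = [], 0
    while i < len(words):
        n = ump_word_count(words[i])
        # A truncated packet at the end of the list comes back short.
        packets.append(words[i:i + n])
        i += n
    return packets
```

For example, a MIDI 1.0 Note On (Message Type 0x2, one word) followed by a MIDI 2.0 Note On (Message Type 0x4, two words) splits into a one-word packet and a two-word packet.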

Most messages include a 4-bit group field in addition to a MIDI 1.0-compatible 4-bit channel. This effectively provides 256 “channels” per endpoint. There’s more to say about that and how it gets presented when we start talking about the latest standards updates and the APIs.

MIDI 1.0 messages have 1:1 equivalents to allow for transport over UMP. Taking the MIDI 1.0 example from above, here’s the 32-bit UMP version:

| What | Bits |
|---|---|
| Message Type | 4 bits (values 0-15) |
| Group | 4 bits (values 0-15) |
| Op Code | 4 bits (values 0-15) |
| Channel | 4 bits (values 0-15, represented as 1-16 in software/hardware) |
| Data Byte 1 | Lower 7 bits are data. High bit is zero. Values 0-127 |
| Data Byte 2 (if used) | Lower 7 bits are data. High bit is zero. Values 0-127 |

The encapsulation of MIDI 1.0 messages in UMP makes it simple to provide translation and a common message format in the API.

The combination of Message Type and opcode gives you up to 256 distinct messages. Groups are an expansion of the channel concept. For MIDI 1.0 devices, the group is most closely related to a port. We’ll have more details on this when covering the API.
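The encapsulation is simple enough to show in a few lines. This Python sketch wraps a MIDI 1.0 channel voice message in a 32-bit UMP using Message Type 0x2 (MIDI 1.0 channel voice), and then recovers a combined group-and-channel index to illustrate the 256 “channels” per endpoint. The function names are hypothetical and not part of any Windows MIDI Services API.

```python
MT_MIDI1_CHANNEL_VOICE = 0x2  # UMP Message Type for MIDI 1.0 channel voice


def midi1_to_ump(group: int, message: bytes) -> int:
    """Wrap a 2- or 3-byte MIDI 1.0 channel voice message in a 32-bit UMP."""
    if not 0 <= group <= 15:
        raise ValueError("group must be 0-15")
    if len(message) not in (2, 3) or not 0x80 <= message[0] <= 0xEF:
        raise ValueError("expected a MIDI 1.0 channel voice message")
    status, data1 = message[0], message[1]
    data2 = message[2] if len(message) == 3 else 0  # unused byte stays zero
    return ((MT_MIDI1_CHANNEL_VOICE << 28) | (group << 24) |
            (status << 16) | (data1 << 8) | data2)


def channel_index(word: int) -> int:
    """Map group (0-15) and channel (0-15) to one of 256 slots (0-255)."""
    group = (word >> 24) & 0xF
    channel = (word >> 16) & 0xF
    return group * 16 + channel
```

A Note On for middle C at velocity 100 on group 0, channel 1, becomes `0x20903C64`: the original three bytes are still visible in the low 24 bits.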

In addition to the 32-bit MIDI 1.0 messages, there are 64-bit MIDI 2.0 messages which expand upon the MIDI 1.0 messages with additional fidelity. Here’s a Note On message, for illustration:

| What | Bits |
|---|---|
| Message Type | 4 bits (values 0-15) |
| Group | 4 bits (values 0-15) |
| Op Code | 4 bits (values 0-15) |
| Channel | 4 bits (values 0-15, represented as 1-16 in software/hardware) |
| Note Number | 7 bits (for compatibility) |
| Attribute Type | 8 bits |
| Velocity | 16 bits |
| Attribute Data | 16 bits |

The Attribute Type and Attribute Data fields allow for much more flexible note messages, and are covered in detail in the MIDI 2.0 specification. The takeaway is that you are no longer limited to 128 discrete note numbers: an attribute can, for example, carry an exact pitch alongside the note number.
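A minimal Python sketch of building the two words of a MIDI 2.0 Note On from the layout in the table above. It makes a few labeled assumptions: Attribute Type 0x03 is taken to mean “Pitch 7.9” (7 integer bits, 9 fraction bits), and `scale_up` is a min-center-max upscaling approach (0 stays 0, the 7-bit center maps to the 16-bit center, 127 maps to 65535) that we believe matches the MIDI Association’s translation guidance; verify both against the spec. Function names are hypothetical.

```python
ATTR_NONE = 0x00
ATTR_PITCH_7_9 = 0x03  # assumption: Attribute Type for Pitch 7.9


def scale_up(value: int, src_bits: int, dst_bits: int) -> int:
    """Min-center-max upscaling of an unsigned value to more bits."""
    scale_bits = dst_bits - src_bits
    shifted = value << scale_bits
    if value <= 1 << (src_bits - 1):  # at or below center: plain shift
        return shifted
    # Above center, repeat the lower bits to fill the new low-order bits.
    repeat_bits = src_bits - 1
    repeat = value & ((1 << repeat_bits) - 1)
    if scale_bits > repeat_bits:
        repeat <<= scale_bits - repeat_bits
    else:
        repeat >>= repeat_bits - scale_bits
    while repeat:
        shifted |= repeat
        repeat >>= repeat_bits
    return shifted


def pitch_7_9(semitones: float) -> int:
    """Encode a pitch in semitones as 7.9 fixed point (value * 512)."""
    fixed = round(semitones * 512)
    if not 0 <= fixed <= 0xFFFF:
        raise ValueError("pitch must be between 0.0 and about 127.998")
    return fixed


def midi2_note_on(group: int, channel: int, note: int, velocity16: int,
                  attr_type: int = ATTR_NONE, attr_data: int = 0) -> tuple:
    """Return the two 32-bit words of a MIDI 2.0 Note On (Message Type 0x4)."""
    word0 = ((0x4 << 28) | (group << 24) | (0x9 << 20) | (channel << 16) |
             ((note & 0x7F) << 8) | attr_type)
    word1 = (velocity16 << 16) | attr_data
    return word0, word1
```

Under these assumptions, a note sounding a quarter-tone above middle C could be sent as `midi2_note_on(0, 0, 60, 0x8000, ATTR_PITCH_7_9, pitch_7_9(60.5))`, which yields the words `0x40903C03` and `0x80007900`.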

There are many other messages which expand what MIDI can do, including discovery and informational messages, jitter reduction time stamps, information queries, and messages designed for bulk dumps of data in a faster way than 7-bit SysEx messages support today.

MIDI 2.0 devices are bi-directional rather than unidirectional by default. The bi-directional nature (working with streams rather than in/out ports), the change from a byte stream to a packetized protocol, the new messages, the addition of groups to the concept of channels, and the new USB device class all made it obvious that we needed a different API from the one we use today: something that supports all of these features, and is far more easily extensible for new messages and transports.

What is this all leading to?

This is all leading to Windows MIDI Services, a combined open source and Windows internal project underway at Microsoft.

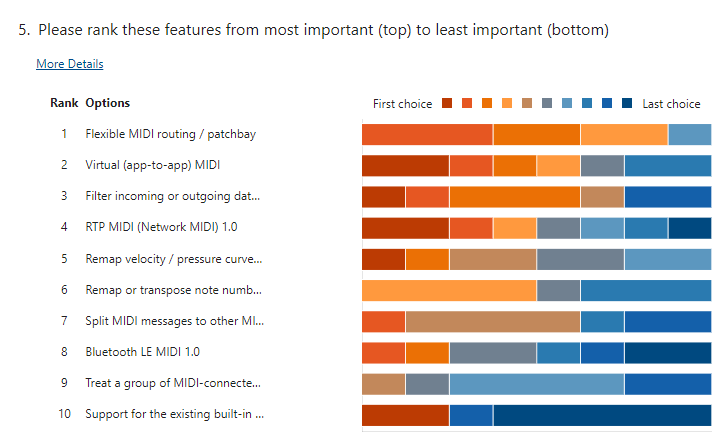

Over the spring and summer, I prototyped a lot of ideas and presented them to the teams at Microsoft. The intent was to show what we can do with MIDI on Windows. Most of my prototyping was in C# (it’s seriously easier to create Windows Services in .NET), but especially for a client-side library, the code needs to be C++. Plus, during prototyping, I got to ignore things like security and overall robustness. I also polled developers and members of the community to validate some assumptions I had about what folks wanted in an API. Here’s one set of responses from end-users of MIDI on Windows.

From that, in the early fall, the audio team stepped up and combined their own best practices and ideas with suggestions I had from the prototype, and came up with a great extensible architecture that does all the things I had hoped it would.

At the same time, the aforementioned AMEI driver contribution project kicked off to create the USB MIDI 2.0 driver for Windows as MIT-licensed open source.

Everything which can be open source in the project, is and will be. Some things, like any shims we need in Windows to repoint the existing MIDI 1.0 APIs, or any code we adapt from shipping code today, will remain internal to Windows and not OSS. We’re trying to minimize those cases, though. As a proper open source project, we will consider pull requests and contributions from the community and encourage participation in the project. The code involved will include C++ for the service and USB driver, plugins, and transports; C++/WinRT for the API and related code; and C# for the settings application and end-user tools. So there’s something there for most everyone to contribute to.

Learn more at the ADC session

I’ll leave this post here, and plan to share more technical details in upcoming blog posts. In the meantime, I encourage you to watch the Audio Developer Conference (ADC) 2022 session that is jointly presented by Apple, Google, Microsoft, and the MIDI Association. You can learn more about that session here:

https://sched.co/19weE

Of course, once we can be fully open sourced (when the MIDI Association/AMEI NDA is lifted on the new features), you’ll also be able to see progress right on GitHub.

I’m really excited about this open source project, and can’t thank AMEI and AmeNote enough for their contribution to MIDI! I hope you will join us.

Hi Pete,

I would like to discuss a custom application I need developed for NAMM 2023.

I'm an attorney by day in New York, and a home studio musician by night.

I recently invented and I have a patent pending on a new musical instrument that allows anyone to play sophisticated chords and chord sequences with one finger with no knowledge of music theory and no experience playing any instrument. It has a second component that ties the other hand to the pentatonic scale of the currently playing chord, with the index, middle and ring fingers tied to the root, third, and...

Great article! Gives me hope there is progress! I've been watching MIDI 2.0 for a few years now, patiently & excitedly. It's been a long wait. Since 2018 I've been developing a wearable & wireless MIDI controller that utilizes a motion / AR-type approach and while it does cut the mustard in terms of my original goals... its true potential will only be unlocked by MIDI 2.0. This project isn't my day job so I've chosen to just wait until the new standard gains some traction and I can implement it, rather than try an...

Why is this being developed in C++ rather than Rust? This does not appear to be a good or safe decision. We have to start to move past C/C++ for the sake of everyone’s security.

Python? Perl? ... Will this support those massive sound files created ... https://www.thestrad.com/featured-stories/new-software-allows-users-to-recreate-the-sound-of-a-stradivarius/10896.article ... and don't forget Linux!? A fluidsynth upgrade or replacement would be wonderful. Hints, hints, hints ... tantalizing hints required!

I actually don't mind 'c', but c++ causes goosebumps and hair raising on the back of my neck shivers. [There are nice ways to protect allocations of memory, buffers and pointers ... and don't allow "(void *)" or 'union' for anything!] Whereas looking at a simple routine that is having unexpected behavior in c++ due to use of all the complicated features of c++ ......

It would be great if we could regard announcements such as this as being entirely positive. Making the MIDI stack largely open source is particularly welcome news. Microsoft's early efforts in the realm of multimedia were groundbreaking. Some of the best and earliest MIDI authoring tools first appeared on Windows. The initial design changes proposed for the WinRT MIDI stack sounded fantastic! Unfortunately, Microsoft's execution has been very problematic in key areas of the Windows technology stack over the past 10 years. The WinRT MIDI stack was broken and unusable for at least 5...

Thanks Mario

I get the skepticism and concern. We feel that making the API, service, driver, and tools permissive open source, and involving partners and the community, will help us deliver something useful and impactful for music making.

In the end, the proof will be in what is delivered. I hope you stick with us. 🙂

Pete