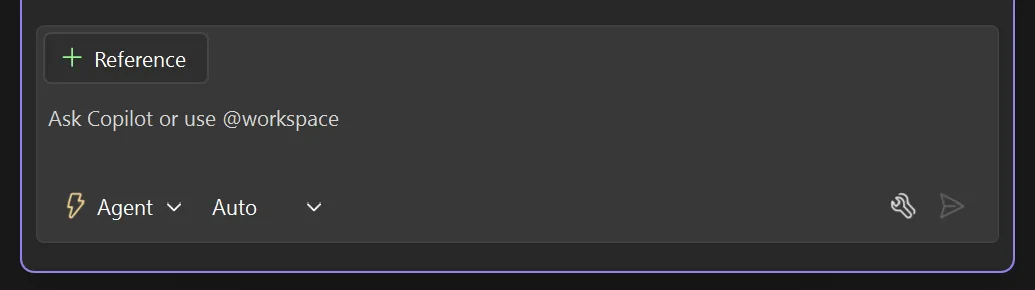

Faster responses, a lower chance of rate limiting, and 10% off premium requests for paid users – auto picks the best available model for each request based on current capacity and performance. With auto, you don’t need to choose a specific model. Copilot automatically selects the best one for your task. Auto model selection in Chat is rolling out in preview to all GitHub Copilot users.

How auto model selection works

Auto selects the best available model to give you optimal performance and reduce the likelihood of rate limits. Auto will choose between GPT-5, GPT-5 mini, GPT-4.1, Sonnet 4.5, Haiku 4.5, and other models, unless your organization has disabled access to these models. Once auto picks a model, it uses that same model for the entire chat session. This behavior will change in upcoming iterations as we introduce model selection based on task complexity.

For paid users, auto currently relies primarily on Claude Sonnet 4.5.

When using auto model selection, Visual Studio uses a variable model multiplier based on the automatically selected model. If you are a paid user, auto applies a 10% request discount. For example, if auto selects Sonnet 4.5, it will be counted as 0.9x of a premium request.
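To make the discount arithmetic concrete, here is a minimal sketch in Python. The function name and the 1x base multiplier for Sonnet 4.5 are assumptions for illustration (the 1x figure is inferred from the 0.9x example above). This is not an official Copilot API.

```python
# Hypothetical illustration of the auto discount; not an official API.
AUTO_DISCOUNT = 0.10  # 10% request discount for paid users, per the text


def effective_multiplier(base_multiplier: float, paid_user: bool) -> float:
    """Return the premium-request cost of one chat request made through auto."""
    discount = AUTO_DISCOUNT if paid_user else 0.0
    # Round to avoid floating-point noise in the displayed value.
    return round(base_multiplier * (1 - discount), 4)


# Sonnet 4.5 at an assumed 1x base multiplier, paid user
print(effective_multiplier(1.0, paid_user=True))  # 0.9
# A 0x model such as GPT-4.1 stays free
print(effective_multiplier(0.0, paid_user=True))  # 0.0
```

Under these assumptions, ten auto requests served by Sonnet 4.5 would consume nine premium requests instead of ten.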

If you are a paid user and run out of premium requests, auto will always choose a 0x model (for example, GPT-4.1), so you can continue using auto without interruption.
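The fallback rule can be sketched as a simple filter over candidate models. This is only an illustration: the `Model` type, the `eligible_models` function, and the candidate list are hypothetical, and the real selection also weighs capacity and performance, which this sketch does not model. The two multipliers shown are the only ones implied by the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    multiplier: float  # premium requests charged per chat request


# Assumed candidate list; only these two multipliers are implied by the text.
CANDIDATES = [Model("Sonnet 4.5", 1.0), Model("GPT-4.1", 0.0)]


def eligible_models(remaining_premium_requests: float, candidates=CANDIDATES):
    """Return the models auto could pick from, given the remaining quota."""
    if remaining_premium_requests <= 0:
        # Out of premium requests: restrict the choice to 0x models.
        return [m for m in candidates if m.multiplier == 0.0]
    return list(candidates)


print([m.name for m in eligible_models(0)])   # ['GPT-4.1']
print([m.name for m in eligible_models(25)])  # ['Sonnet 4.5', 'GPT-4.1']
```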

What’s next

Our long-term vision is to make auto the best model selection for most users. To achieve this, here’s what we plan next:

- Dynamically switch between small and large models based on the task – this flexibility ensures that you get the right balance of performance and efficiency, while saving on requests

- Add more language models to auto

- Let users on a free plan take advantage of the latest models through auto

- Improve the model dropdown to make it more obvious which models and discounts are used

Thanks 😊

Unfortunately those are two different teams, with VS Code driving the direction. Kind of absurd, but popularity wins I guess; VS Code is more widely used than Visual Studio. Although from a business perspective, enterprise development with paid IDEs should probably be the priority.

Great addition 🙂

2 questions:

– Is there a place that shows the roadmap on a quarterly basis (or at minimum what is planned for the current quarter)?

– Is Visual Studio going to catch up to VS Code with regard to the Copilot experience? The difference in experience is massive today. Should all VS users just migrate to VS Code?

Valid question. I needed this lately and it didn’t accept images as input. I was sure that this was already a working feature, so it was a disappointment.

When are we going to be able to attach an image or screenshot to Copilot Chat in Visual Studio?

I am not yet convinced I like this feature. Based on my experience so far, some models are not integrated as well as others. Hopefully this will be a user choice and not admin imposed.