Just as designing with accessibility in mind became a common software design consideration, designing software with environmental sustainability at its core will be the next evolution in the software development life cycle.

The environmental footprint of the cloud is commonly measured in terms of Scope 1, 2, and 3 emissions. Scope 1 emissions are direct emissions from sources such as a company’s car fleet, air travel, buildings, and manufacturing. Scope 2 emissions come from purchased energy and power sources. Scope 3 emissions are indirect emissions from a company’s supply chain, contractors, suppliers, and vendors. In the case of the cloud, energy is a major component of the total carbon footprint, and so is the data center infrastructure: millions of servers run across the world in data centers that can measure four football fields in size and consume hundreds of megawatts of power.

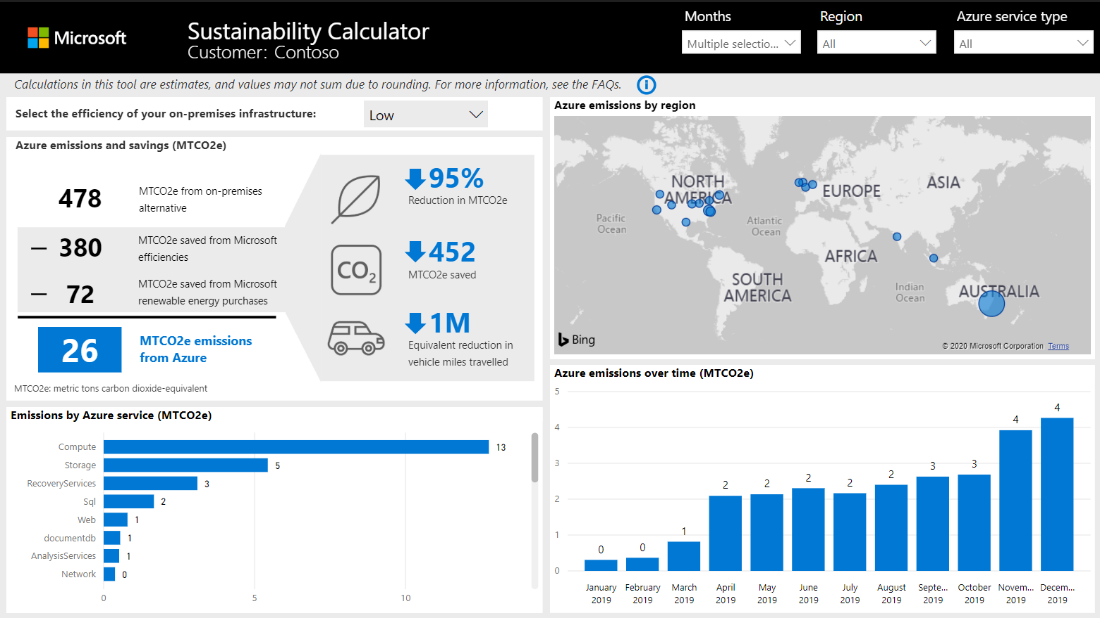

Migrating on-prem infrastructure to the cloud provides enterprise customers with tangible environmental benefits along with cost savings, and cloud infrastructure overall is much more sustainable because of its hyper-scale and efficiency. Customers can compare the Global Warming Potential of running workloads on-prem versus in the cloud using the first-of-a-kind sustainability calculator.

Sustainable Networking

While improving the mix of energy sources and increasing the circularity of the hardware running in data centers are fundamental steps toward a more sustainable cloud ecosystem, there is an additional aspect of cloud infrastructure that does not get as much attention as it should. Independent research has shown that the network fabric used to connect cloud services to the end consumer can account for as much as 60% of the power drawn by the data center hosting the service and the application [1, 2]. While the exact estimates may vary, there is no doubt that networking is responsible for a significant portion of the total cloud carbon footprint.

Energy supplier Ovo Energy analyzed how much CO2 is produced by a single sent email and estimates that if every UK-based adult sent one fewer email per day, they would “reduce carbon output by over 16,433 tons a year – the equivalent of 81,152 flights to Madrid or taking 3,334 diesel cars off the road” [3]. Given that such small changes can lead to such large environmental benefits, it becomes clear that substantial gains can be achieved by rethinking how software services running in the cloud are architected, planned, and deployed. These gains can be achieved independently of the hardware design used to run the corresponding workloads in the cloud.

Not all cloud services are the same. The total carbon footprint of a service, as measured by the power required to run it, depends not only on the number of virtual machines used by the service but also on the bandwidth needed to support it. For example, an application requiring near real-time updates, as opposed to batch synchronization, can draw much more power because of the throughput required. In fact, according to the same research, the majority of the power draw falls on what is called the access layer, the layer that connects the cloud provider’s internal wide area network to the end customer. Examples of the access layer in terms of physical network topology include 5G or LTE towers and other network equipment required to carry data from the core network of the cloud provider to the end consumer of the service.
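To make the real-time versus batch comparison concrete, here is a minimal sketch that counts bytes on the wire as a rough proxy for network load. It is not a power model, and the per-request overhead of 500 bytes, the 200-byte payload, and the helper names are illustrative assumptions rather than measured values.

```python
from dataclasses import dataclass

# Assumed fixed cost per request (headers, framing). Illustrative only, not measured.
PER_REQUEST_OVERHEAD_BYTES = 500


@dataclass(frozen=True)
class Update:
    payload_bytes: int


def bytes_sent_individually(updates):
    """Each update travels in its own request and pays the overhead every time."""
    return sum(u.payload_bytes + PER_REQUEST_OVERHEAD_BYTES for u in updates)


def bytes_sent_batched(updates, batch_size):
    """Updates are grouped, so the overhead is paid once per batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    num_requests = -(-len(updates) // batch_size)  # ceiling division
    payload_total = sum(u.payload_bytes for u in updates)
    return payload_total + num_requests * PER_REQUEST_OVERHEAD_BYTES


# 1,000 small updates of 200 bytes each (hypothetical workload).
updates = [Update(payload_bytes=200) for _ in range(1000)]
realtime_bytes = bytes_sent_individually(updates)
batched_bytes = bytes_sent_batched(updates, batch_size=50)

print(f"one request per update: {realtime_bytes} bytes")
print(f"batches of 50:          {batched_bytes} bytes")
```

Under these assumptions the batched variant sends less than a third of the bytes, because the fixed overhead dominates small payloads. The actual savings depend on the protocol and payload sizes of the service in question.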

These findings support the recently published principles of sustainable software engineering.

- Network connectivity plays an important role in developing more sustainable software and cloud infrastructure.

- Along with changing software engineering patterns, developers can take advantage of new cloud infrastructure architecture to make their services use less power by moving their compute-heavy workloads closer to the end customer.

- Reducing the need for bandwidth-heavy workloads, or reducing network overhead for large data migration projects, helps reduce the customer’s overall carbon footprint as well as the carbon footprint of the cloud service itself.

Practical Scenarios

So how can enterprises and cloud providers leverage these insights? Thinking upfront about how to best use the available network infrastructure can reduce a customer’s carbon footprint as well as the carbon footprint of their cloud provider. This also represents a paradigm shift in how cloud services are designed, planned, and deployed. Just as designing with accessibility in mind became a common software design consideration, designing software with environmental sustainability in mind will be the next evolution in the software development life cycle.

With that in mind, it’s important to ask which software engineering patterns could reduce the total amount of traffic sent between customers and services running in the cloud. Below are a few examples of how this can be done using a mix of the latest hardware and software innovations:

- Reduce real-time bandwidth requirements by using intelligent, AI-driven services like Microsoft Graph. The Graph API can be used to prioritize real-time updates based on the degrees of separation between teams and individuals within the enterprise. It can determine the highest-priority contacts in your network so that real-time updates are pushed to these users first, while updates to the rest of your network are batched or time-shifted.

- Get compute-heavy workloads closer to the end user, thus reducing the number of trips and hops that data has to make between the end user and the backend cloud service. Edge computing allows customers to run compute- and storage-intensive applications on a dedicated appliance installed in their own data center, office, or branch and connect to the cloud only as needed. This drastically reduces the amount of data sent to and from the cloud.

- Simplify, expedite, and reduce the carbon footprint of large data migration projects by moving data physically rather than over the wire. Large data migration projects often entail migrating petabytes of data from an on-prem data center into the cloud. Even with modern high-throughput fiber optic networks, such projects can take days, and in some cases weeks, to complete. Azure Data Box is a set of encrypted storage devices that range from 8 TB to 1 PB in size and allow customers to move data into and out of the cloud by writing data onto the device and physically shipping it to the destination, whether that is an on-prem data center or an Azure region.
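The idea in the first bullet above, pushing real-time updates to close contacts and batching the rest, can be sketched with a plain breadth-first search over a relationship graph. This is a hypothetical illustration: the `org` dictionary, the function names, and the one-hop threshold are assumptions, and no Microsoft Graph API calls are shown. In practice the relationship data would come from whatever service the enterprise uses.

```python
from collections import deque


def degrees_of_separation(graph, source):
    """Breadth-first search returning {person: hops from source}."""
    distance = {source: 0}
    queue = deque([source])
    while queue:
        person = queue.popleft()
        for contact in graph.get(person, ()):
            if contact not in distance:
                distance[contact] = distance[person] + 1
                queue.append(contact)
    return distance


def split_recipients(graph, author, max_realtime_hops=1):
    """Split everyone except the author into real-time and batched groups."""
    distance = degrees_of_separation(graph, author)
    realtime, batched = [], []
    for person in graph:
        if person == author:
            continue
        hops = distance.get(person)  # None means unreachable from the author
        if hops is not None and hops <= max_realtime_hops:
            realtime.append(person)
        else:
            batched.append(person)
    return sorted(realtime), sorted(batched)


# Hypothetical collaboration graph: who works directly with whom.
org = {
    "ana": ["ben", "chen"],
    "ben": ["ana", "dev"],
    "chen": ["ana"],
    "dev": ["ben", "eli"],
    "eli": ["dev"],
    "fay": [],
}

realtime, batched = split_recipients(org, "ana")
print("push immediately:", realtime)
print("batch or time-shift:", batched)
```

Raising `max_realtime_hops` widens the real-time group at the cost of more immediate traffic, so the threshold is a tuning knob rather than a fixed rule.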
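For the data migration bullet above, a quick back-of-the-envelope calculation shows why over-the-wire transfers of petabyte-scale data can take days or weeks. The link speeds below are illustrative assumptions. The sketch assumes a fully dedicated link by default, ignores protocol overhead, and says nothing about shipping time or carbon emissions.

```python
SECONDS_PER_DAY = 86_400
PETABYTE = 10**15   # bytes, decimal definition
GBPS = 10**9        # bits per second


def transfer_days(data_bytes, link_bits_per_second, utilization=1.0):
    """Days needed to move data_bytes over a link at the given utilization."""
    if link_bits_per_second <= 0:
        raise ValueError("link speed must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    seconds = (data_bytes * 8) / (link_bits_per_second * utilization)
    return seconds / SECONDS_PER_DAY


# 1 PB over hypothetical dedicated 1 Gbps and 10 Gbps links.
days_at_1_gbps = transfer_days(PETABYTE, 1 * GBPS)
days_at_10_gbps = transfer_days(PETABYTE, 10 * GBPS)

print(f"1 PB at 1 Gbps:  {days_at_1_gbps:.1f} days")
print(f"1 PB at 10 Gbps: {days_at_10_gbps:.1f} days")
```

With these assumptions, 1 PB takes roughly 93 days at 1 Gbps and about 9 days at 10 Gbps, and real links are rarely fully dedicated, so passing a lower `utilization` value gives a more conservative estimate.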

You can learn more about the emerging discipline at the intersection of climate science, software practices and architecture, electricity markets, hardware, and data centre design on the Principles of Sustainable Software Engineering page.

1 Andrae, Anders. (2017). Total Consumer Power Consumption Forecast. https://www.researchgate.net/publication/320225452_Total_Consumer_Power_Consumption_Forecast

2 Jalali, Fatemeh. (2015). Energy Consumption of Cloud Computing and Fog Computing Applications. https://minerva-access.unimelb.edu.au/bitstream/handle/11343/58849/Jalali_Fa_thesis.pdf?sequence=1&isAllowed=y

3 Ovo Energy. “‘Think Before You Thank’: If every Brit sent one less thank you email a day, we would save 16,433 tonnes of carbon a year – the same as 81,152 flights to Madrid”. https://www.ovoenergy.com/ovo-newsroom/press-releases/2019/november/think-before-you-thank-if-every-brit-sent-one-less-thank-you-email-a-day-we-would-save-16433-tonnes-of-carbon-a-year-the-same-as-81152-flights-to-madrid.html
