In June 2020, we shared how Azure.com achieves global scale and how Sustainable Software Engineering principles were at the center of that work. In this post we discuss how a serverless architecture helped us build more sustainable apps and cut our Azure spend for data pipeline middleware by 10x. By shifting our workloads from always-on to on-demand compute (a core sustainable engineering practice), we significantly improved our carbon efficiency.

Migration to Serverless

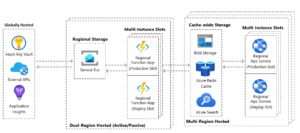

In November 2020, Azure.com completed the migration of dozens of data pipelines from dedicated, always-on App Service WebJobs to serverless Functions billed on consumption. Our primary motivators were COGS reduction, sustainable engineering, and design simplicity. Each datacenter used to host its own data pipeline for handling external data integrations. With the new strategy, we run our data pipelines in a single geography and use a shared service bus for business continuity. The architecture is depicted below and summarizes the before and after workflows.

Azure.com data pipelines are data ingestion workflows that extract data from external data stores, transform it, and load it (ETL) into local regional data stores for high availability. An example workflow for us is the Azure.com blog. We extract data from an external data store, transform it, and load it into local data stores such as Redis, blob storage, and search, which our page rendering layer reads from (e.g. using the cache-aside pattern).
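As a rough illustration of that blog workflow, here is a minimal sketch of an extract-transform-load step followed by a cache-aside read. It is not the Azure.com implementation: the plain dictionaries stand in for Redis, blob storage, and the search index, and every name (`RegionalStores`, `run_pipeline`, `render`, the record fields) is hypothetical.

```python
import json
from dataclasses import dataclass, field


@dataclass
class RegionalStores:
    """In-memory stand-ins for the regional cache, blob storage, and search index."""
    cache: dict = field(default_factory=dict)
    blobs: dict = field(default_factory=dict)
    search_index: dict = field(default_factory=dict)


def extract(external_store: dict) -> list:
    """Extract: read raw records from the (hypothetical) external store."""
    return list(external_store.values())


def transform(raw: dict) -> dict:
    """Transform: normalize a raw record into the shape the rendering layer reads."""
    title = raw["title"].strip()
    return {
        "slug": title.lower().replace(" ", "-"),
        "title": title,
        "body": raw["body"],
    }


def load(post: dict, stores: RegionalStores) -> None:
    """Load: write the transformed record to each regional store."""
    stores.blobs[post["slug"]] = json.dumps(post)                  # durable copy
    stores.search_index[post["slug"]] = post["title"].lower().split()
    stores.cache[post["slug"]] = post                              # warm the cache


def run_pipeline(external_store: dict, stores: RegionalStores) -> int:
    """Run one ETL pass and return the number of records loaded."""
    posts = [transform(raw) for raw in extract(external_store)]
    for post in posts:
        load(post, stores)
    return len(posts)


def render(slug: str, stores: RegionalStores) -> str:
    """Cache-aside read: try the cache, fall back to blob storage, repopulate."""
    post = stores.cache.get(slug)
    if post is None:
        post = json.loads(stores.blobs[slug])
        stores.cache[slug] = post
    return post["title"]
```

In this sketch the rendering layer never calls the external store directly. It reads only from the regional copies, which is the property that gives the page layer its high availability.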

WebJobs Design Architecture – Dedicated Compute

Serverless Design Architecture – Elastic Compute

Another architectural optimization was adopting a push data model, which eliminates unnecessary API calls when no data has changed in the underlying source. Where we could adopt it, the tradeoff was tighter coupling with external systems (through a service bus pub/sub pattern), but the payoff was increased savings, because we only use compute, storage, and networking when necessary. Previously we used timer-based cron jobs to poll API endpoints even when data had not changed, and in some cases we wastefully refreshed entire data targets.
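The difference between the two models can be sketched with a small in-memory simulation. This is an illustration under stated assumptions, not our production code: `Topic` is a toy stand-in for a service bus topic, and `Pipeline`, `simulate_polling`, and `simulate_push` are hypothetical names. Each entry in `versions` represents the source data as seen at one timer tick.

```python
class Topic:
    """Tiny in-memory publish/subscribe topic (stand-in for a service bus topic)."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, handler):
        self._subscribers.append(handler)

    def publish(self, message):
        for handler in self._subscribers:
            handler(message)


class Pipeline:
    """Counts how many times the refresh work actually runs."""

    def __init__(self):
        self.target = {}
        self.runs = 0

    def refresh(self, data):
        self.runs += 1
        self.target.update(data)


def simulate_polling(versions, pipeline):
    """Pull model: a timer fires on every tick and refreshes regardless of changes."""
    for data in versions:
        pipeline.refresh(data)


def simulate_push(versions, pipeline):
    """Push model: the source publishes only when its data actually changes."""
    topic = Topic()
    topic.subscribe(pipeline.refresh)
    previous = None
    for data in versions:
        if data != previous:
            topic.publish(data)
        previous = data
```

With six ticks in which the data changes only once, the polling pipeline runs six times and the push pipeline runs twice, yet both end up with the same target data. The skipped runs are the no-op cycles a push model avoids.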

Once we adopted a function app per data pipeline, we deprecated our legacy WebJobs footprint, reducing our COGS spend 10x. This architectural refactoring reduced not only our Azure spend but also our carbon footprint by 10x, as we no longer spent wasteful cycles on no-ops or on refreshing targets with an unchanged dataset. This approach is consistent with the sustainability principle of energy proportionality, specifically “maximizing utilization levels” by using elastic, consumption-based compute. With the push data model we arrived at an architecture that is both more sustainable and simpler in design.

Scalable Challenges – Sustainable Wins

We encountered several data pipeline scalability challenges during this migration. The first was dropped messages: under high messaging load, our service bus endpoint was being throttled. Our solution was to leverage service bus autoscale for elastic messaging units. The default messaging units enable a single worker, while scaling out during peak demand supports multiple service bus workers.

The second challenge was network utilization. We reduced it further by employing batched publishers and subscribers rather than sending or receiving one service bus message at a time per function, so a single call can publish or accept many messages. This significantly reduced the chattiness of the function / service bus interface and improved data throughput.
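The batching idea can be sketched as a helper that groups outgoing messages so that each send carries as many messages as the limits allow. This is a simplified illustration, not the Service Bus SDK: `build_batches` is a hypothetical name, and `max_count` and `max_bytes` are assumed parameters (real limits depend on the service tier and should be taken from the service documentation).

```python
def build_batches(messages, max_count, max_bytes):
    """Group messages, in order, into batches within count and byte-size limits."""
    batches, current, current_bytes = [], [], 0
    for message in messages:
        size = len(message.encode("utf-8"))
        if size > max_bytes:
            raise ValueError("message exceeds the batch size limit")
        # Close the current batch if adding this message would break a limit.
        if current and (len(current) >= max_count or current_bytes + size > max_bytes):
            batches.append(current)
            current, current_bytes = [], 0
        current.append(message)
        current_bytes += size
    if current:
        batches.append(current)
    return batches


# Usage: ten small messages become three sends instead of ten.
messages = [f"msg-{i}" for i in range(10)]
batches = build_batches(messages, max_count=4, max_bytes=1000)
print(len(messages), "messages sent in", len(batches), "batches")
```

Each batch then maps to one network round trip, which is where the reduction in chattiness comes from.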

Your Journey

Tell us in the comments below how you are applying sustainable principles into your application architecture. Kudos to the Azure.com engineering team who helped make this progress towards sustainability possible!

Future Roadmap

Azure.com continues to drive towards a more sustainable architecture. Our next step is adopting .NET 5.0 for our content rendering pipeline, with the goal of increasing requests per second (RPS) per dedicated App Service node and reducing our compute footprint.
