We previously introduced observability in Semantic Kernel. For more background, see our previous blog post and the Semantic Kernel documentation on Microsoft Learn.

To summarize, observability is an essential part of your application stack, especially now that AI plays a significant role in so many applications. Large language models are inherently stochastic, so they do not always produce the same output from identical input. As an application developer, your team needs to be able to monitor the requests your application sends to these models and the responses it gets back. This lets you assess and optimize your application's performance during development and troubleshoot issues in production.

We are excited to announce that we have partnered with the Azure AI Foundry team to bring a better AI observability experience to Semantic Kernel.

We have upgraded Semantic Kernel to the latest Azure AI Foundry SDK. Combined with the SDK's new tracing capability, this gives you the latest features as well as telemetry data that follows the OpenTelemetry GenAI semantic conventions whenever you use an Azure AI Inference service or an Azure OpenAI endpoint.

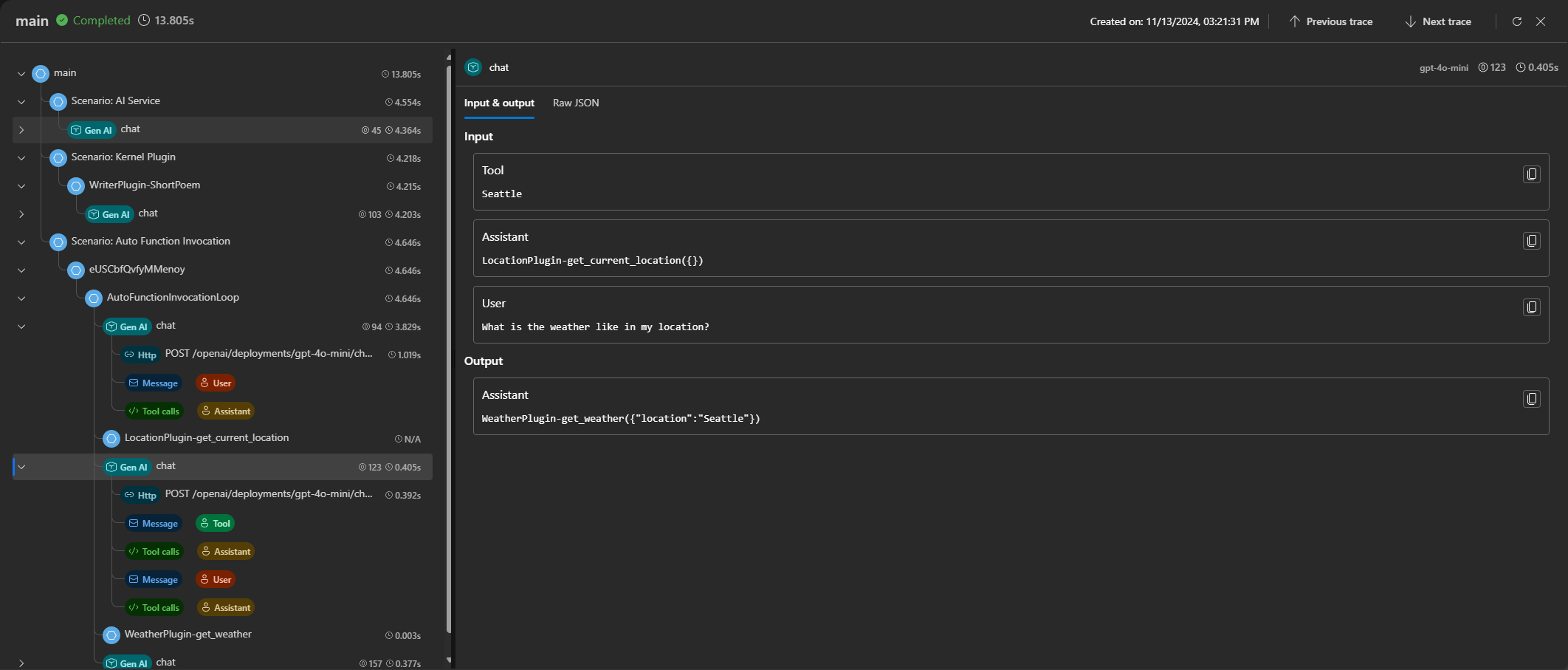
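To make "telemetry that follows the GenAI semantic conventions" more concrete, here is a minimal, standard-library-only Python sketch of the kind of attributes such a trace records for a single chat call. It does not use Semantic Kernel, the Azure AI Foundry SDK, or OpenTelemetry: the `Span` class and the `record_chat_call` and `total_tokens` helpers are hypothetical, and the token counts are made-up inputs. The attribute names are taken from the OpenTelemetry GenAI semantic conventions, which are still evolving, so exact names may differ between versions.

```python
from dataclasses import dataclass, field
from typing import List, Optional
import time
import uuid


@dataclass
class Span:
    """Hypothetical stand-in for an OpenTelemetry span; not a Semantic Kernel or OTel API."""
    name: str
    attributes: dict = field(default_factory=dict)
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    start_ns: int = field(default_factory=time.time_ns)
    end_ns: Optional[int] = None


def record_chat_call(system: str, model: str, input_tokens: int,
                     output_tokens: int, finish_reason: str) -> Span:
    """Hypothetical helper: describe one chat completion with GenAI semantic-convention attribute names."""
    # The conventions name chat spans "{gen_ai.operation.name} {gen_ai.request.model}".
    span = Span(name=f"chat {model}")
    span.attributes.update({
        "gen_ai.operation.name": "chat",
        "gen_ai.system": system,
        "gen_ai.request.model": model,
        "gen_ai.usage.input_tokens": input_tokens,
        "gen_ai.usage.output_tokens": output_tokens,
        "gen_ai.response.finish_reasons": [finish_reason],
    })
    span.end_ns = time.time_ns()
    return span


def total_tokens(spans: List[Span]) -> int:
    """Sum input and output tokens across spans, e.g. to track usage per scenario."""
    return sum(
        s.attributes["gen_ai.usage.input_tokens"] + s.attributes["gen_ai.usage.output_tokens"]
        for s in spans
    )


# Two calls with the same prompt (same input token count) can still return
# different completions, which shows up here as different output token counts.
spans = [
    record_chat_call("openai", "gpt-4o", input_tokens=120, output_tokens=45, finish_reason="stop"),
    record_chat_call("openai", "gpt-4o", input_tokens=120, output_tokens=52, finish_reason="stop"),
]
print(spans[0].name)        # chat gpt-4o
print(total_tokens(spans))  # 337
```

In a real application you would not build these records by hand; the instrumentation emits the spans and an OpenTelemetry exporter sends them to a tracing backend. The value of a shared attribute schema is that any backend can filter, aggregate, and compare model calls the same way.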

Another noteworthy feature is the new Tracing UI in the Azure AI Foundry portal (formerly Azure AI Studio). If you are developing your AI application with Azure AI Inference or Azure OpenAI, you get a comprehensive resource management, deployment, and monitoring experience, all integrated within the Azure platform.

We encourage you to visit our GitHub repository, where you will find a sample that runs various scenarios to generate telemetry data. For a detailed walkthrough of the sample, see the Semantic Kernel documentation on Microsoft Learn.

The Semantic Kernel team is dedicated to giving developers access to the latest advancements in the industry. We encourage you to get creative and build remarkable solutions with SK! If you have any questions or feedback, please reach out through our Semantic Kernel GitHub Discussions channel. We look forward to hearing from you! We would also love your support: if you've enjoyed using Semantic Kernel, give us a star on GitHub.
