Today we’re featuring a guest author, Akshay Kokane, who’s a Software Engineer at Microsoft within the Azure CxP team. He’s written an article we’re sharing below, focused on a Step-by-Step Guide to Building a Powerful AI Monitoring Dashboard with Semantic Kernel and Azure Monitor: Master TokenUsage Metrics and Custom Metrics using SK Filters. We’ll turn it over to Akshay to share more!

Semantic Kernel is one of the best AI frameworks for enterprise applications, and it ships with useful features out of the box. In this blog we will look at how you can integrate Semantic Kernel with Azure Monitor to meter your token usage. Semantic Kernel emits default metrics for token usage.

Additionally, we will explore Filters in Semantic Kernel and how they can be used to emit custom metrics.

Let’s first understand the types of token usage.

Types of Token Usage

- 📄 Prompt Token Usage: This refers to the number of tokens that make up the input prompt you provide to the model. For example, if you input the sentence “What is the capital of France?”, this sentence will be broken down into a series of tokens. The total number of these tokens is the prompt token usage.

- 💬 Completion Token Usage: This refers to the number of tokens generated by the model in response to your prompt. If the model responds with “The capital of France is Paris,” this response will also be broken down into tokens, and their total is the completion token usage.

- 🔢 Total Token Usage (Prompt + Completion): This is simply the sum of the prompt and completion token usage. It represents the total number of tokens used in the interaction, which is important for tracking and managing costs, as many LLMs charge based on the number of tokens processed.
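As a rough illustration of how these three numbers relate, the sketch below adds prompt and completion tokens and estimates a cost from them. The `TokenUsage` type and the per-1,000-token prices are hypothetical placeholders for this example; they are not part of Semantic Kernel and do not reflect any real price list.

```csharp
using System;

// Hypothetical helper type for illustration only; not part of Semantic Kernel.
public readonly record struct TokenUsage(int PromptTokens, int CompletionTokens)
{
    // Total token usage is the sum of prompt and completion tokens.
    public int TotalTokens => PromptTokens + CompletionTokens;

    // Estimates cost from caller-supplied prices per 1,000 tokens (placeholder values).
    public decimal EstimateCost(decimal promptPricePer1K, decimal completionPricePer1K) =>
        PromptTokens / 1000m * promptPricePer1K
        + CompletionTokens / 1000m * completionPricePer1K;
}

public static class TokenUsageDemo
{
    public static void Main()
    {
        var usage = new TokenUsage(PromptTokens: 1200, CompletionTokens: 300);
        Console.WriteLine($"Total tokens: {usage.TotalTokens}");
        // The prices below are made-up numbers; substitute the rates of your own deployment.
        Console.WriteLine($"Estimated cost: {usage.EstimateCost(0.50m, 1.50m)}");
    }
}
```

Prompt and completion tokens are kept separate here because providers often price them differently, which is also why it helps to chart them as separate metrics.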

Purpose of Plotting This Dashboard

- ⚙️ Optimization: If you’re building an application that uses an LLM, minimizing token usage can reduce costs and improve performance.

- 💡 Insights: Understanding the relationship between different prompts and token usage helps in refining the prompts for better, more concise outputs.

- 📊 Monitoring: Keeping track of token usage is crucial, especially when working within token limits imposed by the LLM service or when dealing with large volumes of requests.

📝 Step-by-Step Guide for Recording Token Usage Metrics Using Default Semantic Kernel Token Metrics and Azure Monitor

Step 1: Let’s create an Application Insights resource in Azure Monitor. You can use this link: https://portal.azure.com/#create/Microsoft.AppInsights

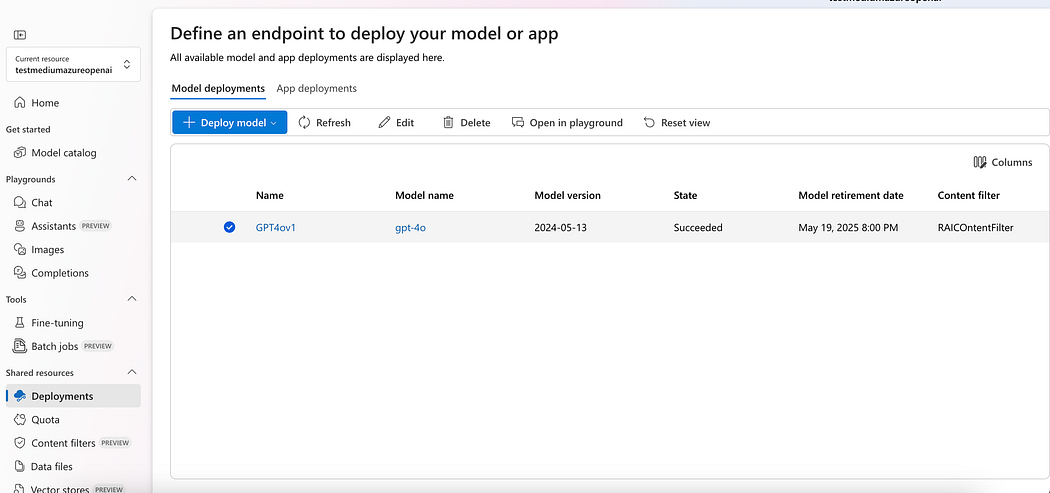

Step 2: Get your Azure OpenAI deployment ready. You can also use OpenAI. Want to learn the difference between Azure OpenAI and OpenAI, or how to create deployments in Azure OpenAI? Check out my previous blog: https://medium.com/gopenai/step-by-step-guide-to-creating-and-securing-azure-openai-instances-with-content-filters-061293f0a042

Step 3: Semantic Kernel records its metrics with the Meter class from the System.Diagnostics.Metrics namespace. In your Semantic Kernel .NET application, register an OpenTelemetry meter provider that listens to those meters and exports them to Azure Monitor. Use the connection string from the Application Insights resource you created in Step 1.

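using Azure.Monitor.OpenTelemetry.Exporter;

using OpenTelemetry;

using OpenTelemetry.Metrics;

// Export every meter whose name starts with "Microsoft.SemanticKernel" to Application Insights.

var meterProvider = Sdk.CreateMeterProviderBuilder()

.AddMeter("Microsoft.SemanticKernel*")

.AddAzureMonitorMetricExporter(options => options.ConnectionString = "InstrumentationKey=<COPY IT FROM YOUR RESOURCE>")

.Build();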

Step 4: Build a Kernel and invoke your first prompt

var builder = Kernel.CreateBuilder().AddAzureOpenAIChatCompletion(modelId, endpoint, apiKey);

Kernel kernel = builder.Build();

var response = await kernel.InvokePromptAsync("hello");

Step 5: Let’s try a summarization prompt

Step 6: Go to Application Insights -> Metrics to view token usage. If everything was set up correctly, you should see the graph.

Now you can pin these metrics to a dashboard. You can create a new one or use an existing dashboard. Additionally, you can pin the metrics to an Azure Managed Grafana dashboard, a managed offering of the open-source Grafana dashboard. Based on my experience, Azure Managed Grafana is very easy to use.

📝 Step-by-Step Guide for Recording Custom Metrics Using Semantic Kernel and Azure Monitor

Recent Semantic Kernel releases added filters. Filters allow developers to add custom logic that is executed before, during, or after a function is invoked. They provide a way to manage how the application behaves dynamically, based on certain conditions, ensuring security, efficiency, and proper error handling. Filters help prevent undesired actions, such as blocking malicious prompts to Large Language Models (LLMs) or restricting unnecessary information exposure to users.

Semantic Kernel offers three types of filters:

- 🛡️ Prompt Render Filters: These filters allow developers to modify prompts before they are sent to an LLM, ensuring that the content is safe or properly formatted.

- 🔄 Auto Function Invocation Filters: A new type of filter designed for scenarios where LLMs automatically invoke multiple functions. This filter provides more context and control over the sequence and execution of these functions.

- ⚙️ Function Invocation Filters: These filters allow developers to override default behavior or add additional logic around function execution. We can use one to export metrics after every function execution.

Now, let’s start implementing! 🚀

Step 1: Let’s create a simple plugin that processes the user prompt. If the Large Language Model’s (LLM) own knowledge is insufficient to answer the user’s question, Retrieval-Augmented Generation (RAG) is employed.

using System.ComponentModel;

using Microsoft.SemanticKernel;

public class Plugins {

private readonly Kernel Kernel;

private const string resume = @"# John Doe

Email: john.doe@example.com

Contact: (123) 456-7890

# Experience

## Software Engineer at TechCorp

- Developed and maintained web applications using JavaScript, React, and Node.js.

- Collaborated with cross-functional teams to define, design, and ship new features.

- Implemented RESTful APIs and integrated third-party services.

- Improved application performance, reducing load time by 30%.

## Junior Developer at CodeWorks

- Assisted in the development of web applications using HTML, CSS, and JavaScript.

- Participated in code reviews and contributed to team discussions on best practices.

- Wrote unit tests to ensure code quality and reliability.

- Provided technical support and troubleshooting for clients.

# Skills

- Programming Languages: JavaScript, Python, Java, C++

- Web Technologies: HTML, CSS, React, Node.js, Express.js

- Databases: MySQL, MongoDB

- Tools and Platforms: Git, Docker, AWS, Jenkins

- Other: Agile Methodologies, Test-Driven Development (TDD), Continuous Integration/Continuous Deployment (CI/CD)

# Certifications

- Certified Kubernetes Administrator (CKA)

- AWS Certified Solutions Architect – Associate

- Microsoft Certified: Azure Fundamentals

# Education

## Bachelor of Science in Computer Science

- University of ABC, 2016-2020

- Relevant coursework: Data Structures, Algorithms, Web Development, Database Systems

## High School Diploma

- Example High School, 2012-2016

- Graduated with honors";

public Plugins (Kernel kernel)

{

this.Kernel = kernel;

}

[KernelFunction, Description("Use this plugin function as entry point to any user question")]

public async Task<string> ProcessPrompt(string userprompt)

{

var classifyFunc = Kernel.CreateFunctionFromPrompt($@"Do you have knowledge to answer this question {userprompt}?

If yes, then reply with only ""true"" else reply ""false"". Don't add any text before or after bool value ", functionName: "classifyFunc");

var response = await classifyFunc.InvokeAsync(Kernel);

Console.WriteLine(response.ToString());

KernelArguments arg = new KernelArguments();

var renderedPrompt = userprompt;

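// Tolerate whitespace and casing differences in the model's reply.

if (response.ToString().Trim().Equals("false", StringComparison.OrdinalIgnoreCase))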

{

var document = resume; // AI Search/VectorDB can be used for fetching the relevant document

arg.Add("document", document);

// Build the RAG prompt once; appending with += would repeat the user's question.

renderedPrompt = "Using {{$document}} answer the following question: " + userprompt;

}

var answerTheQuestionFunc = Kernel.CreateFunctionFromPrompt(renderedPrompt, functionName: "answerTheQuestionFunc");

response = await answerTheQuestionFunc.InvokeAsync(Kernel, arg);

return response.ToString();

}

}

Don’t forget to register your plugin with the Kernel:

kernel.Plugins.AddFromObject(new Plugins(kernel));

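The flow looks like this: ProcessPrompt first asks the LLM whether it can answer the question on its own. If the reply is "false", the resume is added as the "document" argument and the question is answered using that context; otherwise the question is answered directly.

Step 2: Add a filter using IFunctionInvocationFilter. This filter emits a metric called “RagUsage”. If the context has an argument named “document”, RAG was used (UsedRag = true); if the argument is absent, RAG was not used (UsedRag = false).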

using System.Diagnostics.Metrics;

using Microsoft.SemanticKernel;

public class RAGUsageFilter : IFunctionInvocationFilter

{

// Create the meter and counter once; the meter name matches the "Microsoft.SemanticKernel*" pattern registered with the exporter.

private static readonly Meter s_meter = new("Microsoft.SemanticKernel", "1.0.0");

private static readonly Counter<int> s_ragCounter = s_meter.CreateCounter<int>("semantic_kernel.ragUsage");

public async Task OnFunctionInvocationAsync(FunctionInvocationContext context, Func<FunctionInvocationContext, Task> next)

{

// A "document" argument means retrieved context was injected, so RAG was used.

var usedRag = context.Arguments.ContainsName("document");

s_ragCounter.Add(1, new KeyValuePair<string, object?>("UsedRag", usedRag));

await next(context);

}

}
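Before wiring the exporter, it can help to confirm locally that a counter like the one in the filter above records the tag you expect. The following is a minimal sketch that uses only System.Diagnostics.Metrics: it creates a counter with the same meter and instrument names, attaches a MeterListener, and tallies measurements by their UsedRag tag. The `RagMetricLocalCheck` class and `RecordAndCollect` method are hypothetical names for this example and are not part of Semantic Kernel or Azure Monitor.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics.Metrics;

// Hypothetical local check; names are illustrative only.
public static class RagMetricLocalCheck
{
    // Records one measurement per flag and returns how often each UsedRag value was observed.
    public static Dictionary<bool, int> RecordAndCollect(IEnumerable<bool> usedRagFlags)
    {
        var counts = new Dictionary<bool, int> { [true] = 0, [false] = 0 };

        // Same meter and instrument names as the filter in this post.
        using var meter = new Meter("Microsoft.SemanticKernel", "1.0.0");
        var ragCounter = meter.CreateCounter<int>("semantic_kernel.ragUsage");

        using var listener = new MeterListener();
        listener.InstrumentPublished = (instrument, l) =>
        {
            // Subscribe only to the counter created above.
            if (ReferenceEquals(instrument, ragCounter)) l.EnableMeasurementEvents(instrument);
        };
        listener.SetMeasurementEventCallback<int>((instrument, value, tags, state) =>
        {
            // Tally each measurement under the value of its UsedRag tag.
            foreach (var tag in tags)
            {
                if (tag.Key == "UsedRag" && tag.Value is bool usedRag) counts[usedRag] += value;
            }
        });
        listener.Start();

        foreach (var flag in usedRagFlags)
        {
            ragCounter.Add(1, new KeyValuePair<string, object?>("UsedRag", flag));
        }

        return counts;
    }

    public static void Main()
    {
        var counts = RecordAndCollect(new[] { true, false, true });
        Console.WriteLine($"UsedRag=true: {counts[true]}, UsedRag=false: {counts[false]}");
    }
}
```

The listener callback runs synchronously when the counter is incremented, so the tallies are complete as soon as the loop finishes. In the real application the OpenTelemetry meter provider plays the listener's role and forwards the same measurements to Application Insights.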

Then update the kernel builder to register this filter as a singleton IFunctionInvocationFilter:

var builder = Kernel.CreateBuilder()

.AddAzureOpenAIChatCompletion(modelId, endpoint, apiKey);

builder.Services.AddSingleton<IFunctionInvocationFilter, RAGUsageFilter>();

Kernel kernel = builder.Build();

Step 3: Enable automatic function calling. I am going to use OpenAI function calling to automatically invoke the plugin function.

OpenAIPromptExecutionSettings openAIPromptExecutionSettings = new()

{

ToolCallBehavior = ToolCallBehavior.AutoInvokeKernelFunctions

};

KernelArguments args = new KernelArguments();

args.ExecutionSettings = new Dictionary<string, PromptExecutionSettings>() {

{PromptExecutionSettings.DefaultServiceId, openAIPromptExecutionSettings}

};

Now let’s invoke the prompt and record whether the user’s question requires RAG.

// RAG needed, as the question is about my resume

var response = await kernel.InvokePromptAsync("Tell me more about my resume", args);

response.ToString()

// RAG not needed, as the question is general question

var response = await kernel.InvokePromptAsync("Tell me story about lion", args);

response.ToString()

Note: I am using a .NET Interactive notebook, but the code above can be placed inside a loop that takes input from users to give a real chat-like experience.
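One possible shape for such a loop is sketched below. To keep the example runnable without a model, the call to the kernel is abstracted behind an `ask` delegate; the `ChatLoop` class, the `RunAsync` method, and the echo responder are hypothetical names introduced for this sketch.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical chat loop; the model call is injected through the `ask` delegate.
public static class ChatLoop
{
    // Reads questions line by line until an empty line or end of input,
    // forwards each one to `ask`, writes the answer, and returns the number of turns handled.
    public static async Task<int> RunAsync(TextReader input, TextWriter output, Func<string, Task<string>> ask)
    {
        var turns = 0;
        while (true)
        {
            await output.WriteAsync("User > ");
            var question = await input.ReadLineAsync();
            if (string.IsNullOrWhiteSpace(question)) break;

            var answer = await ask(question);
            await output.WriteLineAsync($"Assistant > {answer}");
            turns++;
        }
        return turns;
    }

    public static async Task Main()
    {
        // Stand-in responder so the sketch runs without a model.
        await RunAsync(Console.In, Console.Out, q => Task.FromResult($"(echo) {q}"));
    }
}
```

With the kernel and arguments built earlier in this post, the delegate could be `async q => (await kernel.InvokePromptAsync(q, args)).ToString()`, so every turn passes through the registered filter and emits the metric.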

Finally we can get this dashboard
