Over the past several weeks, the Semantic Kernel team has been hard at work preparing for the v1.0.0 release at the end of the calendar year. As part of this change, we wanted to complete any remaining breaking changes so developers could have a stable API moving forward. If you are interested in seeing what these changes are, you can preview them using our nightly builds.

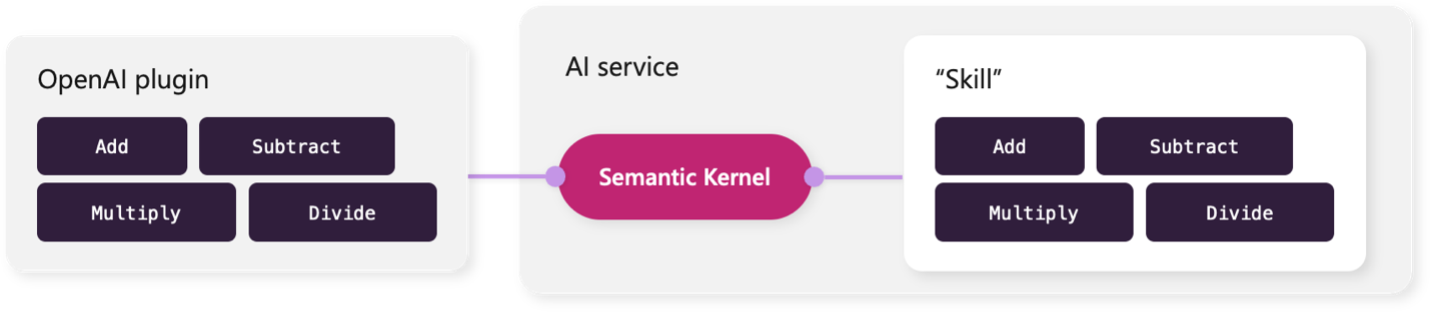

We will publish a blog post covering all of the significant changes next week, when we ship the first release candidate (RC). Before then, we wanted to clarify the biggest change we’re making: renaming “skills” to plugins. We’ve done this to better align the internal workings of Semantic Kernel with the plugin specification developed by OpenAI. We believe this will reduce the number of concepts developers need to learn and make Semantic Kernel even simpler to use moving forward.

What are “plugins” today?

Thanks to the spec defined by OpenAI, any developer can take their existing APIs and describe them using both an OpenAPI specification and an ai-plugin.json file so that ChatGPT can intelligently perform actions on behalf of a user. A common (and powerful) example is the Bing plugin, which lets ChatGPT answer questions using current information from the world wide web.

Because OpenAI plugins simply describe the behavior of APIs served up on HTTP endpoints, plugin authors can allow their plugins to be used by any other AI service. In the future, this will include Teams Business Chat and Bing Chat. This is powerful because it means you as a developer can write code once and allow your customers to leverage your plugin no matter which agent they use.

Today, you can already import these plugins into Semantic Kernel.

If you’re building your own agent with Semantic Kernel, we also make it easy to import plugins defined with an OpenAI spec so your planners can leverage the functions when auto-generating plans for users. Below is a code sample of how you would previously import an AI plugin.

// Add the math plugin using the plugin manifest URL

const string pluginManifestUrl = "http://localhost:7071/.well-known/ai-plugin.json";

var mathPlugin = await kernel.ImportAIPluginAsync("MathPlugin", new Uri(pluginManifestUrl));

“Skills” in Semantic Kernel aren’t that much different…

What have traditionally been called “skills” in Semantic Kernel are very similar to OpenAI plugins. The two share the following traits:

| Similarity | Skills | Plugins |
| --- | --- | --- |
| Both have a collection of actions that can be performed by an agent. | SKFunctions | HTTP endpoints |
| Both semantically describe what the action is, when it should be used, and how to use. | SKFunction decorators | OpenAPI route specification |
| Both can be imported into the kernel so they can be invoked and used in plans. | ImportSkill() | ImportAIPluginAsync() |

The only difference is where they’re run: locally or remotely.

Whereas OpenAI plugins are remote services that you can call with HTTP requests, “skills” are native or semantic functions that are called by the same process that’s running your kernel.

There are pros and cons to both approaches. By running “skills” in the same process, you get better performance and can more easily debug your code if something goes wrong. Unfortunately, “skills” are difficult to share with others and cannot be seamlessly imported into agents like ChatGPT, Teams Business Chat, or Bing Chat.

This makes it difficult to choose: build a plugin or a “skill”?

Because of the subtle differences, developers have a hard choice. Do you build a “skill” to get the efficiency gains? Or spin up a new service that your customers can use in their own AI applications?

This choice is also hard because it’s not easy today to convert between the two types. Not only would you need to refactor your code to express each SKFunction as an HTTP endpoint, but you’d also need to retest your code with Semantic Kernel because planners today treat “skills” and plugins subtly differently.

This is changing; “skills” will become plugins.

Instead of forcing developers like yourself to make a choice, we want to remove the choice altogether. In the future, you won’t build “skills” or OpenAI plugins; you’ll just build plugins.

The future version of “skills” (i.e., plugins) will have the following benefits:

- They will be imported the same way as OpenAPI plugins.

- Authentication will behave the same as OpenAPI plugins.

- They will have all the same metadata as OpenAPI plugins.

- The planner will treat them the same way as OpenAPI plugins.

- Exposing them as HTTP endpoints with an OpenAPI spec will be automated.

With these changes, what few differences exist between “skills” and OpenAI plugins will disappear. They will become functionally identical and the only “choice” you as a developer will need to make is how you share them and consume them.

To begin this journey, we’re updating our APIs.

Getting to the point where native and semantic functions are functionally identical to OpenAI plugins will take some time, so the first incremental step we will be taking is updating our APIs for v1.0.0 so that we have a solid foundation moving forward (and no future breaking changes).

This most profoundly changes the import methods for functions added to the kernel. Instead of importing “skills,” the import methods will be named for what they now bring in: plugins.

| Before | After |
| --- | --- |
| ImportSkill() | ImportPluginFromType() |
| ImportSemanticSkillFromDirectory() | ImportPluginFromPromptDirectory() |
| ImportGrpcSkillFromDirectory() | ImportPluginFromGrpcDirectory() |
| ImportAIPluginAsync() | ImportPluginFromOpenApiAsync() |
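To make the rename concrete, here is a minimal sketch of importing a native class with the new method name and then calling one of its functions. It assumes the API surface of the final v1.0.0 package (Kernel.CreateBuilder(), the [KernelFunction] attribute, KernelArguments); nightly builds may differ. MathPlugin and its Add function are hypothetical names made up for this illustration.

```csharp
using System;
using System.ComponentModel;
using Microsoft.SemanticKernel;

// Build a kernel. No AI service is registered because this sketch only
// imports and calls a native function.
Kernel kernel = Kernel.CreateBuilder().Build();

// "After" column of the table: import a class as a plugin
// (this was ImportSkill() before the rename).
kernel.ImportPluginFromType<MathPlugin>("MathPlugin");

// Look the function up by plugin name and function name, then invoke it.
KernelFunction add = kernel.Plugins["MathPlugin"]["Add"];
FunctionResult result = await kernel.InvokeAsync(add, new KernelArguments
{
    ["a"] = 2,
    ["b"] = 3,
});
Console.WriteLine(result.GetValue<int>());

// Hypothetical plugin class, defined only for this example.
public sealed class MathPlugin
{
    [KernelFunction, Description("Adds two integers.")]
    public int Add(
        [Description("The first number")] int a,
        [Description("The second number")] int b) => a + b;
}
```

The descriptions on the function and its parameters play the same role as the route descriptions in an OpenAPI specification: they tell a planner what the action does and how to call it.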

After the functions have been imported, you’ll then be able to find them in the Plugins property of the kernel. For example, to find a function by its name, you index into the Plugins collection with the plugin name and then the function name.

KernelFunction function = kernel.Plugins["searchPlugin"]["search"];

Other changes in the SDK.

In addition to the import method updates, there are a few other changes that have occurred within the SDK that you should be aware of:

- Many of the configuration objects (e.g., OpenApiSkillExecutionParameters) have been renamed so that “Skill” is replaced with “Function”.

- Out-of-the-box “skills” (e.g., Core Skills, MS Graph Skills, Document Skills, etc.) have been renamed to plugins.

- NuGet packages with “skill” in the name will be deprecated and replaced with versions with “plugin” in the name instead.

Keep an eye out for other changes next week.

Besides renaming skills to plugins, we’re also excited to rip the band-aid off for other breaking changes that we believe will improve the usability of the SDK. These include (but are not limited to):

- Standardizing the interface for planners.

- Supporting generic LLM request settings.

- Making ContextVariables a proper dictionary.

- Replacing the FunctionsView with a List.

- And more!

If you have questions about the latest round of changes or need help addressing any breaking changes, feel free to reach out to us on Discord or by creating an issue on GitHub.
