Following on from our blog post a couple of months ago, Microsoft's Agentic AI Frameworks: AutoGen and Semantic Kernel, Microsoft's agentic AI story continues to evolve at a steady pace. Both Azure AI Foundry's Semantic Kernel and AI Frontiers' AutoGen are designed to empower developers to build advanced multi-agent systems. The AI Frontiers team is pushing the boundaries of multi-agent approaches, building new agentic patterns and a growing library of purpose-built agents such as Magentic-One. Meanwhile, the Semantic Kernel team builds on years of enterprise expertise to help developers build agents that integrate into enterprise applications.

The two teams are now working together to converge these frameworks on a unified runtime and a shared set of design principles. The goal is to make it easier to experiment with state-of-the-art agentic patterns and then move those experiments into production-grade, enterprise-supported solutions. This blog post outlines the technical details behind that convergence, focusing on common runtime architectures and agent framework enhancements in both AutoGen and Semantic Kernel.

In our recent roadmap post, Semantic Kernel Roadmap H1 2025: Accelerating Agents, Processes, and Integration, we outlined three ways in which AutoGen and Semantic Kernel are integrating:

- Converging Agent Runtimes: We are actively working together on harmonizing the core components between AutoGen and Semantic Kernel. This convergence will simplify development workflows and enhance the interoperability of multi-agent systems. We are planning a blog post with more technical details on this in the coming week.

- Hosting AutoGen agents in Semantic Kernel: You will soon have the flexibility to host AutoGen agents within the Semantic Kernel ecosystem. This means that if you’re already working with AutoGen, you can seamlessly migrate and manage your agents in Semantic Kernel.

- AutoGen integrates with Semantic Kernel: AutoGen can now leverage the powerful capabilities of Semantic Kernel, allowing developers to build on their existing investment in Semantic Kernel. This includes an AutoGen adapter for Semantic Kernel's vast AI connector library as well as a Semantic Kernel-based AutoGen assistant agent.

Let’s dive into how we’re doing this…

Shared Runtime for AutoGen and Semantic Kernel

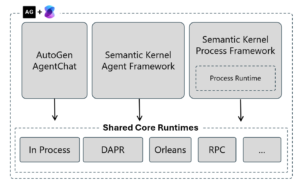

A central design goal of this convergence effort is the adoption of a common runtime and interfaces. By aligning the execution model, both frameworks can leverage shared abstractions for agent orchestration and process management.

Key points include:

- Shared Runtime Repository: A dedicated repository (public soon!) is being used to develop packages that work across both frameworks. This initiative consolidates the runtime abstractions into a single package consumed by both AutoGen and Semantic Kernel.

- Hosting Support: The shared runtime can be hosted in several ways: in-process, for development or simple agentic systems, or on top of a distributed systems framework such as Dapr or Orleans.
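To make the in-process hosting option a little more concrete, here is a deliberately tiny, stdlib-only sketch of what an in-process agent runtime does. Agents are registered by type with a factory, messages are addressed to an agent ID (a type plus a key, loosely mirroring the `AgentId("ContosoAgent", "default")` used in the AutoGen example later in this post), and the runtime lazily creates one instance per key. The names `ToyInProcessRuntime`, `CounterAgent`, and `on_message` are hypothetical. This is not the API of the shared runtime package, only an illustration under those assumptions.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class AgentId:
    """Addresses one agent instance: a registered type plus an instance key."""
    type: str
    key: str


class ToyInProcessRuntime:
    """Hypothetical, simplified in-process runtime (not the shared runtime package)."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[[], Any]] = {}
        self._instances: Dict[AgentId, Any] = {}

    def register(self, agent_type: str, factory: Callable[[], Any]) -> None:
        # Agents are registered by type; instances are created lazily per key.
        self._factories[agent_type] = factory

    def _get_agent(self, agent_id: AgentId) -> Any:
        if agent_id not in self._instances:
            self._instances[agent_id] = self._factories[agent_id.type]()
        return self._instances[agent_id]

    async def send_message(self, message: Any, recipient: AgentId) -> Any:
        # In-process delivery is just a direct await on the target agent.
        agent = self._get_agent(recipient)
        return await agent.on_message(message)


class CounterAgent:
    """Hypothetical agent that counts the messages it has received."""

    def __init__(self) -> None:
        self.count = 0

    async def on_message(self, message: str) -> str:
        self.count += 1
        return f"{message} (message #{self.count})"


async def main() -> List[str]:
    runtime = ToyInProcessRuntime()
    runtime.register("counter", CounterAgent)
    return [
        await runtime.send_message("hello", AgentId("counter", "default")),
        await runtime.send_message("again", AgentId("counter", "default")),
        # A different key gets its own, fresh instance.
        await runtime.send_message("hello", AgentId("counter", "other")),
    ]


if __name__ == "__main__":
    for reply in asyncio.run(main()):
        print(reply)
```

A distributed host would presumably keep a similar register/send surface but replace the direct `await` with message serialization and routing across processes or machines, which is the kind of work frameworks like Dapr or Orleans take on.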

Hosting AutoGen Agents within Semantic Kernel

Semantic Kernel is adding connectors for agents from other services and libraries (such as OpenAI Assistant agents and Azure AI Agents), and is now extending this to AutoGen agents, starting with AutoGen v0.2 agents. This provides a migration path from AutoGen v0.2 agents into Semantic Kernel. It works as of Semantic Kernel Python 1.20.0 (and take a look at our sample).

```python
import asyncio
import os

from autogen import ConversableAgent

from semantic_kernel.agents.autogen.autogen_conversable_agent import AutoGenConversableAgent


async def main():
    cathy = ConversableAgent(
        "cathy",
        system_message="Your name is Cathy and you are a part of a duo of comedians.",
        llm_config={
            "config_list": [
                {
                    "model": "gpt-4o-mini",
                    "temperature": 0.9,
                    "api_key": os.environ.get("OPENAI_API_KEY"),
                }
            ]
        },
        human_input_mode="NEVER",  # Never ask for human input.
    )

    joe = ConversableAgent(
        "joe",
        system_message="Your name is Joe and you are a part of a duo of comedians.",
        llm_config={
            "config_list": [
                {
                    "model": "gpt-4",
                    "temperature": 0.7,
                    "api_key": os.environ.get("OPENAI_API_KEY"),
                }
            ]
        },
        human_input_mode="NEVER",  # Never ask for human input.
    )

    # Create the Semantic Kernel AutoGenAgent
    autogen_agent = AutoGenConversableAgent(conversable_agent=cathy)

    async for content in autogen_agent.invoke(
        recipient=joe,
        message="Tell me a joke about NVDA and TESLA stock prices.",
        max_turns=3,
    ):
        print(f"# {content.role} - {content.name or '*'}: '{content.content}'")


if __name__ == "__main__":
    asyncio.run(main())
```
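The wrapper above exposes AutoGen's two-agent chat through Semantic Kernel's `invoke` async iterator. As a rough illustration of that adapter pattern only (not how `AutoGenConversableAgent` is actually implemented), the stdlib sketch below wraps a hypothetical synchronous `ScriptedAgent` so it can be consumed with `async for`. `Message`, `ScriptedAgent`, and `AdaptedAgent` are made-up names, and the sketch assumes one turn means a round trip: the wrapped agent speaks, then the recipient answers.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator, List, Optional


@dataclass
class Message:
    """Minimal stand-in for a chat message: role, sender name, text."""
    role: str
    name: Optional[str]
    content: str


class ScriptedAgent:
    """Hypothetical 'foreign' agent with a synchronous reply method."""

    def __init__(self, name: str, lines: List[str]) -> None:
        self.name = name
        self._lines = iter(lines)

    def generate_reply(self, incoming: str) -> str:
        # A real agent would call a model with `incoming`; this one follows a script.
        return next(self._lines, "(no more lines)")


class AdaptedAgent:
    """Hypothetical adapter exposing a ScriptedAgent through an async iterator."""

    def __init__(self, inner: ScriptedAgent) -> None:
        self.inner = inner

    async def invoke(
        self, recipient: ScriptedAgent, message: str, max_turns: int
    ) -> AsyncIterator[Message]:
        outgoing, reply = message, ""
        for turn in range(max_turns):
            if turn > 0:
                # After the opening message, the wrapped agent answers the last reply.
                outgoing = self.inner.generate_reply(reply)
            yield Message("assistant", self.inner.name, outgoing)
            reply = recipient.generate_reply(outgoing)
            await asyncio.sleep(0)  # Stand-in for an awaited model call.
            yield Message("assistant", recipient.name, reply)


async def main() -> List[Message]:
    cathy = ScriptedAgent("cathy", ["Here's another one...", "Last one, I promise."])
    joe = ScriptedAgent("joe", ["Ha!", "Groan.", "Okay, that was good."])
    agent = AdaptedAgent(cathy)
    return [m async for m in agent.invoke(recipient=joe, message="Tell me a joke.", max_turns=3)]


if __name__ == "__main__":
    for content in asyncio.run(main()):
        print(f"# {content.role} - {content.name or '*'}: '{content.content}'")
```

The point of the adapter is that callers only see an async stream of messages, so the wrapped agent's own calling convention (synchronous, callback-based, or otherwise) stays an internal detail.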

AutoGen integrates with Semantic Kernel

AutoGen can now leverage Semantic Kernel connectors to expand its functionality. The following example uses Semantic Kernel's Anthropic AI connector as a model client in AutoGen, via AutoGen's `SKChatCompletionAdapter`.

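```python
# AutoGen (0.4) using a Semantic Kernel AI connector
import asyncio
import os

from autogen_core import AgentId, SingleThreadedAgentRuntime
from autogen_ext.models.semantic_kernel import SKChatCompletionAdapter
from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.anthropic import (
    AnthropicChatCompletion,
    AnthropicChatPromptExecutionSettings,
)
from semantic_kernel.filters import FilterTypes, PromptRenderContext

import business_logic  # Sample-specific module (ContosoAgent, EmployeeMessage, helpers); not shown


async def main():
    anthropic_client = AnthropicChatCompletion(
        ai_model_id="claude-3-5-sonnet-20241022",
        api_key=os.environ["ANTHROPIC_API_KEY"],
        service_id="my-service-id",  # Optional; for targeting specific services within Semantic Kernel
    )
    settings = AnthropicChatPromptExecutionSettings(
        temperature=0.2,
    )

    sk_kernel = Kernel()

    # Runs around prompt rendering: render first, then scrub and vet the result.
    @sk_kernel.filter(FilterTypes.PROMPT_RENDERING)
    async def prompt_rendering_filter(context: PromptRenderContext, next):
        await next(context)
        context = await business_logic.remove_sensitive_data(context)
        rejected, reason = await business_logic.check_prompt(context)
        if rejected:
            raise Exception("Prompt rejected: " + reason)

    # Expose the Semantic Kernel connector (and kernel filters) as an AutoGen model client.
    sk_client = SKChatCompletionAdapter(
        anthropic_client, kernel=sk_kernel, prompt_settings=settings
    )

    # Create a local embedded runtime.
    runtime = SingleThreadedAgentRuntime()
    # Agent registration is a coroutine in AutoGen 0.4, so it must be awaited.
    await business_logic.ContosoAgent.register(
        runtime, "ContosoAgent", lambda: business_logic.ContosoAgent(sk_client)
    )

    try:
        runtime.start()
        await runtime.send_message(
            business_logic.EmployeeMessage("Who is Spartacus?"),
            AgentId("ContosoAgent", "default"),
        )
        await runtime.stop_when_idle()
    except Exception as e:
        print(e)


if __name__ == "__main__":
    asyncio.run(main())
```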
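The `business_logic` helpers in the snippet above are sample-specific and not shown. To illustrate the filter shape itself (await `next` to render the prompt, then rewrite or reject the result), here is a stdlib-only sketch of a middleware-style pipeline. `RenderContext`, `build_pipeline`, `redact_and_check`, the e-mail regex, and the blocked-term list are all hypothetical stand-ins. This is not Semantic Kernel's filter machinery, just the same idea in miniature.

```python
import asyncio
import re
from dataclasses import dataclass
from typing import Awaitable, Callable, Dict, List, Optional


@dataclass
class RenderContext:
    """Hypothetical stand-in for a prompt-render context."""
    template: str
    variables: Dict[str, str]
    rendered_prompt: Optional[str] = None


Next = Callable[[RenderContext], Awaitable[None]]
Filter = Callable[[RenderContext, Next], Awaitable[None]]


async def render(context: RenderContext) -> None:
    # Innermost step: fill the template with the variables.
    context.rendered_prompt = context.template.format(**context.variables)


def build_pipeline(filters: List[Filter]) -> Next:
    """Wrap `render` so that filters[0] runs outermost."""
    handler: Next = render
    for flt in reversed(filters):
        # Default arguments capture the current filter and inner handler.
        async def wrapped(ctx: RenderContext, flt: Filter = flt, inner: Next = handler) -> None:
            await flt(ctx, inner)

        handler = wrapped
    return handler


EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")  # Hypothetical "sensitive data" pattern
BLOCKED_TERMS = ("password",)  # Hypothetical rejection rule


async def redact_and_check(context: RenderContext, next: Next) -> None:
    await next(context)  # Render first, then post-process the result.
    prompt = EMAIL.sub("[REDACTED]", context.rendered_prompt or "")
    for term in BLOCKED_TERMS:
        if term in prompt.lower():
            raise ValueError(f"Prompt rejected: contains '{term}'")
    context.rendered_prompt = prompt


async def main() -> Optional[str]:
    pipeline = build_pipeline([redact_and_check])
    ctx = RenderContext(
        "Employee {name} ({email}) asks: {question}",
        {"name": "Ann", "email": "ann@contoso.com", "question": "Who is Spartacus?"},
    )
    await pipeline(ctx)
    return ctx.rendered_prompt


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Running it prints `Employee Ann ([REDACTED]) asks: Who is Spartacus?`, while a prompt containing "password" raises `ValueError` before any model would be called. Putting the checks after `await next(context)` is what lets the filter see the fully rendered prompt rather than the raw template.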

By bringing together runtimes, graduating core agent functionalities, and designing for distributed, event-based interactions, the Semantic Kernel and AI Frontiers teams are laying the groundwork for a future where developers can experiment freely with innovative agent patterns—and then smoothly transition those ideas into scalable, supported applications.

You can help! Now is the time to try this functionality out and give us feedback.

The AutoGen team is on Discord, and you can check out the AutoGen issue tracker on GitHub. The Semantic Kernel team can be found on the Semantic Kernel GitHub Discussions channel, or join us every week for our Community Call.

We look forward to hearing from you. Happy coding!
