Introduction

With the launch of the Azure AI Inference SDK for the Azure AI Studio Model Catalog, we are happy to announce that a dedicated Azure AI Inference connector for Semantic Kernel is now available as well.

The connector is built on top of the Azure AI Inference SDK and gives your Semantic Kernel projects easy access to inference against the wide range of models in the Azure AI Model Catalog.

What is the Azure AI Model Catalog?

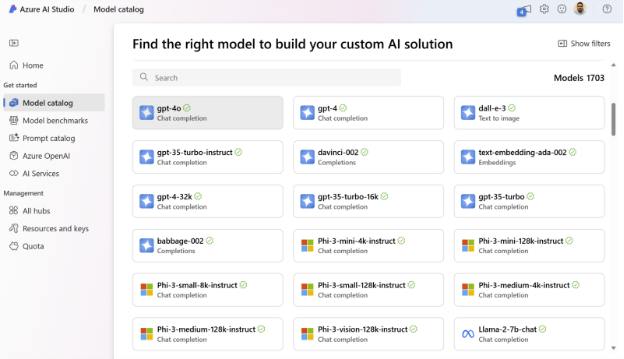

The Model Catalog in Azure AI Studio is a one-stop shop for exploring and deploying AI models.

Models from the catalog can be deployed to Managed Compute or as a Serverless API.

Some key features include:

- Model Availability: The model catalog features a diverse collection of models from providers such as Microsoft, Azure OpenAI, Mistral, Meta, and Cohere, so you can find a model that fits your requirements.

- Easy to deploy: Serverless API deployments remove the complexity of hosting and provisioning the hardware needed to run cutting-edge models. When you deploy a model as a serverless API, you don’t need quota to host it, and you are billed per token.

- Responsible AI Built-In: Safety is a priority. Language models from the catalog come with default configurations of Azure AI Content Safety moderation filters, which detect harmful content.

For more details, see the Azure AI Model Catalog documentation.

Get Started

- Deploy a model like Phi-3. For more details, see the Azure AI Model Catalog deployment documentation.

- Install the Microsoft.SemanticKernel.Connectors.AzureAIInference NuGet package in your existing project.

Add the following code to your application to start sending requests to your deployed model. Replace the placeholder endpoint and key with the values provided for your deployment.

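```csharp
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.AzureAIInference;

// Create the chat completion service for your deployed model.
// Replace both placeholders with the values from your deployment.
var chatService = new AzureAIInferenceChatCompletionService(
    endpoint: new Uri("YOUR-MODEL-ENDPOINT"),
    apiKey: "YOUR-MODEL-API-KEY");

// Start the conversation with a system prompt, then add the user's message.
var chatHistory = new ChatHistory("You are a helpful assistant that knows about AI.");
chatHistory.AddUserMessage("Hi, I'm looking for book suggestions");

// Send the conversation to the model and print its reply.
var reply = await chatService.GetChatMessageContentAsync(chatHistory);
Console.WriteLine(reply.Content);
```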
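The model only sees what is in the ChatHistory you pass it, so a follow-up question needs the earlier reply appended to the history first. The sketch below assumes the same constructor call shown above and reads the endpoint and key from environment variables instead of hard-coding them; the variable names AZURE_AI_INFERENCE_ENDPOINT and AZURE_AI_INFERENCE_API_KEY are hypothetical, so adapt them to your own configuration.

```csharp
using System;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.AzureAIInference;

// Hypothetical environment variable names; use whatever your configuration provides.
string? endpoint = Environment.GetEnvironmentVariable("AZURE_AI_INFERENCE_ENDPOINT");
string? apiKey = Environment.GetEnvironmentVariable("AZURE_AI_INFERENCE_API_KEY");

var chatHistory = new ChatHistory("You are a helpful assistant that knows about AI.");
chatHistory.AddUserMessage("Hi, I'm looking for book suggestions");

if (endpoint is null || apiKey is null)
{
    // Without credentials, skip the network calls entirely.
    Console.WriteLine("Set AZURE_AI_INFERENCE_ENDPOINT and AZURE_AI_INFERENCE_API_KEY to call the model.");
}
else
{
    var chatService = new AzureAIInferenceChatCompletionService(
        endpoint: new Uri(endpoint),
        apiKey: apiKey);

    // First turn: get the reply and append it so the model sees it on the next turn.
    var reply = await chatService.GetChatMessageContentAsync(chatHistory);
    chatHistory.Add(reply);

    // Second turn: the follow-up is answered with the whole history as context.
    chatHistory.AddUserMessage("Which of those would suit a beginner?");
    var followUp = await chatService.GetChatMessageContentAsync(chatHistory);
    chatHistory.Add(followUp);
    Console.WriteLine(followUp.Content);
}
```

After both turns the history holds five messages (system, user, assistant, user, assistant), which is what lets the second answer refer back to the first.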

For more details, see the Azure AI Inference Samples.
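For chat UIs it often helps to show the answer as it is generated. Semantic Kernel's IChatCompletionService also exposes GetStreamingChatMessageContentsAsync, and a minimal sketch of using it with this connector follows. It assumes the constructor call shown earlier in this post, and the environment variable names AZURE_AI_INFERENCE_ENDPOINT and AZURE_AI_INFERENCE_API_KEY are hypothetical.

```csharp
using System;
using System.Text;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.AzureAIInference;

// Hypothetical environment variable names; adapt them to your own configuration.
string? endpoint = Environment.GetEnvironmentVariable("AZURE_AI_INFERENCE_ENDPOINT");
string? apiKey = Environment.GetEnvironmentVariable("AZURE_AI_INFERENCE_API_KEY");

var chatHistory = new ChatHistory("You are a helpful assistant that knows about AI.");
chatHistory.AddUserMessage("Hi, I'm looking for book suggestions");

// Accumulates the streamed chunks into the full reply text.
var answer = new StringBuilder();

if (endpoint is not null && apiKey is not null)
{
    var chatService = new AzureAIInferenceChatCompletionService(
        endpoint: new Uri(endpoint),
        apiKey: apiKey);

    // Print each chunk as it arrives instead of waiting for the whole reply.
    await foreach (var chunk in chatService.GetStreamingChatMessageContentsAsync(chatHistory))
    {
        Console.Write(chunk.Content);
        answer.Append(chunk.Content);
    }
    Console.WriteLine();

    // Store the assembled reply so later turns can build on it.
    chatHistory.AddAssistantMessage(answer.ToString());
}
else
{
    Console.WriteLine("Set AZURE_AI_INFERENCE_ENDPOINT and AZURE_AI_INFERENCE_API_KEY to stream a reply.");
}
```

Streamed chunks are not added to the history automatically, which is why the sketch rebuilds the full text and appends it as an assistant message.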

Conclusion

We’re excited to see what you build! Try out the new Azure AI Inference connector and give us your feedback. If you have any questions, please reach out through our Semantic Kernel GitHub Discussion Channel. We look forward to hearing from you! We would also love your support: if you’ve enjoyed using Semantic Kernel, give us a star on GitHub.
