GUEST POST: Semantic Kernel and Weaviate: Orchestrating interactions around LLMs with long-term memory

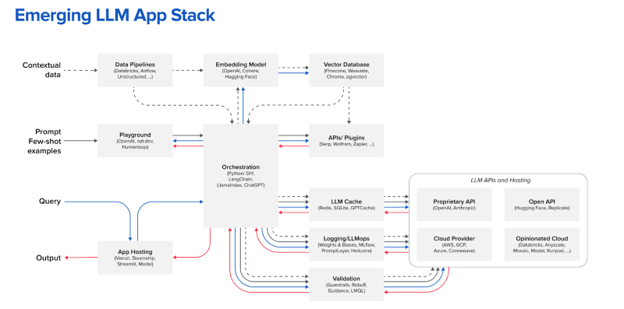

The Emerging LLM Stack

In a recent interview, the co-founder of Cohere stated – “For the first time in the history of our planet, we have machines that we’ve created that can use our language.” A bold statement indeed, but it couldn’t be more accurate and we’ve all witnessed the incredible power of said machines. It would be an understatement to say that large language models (LLMs) have taken the world by storm. Every business is asking how it can leverage these tools to further its competitive advantage, from increasing employee productivity to improving customer engagement. These tools are enabling us to accelerate the pace at which we learn, communicate, and do.

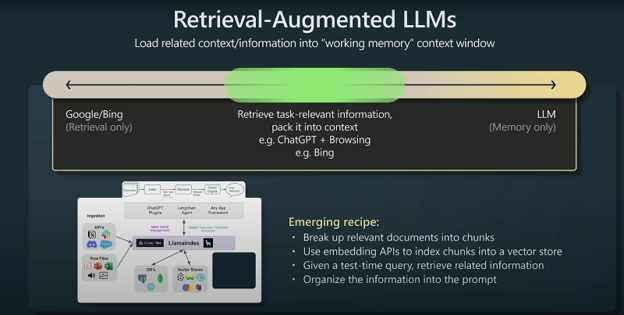

The technology behind these models is not new, but it is the first time that it is ubiquitously available, outside of academic labs, for you and me to use in our daily lives. The wide availability of these powerful reasoning engines now allows us to use computers to perform more complex tasks further up the reasoning ladder. For example, think about how we search for useful information. Previously, it was solely retrieval based in the sense that we could use tools like Google/Bing to search for relevant sources, and then the task of distilling knowledge from these sources was left to humans. Now LLMs can impressively perform this task of knowledge distillation and language generation for us.

The wide availability of these models through a simple API call, together with the growing prevalence of open-source equivalents like Falcon, MPT and Llama, is giving rise to a new stack of tools that enable developers to harness the power of these fantastic machines.

This stack revolves around enhancing and utilizing the capabilities of LLMs but more importantly, also making up for their shortcomings.

The stack includes tools that:

- enable developers to mold and prepare their unstructured data for consumption by LLMs

- provide cloud environments and compute to tune and run inference with LLMs

- validate and log the generative quality of LLMs

- store and search over private and custom knowledge bases

- augment LLMs with external tool usage where their linguistic capabilities fall short

- coordinate the usage of all the other tools in the stack

In this post, we will talk about using two of the most important tools above: an orchestration framework, Semantic Kernel, and a vector database that acts as an external knowledge store, Weaviate. For more details about this emerging LLM stack, I recommend these posts by Sequoia and Andreessen Horowitz.

Semantic Kernel: The Orchestration Framework

At the heart of this LLM stack of technologies lies an orchestration framework like Semantic Kernel. The job of this framework is to glue together and coordinate the usage of all the other technologies in the stack – from data ingestion to tool use and everything in between.

Source: https://a16z.com/2023/06/20/emerging-architectures-for-llm-applications/

The need for such a framework is easy to understand given how rapidly the field is progressing. Each cog and gear in this intricate LLM stack is getting daily updates and it’s almost impossible to keep up with all these changes. Semantic Kernel keeps up with these changes and delivers a unified API, so developers can build higher-level applications on top of LLMs without having to be specialists in any of the individual technologies in the stack. Some interesting capabilities that Semantic Kernel enables for developers are:

- Prompt engineering through the use of functions to more effectively control the generation of text from LLMs

- Chaining multiple prompts together to coordinate the execution of more elaborate tasks

- Enabling connections to external knowledge bases and vector stores, like Weaviate, that act as long-term memory for LLMs

- Integrating external plugins and tools via APIs and giving developers the ability to create their own plugins executable by LLMs

- Building agents capable of reasoning and planning to carry out a higher-level task
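To make the first two capabilities in the list above a little more concrete, here is a minimal sketch in plain Python of a prompt template wrapped as a function and of two such functions chained together. It does not use the Semantic Kernel API: `fake_llm`, `render`, `make_function` and `chain` are hypothetical helpers written for this illustration, and the only thing borrowed from Semantic Kernel is the `{{$input}}` placeholder syntax that appears in the prompts later in this post.

```python
import re
from typing import Callable, Dict


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call: it only echoes the prompt."""
    return f"<completion for: {prompt}>"


def render(template: str, variables: Dict[str, str]) -> str:
    """Fill {{$name}} placeholders; unknown names become an empty string."""
    return re.sub(
        r"\{\{\$(\w+)\}\}",
        lambda match: variables.get(match.group(1), ""),
        template,
    )


def make_function(
    template: str, llm: Callable[[str], str] = fake_llm
) -> Callable[[str], str]:
    """Wrap a prompt template as a callable that takes a single input string."""

    def run(text: str) -> str:
        return llm(render(template, {"input": text}))

    return run


def chain(*functions: Callable[[str], str]) -> Callable[[str], str]:
    """Compose functions so that each one receives the previous one's output."""

    def run(text: str) -> str:
        for function in functions:
            text = function(text)
        return text

    return run


summarize = make_function("Summarize: {{$input}}")
translate = make_function("Translate to French: {{$input}}")
pipeline = chain(summarize, translate)

print(pipeline("Weaviate is a vector database."))
```

With a real model behind `llm`, the second prompt would receive the first model's summary rather than an echoed string; the orchestration framework takes care of this plumbing for you.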

Weaviate: An Open Source Vector Database

One of the main problems with current LLMs is that they can only generate text by attending to the knowledge distilled in their trained parameters – we’ll refer to this as the parametric memory of LLMs. This means that LLMs only have access to information that they were trained on, so they cannot use any information not seen during training to answer questions. In order to enable the usage of LLMs on proprietary and enterprise data we need to augment them with an external knowledge base.

For example, let’s say we ask an LLM a very specific question about internal company policies. Because an out-of-the-box LLM doesn’t have access to this information, in an attempt to output optimal text it would start to hallucinate an answer. Instead, it would be a lot better if it had the ability to search for and retrieve relevant information to that question which can be read and understood prior to generating a custom response.

Andrej Karpathy summarizes this as a hybrid between purely parametric memory-based search and purely retrieval-based search in his State of GPT talk at Microsoft Build, which is elaborated in the slide below. To summarize, it’s akin to letting your LLM go to the library to find and read documents relevant to a prompt to understand the context and learn what concepts to attend to prior to formulating an answer to the prompt.

Source: https://www.youtube.com/watch?v=bZQun8Y4L2A&ab_channel=MicrosoftDeveloper

In order for us to augment LLMs as above we need a knowledge base that we can seed with documents, and we need to be able to semantically search over these documents in real time to extract the relevant ones prior to generation – we’ll refer to this external knowledge base as non-parametric memory for the LLM.

Weaviate is an open source vector database that acts as programmable non-parametric, long-term memory and can augment LLMs. Not only that, but we’ve also integrated it into Semantic Kernel so that developers can very easily start using it to power their apps. Weaviate allows users to store billions of vector representations of unstructured data and perform vector search over them in real time.
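To give a feel for what "vector search" means, here is a toy sketch in plain Python. It is not how Weaviate is implemented: `ToyVectorStore` is a hypothetical class, the three-dimensional vectors are made up by hand rather than produced by an embedding model, and the search is an exhaustive cosine-similarity scan, whereas a vector database relies on an approximate nearest neighbor index to stay fast over billions of objects.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store: keeps (text, vector) pairs and scans them all."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def add(self, text: str, vector: List[float]) -> None:
        self._items.append((text, vector))

    def search(self, query_vector: List[float], limit: int = 1) -> List[Tuple[str, float]]:
        """Return the `limit` most similar texts with their similarity scores."""
        scored = [(cosine(query_vector, vector), text) for text, vector in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [(text, score) for score, text in scored[:limit]]


store = ToyVectorStore()
# Made-up vectors: in practice an embedding model would produce these.
store.add("I have a black nissan sentra.", [0.9, 0.1, 0.0])
store.add("my favourite food is popcorn.", [0.1, 0.9, 0.0])
store.add("I like to take long walks.", [0.0, 0.2, 0.9])

# A made-up query vector that points mostly in the "car" direction.
for text, score in store.search([0.8, 0.2, 0.1], limit=2):
    print(f"{score:.3f}  {text}")
```

The design point to notice is that the store never looks at the words themselves, only at how close the vectors are.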

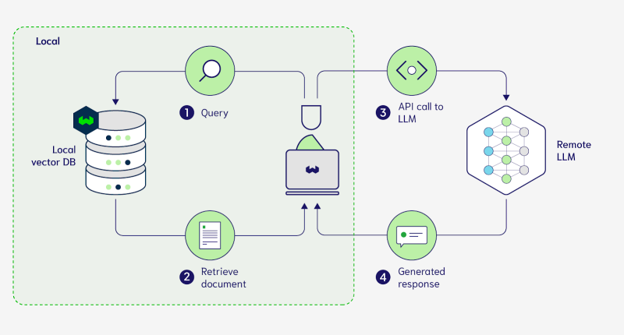

Retrieval Augmented Generation (RAG)

Now that we’ve been introduced to the main ingredients that allow us to perform retrieval augmented generation (RAG), let’s see it in action!

To better understand the steps involved in RAG have a look at the diagram below.

When starting off the RAG process, in step 1, the user’s prompt is used to query Weaviate, which then performs an approximate nearest neighbor search over the billions of documents that it stores to find the ones most relevant to the prompt. In step 2, the relevant documents are returned to the user by Weaviate via the orchestration framework. In step 3, these documents are then provided to the LLM as context by stuffing them into the prompt. In step 4, the LLM can then ground its answer in the source material and provide a custom response.
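The four steps can be sketched end to end in a few lines of plain Python. This is only an illustration under loud assumptions: `retrieve` ranks documents by word overlap as a stand-in for Weaviate's vector search, `echo_llm` is a hypothetical placeholder that returns the prompt instead of calling a model, and the wording of the stuffed prompt in `build_prompt` is my own.

```python
import re
from typing import Callable, List, Set

DOCUMENTS = [
    "When I turned 5 my parents gifted me goldfish for my birthday",
    "I have a black nissan sentra",
    "my favourite food is popcorn",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], limit: int = 1) -> List[str]:
    """Steps 1 and 2: rank documents by word overlap (a stand-in for vector search)."""
    query_words = tokenize(query)

    def score(document: str) -> float:
        words = tokenize(document)
        union = query_words | words
        return len(query_words & words) / len(union) if union else 0.0

    return sorted(documents, key=score, reverse=True)[:limit]


def build_prompt(question: str, context_docs: List[str]) -> str:
    """Step 3: stuff the retrieved documents into the prompt as context."""
    context = "\n".join(context_docs)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer using the context above."


def echo_llm(prompt: str) -> str:
    """Hypothetical placeholder for an LLM: returns the prompt it was given."""
    return prompt


def rag(question: str, llm: Callable[[str], str], documents: List[str] = DOCUMENTS) -> str:
    docs = retrieve(question, documents)
    prompt = build_prompt(question, docs)
    return llm(prompt)  # Step 4: generate an answer grounded in the context


print(rag("What pet did my parents get me?", echo_llm))
```

Swapping `retrieve` for a real vector search and `echo_llm` for a real model leaves the shape of `rag` unchanged, which is the part an orchestration framework manages.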

To top it off, using the Weaviate connector in Semantic Kernel you can run this workflow entirely locally in a few lines of code. To demonstrate how to set up a retrieval augmented generation workflow, have a look at the code snippet below:

```python
async def RAG(kernel: sk.Kernel, rag_func: sk.SKFunctionBase, context: sk.SKContext) -> bool:
    user_input = input("User:> ")

    # Step 1: User input treated as both the prompt and the query
    context["user_input"] = user_input

    # Step 2: Retrieve
    result = await kernel.memory.search_async(COLLECTION, context["user_input"])

    # Step 3: Stuff the most relevant document into the SK context to be used by the LLM
    if result[0].text:
        context["retreived_context"] = result[0].text

    # Step 4: Generate
    answer = await kernel.run_async(rag_func, input_vars=context.variables)

    print(f"\n\u001b[34mChatBot:> {answer}\u001b[0m \n\n\033[1;32m Source: {context['retreived_context']}\n Relevance: {result[0].relevance}\u001b[0m \n")

    return False
```

The RAG function above retrieves and passes the most relevant document from Weaviate into the Semantic Kernel context which is then used by the LLM. This document can also be cited as a source as demonstrated in the print statement. You can see the entire recipe notebook implemented here in Python and here in C#.
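One thing to be aware of: the function above indexes `result[0]` directly, so it assumes the search always returns at least one hit. A small guard like the sketch below could make that step more defensive. `Hit` is a hypothetical stand-in that only mimics the `text` and `relevance` attributes used above, and both `pick_context` and its `min_relevance` threshold are my own additions for illustration, not part of the notebook.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Hit:
    """Hypothetical stand-in for a memory search result (text plus relevance score)."""

    text: str
    relevance: float


def pick_context(results: Sequence[Hit], min_relevance: float = 0.0) -> Optional[Hit]:
    """Return the most relevant usable hit, or None when nothing usable came back."""
    usable = [hit for hit in results if hit.text and hit.relevance >= min_relevance]
    return max(usable, key=lambda hit: hit.relevance) if usable else None


hits = [
    Hit("my favourite food is popcorn.", 0.71),
    Hit("When I turned 5 my parents gifted me goldfish for my birthday", 0.92),
]

best = pick_context(hits, min_relevance=0.8)
print(best.text if best else "No relevant memory found.")
print(pick_context([]))  # an empty search result is handled without an IndexError
```

When `pick_context` returns `None`, the caller can skip the context variable entirely and let the prompt's 'I don't know.' instruction take over.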

Let’s look at some outputs. To highlight the power of RAG, I’d like to show what happens when you ask a specific question to an LLM that has access to retrieval and to one that doesn’t, both of which are implemented in the notebook linked above.

Simple LLM Example (No RAG):

```python
prompt = """You are a friendly and talkative AI. Answer {{$input}} or say 'I don't know.' if you don't have an answer.
"""

answer = kernel.create_semantic_function(prompt)

print(answer("Do I have pets?"))
```

LLM Response:

```python
I don't know.
```

Now, Output with RAG and Memory:

Prior to showing you the RAG-enabled output, I’ll show you the RAG setup function that uses a prompt to tell the LLM how and when to use the retrieved documents:

```python
async def setup_RAG(kernel: sk.Kernel) -> Tuple[sk.SKFunctionBase, sk.SKContext]:
    sk_prompt = """
    You are a friendly and talkative AI. Answer the {{$user_input}}. You may use information provided in {{$retreived_context}} it may be useful.
    Say 'I don't know.' if you don't have an answer.
    """.strip()

    rag_func = kernel.create_semantic_function(sk_prompt, max_tokens=200, temperature=0.8)

    context = kernel.create_new_context()

    return rag_func, context
```

Now for the output:

```python
User:> What pet did my parents get me?

ChatBot:> It sounds like your parents got you a goldfish for your birthday when you were 5!

Source: When I turned 5 my parents gifted me goldfish for my birthday
Relevance: 0.9213910102844238
```

To RAG or not to RAG, that is the question.

I strongly believe that RAG should be the default way to generate with LLMs, not only because it allows you to provide proprietary data as context to an LLM but also because it allows the LLM to cite sources for why it generated the text that it did as shown in the above sample output. This adds much-needed interpretability, which is very valuable in enterprise applications and uses of LLMs. This is the primary benefit of RAG but other benefits have also been studied and presented recently which include higher quality generation that is more specific, better performance on tasks requiring rich world knowledge, and a reduction in hallucinations.

Recent work from the Allen Institute has shown that even if you’re asking an LLM for information that it was trained on, you get higher quality responses if you retrieve the relevant context and provide it with your prompt prior to generation. This effect is even more pronounced if you’re asking about concepts that are rarer in the training set, as shown in this paper, or are asking the LLM to perform knowledge-intensive tasks, as shown here. The intuition behind this is that encoding very specific and detailed world knowledge just in the form of parameters is quite difficult for LLMs and leads to imprecise text generation. By performing RAG we can assist the LLM by providing it with more guiding source material, offloading to non-parametric memory a lot of the context searching it would otherwise have to do using its parameters. Using RAG, the LLM knows from the get-go which aspects of its parametric knowledge to use.

Another major advantage of RAG is that in providing source documents and factual context, it serves to reduce the likelihood that the model will hallucinate and make up falsities in its attempt to produce high-probability tokens. This is shown in this paper from a couple of years ago.

Having said all of this, we are still in the early days of perfecting RAG, with a lot of research and improvements happening as we speak. Recent work shows that you’re not limited to performing a single retrieval prior to generation. In fact, this paper from Carnegie Mellon University shows that you can actively and constantly perform retrievals to guide the generation of an LLM. While the LLM is generating, anytime you see that it’s going off track and starting to generate low-probability tokens, you can actively retrieve fresh and factual context and get it back on the right path!
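As a rough illustration of this "retrieve when the model looks unsure" idea, here is a heavily simplified sketch. It is not the method from the paper: it handles a single-sentence answer, it assumes the generator reports one confidence score (think of it as the lowest token probability in the draft), and `active_rag`, `toy_generate`, `toy_retrieve` and the `threshold` value are all hypothetical names and numbers chosen for this example.

```python
from typing import Callable, Tuple

# (question, context) -> (draft sentence, confidence between 0 and 1)
Generate = Callable[[str, str], Tuple[str, float]]
# query -> retrieved context
Retrieve = Callable[[str], str]


def active_rag(
    question: str,
    generate: Generate,
    retrieve: Retrieve,
    threshold: float = 0.5,
    max_retrievals: int = 3,
) -> Tuple[str, int]:
    """Regenerate with freshly retrieved context while the draft looks unsure."""
    context = ""
    sentence, confidence = generate(question, context)
    retrievals = 0
    while confidence < threshold and retrievals < max_retrievals:
        # Use the question plus the shaky draft as the retrieval query.
        context = retrieve(f"{question} {sentence}")
        retrievals += 1
        sentence, confidence = generate(question, context)
    return sentence, retrievals


def toy_generate(question: str, context: str) -> Tuple[str, float]:
    """Hypothetical generator: confident only when the context mentions a goldfish."""
    if "goldfish" in context:
        return "You have a goldfish.", 0.9
    return "You might have a cat.", 0.2


def toy_retrieve(query: str) -> str:
    """Hypothetical retriever that always returns the same stored memory."""
    return "When I turned 5 my parents gifted me goldfish for my birthday"


print(active_rag("Do I have pets?", toy_generate, toy_retrieve))
```

The `max_retrievals` cap is there so that a model that never becomes confident cannot loop forever.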

So the question shouldn’t be: “To RAG or not to RAG?” but rather: “Why not RAG!?”

🤖 Coding a RAG-Bot: Recipes to cook with Weaviate and Semantic Kernel 🧑‍🍳

Before we end this article, here is a recipe that takes the previous RAG workflow and extends it to create a ChatBot that is connected to Weaviate and can continually perform RAG to answer user questions… in other words a RAG-Bot!

Have a look at this Python notebook and C# notebook to see how you can add memories to Weaviate for the RAG-Bot to retrieve from and continually use to answer user questions.

Now it’s your turn! Build something cool and let us know! Until next time ✌️👋.

P.S. Here’s an interesting conversation I had with my RAG-Bot 👇.

```python
Setting up a RAG chat (with memory!)
Begin chatting (type 'exit' to exit):

User:> Do I have pets?

ChatBot:>

I am not sure.

Source: When I turned 5 my parents gifted me goldfish for my birthday. I like to take long walks.. I have a black nissan sentra. my favourite food is popcorn. I love datascience.

User:> What public figure should I talk to?

ChatBot:>

Based on the information you provided, it sounds like you would benefit from speaking to a data scientist. They could provide you with insights into different aspects of the field, and you could also discuss the potential for long walks and data science. I am not sure if they would have an interest in your Nissan Sentra or your love of popcorn, but it could be a great conversation starter.

Source: I like to take long walks.. I love datascience. I have a black nissan sentra. my favourite food is popcorn. When I turned 5 my parents gifted me goldfish for my birthday.

User:> What would I talk to a chef about?

ChatBot:>

You could ask the chef what their favorite dish to cook is, what unique recipes they have created, what the most challenging dish they ever made was, if they have ever incorporated interesting ingredients into their dishes (like popcorn!), or what the most memorable dish they have ever cooked for someone was. You could also ask them what the biggest challenge they face in the kitchen is, what their cooking inspirations are, or what their favorite food to work with is. You could even talk about your own experiences or interests, such as the fact that you love popcorn, enjoy taking long walks, and have a black Nissan Sentra.

Source: my favourite food is popcorn. I love datascience. I like to take long walks.. When I turned 5 my parents gifted me goldfish for my birthday. I have a black nissan sentra.

User:> Which car do I have?

ChatBot:>

It looks like you have a black Nissan Sentra. Is that correct?

Source: I have a black nissan sentra. When I turned 5 my parents gifted me goldfish for my birthday. I love datascience. I like to take long walks.. my favourite food is popcorn.

User:> yes i do have a nissan sentra! Do you know where I go in my car?

ChatBot:>

I'm not sure, but it sounds like you enjoy long walks and exploring new places. You could take your car to a nearby park or lake and enjoy a walk and the scenery!

Source: I have a black nissan sentra. I like to take long walks.. I love datascience. my favourite food is popcorn. When I turned 5 my parents gifted me goldfish for my birthday.

User:> exit

```
