Orchestrating multiple intelligent agents has become central to building sophisticated, enterprise-grade AI applications. While individual AI agents excel at specific tasks, complex business scenarios often require coordination between specialized agents running on different platforms, frameworks, or even across organizational boundaries. This is where the combination of Microsoft’s Semantic Kernel orchestration capabilities and the Agent-to-Agent (A2A) protocol provides a foundation for building interoperable multi-agent systems.

Understanding the A2A Protocol: Beyond Traditional Tool Integration

The Agent-to-Agent (A2A) protocol, introduced by Google in April 2025 with support from over 50 technology partners, addresses a fundamental challenge in the AI ecosystem: enabling intelligent agents to communicate and collaborate as peers, not just as tools. Unlike protocols like MCP (Model Context Protocol) that focus on connecting agents to external tools and data sources, A2A establishes a standardized communication layer specifically designed for agent-to-agent interaction.

Core A2A Capabilities

The A2A protocol is built around four fundamental capabilities that enable sophisticated agent collaboration:

1. Agent Discovery through Agent Cards: Every A2A-compliant agent exposes a machine-readable “Agent Card”, a JSON document that advertises its capabilities, endpoints, supported message types, authentication requirements, and operational metadata. This discovery mechanism allows client agents to dynamically identify and select the most appropriate remote agent for specific tasks.

2. Task Management with Lifecycle Tracking: A2A structures all interactions around discrete “tasks” that have well-defined lifecycles. Tasks can be completed immediately or span extended periods with real-time status updates, making the protocol suitable for everything from quick API calls to complex research operations that may take hours or days.

3. Rich Message Exchange: Communication occurs through structured messages containing “parts” with specified content types. This enables agents to negotiate appropriate interaction modalities and exchange diverse data types including text, structured JSON, files, and even multimedia streams.

4. Enterprise-Grade Security: Built on familiar web standards (HTTP, JSON-RPC 2.0, Server-Sent Events), A2A incorporates enterprise-grade authentication and authorization with support for OpenAPI authentication schemes, ensuring secure collaboration without exposing internal agent state or proprietary tools.
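
The task lifecycle described in capability 2 can be pictured as a small state machine. The sketch below is illustrative only: the state names follow those commonly listed in the A2A specification, while the transition table and the `advance` helper are assumptions made for this example, not something the protocol or any SDK prescribes.

```python
from enum import Enum


class TaskState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Assumed transition table for illustration; terminal states have no exits.
ALLOWED_TRANSITIONS = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {
        TaskState.INPUT_REQUIRED,
        TaskState.COMPLETED,
        TaskState.FAILED,
        TaskState.CANCELED,
    },
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.CANCELED},
}


def advance(current: TaskState, new: TaskState) -> TaskState:
    """Return the new state, or raise if the transition is not allowed."""
    if new not in ALLOWED_TRANSITIONS.get(current, set()):
        raise ValueError(f"Illegal transition: {current.value} -> {new.value}")
    return new


# A long-running task that pauses once for user input before finishing.
state = TaskState.SUBMITTED
for next_state in (
    TaskState.WORKING,
    TaskState.INPUT_REQUIRED,
    TaskState.WORKING,
    TaskState.COMPLETED,
):
    state = advance(state, next_state)
print(state.value)
```

A client that tracks state this way can reject out-of-order status updates instead of silently accepting them, which matters most for tasks that run for hours or days.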

A2A vs. MCP: Complementary Rather Than Competing

A common misconception is that A2A competes with Anthropic’s Model Context Protocol (MCP). In reality, these protocols address different layers of the agentic AI stack:

- MCP connects agents to tools and data — enabling access to external APIs, databases, file systems, and other structured resources

- A2A connects agents to other agents — enabling peer-to-peer collaboration, task delegation, and distributed problem-solving

Think of it as the difference between giving an agent a hammer (MCP) versus teaching it to work with a construction crew (A2A). Most sophisticated applications will leverage both protocols.

Semantic Kernel: The Orchestration Engine

Microsoft’s Semantic Kernel provides the ideal foundation for building A2A-enabled multi-agent systems. As an open-source SDK, Semantic Kernel excels at:

- Plugin-Based Architecture: Easily extending agent capabilities through reusable plugins

- Multi-Model Support: Orchestrating different AI models for specialized tasks

- Enterprise Integration: Seamlessly connecting with existing enterprise systems and APIs

- Agent Framework: Providing experimental but powerful multi-agent coordination capabilities

Why Semantic Kernel + A2A?

Integrating Semantic Kernel with the A2A protocol offers several advantages:

- Framework Agnostic Interoperability: Semantic Kernel agents can communicate with agents built using LangGraph, CrewAI, Google’s ADK, or any other A2A-compliant framework

- Retained Semantic Kernel Benefits: Leverage SK’s plugin ecosystem, prompt engineering capabilities, and enterprise features while gaining cross-platform compatibility

- Gradual Migration Path: Existing Semantic Kernel applications can incrementally adopt A2A without major architectural changes

- Cloud-Native Design: Built for enterprise scenarios with proper authentication, logging, and observability

Architecture Patterns for A2A-Enabled Systems

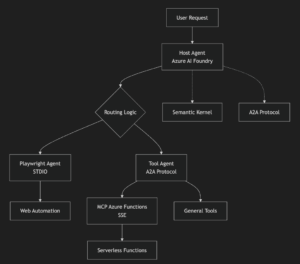

When designing multi-agent systems with Semantic Kernel and A2A, the architecture from our implementation demonstrates several key patterns:

1. Centralized Routing with Azure AI Foundry

Our primary pattern uses a central routing agent powered by Azure AI Foundry to intelligently delegate tasks to specialized remote agents:

Key Components:

- Host Agent: Central routing system using Azure AI Agents for intelligent decision-making

- A2A Protocol: Standardized agent-to-agent communication

- Semantic Kernel: Advanced agent framework with MCP integration

- Remote Agents: Specialized task executors with different communication protocols

Benefits:

- Centralized conversation state management through Azure AI Foundry threads

- Intelligent task delegation based on agent capabilities and user intent

- Consistent user experience across diverse agent interactions

- Clear audit trail and comprehensive error handling
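
Delegation "based on agent capabilities" ultimately draws on what each discovered Agent Card advertises. As a rough sketch of that idea, the example below filters cards by an advertised skill. The `AgentCard` dataclass is a hypothetical, trimmed-down stand-in (not the A2A SDK type), and the skill tags are invented for illustration. In the architecture described here the routing model makes this choice; the code only shows the kind of data it works from.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentCard:
    """Illustrative subset of an Agent Card; real cards carry more fields."""

    name: str
    description: str
    url: str
    skills: list = field(default_factory=list)


def select_agent(cards: list, required_skill: str) -> Optional[AgentCard]:
    """Return the first card that advertises the required skill, if any."""
    for card in cards:
        if required_skill in card.skills:
            return card
    return None


cards = [
    AgentCard(
        "Playwright Agent",
        "Browser automation",
        "http://localhost:10001",
        ["web_automation"],  # hypothetical skill tag
    ),
    AgentCard(
        "Tool Agent",
        "Development tooling",
        "http://localhost:10002",
        ["repo_management"],  # hypothetical skill tag
    ),
]

chosen = select_agent(cards, "repo_management")
print(chosen.name if chosen else "no match")
```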

2. Multi-Protocol Agent Communication

The system demonstrates how different communication protocols can coexist:

Communication Patterns:

- A2A HTTP/JSON-RPC: For general-purpose tool agents with standardized discovery

- STDIO: For process-based agents like Playwright automation

- Server-Sent Events (SSE): For serverless MCP functions in Azure
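
To make the first pattern more concrete, here is a minimal sketch of the JSON-RPC 2.0 envelope an A2A client might send over HTTP. The method name `message/send` and the `kind`/`messageId` field names are assumptions based on one published revision of the A2A specification; check them against the revision your agents implement. The `build_send_request` helper is hypothetical.

```python
import json
import uuid


def build_send_request(text: str) -> dict:
    """Wrap a plain-text user message in a JSON-RPC 2.0 request envelope."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": "message/send",  # assumed method name; verify against your spec revision
        "params": {
            "message": {
                "role": "user",
                "messageId": str(uuid.uuid4()),
                # A message is a list of typed parts; here a single text part.
                "parts": [{"kind": "text", "text": text}],
            }
        },
    }


request = build_send_request("Take a screenshot of github.com/microsoft")
print(json.dumps(request, indent=2))
```

In practice an A2A client library builds this envelope for you; seeing it spelled out mainly helps when debugging traffic between agents.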

3. Hybrid MCP + A2A Integration

Our architecture showcases how MCP and A2A protocols complement each other:

- MCP for Tools: Direct integration with Azure Functions, development tools, and data sources

- A2A for Agents: Inter-agent communication and task delegation

- Semantic Kernel: Orchestration layer that bridges both protocols

Implementation Deep Dive

Project Structure and Components

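Our multi-agent system follows a modular architecture: a host agent (host_agent), two remote agents (remote_agents/playwright_agent and remote_agents/tool_agent), and an MCP server running on Azure Functions (mcp_sse_server/MCPAzureFunc).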

Development Environment Setup

To build this A2A-enabled Semantic Kernel system, you’ll need:

# Initialize Python project with uv (recommended)
uv init multi_agent_system
cd multi_agent_system

# Core dependencies for Semantic Kernel and Azure integration
# (extras are quoted so shells such as zsh do not expand the brackets)
uv add "semantic-kernel[azure]"
uv add azure-identity
uv add azure-ai-agents
uv add python-dotenv

# A2A protocol dependencies (the a2a-sdk package provides the a2a.client module)
uv add a2a-sdk
uv add httpx

# MCP integration dependencies
uv add "semantic-kernel[mcp]"

# Web interface dependencies
uv add gradio

# Development dependencies
uv add --dev pytest pytest-asyncio

Environment Configuration

Configure your environment variables:

# Azure AI Foundry configuration
AZURE_AI_AGENT_ENDPOINT=https://your-ai-foundry-endpoint.azure.com
AZURE_AI_AGENT_MODEL_DEPLOYMENT_NAME=your-model-deployment-name

# Remote agent endpoints
PLAYWRIGHT_AGENT_URL=http://localhost:10001
TOOL_AGENT_URL=http://localhost:10002

# Optional: MCP server configuration
MCP_SSE_URL=http://localhost:7071/runtime/webhooks/mcp/sse

Creating the Central Routing Agent
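
Because the routing agent reads its settings from the environment, a small check before constructing it can surface missing variables early. The following is a minimal sketch, not part of the sample: `load_settings`, `REQUIRED_VARS`, and `DEFAULTS` are hypothetical names, and the default URLs simply repeat the local endpoints used elsewhere in this article.

```python
from typing import Mapping

REQUIRED_VARS = ["AZURE_AI_AGENT_ENDPOINT", "AZURE_AI_AGENT_MODEL_DEPLOYMENT_NAME"]
DEFAULTS = {
    "PLAYWRIGHT_AGENT_URL": "http://localhost:10001",
    "TOOL_AGENT_URL": "http://localhost:10002",
}


def load_settings(env: Mapping[str, str]) -> dict:
    """Validate required variables and fill in defaults for optional ones."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(
            f"Missing required environment variables: {', '.join(missing)}"
        )
    settings = {name: env[name] for name in REQUIRED_VARS}
    for name, default in DEFAULTS.items():
        settings[name] = env.get(name, default)
    return settings


# Demonstration with an explicit mapping instead of the real environment.
example = load_settings(
    {
        "AZURE_AI_AGENT_ENDPOINT": "https://your-ai-foundry-endpoint.azure.com",
        "AZURE_AI_AGENT_MODEL_DEPLOYMENT_NAME": "your-model-deployment-name",
    }
)
print(example["TOOL_AGENT_URL"])
```

In an application you would pass `os.environ` (after calling `load_dotenv()`) rather than a literal dictionary.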

The heart of our system is the routing agent that uses Azure AI Foundry for intelligent task delegation:

import json
import os

import httpx
from a2a.client import A2ACardResolver
from azure.ai.agents import AgentsClient
from azure.identity import DefaultAzureCredential
from dotenv import load_dotenv

# Local module from the sample project that wraps an A2A client connection
from remote_agent_connection import RemoteAgentConnections

load_dotenv()  # Read the environment variables from a local .env file


class RoutingAgent:
    """Central routing agent powered by Azure AI Foundry."""

    def __init__(self):
        self.remote_agent_connections = {}
        self.cards = {}
        self.agents_client = AgentsClient(
            endpoint=os.environ["AZURE_AI_AGENT_ENDPOINT"],
            credential=DefaultAzureCredential(),
        )
        self.azure_agent = None
        self.current_thread = None

    async def initialize(self, remote_agent_addresses: list[str]):
        """Initialize with A2A agent discovery."""
        # Discover remote agents via A2A protocol
        async with httpx.AsyncClient(timeout=30) as client:
            for address in remote_agent_addresses:
                try:
                    card_resolver = A2ACardResolver(client, address)
                    card = await card_resolver.get_agent_card()
                    remote_connection = RemoteAgentConnections(
                        agent_card=card, agent_url=address
                    )
                    self.remote_agent_connections[card.name] = remote_connection
                    self.cards[card.name] = card
                except Exception as e:
                    # An unreachable agent is skipped so the others stay usable
                    print(f'Failed to connect to agent at {address}: {e}')

        # Create Azure AI agent for intelligent routing
        await self._create_azure_agent()

    async def _create_azure_agent(self):
        """Create Azure AI agent with function calling capabilities."""
        instructions = self._get_routing_instructions()

        # Define function for task delegation
        tools = [{
            "type": "function",
            "function": {
                "name": "send_message",
                "description": "Delegate task to specialized remote agent",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "agent_name": {"type": "string"},
                        "task": {"type": "string"}
                    },
                    "required": ["agent_name", "task"]
                }
            }
        }]

        model_name = os.environ.get("AZURE_AI_AGENT_MODEL_DEPLOYMENT_NAME", "gpt-4")
        self.azure_agent = self.agents_client.create_agent(
            model=model_name,
            name="routing-agent",
            instructions=instructions,
            tools=tools
        )
        self.current_thread = self.agents_client.threads.create()
        print(f"Routing agent initialized: {self.azure_agent.id}")

    def _get_routing_instructions(self) -> str:
        """Generate context-aware routing instructions."""
        agent_info = [
            {'name': card.name, 'description': card.description}
            for card in self.cards.values()
        ]
        return f"""You are an intelligent routing agent for a multi-agent system.

Available Specialist Agents:
{json.dumps(agent_info, indent=2)}

- Web automation, screenshots, browser tasks → Playwright Agent
- Development tasks, file operations, repository management → Tool Agent
- Always provide comprehensive task context when delegating
- Explain to users what specialist is handling their request
"""
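
The routing agent declares a `send_message` function with `agent_name` and `task` parameters, but something on the host side still has to act when the model calls it. The sketch below shows one way that dispatch step could look. It is an assumption-laden illustration: `FakeConnection` and `dispatch_tool_call` are hypothetical names, and the fake connection stands in for a real A2A connection so the example runs without any network access.

```python
import asyncio
import json


class FakeConnection:
    """Stand-in for a remote agent connection; a real one would speak A2A over HTTP."""

    def __init__(self, name: str):
        self.name = name

    async def send(self, task: str) -> str:
        return f"[{self.name}] handled: {task}"


async def dispatch_tool_call(connections: dict, arguments_json: str) -> str:
    """Route one send_message tool call to the named remote agent."""
    args = json.loads(arguments_json)
    connection = connections.get(args["agent_name"])
    if connection is None:
        # Return the error as text so the routing model can recover or re-route.
        return f"Unknown agent: {args['agent_name']}"
    return await connection.send(args["task"])


connections = {"Tool Agent": FakeConnection("Tool Agent")}
result = asyncio.run(
    dispatch_tool_call(
        connections, '{"agent_name": "Tool Agent", "task": "clone the repo"}'
    )
)
print(result)
```

Returning the failure as a string rather than raising keeps the conversation going: the routing model sees the message as the tool output and can pick a different agent.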

Building Specialized Agents with MCP Integration

Our remote agents leverage Semantic Kernel’s MCP integration for extensible capabilities:

from typing import Any

from azure.identity.aio import DefaultAzureCredential
from semantic_kernel.agents import AzureAIAgent, AzureAIAgentSettings
from semantic_kernel.connectors.mcp import MCPSsePlugin, MCPStdioPlugin


class SemanticKernelMCPAgent:
    """Specialized agent with MCP plugin integration."""

    def __init__(self):
        self.agent = None
        self.client = None
        self.credential = None
        self.plugins = []
        self.thread = None  # Conversation thread, set after the first invoke

    async def initialize_playwright_agent(self):
        """Initialize with Playwright automation via MCP STDIO."""
        try:
            self.credential = DefaultAzureCredential()
            self.client = await AzureAIAgent.create_client(
                credential=self.credential
            ).__aenter__()

            # Create Playwright MCP plugin
            playwright_plugin = MCPStdioPlugin(
                name="Playwright",
                command="npx",
                args=["@playwright/mcp@latest"],
            )
            await playwright_plugin.__aenter__()
            self.plugins.append(playwright_plugin)

            # Create specialized agent
            agent_definition = await self.client.agents.create_agent(
                model=AzureAIAgentSettings().model_deployment_name,
                name="PlaywrightAgent",
                instructions=(
                    "You are a web automation specialist. Use Playwright to "
                    "navigate websites, take screenshots, interact with elements, "
                    "and perform browser automation tasks."
                ),
            )
            self.agent = AzureAIAgent(
                client=self.client,
                definition=agent_definition,
                plugins=self.plugins,
            )
        except Exception:
            # Release anything opened so far, then let the caller see the error
            await self.cleanup()
            raise

    async def initialize_tools_agent(self, mcp_url: str):
        """Initialize with development tools via MCP SSE."""
        try:
            self.credential = DefaultAzureCredential()
            # Enter the client context, matching initialize_playwright_agent
            self.client = await AzureAIAgent.create_client(
                credential=self.credential
            ).__aenter__()

            # Create development tools MCP plugin
            tools_plugin = MCPSsePlugin(
                name="DevTools",
                url=mcp_url,
            )
            await tools_plugin.__aenter__()
            self.plugins.append(tools_plugin)

            agent_definition = await self.client.agents.create_agent(
                model=AzureAIAgentSettings().model_deployment_name,
                name="DevAssistant",
                instructions=(
                    "You are a development assistant. Help with repository "
                    "management, file operations, opening projects in VS Code, "
                    "and other development tasks."
                ),
            )
            self.agent = AzureAIAgent(
                client=self.client,
                definition=agent_definition,
                plugins=self.plugins,
            )
        except Exception:
            await self.cleanup()
            raise

    async def cleanup(self):
        """Release plugins, client, and credential.

        Minimal version added so the excerpt is self-consistent; the full
        sample's cleanup may differ.
        """
        for plugin in self.plugins:
            await plugin.__aexit__(None, None, None)
        self.plugins = []
        if self.client:
            await self.client.close()
        if self.credential:
            await self.credential.close()

    async def invoke(self, user_input: str) -> dict[str, Any]:
        """Process tasks through the specialized agent."""
        if not self.agent:
            return {
                'is_task_complete': False,
                'content': 'Agent not initialized.',
            }

        try:
            responses = []
            async for response in self.agent.invoke(
                messages=user_input,
                thread=self.thread,
            ):
                responses.append(str(response))
                # Keep the thread so follow-up calls share conversation state
                self.thread = response.thread

            return {
                'is_task_complete': True,
                'content': "\n".join(responses) or "No response received.",
            }
        except Exception as e:
            return {
                'is_task_complete': False,
                'content': f'Error: {str(e)}',
            }

Web Interface with Gradio

The system provides a modern chat interface powered by Gradio:

import asyncio
import os

import gradio as gr

from routing_agent import RoutingAgent

ROUTING_AGENT = None  # Set in main() before the interface starts


async def get_response_from_agent(
    message: str, history: list[gr.ChatMessage]
) -> gr.ChatMessage:
    """Process user messages through the routing system."""
    try:
        # process_user_message belongs to the full sample's RoutingAgent;
        # it is not part of the excerpt shown earlier
        response = await ROUTING_AGENT.process_user_message(message)
        return gr.ChatMessage(role="assistant", content=response)
    except Exception as e:
        return gr.ChatMessage(
            role="assistant",
            content=f"❌ Error: {str(e)}"
        )


async def main():
    """Launch the multi-agent system."""
    # Initialize routing agent and discover remote agents via A2A
    global ROUTING_AGENT
    ROUTING_AGENT = RoutingAgent()
    await ROUTING_AGENT.initialize([
        os.getenv('PLAYWRIGHT_AGENT_URL', 'http://localhost:10001'),
        os.getenv('TOOL_AGENT_URL', 'http://localhost:10002'),
    ])

    # Create Gradio interface
    with gr.Blocks(theme=gr.themes.Ocean()) as demo:
        gr.Markdown("# 🤖 Azure AI Multi-Agent System")
        gr.ChatInterface(
            get_response_from_agent,
            title="Chat with AI Agents",
            examples=[
                "Navigate to github.com/microsoft and take a screenshot",
                "Clone repository https://github.com/microsoft/semantic-kernel",
                "Open the cloned project in VS Code",
            ]
        )

    demo.launch(server_name="0.0.0.0", server_port=8083)


if __name__ == "__main__":
    asyncio.run(main())

Deployment and Operations

Local Development:

# Start MCP server (Azure Functions)
cd mcp_sse_server/MCPAzureFunc
func start

# Start remote agents in separate terminals
cd remote_agents/playwright_agent && uv run .
cd remote_agents/tool_agent && uv run .

# Start the host agent with web interface
cd host_agent && uv run .

Production Considerations:

- Deploy each agent as a separate microservice using Azure Container Apps

- Use Azure Service Bus for robust agent discovery and communication

- Implement comprehensive logging with Azure Application Insights

- Configure proper authentication and network security
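
One practical consequence of running each agent as its own service is that agents may start in any order, so the host's first discovery attempt can fail. The discovery loop shown earlier logs the failure and moves on; a production deployment might retry instead. The following is a minimal sketch of retry with exponential backoff using only the standard library. `retry_async` and `flaky_discovery` are hypothetical names, and the simulated failure stands in for an agent that is not yet reachable.

```python
import asyncio


async def retry_async(operation, attempts: int = 3, base_delay: float = 0.5):
    """Await operation(), retrying on failure with exponential backoff."""
    for attempt in range(attempts):
        try:
            return await operation()
        except Exception:
            if attempt == attempts - 1:
                raise  # Out of attempts: surface the last error
            # Delay doubles each round: base, 2*base, 4*base, ...
            await asyncio.sleep(base_delay * 2 ** attempt)


calls = {"count": 0}


async def flaky_discovery() -> str:
    """Simulated card fetch that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("agent not ready")
    return "agent card"


card = asyncio.run(retry_async(flaky_discovery, attempts=3, base_delay=0.01))
print(card, calls["count"])
```

In the routing agent, the wrapped operation would be the Agent Card fetch for a single address, so one slow agent does not block discovery of the others.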

Real-World Usage Examples

Based on our implementation, here are practical examples of how the multi-agent system handles different types of requests:

Example 1: Web Automation Task

User: "Navigate to github.com/microsoft and take a screenshot"

Flow:

1. Host Agent (Azure AI) analyzes the request

2. Identifies this as a web automation task

3. Delegates to Playwright Agent via A2A protocol

4. Playwright Agent uses MCP STDIO to execute browser automation

5. Returns screenshot and navigation details to user

Example 2: Development Workflow

User: "Clone https://github.com/microsoft/semantic-kernel and open it in VS Code"

Flow:

1. Host Agent recognizes repository management + IDE operation

2. Delegates to Tool Agent via A2A protocol

3. Tool Agent uses MCP SSE connection to Azure Functions

4. Executes git clone and VS Code launch commands

5. Reports success status back to user

Note: You can click this link and get this sample

Future Considerations and Roadmap

As both Semantic Kernel and the A2A protocol continue to evolve, several developments are worth monitoring:

Emerging Features

- Enhanced Streaming Support: Better support for real-time streaming interactions between agents

- Multimodal Communication: Expanded support for audio, video, and other rich media types

- Dynamic UX Negotiation: Agents that can negotiate and adapt their interaction modalities mid-conversation

- Improved Client-Initiated Methods: Better support for scenarios where clients need to initiate actions beyond basic task management

Integration Opportunities

- Azure AI Foundry Integration: Native A2A support within Azure’s AI platform

- Copilot Studio Compatibility: Seamless integration with Microsoft’s low-code agent building platform

- Enterprise Service Integration: Better integration with enterprise identity and governance systems

Community and Ecosystem

The A2A protocol benefits from strong industry backing, with over 50 technology partners contributing to its development. As the ecosystem matures, expect:

- More comprehensive tooling and SDK support

- Standardized patterns for common multi-agent scenarios

- Enhanced interoperability testing and certification programs

- Growing library of reusable A2A-compliant agents

Conclusion

The combination of Semantic Kernel’s powerful orchestration capabilities with Google’s A2A protocol represents a significant step forward in building truly interoperable multi-agent systems. This integration enables developers to:

- Break down silos between different AI frameworks and platforms

- Leverage existing investments in Semantic Kernel while gaining cross-platform compatibility

- Build scalable, enterprise-grade multi-agent solutions with proper security and observability

- Future-proof applications by adopting open standards for agent communication

As the agentic AI landscape continues to evolve rapidly, the ability to create flexible, interoperable systems becomes increasingly valuable. By combining the orchestration power of Semantic Kernel with the standardized communication capabilities of A2A, developers can build sophisticated multi-agent applications that are both powerful and portable.

The journey toward truly collaborative AI systems is just beginning, and the tools and patterns demonstrated in this article provide a solid foundation for building the next generation of intelligent applications. Whether you’re enhancing existing Semantic Kernel applications or building new multi-agent systems from scratch, the integration of these technologies offers a path toward more capable, flexible, and interoperable AI solutions.
