Bring your AI Copilots to the edge with Phi-3 and Semantic Kernel

Today we’re featuring a guest author, Arafat Tehsin, who’s a Microsoft Most Valuable Professional (MVP) for AI. He’s written an article we’re sharing below, focused on how to Bring your AI Copilots to the edge with Phi-3 and Semantic Kernel. We’ll turn it over to Arafat to share more!

It’s true that not every challenge can be distilled into a straightforward solution. However, as someone who has always believed in the power of simplicity, I think a deeper understanding of the problem often paves the way for more elegant and effective solutions. In an era where data sovereignty and privacy are paramount, every client is concerned about their private information being processed in the cloud. I decided to explore the avenues of 100% local Retrieval-Augmented Generation (RAG) with Microsoft’s Phi-3 and my favorite AI-first framework, Semantic Kernel.

Last Sunday, when I boarded a flight with my colleagues for our EY Microsoft APAC Summit, I thought I’d finish some of the pending tasks on the 8.5 hour long flight. Unfortunately, this did not happen as our flight came with no in-flight WiFi. This gave me an idea of launching Visual Studio, LM Studio and rest of the dev tools that would work without any the internet. Then, I decided to build something that was on my list for a long time. Something that may give you a new direction or an idea to help your customers to meet their productivity needs through all local / on-prem RAG powered AI agent.

In my blog posts, I try to address those pain points which are not commonly covered by others (otherwise, I feel I am just adding a redundancy). For now, I have seen a lot of folks working with local models ranging from Phi-2 to Phi-3 to Llama 3 and so on. However, those solutions are not either covering the support for the most robust enterprise framework, .NET or they were not descriptive enough for newbie AI developers. Therefore, I decided to take this challenge and thought to address it in a few upcoming posts.

RAG with on-device Phi-3 model

In this post, we’re going to build a basic Console App to showcase the capability as how you can build a local Copilot solution using Phi-3 and Semantic Kernel without complementing any online service such as Azure OpenAI or OpenAI and the likes. We will then see how you can add RAG capabilities to it by just using a temporary memory and textual content. In our next post, we will cover a complete RAG solution that’d cover the documents like Word, PDF, Markdown, JSON etc. with a constructive memory store. Below is an outcome of what you will achieve with this post. Keep reading on how to achieve this smoothly. 🏎️

Background

Before we move to the prerequisites, let me share how I got here and my motivation going forward. If you’ve been keeping up with the latest AI-first frameworks like LangChain, Semantic Kernel and Small Language Models (SLM) like Phi-3, MobileBERT, or T5-Small, you’ll know that developers and businesses are creating fascinating use-cases and techniques in the Generative AI spectrum. One of the very common ways is either to use Ollama or LMStudio and host your model on a localhost and communicate locally with your apps / library and so on. However, as per my previous work with ML.NET, I’ve always loved the file based access to machine learning models. Few weeks ago, when I saw Phi-3 is available with ONNX format, I thought I should build a simple RAG solution with that to avoid any localhost connectivity.

After hours of research, I have figured out that the only person who has done this work with .NET is Bruno Capuano (massive thanks to you, Bruno) and he has written a great blog post about it which also includes Phi-3 Cookbook samples for your learning. I figured out that the all the samples were using the ONNX Gen AI library which was created by Microsoft MVP, feiyun (Unfortunately, I don’t know the real name) and that was archived. This made me nervous as I did not want to suggest something which is not maintained.

After digging out a little, I found out that feiyun has submitted a PR in Semantic Kernel repo for it to be a part of the official package. This made me super excited and as soon as the new version got published (last week), I thought I should create my demo using the same package.

Pre-requisites

As discussed above, for us to create a very simple .NET app, we need few essential packages as well as a Phi-3 model.

Download Phi-3

We will be using the smallest Phi-3 variant which is phi-3-mini-4k-instruct-onnx and can easily be downloaded through Hugging Face either using their huggingface-cli or git. You can save this model to your choice of folder, I have downloaded them to my D:\models folder.

Note: Just be very patient when downloading using git as it might take forever. For me, it took more than an hour on 75 Mbps internet. It’s that bad!

Semantic Kernel

My previous post talks in detail about Copilot and the step-by-step process to build one with Semantic Kernel. In this post, we’ll go straight to the point. Let’s create a simple Console App .NET 8 and add the latest Microsoft.SemanticKernel package to it with a version 1.16.2. In addition to this package, we will also add Microsoft.SemanticKernel.Connectors.Onnx with a version 1.16.2-alpha which will allow us to use the ONNX model you downloaded few minutes ago.

Just replace your Program.cs with this code and change the path of your model to make it work.

#pragma warning disable SKEXP0070

#pragma warning disable SKEXP0050

#pragma warning disable SKEXP0001

#pragma warning disable SKEXP0010

// Create a chat completion service

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.ChatCompletion;

using Microsoft.SemanticKernel.Connectors.OpenAI;

using Microsoft.SemanticKernel.Embeddings;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Plugins.Memory;

// Your PHI-3 model location

var modelPath = @"D:\models\Phi-3-mini-4k-instruct-onnx\cpu_and_mobile\cpu-int4-rtn-block-32";

// Load the model and services

var builder = Kernel.CreateBuilder();

builder.AddOnnxRuntimeGenAIChatCompletion("phi-3", modelPath);

// Build Kernel

var kernel = builder.Build();

// Create services such as chatCompletionService and embeddingGeneration

var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();

Console.ForegroundColor = ConsoleColor.Cyan;

Console.WriteLine("""

_ _ _____ _____

| | | | | __ \ /\ / ____|

| | ___ ___ __ _| | | |__) | / \ | | __

| | / _ \ / __/ _` | | | _ / / /\ \| | |_ |

| |___| (_) | (_| (_| | | | | \ \ / ____ \ |__| |

|______\___/ \___\__,_|_| |_| \_\/_/ \_\_____|

by Arafat Tehsin

""");

// Start the conversation

while (true)

{

// Get user input

Console.ForegroundColor = ConsoleColor.White;

Console.Write("User > ");

var question = Console.ReadLine()!;

// Enable auto function calling

OpenAIPromptExecutionSettings openAIPromptExecutionSettings = new()

{

ToolCallBehavior = ToolCallBehavior.EnableKernelFunctions,

MaxTokens = 200

};

var response = kernel.InvokePromptStreamingAsync(

promptTemplate: @"{{$input}}",

arguments: new KernelArguments(openAIPromptExecutionSettings)

{

{ "input", question }

});

Console.ForegroundColor = ConsoleColor.Green;

Console.Write("\nAssistant > ");

string combinedResponse = string.Empty;

await foreach (var message in response)

{

//Write the response to the console

Console.Write(message);

combinedResponse += message;

}

Console.WriteLine();

}

Now, if you run this code, you will see this:

Although, we’re not there yet with RAG but you can still chat with Phi-3 model in a similar way as you’d do with any other Large Language Model (LLM).

Embeddings

Now, as I mentioned in my start that the aim of this post is to go all local (not even localhost), this brings another challenge as how we can add embedding capability without using Azure OpenAI, OpenAI, Llama or the similar models.

Option 1

Well, we won’t go with all of this. Rather, there’s another cool addition to our ecosystem and that’s called, .NET Smart Components. They have brought us the capability of Local Embeddings. Whilst there is another option of local embedding using BertOnnxTextEmbeddingGeneration as a part of ONNX package but I haven’t seen any working examples of that yet.

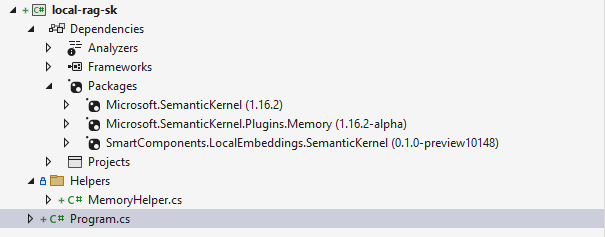

Now, in order to achieve that, first we need a LocalEmbeddings package. I will come to the code part later with everything together to make your life easier. However, that’s how the project structure will look like.

UPDATE: 17 August, 2024

When I published my post, I got a few messages that a lot of folks were not able to run this code because they were encountering issues with Smart Component’s LocalEmbeddings and Microsoft.SemanticKernel.Connectors.Onnx working together. Whilst I raised this bug myself to the Semantic Kernel repo, I have figured out that my good friend and Microsoft AI MVP, Jose Luis Latorre and David Puplava suggested a fantastic solution to overcome this problem.

Option 2

Let’s download a bge-micro-v2 from Hugging Face in a similar way as described above. bge-micro-v2 is a lightweight model suitable for smaller datasets and devices with limited resources. It’s designed to provide fast inference times at the cost of slightly lower accuracy compared to larger models. We’ll be using it for embeddings. Once you have downloaded it, all you have to do is, instead of using LocalEmbeddings, you will have to replace it with the following code:

// Your PHI-3 model location

var phi3modelPath = @"D:\models\Phi-3-mini-4k-instruct-onnx\cpu_and_mobile\cpu-int4-rtn-block-32";

var bgeModelPath = @"D:\models\bge-micro-v2\onnx\model.onnx";

var vocabPath = @"D:\models\bge-micro-v2\vocab.txt";

// Load the model and services

var builder = Kernel.CreateBuilder();

builder.AddOnnxRuntimeGenAIChatCompletion("phi-3", phi3modelPath);

builder.AddBertOnnxTextEmbeddingGeneration(bgeModelPath, vocabPath);

Memory

As I mentioned earlier in the post, the focus is around showing the capability of how you can bring up offline SLMs to your apps therefore, for the storage, we will not be going with any fancy capability rather a simple text-based dictionary. In this case, we’ll going with VolatileMemory store for now with SemanticTextMemory. We will also make use of TextMemoryPlugin for its out of the box Recall function so we can re-use to find out the answers from the memory.

Let’s also create a folder called Helpers inside our project and within that, create a class and call it MemoryHelper.cs(refer to the picture in earlier section). This will contain the sample data I created for our organisation collection which I named as TheLevelOrg(Inspired by Harris Brakmic). You can simply replace everything in your MemoryHelper.cs with the below:

Now you can go and replace your previous Program.cs file so it will have all the memory capabilities. I have included the code comments for you to understand it better.

As we continue to explore this new world of SLMs, we find new ways to solve problems and innovate. This post is a step towards when you won’t have to rely on the internet capabilities at all for high compute and better results. Next, we’ll take on the challenge of integrating different types of documents into our RAG solution. Stay tuned as we’ll dive deeper into memory and document processing to enhance our AI capabilities.

It failed to answer in other languages. Is it a model issue or SK issue?

Replicate issue with this “who are you? answer in Japanese”