Adding AI to a system's workflow can help its users complete their tasks much faster. The Semantic Kernel team and community have been working hard to create a Java-based kernel so that Java developers can bring AI into their apps. I will walk you through this journey below.

We will review the following concepts with examples:

- Kernel and use of Inline Function.

- Plugins and Functions loaded from directory.

- Plugins and Functions as pipeline.

- Giving memory to LLM, using local volatile memory and Azure Cognitive Search.

All the examples are on GitHub: https://github.com/sohamda/SemanticKernel-Basics

Step #1: Create a Kernel

The kernel orchestrates a user’s ask. To do so, the kernel runs a pipeline / chain that is defined by a developer. While the chain is run, a common context is provided by the kernel so data can be shared between functions.
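As a rough illustration of that idea (not the Semantic Kernel API itself), the sketch below runs a chain of steps that all read from and write to one shared context. The class name `PipelineSketch`, the map-based context, and the two steps are assumptions made up for this example.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.UnaryOperator;

// Simplified illustration only: this is NOT the Semantic Kernel API.
// Every step receives the same context map and may add or change entries.
class PipelineSketch {

    // Runs the steps in order, handing the shared context from one to the next.
    static Map<String, String> run(Map<String, String> context,
                                   List<UnaryOperator<Map<String, String>>> steps) {
        Map<String, String> current = new HashMap<>(context); // mutable working copy
        for (UnaryOperator<Map<String, String>> step : steps) {
            current = step.apply(current);
        }
        return current;
    }

    public static void main(String[] args) {
        // Hypothetical step 1: normalize whitespace in the user's input.
        UnaryOperator<Map<String, String>> normalize = ctx -> {
            ctx.put("input", ctx.get("input").trim().replaceAll("\\s+", " "));
            return ctx;
        };
        // Hypothetical step 2: store the length so a later step could use it.
        UnaryOperator<Map<String, String>> measure = ctx -> {
            ctx.put("length", String.valueOf(ctx.get("input").length()));
            return ctx;
        };

        Map<String, String> result =
                run(Map.of("input", "  Hello    kernel  "), List.of(normalize, measure));
        System.out.println(result.get("input") + " (" + result.get("length") + " chars)");
    }
}
```

The second step never sees the raw user input; it only sees what the first step left in the context, which is the data-sharing behaviour described above.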

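First, create a properties file (for example src/main/resources/conf.properties, the path the code below reads) with your Azure OpenAI settings:

client.azureopenai.key=XXYY1232
client.azureopenai.endpoint=https://abc.openai.azure.com/
client.azureopenai.deploymentname=XXYYZZ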

You can get these details from the Azure portal (endpoint and key) and Azure OpenAI (AOAI) studio (deployment name).
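A missing or misspelled key in this file is an easy mistake to make. The sketch below uses only `java.util.Properties` to check that the three keys shown above are present before any client is built. The class name `ConfCheckSketch` and its helper methods are hypothetical and not part of Semantic Kernel.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.List;
import java.util.Properties;
import java.util.stream.Collectors;

// Hypothetical helper: verifies that the expected settings exist.
class ConfCheckSketch {

    // The three keys used in this article's conf.properties.
    static final List<String> REQUIRED = List.of(
            "client.azureopenai.key",
            "client.azureopenai.endpoint",
            "client.azureopenai.deploymentname");

    // Parses properties-file content that is already held in a String.
    static Properties parse(String content) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(content));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // Returns the required keys that are absent or blank.
    static List<String> missingKeys(Properties props) {
        return REQUIRED.stream()
                .filter(key -> props.getProperty(key, "").trim().isEmpty())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        String content = String.join("\n",
                "client.azureopenai.key=XXYY1232",
                "client.azureopenai.endpoint=https://abc.openai.azure.com/");
        // The deployment name is left out on purpose to show the check.
        System.out.println("Missing keys: " + missingKeys(parse(content)));
    }
}
```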

This article uses a deployment of the “gpt-35-turbo” language model.

To create such a resource in Azure, follow: How-to – Create a resource and deploy a model using Azure OpenAI Service – Azure OpenAI | Microsoft Learn

Then add:

AzureOpenAISettings settings = new AzureOpenAISettings(
    SettingsMap.getWithAdditional(List.of(new File("src/main/resources/conf.properties"))));

OpenAIAsyncClient client = new OpenAIClientBuilder().endpoint(settings.getEndpoint()).credential(new AzureKeyCredential(settings.getKey())).buildAsyncClient();

TextCompletion textCompletion = SKBuilders.chatCompletion().build(client, settings.getDeploymentName());

KernelConfig config = SKBuilders.kernelConfig().addTextCompletionService("text-completion", kernel -> textCompletion).build();

Kernel kernel = SKBuilders.kernel().withKernelConfig(config).build();

Step #2: Add a Function

Function? Yes 😊

Think of this as a prompt you provide to ChatGPT to direct it to do something. The example below shows how to create a “reusable” prompt, aka a function, that instructs the LLM to summarize a given input.

String semanticFunctionInline = """

{{$input}}

Summarize the content above in less than 140 characters.

""";
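To make the `{{$input}}` placeholder concrete, here is a deliberately simplified sketch of what happens to it before the prompt is sent: the placeholder is replaced by the value of the matching variable. This is plain string substitution written for illustration; Semantic Kernel's real template engine is more capable, and the class name `PromptTemplateSketch` is made up.

```java
import java.util.Map;

// Simplified illustration only: NOT the Semantic Kernel template engine.
class PromptTemplateSketch {

    // Replaces every {{$name}} placeholder with the value stored under "name".
    static String render(String template, Map<String, String> variables) {
        String rendered = template;
        for (Map.Entry<String, String> variable : variables.entrySet()) {
            // String.replace does a literal (non-regex) replacement.
            rendered = rendered.replace("{{$" + variable.getKey() + "}}", variable.getValue());
        }
        return rendered;
    }

    public static void main(String[] args) {
        String template = "{{$input}}\n\nSummarize the content above in less than 140 characters.";
        String prompt = render(template, Map.of("input", "Semantic Kernel now has a Java flavour."));
        System.out.println(prompt);
    }
}
```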

Step #3: Instruct the LLM to use this Function + Additional Settings

Before you invoke the AOAI API, you need to add this prompt/function to the call along with some additional settings, such as maxTokens, temperature, topP, etc.

FYI: LLMs are non-deterministic, meaning the output for the same input might differ every time you invoke them. The “temperature” parameter controls this: a higher temperature makes the output more varied, and a lower temperature makes it more predictable.
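As an aside, the effect of temperature can be sketched with the common textbook formulation: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The logits below are invented, and the article does not describe how the hosted model samples internally, so treat this as an intuition aid under those assumptions.

```java
import java.util.Locale;

// Intuition aid: softmax with temperature over made-up logits.
class TemperatureSketch {

    // Converts logits to probabilities after scaling them by 1/temperature.
    static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) {
            throw new IllegalArgumentException("temperature must be > 0");
        }
        // Subtract the largest scaled logit for numerical stability.
        double max = Double.NEGATIVE_INFINITY;
        for (double logit : logits) {
            max = Math.max(max, logit / temperature);
        }
        double[] probs = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            probs[i] = Math.exp(logits[i] / temperature - max);
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    public static void main(String[] args) {
        double[] logits = {2.0, 1.0, 0.5}; // hypothetical scores for three candidate tokens
        for (double t : new double[] {0.4, 1.0, 1.5}) {
            double[] p = softmax(logits, t);
            System.out.printf(Locale.ROOT, "T=%.1f -> %.3f %.3f %.3f%n", t, p[0], p[1], p[2]);
        }
    }
}
```

At a low temperature most of the probability lands on the top-scoring token, so repeated calls tend to agree; at a higher temperature the probabilities are closer together, so the outputs vary more.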

CompletionSKFunction summarizeFunction = SKBuilders

.completionFunctions(kernel)

.createFunction(

semanticFunctionInline,

new PromptTemplateConfig.CompletionConfigBuilder()

.maxTokens(100)

.temperature(0.4)

.topP(1)

.build());

log.debug("== Run the Kernel ==");

Mono<SKContext> result = summarizeFunction.invokeAsync(TextToSummarize);

log.debug("== Result ==");

log.debug(result.block().getResult());

“TextToSummarize” is the user input, a String which we want to summarize using the “semanticFunctionInline” function and LLM.

Here is an example –> InlineFunction.java

CONGRATULATIONS!! 😊 You created your first Java program to interact with an LLM using Semantic Kernel.

Step #4: Plugins and Functions

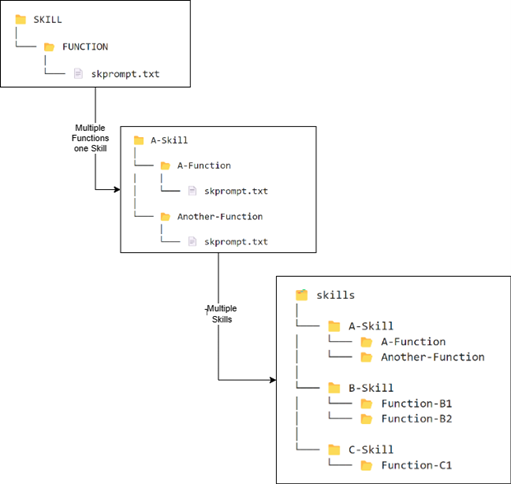

Functions are reusable prompts with AOAI API settings which can be used when invoking LLMs. A collection of functions is called a Plugin.

A Function is represented by a “skprompt.txt” and optionally a “config.json”.

Functions and Plugins are organized in a directory structure and ideally placed on the classpath. In this article's examples, the Plugins and Functions are placed inside the “/resources” folder. You could also move them into their own repo and release them as JARs that your application code uses as a dependency. That makes them easy to use, part of the DevOps workflow, and reusable by multiple applications.
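The sketch below shows one way to inspect such a directory with plain `java.nio`. It assumes the layout `<plugin folder>/<function folder>/skprompt.txt` and treats every sub-folder containing a “skprompt.txt” as a Function. The class name `PluginLayoutSketch` and the “Topics” folder are invented for the example; this is not how Semantic Kernel itself loads Plugins.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Illustration only: lists the functions found in a plugin directory.
class PluginLayoutSketch {

    // A sub-directory counts as a function when it contains skprompt.txt.
    static List<String> functionNames(Path pluginDir) throws IOException {
        try (Stream<Path> children = Files.list(pluginDir)) {
            return children
                    .filter(Files::isDirectory)
                    .filter(dir -> Files.isRegularFile(dir.resolve("skprompt.txt")))
                    .map(dir -> dir.getFileName().toString())
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        // Build a throwaway layout: SummarizeSkill/Summarize/skprompt.txt
        Path pluginDir = Files.createTempDirectory("skills").resolve("SummarizeSkill");
        Path summarize = Files.createDirectories(pluginDir.resolve("Summarize"));
        Files.write(summarize.resolve("skprompt.txt"),
                List.of("{{$input}}", "Summarize the content above."));
        // Hypothetical folder without a prompt file: it is not reported.
        Files.createDirectories(pluginDir.resolve("Topics"));

        System.out.println("Functions in SummarizeSkill: " + functionNames(pluginDir));
    }
}
```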

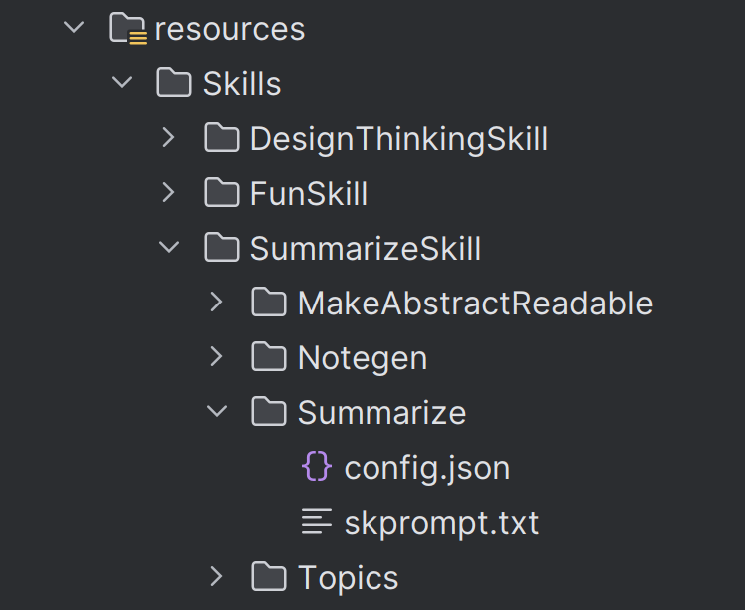

Consider the SummarizeSkill Plugin in the resources folder, which contains multiple functions. To use its Summarize function with Semantic Kernel:

ReadOnlyFunctionCollection skill = kernel.importSkillFromDirectory("SummarizeSkill", "src/main/resources/Skills", "SummarizeSkill");

CompletionSKFunction summarizeFunction = skill.getFunction("Summarize", CompletionSKFunction.class);

log.debug("== Set Summarize Skill ==");

SKContext summarizeContext = SKBuilders.context().build();

summarizeContext.setVariable("input", TextToSummarize);

log.debug("== Run the Kernel ==");

Mono<SKContext> result = summarizeFunction.invokeAsync(summarizeContext);

The code above does the following:

- Loads a Plugin (SummarizeSkill) from a directory.

- Gets the function (Summarize) that needs to be used.

- Adds the input to the context as the “input” variable.

- Invokes the AOAI API with the function.

Here is a starter example –> SummarizerAsPrompt.java

Step #5: Plugin & Function as code

Well, we all love the fact that we can represent Plugins and Functions as Java classes. Then we can instantiate and use them in an OOP way and reduce String manipulation while using them.

Say No More 😊

These are still not in the main branch, but this submodule defines a few that you can use –> semantickernel-plugin-core

You can also implement your own using the annotations @DefineSKFunction, @SKFunctionInputAttribute, and @SKFunctionParameters.

In the example below, we use an existing Plugin, ConversationSummarySkill, and its function SummarizeConversation. It follows the same idea as Step #4, but this time with object references.

import com.microsoft.semantickernel.coreskills.ConversationSummarySkill;

log.debug("== Load Conversation Summarizer Skill from plugins ==");

ReadOnlyFunctionCollection conversationSummarySkill =

kernel.importSkill(new ConversationSummarySkill(kernel), null);

log.debug("== Run the Kernel ==");

Mono<SKContext> summary = conversationSummarySkill

.getFunction("SummarizeConversation", SKFunction.class)

.invokeAsync(ChatTranscript);

The above code does the following:

- Instantiates a ConversationSummarySkill object and imports it into the kernel.

- Gets the function (SummarizeConversation) that needs to be used.

- Passes the input (ChatTranscript) to the function.

- Invokes the AOAI API with the function.

You can also use Plugins and Functions for all sorts of tasks within an AI pipeline: think of transformation, translation, executing business logic, and others. So don’t see Plugins and Functions only as a way to invoke an LLM; they can also perform other tasks needed for the next step in the pipeline, or transform the output of a previous step.

Example code samples –> SummarizerAsPlugin.java and SkillPipeline.java

Step #6: Giving LLM some memory

What’s memory? Think of it as giving the LLM information to support it when executing a Plugin (or a set of Plugins). For example, say you want a summary of your dental insurance details from a 100-page insurance document. You might choose to send the whole document along with your query to the LLM, but every model has a limit on the number of tokens it can process per request. To manage that token limit, it helps to search the document first, extract the relevant pages or texts, and then query the LLM with only those selected pages/texts. That way the LLM can efficiently summarize the content without running out of tokens per request. The “selected pages/texts” in this example are Memories.
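Before a document can be searched piece by piece, it has to be cut into pieces. The sketch below splits text into chunks of at most a given number of words. Counting words is only a rough stand-in for counting tokens (real tokenizers split text differently), and the class name `ChunkSketch` is made up for this example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustration only: word-based chunking as a rough proxy for a token limit.
class ChunkSketch {

    // Splits text on whitespace and groups the words into chunks of maxWords.
    static List<String> chunk(String text, int maxWords) {
        if (maxWords <= 0) {
            throw new IllegalArgumentException("maxWords must be > 0");
        }
        List<String> chunks = new ArrayList<>();
        String trimmed = text.trim();
        if (trimmed.isEmpty()) {
            return chunks;
        }
        String[] words = trimmed.split("\\s+");
        for (int start = 0; start < words.length; start += maxWords) {
            int end = Math.min(start + maxWords, words.length);
            chunks.add(String.join(" ", Arrays.copyOfRange(words, start, end)));
        }
        return chunks;
    }

    public static void main(String[] args) {
        String page = "Dental cover includes two check-ups per year and one cleaning per year";
        for (String piece : chunk(page, 5)) {
            System.out.println("[" + piece + "]");
        }
    }
}
```

Each chunk would then be stored as a separate memory, so a search can return only the chunks relevant to the query.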

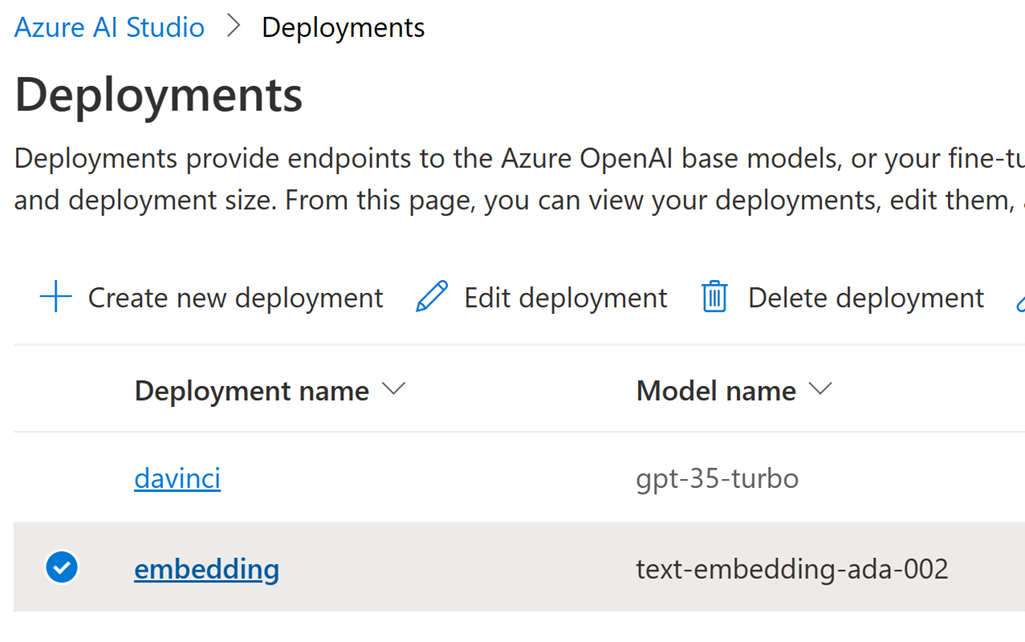

For this example, we will be using 2 Kernels: one with an AI Service of type Embedding and another with TextCompletion.

In Azure AI Studio, you will also need 2 deployments.

Kernel with Embedding is instantiated as follows:

OpenAIAsyncClient openAIAsyncClient = openAIAsyncClient();

EmbeddingGeneration<String> textEmbeddingGenerationService = SKBuilders.textEmbeddingGenerationService()
    .build(openAIAsyncClient, "embedding");

Kernel kernel = SKBuilders.kernel()
    .withDefaultAIService(textEmbeddingGenerationService)
    .withMemoryStorage(SKBuilders.memoryStore().build())
    .build();

The above example uses a volatile memory, a local simulation of a vector DB.

Another option is to use Azure Cognitive Search, in which case the kernel is instantiated as follows:

Kernel kernel = SKBuilders.kernel()

.withDefaultAIService(textEmbeddingGenerationService)

.withMemory(new AzureCognitiveSearchMemory("<ACS_ENDPOINT>", "<ACS_KEY>"))

.build();

After you store your data in the memory, you can search it, which returns the most relevant sections of the memory.

Mono<List<MemoryQueryResult>> relevantMemory = kernel.getMemory().searchAsync("<COLLECTION_NAME>", "<QUERY>", 2, 0.7, true);
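To show what such a search does conceptually, here is a tiny in-memory sketch that ranks stored vectors by cosine similarity to a query vector. It reads the `2` and `0.7` arguments above as a result limit and a minimum relevance score, which is an assumption here. The three-dimensional “embeddings” are hand-made toy values (a real embedding deployment returns much longer vectors), and `VolatileMemorySketch` is not the Semantic Kernel memory store.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustration only: a toy vector store with similarity search.
class VolatileMemorySketch {

    private final Map<String, double[]> records = new LinkedHashMap<>();

    void save(String id, double[] embedding) {
        records.put(id, embedding.clone());
    }

    // Cosine similarity; assumes both vectors are non-zero and of equal length.
    static double cosine(double[] a, double[] b) {
        double dot = 0.0, normA = 0.0, normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Returns up to `limit` ids scoring at least `minRelevance`, best first.
    List<String> search(double[] query, int limit, double minRelevance) {
        List<Map.Entry<String, Double>> scored = new ArrayList<>();
        for (Map.Entry<String, double[]> record : records.entrySet()) {
            double score = cosine(query, record.getValue());
            if (score >= minRelevance) {
                scored.add(Map.entry(record.getKey(), score));
            }
        }
        scored.sort(Map.Entry.<String, Double>comparingByValue().reversed());
        List<String> ids = new ArrayList<>();
        for (Map.Entry<String, Double> entry : scored.subList(0, Math.min(limit, scored.size()))) {
            ids.add(entry.getKey());
        }
        return ids;
    }

    public static void main(String[] args) {
        VolatileMemorySketch memory = new VolatileMemorySketch();
        memory.save("dental-cover", new double[] {1.0, 0.0, 0.0});
        memory.save("dental-claims", new double[] {0.8, 0.6, 0.0});
        memory.save("vision-cover", new double[] {0.6, 0.8, 0.0});
        memory.save("travel-cover", new double[] {0.0, 0.0, 1.0});

        double[] query = {1.0, 0.1, 0.0}; // toy embedding of a dental question
        System.out.println(memory.search(query, 2, 0.7));
    }
}
```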

Then take these search results, extract the memories as text, and run, for example, a Summarizer Plugin using another Kernel object that uses TextCompletion as its AI Service.

List<MemoryQueryResult> relevantMems = relevantMemory.block();

StringBuilder memory = new StringBuilder();

relevantMems.forEach(relevantMem -> memory.append("URL: ").append(relevantMem.getMetadata().getId())

.append("Title: ").append(relevantMem.getMetadata().getDescription()));

Kernel kernel = kernel();

ReadOnlyFunctionCollection conversationSummarySkill =

kernel.importSkill(new ConversationSummarySkill(kernel), null);

Mono<SKContext> summary = conversationSummarySkill

.getFunction("SummarizeConversation", SKFunction.class)

.invokeAsync(memory.toString());

log.debug(summary.block().getResult());

The result will be a summary of the memories that matched the query you initially executed on the Embedding kernel.

Example Code –> SKWithMemory.java and SKWithCognitiveSearch.java

Next Steps

Get involved with the Java Kernel development by contributing and following the public Java board to see what you might want to pick up.

Join the Semantic Kernel community and let us know what you think.
