Today, we’re excited to announce our collaboration with NVIDIA. We’ve integrated NVIDIA NIM microservices and the NVIDIA AgentIQ toolkit into Azure AI Foundry to improve efficiency, performance, and cost optimization for your AI projects. Read more on the announcement here.

Optimizing performance with NVIDIA AgentIQ and Semantic Kernel

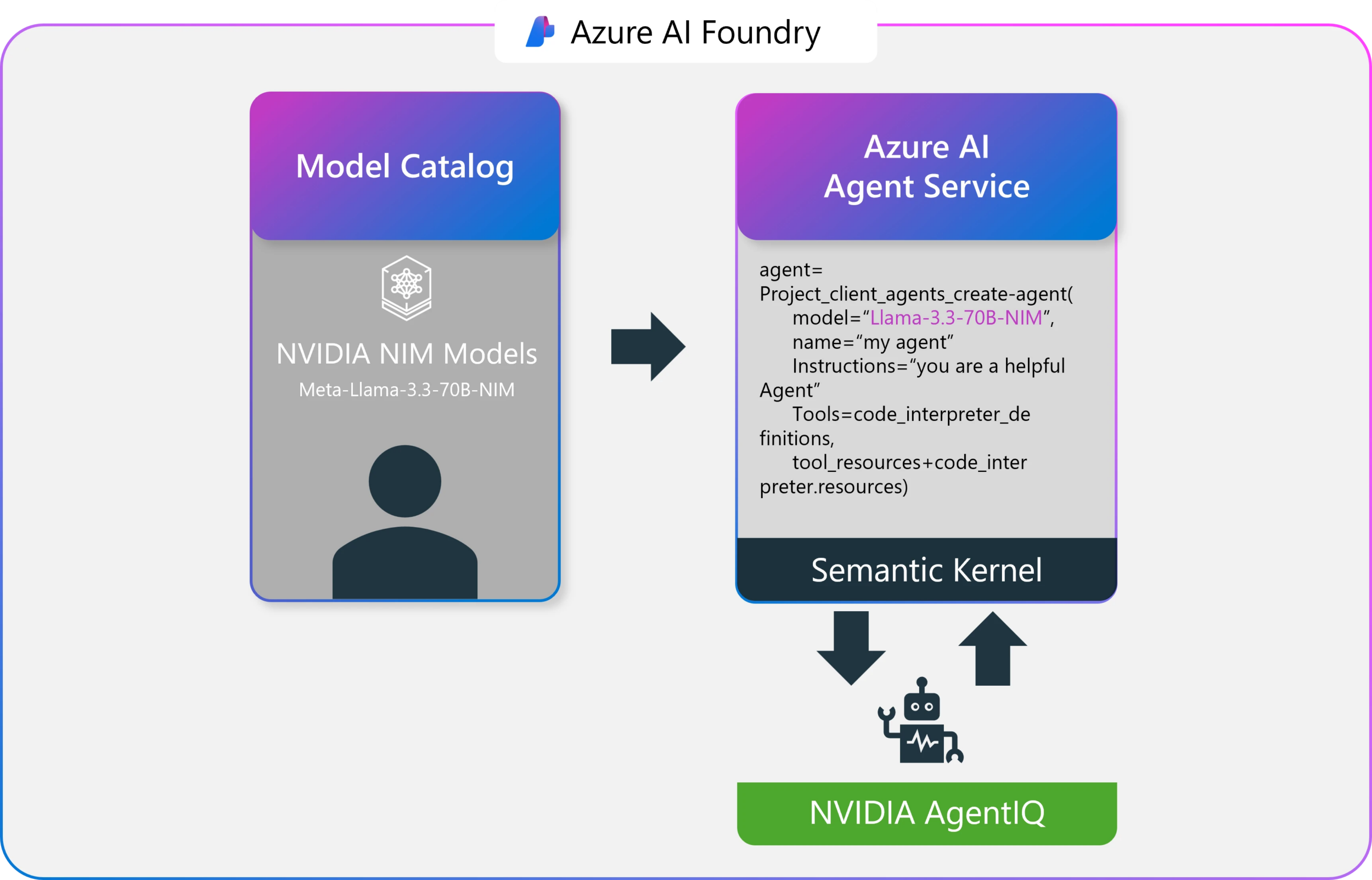

Once your NVIDIA NIM microservices are deployed, NVIDIA AgentIQ takes center stage. This open-source toolkit is designed to connect, profile, and optimize teams of AI agents so that your systems run at peak performance. AgentIQ delivers:

- Profiling and optimization: Leverage real-time telemetry to fine-tune AI agent placement, reducing latency and compute overhead.

- Dynamic inference enhancements: Continuously collect and analyze metadata—such as predicted output tokens per call, estimated time to next inference, and expected token lengths—to dynamically improve agent performance.

- Integration with Semantic Kernel: Direct integration with Azure AI Foundry Agent Service further empowers your agents with enhanced semantic reasoning and task execution capabilities.

This intelligent profiling not only reduces compute costs but also boosts accuracy and responsiveness, so that every part of your agentic AI workflow is optimized for success.
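As a rough illustration of the per-call telemetry described above, the sketch below times each agent call and tracks the size of its output. It is plain Python and does not use the AgentIQ API; `CallProfiler`, `fake_agent`, and the word count used as a stand-in for token counts are all hypothetical names and simplifications chosen for this example.

```python
import time
from collections import defaultdict
from statistics import mean


class CallProfiler:
    """Collects latency and output-size samples per agent (hypothetical helper)."""

    def __init__(self):
        # agent name -> list of (elapsed_seconds, output_word_count)
        self.samples = defaultdict(list)

    def record(self, agent_name, fn, *args, **kwargs):
        """Run fn, store how long it took and how large its output was."""
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        elapsed = time.perf_counter() - start
        # Assumption: whitespace-split words approximate output tokens.
        self.samples[agent_name].append((elapsed, len(str(result).split())))
        return result

    def summary(self):
        """Aggregate the samples into per-agent averages."""
        return {
            name: {
                "calls": len(rows),
                "mean_latency_s": mean(r[0] for r in rows),
                "mean_output_tokens": mean(r[1] for r in rows),
            }
            for name, rows in self.samples.items()
        }


def fake_agent(prompt):
    # Stand-in for a real agent call; simply echoes the prompt.
    return "echo " + prompt


profiler = CallProfiler()
profiler.record("summarizer", fake_agent, "hello world")
profiler.record("summarizer", fake_agent, "one two three")
report = profiler.summary()
print(report)
```

Averages like these are the sort of signal a profiler could feed back into decisions about agent placement or batching; a real deployment would rely on the toolkit's own telemetry rather than a hand-rolled wrapper.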

In addition, we will soon be integrating NVIDIA Llama Nemotron Reason, a family of open AI models designed for advanced reasoning. According to NVIDIA, Nemotron excels at coding, complex math, and scientific reasoning while understanding user intent and calling tools such as search and translation to accomplish tasks.

Ready to dive in?

Deploy NVIDIA NIM microservices and optimize your AI agents. Below are details to get up and running. Details about the NVIDIA Text Embedding Connector can be found here.

This connector enables integration with NVIDIA’s NIM API for text embeddings. It allows you to use NVIDIA’s embedding models within the Semantic Kernel SDK.

Quick start

Initialize the kernel

```python
from semantic_kernel import Kernel

kernel = Kernel()
```

Add NVIDIA text embedding service

You can provide your API key directly or through environment variables
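If you take the environment-variable route, a small helper can fail fast when the key is missing. The sketch below is an assumption for illustration only: `resolve_api_key` is a hypothetical function, not part of the connector, and it reads the `NVIDIA_API_KEY` variable mentioned in this post.

```python
import os


def resolve_api_key(explicit_key=None, env_var="NVIDIA_API_KEY"):
    """Return the explicit key if given, otherwise the value of env_var.

    Hypothetical helper: raises ValueError when neither source has a key.
    """
    key = explicit_key or os.environ.get(env_var)
    if not key:
        raise ValueError(f"No API key given and {env_var} is not set")
    return key


# An explicitly supplied key always wins over the environment.
print(resolve_api_key("demo-key"))
```

The returned value can then be passed as `api_key` when constructing the service.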

```python
from semantic_kernel.connectors.ai.nvidia import NvidiaTextEmbedding

embedding_service = NvidiaTextEmbedding(
    ai_model_id="nvidia/nv-embedqa-e5-v5",  # Default model if not specified
    api_key="your-nvidia-api-key",  # Can also use NVIDIA_API_KEY env variable
    service_id="nvidia-embeddings",  # Optional service identifier
)
```

Add the embedding service to the kernel

```python
kernel.add_service(embedding_service)
```

Generate embeddings for text

```python
texts = ["Hello, world!", "Semantic Kernel is awesome"]

# Note: `await` must run inside an async function (for example, one started with asyncio.run).
embeddings = await kernel.get_service("nvidia-embeddings").generate_embeddings(texts)
```

Now that you have generated the embeddings, you can use them in your application, for example to compare texts by meaning in semantic search or retrieval.
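One common way to put embeddings to work is to rank texts by cosine similarity to a query. The sketch below is a minimal, dependency-free illustration; `cosine_similarity` and `rank_by_similarity` are hypothetical helpers, and the three-dimensional vectors are toy values standing in for real embedding output.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Treat a zero vector as dissimilar to everything.
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, doc_vecs):
    """Return (index, score) pairs sorted from most to least similar."""
    scores = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(doc_vecs)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy vectors; in practice these would come from the embedding service.
docs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
query = [1.0, 0.1, 0.0]
ranking = rank_by_similarity(query, docs)
print(ranking)
```

For larger collections, a vector store or a numerical library would usually replace the pure-Python loops, but the scoring idea stays the same.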
