Today we’re featuring Sticos on our Semantic Kernel blog to highlight their customer journey with AI and Semantic Kernel.

Who is Sticos

- A company that has helped Norwegian businesses, municipalities, accountants, and auditors understand regulations since 1983

- Sticos covers the market from Rv9 in Trondheim

- Sticos has been part of Visma since 2021 and has 127 employees; 70 of us are lawyers, accountants, and system developers

- We love building problem-solving software for our more than 7,000 customers: accountants, auditors, SMEs, and the public sector.

Our Vision: Sticos is determined to become the most customer-driven company in the world. Together we make incomprehensible legislation easy and practical!

What We Do: We combine legal expertise and technology to make it easy for businesses to comply with regulations and to make the right, smart decisions.

Sticos’ history in AI

In February 2017, before LLMs were available, we launched an HR chatbot. It could answer HR-related questions, and you could even tell the @else mobile app “I am sick today”; it would then report the sick leave to the HR system and notify your immediate manager. It was built using neural networks and in-house embedding models.

Fast forward to 2023: as OpenAI's large language models and generative AI became widely available, we had the know-how to start using them straight away with the information and in-house articles published in our encyclopedia, Sticos Oppslag.

We built a simple prototype as a proof of concept, doing vector search in memory alongside our existing search engine. The prototype worked well, and we could see that it would help our users find answers faster than traditional search.

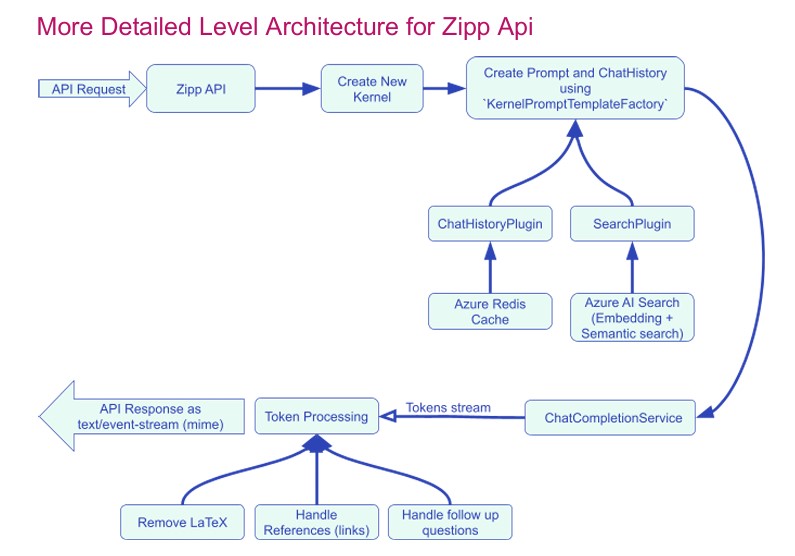
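The post doesn't show the prototype's code. As a rough illustration of what "vector search done in memory" means, here is a minimal sketch of brute-force cosine-similarity search in Python, assuming article embeddings have already been computed by some embedding model. `Article`, `cosine_similarity`, and `top_k` are hypothetical names, and the toy three-dimensional vectors stand in for real embeddings.

```python
import math
from dataclasses import dataclass


@dataclass
class Article:
    """Hypothetical encyclopedia article with a precomputed embedding."""
    title: str
    embedding: list[float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as unrelated to everything
    return dot / (norm_a * norm_b)


def top_k(query_embedding: list[float], articles: list[Article], k: int = 3) -> list[Article]:
    """Brute-force in-memory search: score every article, keep the k best."""
    ranked = sorted(
        articles,
        key=lambda art: cosine_similarity(query_embedding, art.embedding),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    articles = [
        Article("Stocktaking lists", [0.9, 0.1, 0.0]),
        Article("Sick leave", [0.0, 0.2, 0.9]),
        Article("VAT rates", [0.1, 0.9, 0.1]),
    ]
    best = top_k([1.0, 0.0, 0.0], articles, k=1)
    print(best[0].title)  # prints "Stocktaking lists"
```

Scoring every document on each query is fine for a proof of concept; a managed index such as Azure AI Search, which the production version uses, avoids the linear scan as the corpus grows.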

We decided to take Zipp from prototype to production code, with all the bells and whistles. We contracted two people from Visma Resolve to help us build the Zipp API using Azure AI Search and the Azure OpenAI SDK, and in November 2023 we released the first production version of Zipp.

We saw that our code was already somewhat bloated, and after listening to an episode of the Hanselminutes podcast we learned about Semantic Kernel, which Microsoft was developing. We looked into it and realised that this enterprise-ready AI framework could drastically simplify our code. We also saw that it would simplify our plans to expand the API with multiple AI pipelines with different skills. We therefore decided to replace the Azure OpenAI SDK with Semantic Kernel.

Using Semantic Kernel has simplified our code and made it more maintainable and future-proof.

Lessons from using Semantic Kernel for a Year

The technology is moving fast; below are the lessons we learned during this year in production.

A Kernel per API Request

We build a new Kernel from scratch for each API request. We do this for several reasons:

- For scalability, we load-balance our requests across multiple Azure OpenAI instances.

- To roll out new generative models gradually, we can configure which model each API client uses. This lets us activate a new, better model for a client with fewer API requests, verify that it works, and then gradually roll it out to the other clients.

- For GDPR reasons, some API clients want their data processed only inside the EU, so we route their requests to Azure OpenAI instances located in the EU.
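The three reasons above all come down to one per-request decision: which Azure OpenAI instance and model deployment should serve this client? Below is a minimal sketch of that decision in Python, assuming each client's settings store a model deployment name and an EU-only flag, and assuming simple round-robin load balancing (the post doesn't say which strategy Sticos uses). `ModelEndpoint`, `ClientSettings`, and `EndpointSelector` are hypothetical names, and the example URLs are placeholders. In a real service, the selected endpoint and deployment would be handed to Semantic Kernel's Azure OpenAI chat completion connector when building the new Kernel for the request.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEndpoint:
    """Hypothetical Azure OpenAI instance hosting one model deployment."""
    endpoint: str
    deployment: str
    region: str  # e.g. "eu" or "us"


@dataclass(frozen=True)
class ClientSettings:
    """Hypothetical per-client settings kept by the API."""
    deployment: str  # the model this client has been rolled out to
    eu_only: bool    # GDPR: process this client's data only inside the EU


class EndpointSelector:
    """Filters endpoints by model and region, then round-robins over the matches."""

    def __init__(self, endpoints: list[ModelEndpoint]) -> None:
        self._endpoints = endpoints
        # One round-robin counter per distinct (deployment, eu_only) combination.
        self._counters: dict[tuple[str, bool], itertools.count] = {}

    def select(self, client: ClientSettings) -> ModelEndpoint:
        candidates = [
            e for e in self._endpoints
            if e.deployment == client.deployment
            and (not client.eu_only or e.region == "eu")
        ]
        if not candidates:
            raise LookupError(f"no endpoint for {client}")
        key = (client.deployment, client.eu_only)
        counter = self._counters.setdefault(key, itertools.count())
        return candidates[next(counter) % len(candidates)]


if __name__ == "__main__":
    selector = EndpointSelector([
        ModelEndpoint("https://eu-1.example.com", "model-a", "eu"),
        ModelEndpoint("https://us-1.example.com", "model-a", "us"),
        ModelEndpoint("https://eu-2.example.com", "model-b", "eu"),
    ])
    eu_client = ClientSettings(deployment="model-a", eu_only=True)
    print(selector.select(eu_client).endpoint)  # prints "https://eu-1.example.com"
```

Rolling a client over to a newer model is then just a change to its `deployment` setting, and EU-only clients can never be routed outside the EU. As written, the selector is not designed for concurrent use; a multi-threaded server would need to guard the counters with a lock.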

Experienced vs New AI Users

We have found that users with little experience of generative AI tools start by querying the assistant the way they would use a web search engine, typing keywords to find an answer to the problem they are struggling with. For example, a user who wants the legal requirements for stocktaking lists in a store might ask “what are the requirements for stocktaking lists”. More experienced users, by contrast, shift to getting the assistant to perform the task they are working on. From a more experienced user, we instead see a request like this:

“I need to write a letter to my customers about the preparations they should make before stocktaking. Write a suggestion where you refer to the bookkeeping regulations’ requirements for stocktaking lists.” The assistant then writes a complete letter using the information found through our search service. This saves our more experienced users a lot of time, since they get a head start on the letter and only need to adjust small details instead of writing it from scratch.

Zipp Powered by Semantic Kernel

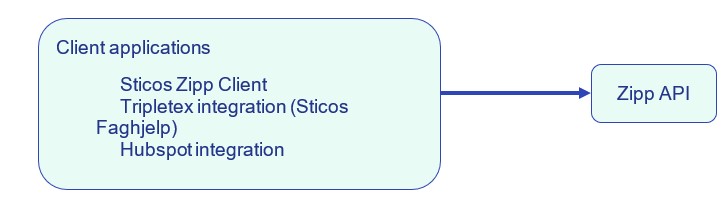

We have created a product named Zipp, a retrieval-augmented generation (RAG) setup with Semantic Kernel as its base framework. The product consists of one API serving multiple client applications.
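The post doesn't show Zipp's prompts. As a generic illustration of the "augmented" step in any RAG setup, here is a minimal sketch in Python that combines search hits into a grounded prompt for the model. `Passage`, `build_grounded_prompt`, the instruction wording, and the character budget are all assumptions for illustration; a production system would typically budget in tokens rather than characters.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """Hypothetical search hit: the source title plus the retrieved text."""
    source: str
    text: str


def build_grounded_prompt(question: str, passages: list[Passage], max_chars: int = 4000) -> str:
    """Assemble a RAG prompt: instructions, numbered sources, then the user's request."""
    blocks: list[str] = []
    used = 0
    for i, p in enumerate(passages, start=1):
        block = f"[{i}] {p.source}\n{p.text}"
        if used + len(block) > max_chars:
            break  # rough context budget; separators are not counted
        blocks.append(block)
        used += len(block)
    context = "\n\n".join(blocks)
    return (
        "Answer using only the sources below and cite them by number. "
        "If the sources do not cover the request, say so.\n\n"
        f"Sources:\n{context}\n\n"
        f"Request: {question}"
    )


if __name__ == "__main__":
    hits = [Passage("Article A", "First text."), Passage("Article B", "Second text.")]
    print(build_grounded_prompt("What applies?", hits))
```

Numbering the sources lets the model cite them, which in turn lets a client application link each claim back to the underlying article.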

High-Level Architecture

Sticos: Customer-Facing Solution with Semantic Kernel

The goal of the customer-facing solution is to help our users quickly find correct answers, saving them time they can spend on other important tasks. There are two client applications for this: Zipp, a product and application that we own, and Sticos Faghjelp, our integration with Tripletex. Both are chatbots where users can ask the questions they need answered. Tripletex is a cloud-based ERP system with 400,000 users.

- In production since 6 November 2023

- 8,000 users across 6,000 companies

- Customer satisfaction score of 86 (on a scale from 0 to 100)

- 70,000+ questions answered so far

- A 30-day retention rate of around 70% with Zipp and 40% with the Tripletex integration

- Questions arriving at every hour of the day

Sticos Internal Tool

We have also integrated the Zipp API with our HubSpot ticketing system. Our expert lawyers, accountants, and auditors help our customers by answering the questions they send directly to us. When a user sends in a question through HubSpot, a ticket is created. Each new ticket starts a process that sends the question to Zipp and adds Zipp's answer as a note on the ticket, which our experts can use as a starting point for their reply.

This is set up as an A/B test: in the control group (A) no note is added, and in group B a note with Zipp's answer is added. This lets us measure the effectiveness of the internal tool. After 16,000 questions the result is statistically significant: on average we spend 8% less time per ticket, which corresponds to around one full-time equivalent (FTE). As Zipp improves, we hope to improve this number as well.
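The post doesn't say how tickets were split between the groups or which significance test was used. Below is a minimal sketch of one common approach in Python, under two stated assumptions: tickets are assigned deterministically by hashing a ticket ID, and the mean handling times of the two groups are compared with Welch's t-test, using a normal approximation for the p-value (reasonable at sample sizes in the thousands). The function names are hypothetical and no Sticos data is included.

```python
import hashlib
import math
import statistics


def assign_group(ticket_id: str) -> str:
    """Deterministic ~50/50 split: 'A' = control (no note), 'B' = Zipp note added."""
    digest = hashlib.sha256(ticket_id.encode("utf-8")).digest()
    return "B" if digest[0] % 2 else "A"


def relative_reduction(control: list[float], treatment: list[float]) -> float:
    """Fractional drop in mean handling time, e.g. 0.08 for 8% less time."""
    return 1 - statistics.mean(treatment) / statistics.mean(control)


def welch_t(control: list[float], treatment: list[float]) -> float:
    """Welch's t-statistic for a difference in means with unequal variances.

    Each group needs at least two observations for the sample variance.
    """
    m1, m2 = statistics.mean(control), statistics.mean(treatment)
    v1, v2 = statistics.variance(control), statistics.variance(treatment)
    se = math.sqrt(v1 / len(control) + v2 / len(treatment))
    return (m1 - m2) / se


def approx_p_value(t: float) -> float:
    """Two-sided p-value from the standard normal (large-sample approximation)."""
    return 2 * (1 - statistics.NormalDist().cdf(abs(t)))
```

Hashing the ticket ID means the same ticket always lands in the same group, even if the process that adds the note is retried. With groups this large, a two-sided p-value below 0.05 corresponds to |t| of roughly 1.96 or more.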

We have also discovered that questions Zipp struggles to answer take our experts significantly longer to answer than other questions. This indicates that questions that are difficult for Zipp are also difficult for humans.

Future work at Sticos

Next year, Sticos will start building assistants for other Sticos products. These will not necessarily use a RAG setup but will rely far more extensively on plugins. Fetching our customers’ own data and exposing it through an assistant will make our users more effective in their daily work. Learn more about Sticos here: https://www.sticos.no/
