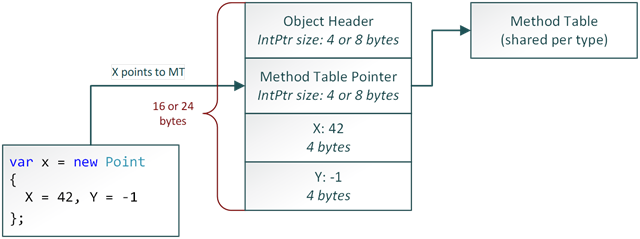

The layout of a managed object is pretty simple: a managed object contains instance data, a pointer to type metadata (the method table pointer), and a bag of internal information known as the object header.
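To make the layout concrete, here is a minimal C++ sketch. The type names (`RawObject`, `ObjectHeader`) and helper functions are hypothetical stand-ins rather than the CLR's real definitions, and the header is assumed to be pointer-sized for simplicity. The point is only to show that a reference holds the address of the method table pointer, so the header sits at a negative offset from it.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-ins for illustration; not the CLR's actual types.
struct MethodTable { std::uint32_t instance_size; };

// Assumed to be pointer-sized here to keep the arithmetic simple.
struct ObjectHeader { std::uintptr_t bits; };

struct RawObject {
    ObjectHeader header;        // stored *before* the address a reference holds
    MethodTable* method_table;  // a managed reference points at this field
    std::int32_t field;         // instance data follows the method table pointer
};

static_assert(offsetof(RawObject, method_table) == sizeof(ObjectHeader),
              "the sketch assumes the header directly precedes the method table pointer");

// The address that a "managed reference" to this object would hold.
inline void* reference_of(RawObject& o) { return &o.method_table; }

// Step back from the reference to reach the header (negative offset).
inline ObjectHeader* header_of(void* reference) {
    return reinterpret_cast<ObjectHeader*>(
        static_cast<char*>(reference) - sizeof(ObjectHeader));
}

// The method table pointer is the first thing found at the reference itself.
inline MethodTable* method_table_of(void* reference) {
    return *static_cast<MethodTable**>(reference);
}
```

With this arrangement, reading the method table needs no offset at all, while the header stays out of the way of anything that only sees the reference.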

The first time I read about it, I had a question: why is the layout of an object so weird? Why does a managed reference point into the middle of an object, with the object header at a negative offset? What information is stored in the object header?

When I started thinking about the layout and did some quick research, I came up with a few possible explanations:

- The JVM has used a similar layout for its managed objects since its inception.

  - This may sound a bit crazy today, but remember that C# has one of the worst features of all time (array covariance) just because Java had it back in the day. Compared to that decision, reusing some ideas about the structure of an object doesn’t sound that unreasonable.

- The object header can grow in size with no cross-cutting changes in the CLR.

  - The object header holds auxiliary information used by the CLR, and the CLR may one day need more than a pointer-sized field. Indeed, the .NET Compact Framework used on mobile phones has different headers for small and large objects (see WP7: CLR Managed Object overhead for more details). The desktop CLR has never used this ability, but that doesn’t rule it out in the future.

- Cache lines and other performance-related characteristics.

Chris Brumme, one of the CLR architects, mentioned in a comment on his post “Value Types” that cache friendliness is the very reason for the managed object layout. In theory, because a cache line is 64 bytes, it is more efficient to access data that sits close together. This means that dereferencing the method table pointer and then accessing a field should perform differently depending on where the field is located inside the object. I spent some time trying to prove that this still holds on modern processors but was unable to produce any benchmark that showed a difference.
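The cache-line argument comes down to simple address arithmetic, sketched below. The helper names are made up for this illustration, and the 64-byte line size is the figure quoted above. This is not a benchmark; it only shows which offsets land on the same line as the method table pointer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr std::uintptr_t kCacheLineSize = 64;  // assumed line size, as quoted in the text

// Index of the cache line that contains the given address.
constexpr std::uintptr_t cache_line_of(std::uintptr_t address) {
    return address / kCacheLineSize;
}

// True when a field located `field_offset` bytes from the reference shares a
// cache line with the method table pointer, which sits at offset 0.
// A negative offset models data stored before the reference (the header).
constexpr bool shares_line_with_method_table(std::uintptr_t reference,
                                             std::ptrdiff_t field_offset) {
    return cache_line_of(reference) ==
           cache_line_of(reference + static_cast<std::uintptr_t>(field_offset));
}
```

For a reference that happens to be 64-byte aligned (say `0x1000`), fields at offsets 8 through 56 share a line with the method table pointer, while a header at offset -8 falls on the previous line. Real objects are not guaranteed to be aligned this way, so the picture changes from object to object.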

After spending some time trying to validate my theories, I contacted Vance Morrison with this very question and got the following answer: the current design was made with no particular perf considerations.

So the answer to the question “Why is the managed object’s layout so weird?” is simple: “historical reasons”. And, to be honest, I can see the logic in placing the object header at a negative offset: it emphasizes that this piece of data is an implementation detail of the CLR, that its size can change over time, and that it should not be inspected by a user.

Now it’s time to inspect the layout in more detail. But before that, let’s think about what extra information the CLR could associate with a managed object instance. Here are some ideas:

- Special flags that the GC can use to mark that an object is reachable from application roots.

- A special flag that tells the GC that an object is pinned and should not be moved during garbage collection.

- The hash code of a managed object (when the GetHashCode method is not overridden).

- A critical section and other information used by a lock statement, such as the thread that acquired the lock.

Apart from instance state, the CLR stores a lot of information associated with a type, such as the method table, interface maps, and instance size, but this is not relevant to the current discussion.

IsMarked flag

The managed object header is a multi-purpose chameleon that can be used for many different purposes. You might think that the garbage collector (GC) uses a bit from the object header to mark that the object is referenced by a root and should be kept alive. This is a common misconception, and a few very famous books are to blame (*).

(*) Namely “CLR via C#” by Jeffrey Richter, “Pro .NET Performance” by Sasha Goldshtein et al., and surely some others.

Instead of using the object header, the CLR authors decided to use one clever trick: the lowest bit of a method table pointer is used to store a flag during garbage collection that the object is reachable and should not be collected.

Here is the actual implementation of the ‘mark’ flag from the coreclr repo, file gc.cpp, line 8974 (**):

    #define marked(i) header(i)->IsMarked()
    #define set_marked(i) header(i)->SetMarked()
    #define clear_marked(i) header(i)->ClearMarked()

    // class CObjectHeader

    // The mark flag lives in the lowest bit of the method table pointer.
    BOOL IsMarked() const
    {
        return !!(((size_t)RawGetMethodTable()) & GC_MARKED);
    }

    // GetMethodTable() masks the flag out, so storing its result clears the mark.
    void ClearMarked()
    {
        RawSetMethodTable(GetMethodTable());
    }

    void SetMarked()
    {
        RawSetMethodTable((MethodTable*)(((size_t)RawGetMethodTable()) | GC_MARKED));
    }

    // Returns the real pointer with the mark bit stripped.
    MethodTable* GetMethodTable() const
    {
        return((MethodTable*)(((size_t)RawGetMethodTable()) & (~(GC_MARKED))));
    }

(**) Unfortunately, the gc.cpp file is so big that GitHub refuses to analyze it, which means I can’t add a hyperlink to a specific line of code.

Managed pointers in the CLR heap are aligned on 4-byte or 8-byte address boundaries, depending on the platform. This means that the lowest 2 or 3 bits of every pointer are always 0 and can be used for other purposes. The JVM exploits the same alignment guarantee in a feature called ‘Compressed Oops’: instead of storing flags in the spare bits, it drops them, so a 4-byte managed pointer holds the address divided by 8 and can cover a heap of 2^32 × 8 bytes = 32 GB.
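The trick is easy to reproduce outside the CLR. The following self-contained sketch is not the coreclr code: `ObjectStub` and `kMarkedBit` are hypothetical names, and `alignas(8)` stands in for the alignment the managed heap guarantees. It stores a flag in the lowest bit of an aligned pointer and masks the flag out when the real pointer is needed.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical type; alignas(8) guarantees that the low 3 address bits are 0.
struct alignas(8) MethodTable { int dummy; };

// Assumed name for this sketch; it plays the role of a "marked" flag.
constexpr std::uintptr_t kMarkedBit = 0x1;

class ObjectStub {
public:
    explicit ObjectStub(MethodTable* mt)
        : raw_(reinterpret_cast<std::uintptr_t>(mt)) {}

    // The flag is stored in the otherwise unused lowest bit of the pointer.
    bool is_marked() const { return (raw_ & kMarkedBit) != 0; }
    void set_marked() { raw_ |= kMarkedBit; }
    void clear_marked() { raw_ &= ~kMarkedBit; }

    // Always mask the flag out before using the value as a pointer.
    MethodTable* method_table() const {
        return reinterpret_cast<MethodTable*>(raw_ & ~kMarkedBit);
    }

private:
    std::uintptr_t raw_;  // pointer bits plus the flag in bit 0
};
```

The cost of the approach is that every read of the pointer has to go through the masking accessor while the flag may be set; dereferencing the raw value directly would use a misaligned, wrong address.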

Technically speaking, even on a 32-bit platform there are 2 bits that can be used for flags. A comment in the object.h file suggests that this is indeed the case and that the second lowest bit of the method table pointer is used for pinning (to mark that the object should not be moved during the compaction phase of garbage collection). Unfortunately, it is not clear whether this is true, because the SetPinned/IsPinned methods in gc.cpp (lines 3850-3859) are implemented using a reserved bit in the object header, and I was unable to find any code in the coreclr repo that actually sets that bit of the method table pointer.
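For contrast with the mark flag, a pin flag kept in the object header could look roughly like the sketch below. This is an illustration under assumptions, not the gc.cpp implementation: `ObjectHeaderStub` and `kGcReservedBit` are invented names, and the bit position is arbitrary.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative bit position for a GC-reserved flag; an assumption of this sketch.
constexpr std::uint32_t kGcReservedBit = 0x20000000u;

// Hypothetical header word that multiplexes several pieces of per-object state.
struct ObjectHeaderStub {
    std::uint32_t bits = 0;

    bool is_pinned() const { return (bits & kGcReservedBit) != 0; }

    // Setting or clearing the flag must leave the other header bits untouched.
    void set_pinned() { bits |= kGcReservedBit; }
    void clear_pinned() { bits &= ~kGcReservedBit; }
};
```

Keeping the pin flag in the header rather than in the method table pointer means the pointer needs only one masking rule (for the mark bit), at the price of sharing the header word with its other users.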

Next time we’ll discuss how locks are implemented and will check how expensive they are.
