Linkai Yu explores the retrieval-augmented generation pattern with the Azure OpenAI Assistants API.

The Retrieval-Augmented Generation (RAG) pattern is the standard way to ground AI with local data. One powerful way to implement RAG is through the function call (tool call) feature. The samples provided for the Azure OpenAI Assistants API function call, the Completion API function call, and the Autogen function call all require the function metadata to be specified in the code ahead of time. Now imagine you have hundreds of data I/O functions, ranging from SQL queries to microservice calls, and your application needs to support user prompts so varied that you cannot reliably predict, and pre-define, which functions they will need.
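To make "function metadata specified in the code" concrete, here is a minimal sketch of a hand-written tool definition in the OpenAI `tools` format. The function name comes from the list used later in this post; the description text and the `holder_name` parameter are hypothetical and only illustrate the shape.

```python
# A statically declared tool, written by hand before any user prompt arrives.
# The description and the "holder_name" parameter are illustrative assumptions.
static_tools = [
    {
        "type": "function",
        "function": {
            "name": "get_health_insurance_policy",
            "description": "Look up the health insurance policy for a policy holder.",
            "parameters": {
                "type": "object",
                "properties": {
                    "holder_name": {
                        "type": "string",
                        "description": "Full name of the policy holder.",
                    },
                },
                "required": ["holder_name"],
            },
        },
    },
]


def tool_names(tools):
    """Return the function names declared in a tools list."""
    return [tool["function"]["name"] for tool in tools]


print(tool_names(static_tools))
```

With hundreds of functions, a list like this has to be written and maintained up front, which is the cost the rest of this post tries to avoid.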

The question then becomes: “Is there a way to figure out which functions are needed after the user prompt is received, and then dynamically configure the Tools parameter (in the Completion API and Assistants API) or Autogen’s agent llm_config and function map?”

This blog tries to answer that question. We use Autogen as the example because it supports function calls very well.

The benefit of this approach is that once the architecture is in place, you can connect it to your backend data I/O functions. The result is an application that lets users ask a variety of questions in different combinations without a developer having to change the app logic. Developers can add functions to the list without having to change the application file.

Let’s suppose your app has a list of functions:

- read_file

- save_to_file

- get_health_insurance_account

- get_health_insurance_policy

- get_policy_benefits

- summarize_policy_content

- find_careproviders

- analyze_sentiment

- ask_a_question

A user may ask this question:

“I have an insurance account with me (Brad Smith) as policy holder. I’d like to get the benefits, have it summarized in a paragraph and save it to file c:\mybenefits.txt”

Or this question:

“I have an insurance account with me (Brad Smith) as policy holder. I’d like to find healthcare providers in my area (New York), and save it to file c:\careproviders.txt”

Or… other questions, so long as your app has data I/O functions to support the data operations.

The algorithm for this use case is:

- We provide (register) a generic function that knows how to find a function given a description of it, e.g. RegisterFunction(“find insurance policy”). In the code below it is implemented as register_functions().

- We ask the AI to make a function call to RegisterFunction(<description>) if it needs information from the user agent.

- The user agent invokes RegisterFunction() with the description it received from the AI.

- RegisterFunction() does a vector DB search for the function name using the function description.

- With the function name, it looks up the actual function in the functions dictionary (key=name, value=function). It then registers the function with the user agent (for the function map) and the assistant agent (for llm_config). The function information is now available for the AI to call.

- The AI makes the right function call. The user agent invokes the function.
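The steps above can be sketched without any agent framework. This is a simplified stand-in under stated assumptions, not the Autogen implementation shown later: the vector search is replaced by a plain word-overlap score, the two functions have placeholder bodies, and the names `ALL_FUNCTIONS`, `registered`, `search_by_description`, and `register_function` are hypothetical.

```python
from typing import Callable, Dict


def desc(text: str):
    """Attach a text description to a function."""
    def wrapper(f):
        f.__desc__ = text
        return f
    return wrapper


@desc("get the health insurance policy for an account")
def get_health_insurance_policy(account_id: str) -> str:
    return f"policy for {account_id}"  # placeholder body


@desc("save the content to a file")
def save_to_file(file_path: str, content: str) -> str:
    return "success"  # placeholder body; nothing is written


# Dictionary of every available function (key=name, value=function).
ALL_FUNCTIONS: Dict[str, Callable] = {
    f.__name__: f for f in (get_health_insurance_policy, save_to_file)
}

# Functions the AI is currently allowed to call; empty until something is registered.
registered: Dict[str, Callable] = {}


def search_by_description(query: str) -> str:
    """Stand-in for the vector search: return the name of the function whose
    description shares the most words with the query."""
    query_words = set(query.lower().split())

    def overlap(name: str) -> int:
        return len(query_words & set(ALL_FUNCTIONS[name].__desc__.lower().split()))

    return max(ALL_FUNCTIONS, key=overlap)


def register_function(description: str) -> str:
    """Find the best-matching function and make it callable."""
    name = search_by_description(description)
    registered[name] = ALL_FUNCTIONS[name]
    return name


# The AI asks for a capability by description, then calls the registered function.
name = register_function("find insurance policy")
result = registered[name]("A-100")
print(name, "->", result)
```

Only the function that was asked for ends up in `registered`; the rest stay hidden until a later description matches them.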

To make this process happen, we need these data structures:

- A dictionary of all the functions.

  - We use this dictionary to find the function object so that we can register it with the user agent and the assistant agent.

- A vector DB that has three fields: id, name, description.

  - The id maintains record uniqueness. The name is the function name. The description is a text description of the function, used for vector search.

- A decorator to decorate the function with a description property.

  - This gives the vector DB its function description field. It also lets RegisterFunction() provide the function description to the user agent.

This is the function table used to populate the vector DB:

    functions_table = [
        {"id": "1", "func": read_file},
        {"id": "2", "func": save_to_file},
        {"id": "3", "func": get_health_insurance_account},
        {"id": "4", "func": get_health_insurance_policy},
        {"id": "5", "func": get_policy_benefits},
        {"id": "6", "func": summarize_policy_content},
        {"id": "7", "func": find_careproviders},
        {"id": "8", "func": analyze_sentiment},
        {"id": "9", "func": ask_a_question},
    ]

This is the function dictionary created at runtime using the functions_table:

    functions_dict = {item["func"].__name__: item["func"] for item in functions_table}

This is the function decorator for the description:

    def desc(desc):
        def wrapper(f):
            f.__desc__ = desc
            return f
        return wrapper

Example usage of the decorator:

    @desc("read a file")
    def read_file(file_path: Annotated[str, "Name and path of file to read."]) -> Annotated[str, "file content"]:
        ...
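The decorator and the `Annotated` hints together carry everything needed to describe a function to the AI. The sketch below reads that information back with the standard library; it is a simplified illustration and not how Autogen builds its tool schema internally. The `describe` helper and the body of `read_file` are hypothetical.

```python
from typing import Annotated, get_type_hints


def desc(text):
    """Attach a text description to a function as f.__desc__."""
    def wrapper(f):
        f.__desc__ = text
        return f
    return wrapper


@desc("read the content of a file")
def read_file(file_path: Annotated[str, "Name and path of file to read."]) -> Annotated[str, "file content"]:
    # Placeholder body for illustration only.
    return f"<content of {file_path}>"


def describe(func):
    """Collect the name, description, and per-parameter notes of a function."""
    hints = get_type_hints(func, include_extras=True)
    params = {
        name: hint.__metadata__[0]
        for name, hint in hints.items()
        if name != "return" and hasattr(hint, "__metadata__")
    }
    return {"name": func.__name__, "description": func.__desc__, "parameters": params}


info = describe(read_file)
print(info)
```

The decorated function is still an ordinary callable, so the same object can be stored in the functions dictionary and invoked by the user agent.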

Code explanation:

We put all the functions in a separate file to be included by the main file. This will allow the update of the functions file without having to update the main file.

1. Define the data IO functions:

    from typing_extensions import Annotated

    # decorator that attaches a description to a function
    def desc(desc):
        def wrapper(f):
            f.__desc__ = desc
            return f
        return wrapper

    # define custom functions:
    @desc("read the content of a file")
    def read_file(file_path: Annotated[str, "Name and path of file to read."]) -> Annotated[str, "file content"]:
        …

    @desc("save the content to a file")
    def save_to_file(file_path: Annotated[str, "full path to the file"], content: Annotated[str, "content"]) -> Annotated[str, "status: success or error"]:
        …

2. Enter all the functions in functions_table:

    functions_table = [
        {"id": "1", "func": read_file},
        {"id": "2", "func": save_to_file},
        {"id": "3", "func": get_health_insurance_account},
        {"id": "4", "func": get_health_insurance_policy},
        {"id": "5", "func": get_policy_benefits},
        {"id": "6", "func": summarize_policy_content},
        {"id": "7", "func": find_careproviders},
        {"id": "8", "func": analyze_sentiment},
        {"id": "9", "func": ask_a_question},
    ]

3. The framework file that manages the function API file.

We use chromadb as an in-memory vector DB for the sake of convenience. For better performance, using the Azure Cognitive Search service (now named Azure AI Search) is recommended.
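One thing to keep in mind with a nearest-match lookup: asking the vector DB for a single result always returns something, even when no function really fits the description. A possible guard, sketched here under assumptions, is to check the distance of the top match before accepting it. The `pick_function_name` helper and the cutoff of 1.0 are hypothetical; the input dictionaries are hand-built to mimic the shape of a Chroma query result for one query text, and a sensible cutoff depends on the embedding model.

```python
from typing import Optional


def pick_function_name(results: dict, max_distance: float) -> Optional[str]:
    """Return the best-matching function name, or None if the match is too weak.

    `results` is expected to look like a Chroma query result for a single
    query text: lists of lists under "metadatas" and "distances".
    """
    metadatas = results["metadatas"][0]
    distances = results["distances"][0]
    if not metadatas:
        return None
    # Index 0 is the closest match; reject it if it is beyond the cutoff.
    if distances[0] > max_distance:
        return None
    return metadatas[0]["name"]


# Hand-built results for illustration; real values would come from collection.query().
close = {"metadatas": [[{"name": "read_file"}]], "distances": [[0.3]]}
far = {"metadatas": [[{"name": "read_file"}]], "distances": [[1.7]]}

print(pick_function_name(close, max_distance=1.0))
print(pick_function_name(far, max_distance=1.0))
```

When the helper returns None, the registration function could report that no suitable function exists instead of registering an unrelated one.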

    from pydantic import BaseModel, Field
    from typing_extensions import Annotated
    import autogen
    import os
    from dotenv import load_dotenv
    import _FunctionFactory_5 as functions

    # load llm config
    load_dotenv()

    config_list = [{
        'model': os.getenv("AZURE_OPENAI_MODEL"),
        'api_key': os.getenv("AZURE_OPENAI_API_KEY"),
        'base_url': os.getenv("AZURE_OPENAI_ENDPOINT"),
        'api_type': 'azure',
        'api_version': os.getenv("AZURE_OPENAI_API_VERSION"),
        'tags': ["tool", "gpt-4"]
    }]

    llm_config = {
        "config_list": config_list,
        "timeout": 120,
    }

    # functions dict (key=name, value=function) for lookup used by get_function()
    functions_dict = {item["func"].__name__: item["func"] for item in functions.functions_table}

    # in-memory vector database for function lookup
    import chromadb

    documents = []
    metadatas = []
    ids = []

    # populate the documents, metadatas and ids for the functions
    for item in functions.functions_table:
        documents.append(item["func"].__desc__)
        metadatas.append({"name": item["func"].__name__})
        ids.append(item["id"])

    # create the collection and add the documents
    client = chromadb.Client()
    collection = client.create_collection("functions")
    collection.add(
        documents=documents,  # function descriptions; embedded by the collection's default embedding function
        metadatas=metadatas,  # function names, returned with each match
        ids=ids,  # must be unique for each doc
    )

    # function factory to get a function based on the description. it is called by register_functions(), which the user proxy agent executes
    from typing import Callable, Any, Dict

    def get_function(description: str) -> Callable[..., Any]:
        """
        use the description to find the best-matching function via vector search

        args:
            description (str): the description of the function.

        returns:
            Callable[..., Any]: the function.
        """
        results = collection.query(
            query_texts=[description],
            n_results=1,
            # where={"metadata_field": "is_equal_to_this"},  # optional filter
            # where_document={"$contains": "search_string"}  # optional filter
        )
        # first (and only) match for the first (and only) query text
        name = results["metadatas"][0][0]["name"]
        func = functions_dict.get(name, None)
        if func is not None:
            return func
        else:
            print(f"get_function() error: no function found for: {description}")
            raise Exception(f"get_function failed to find a function for: {description}")

    # function to register other functions for agent to call, given the description
    @functions.desc("register the function for agent based on the given description")
    def register_functions(function_description: Annotated[str, "description of the function to register."]) -> Annotated[str, "registration result"]:
        """
        register the functions based on the description

        args:
            function_description (str): the description of the function to register.

        returns:
            str: registration result
        """
        func = get_function(function_description)
        # expose the function to the LLM (assistant) and make it executable (user proxy)
        assistant.register_for_llm(name=func.__name__, description=func.__desc__)(func)
        user_proxy.register_for_execution(name=func.__name__)(func)
        return f"registering: {func.__name__} for: '{function_description}'"

    # create user agent and assistant agent
    user_system_message = """
    You are a helpful AI agent. when you talk with assistant, help them to make their response as accurate as possible to the user's requirement. make sure to execute the task in the correct order of the assistant's response.
    """

    assistant_system_message = """
    For coding tasks, only use the functions you have been provided with.
    do not generate answer on your own. do not guess.
    for tasks that needs to access user local resources, do not generate python code. use the functions provided to you.
    if you don't have enough information to execute the task, call the given 'register_functions' function with a brief description e.g. 'get insurance policy'.
    if you need to save content to or read content from a file, call register_functions function to register functions that can save to or read from file.
    Reply TERMINATE when the task is done.
    """

    import typing

    assistant = None
    user_proxy = None

    def Create_Agents() -> typing.Tuple[autogen.UserProxyAgent, autogen.AssistantAgent]:
        global assistant
        global user_proxy

        assistant = autogen.AssistantAgent(
            name="assistant",
            system_message=assistant_system_message,
            llm_config=llm_config,
        )

        user_proxy = autogen.UserProxyAgent(
            name="User",
            llm_config=False,
            is_termination_msg=lambda msg: msg.get("content") is not None and "TERMINATE" in msg["content"],
            system_message=user_system_message,
            human_input_mode="NEVER",
            max_consecutive_auto_reply=12,
            code_execution_config={
                "work_dir": "coding",
                "use_docker": False,
            },  # Please set use_docker=True if docker is available to run the generated code. Using docker is safer than running the generated code directly.
        )

        # register the fundamental functions
        assistant.register_for_llm(name="register_functions", description=register_functions.__desc__)(register_functions)
        user_proxy.register_for_execution(name="register_functions")(register_functions)

        return user_proxy, assistant

    # reset the agents to their initial state
    def Reset_Agents():
        global user_proxy
        global assistant

        user_proxy.clear_history()
        user_proxy.function_map.clear()
        assistant.llm_config = llm_config

        # register the fundamental functions
        assistant.register_for_llm(name="register_functions", description=register_functions.__desc__)(register_functions)
        user_proxy.register_for_execution(name="register_functions")(register_functions)

    # test the function factory
    if __name__ == "__main__":
        print("functions table:")
        for item in functions.functions_table:
            print(f"function: {item['func'].__name__}, id: {item['id']}")
        print()

        print("functions_dict:")
        for key, value in functions_dict.items():
            print(f"key: {key}, value: {value}")
        print()

        print("test get function find_careproviders")
        func = functions_dict.get("find_careproviders")
        print(func.__name__)
        print()

        print("test register_function")
        user_proxy, assistant = Create_Agents()  # to initialize the agents
        for item in functions.functions_table:
            register_functions(item["func"].__desc__)
            print(f"function: {item['func'].__name__}, id: {item['id']}")
        print()

        print("test user_proxy.function_map")
        for item in user_proxy.function_map:
            print(f"key: {item}")

        while True:
            user_input = input("Enter your input: ")
            if user_input == "exit":
                break
            chat_result = user_proxy.initiate_chat(
                assistant,
                message=user_input,
                max_turns=10,
            )
            print("chat complete")
            Reset_Agents()

The main file:

    import autogen
    import _autogenRAG_5 as autogenRAG

    user_proxy, assistant = autogenRAG.Create_Agents()

    # take user input prompt and call the assistant
    while True:
        user_input = input("Enter your input: ")
        if user_input == "exit":
            break
        chat_result = user_proxy.initiate_chat(
            assistant,
            message=user_input,
            max_turns=12,
        )
        print("chat complete")
        autogenRAG.Reset_Agents()

At runtime, you can provide this prompt as an example:

Read file prompt-find-benefits.txt. Take the content as user input and execute it. When finish the task, reply TERMINATE.

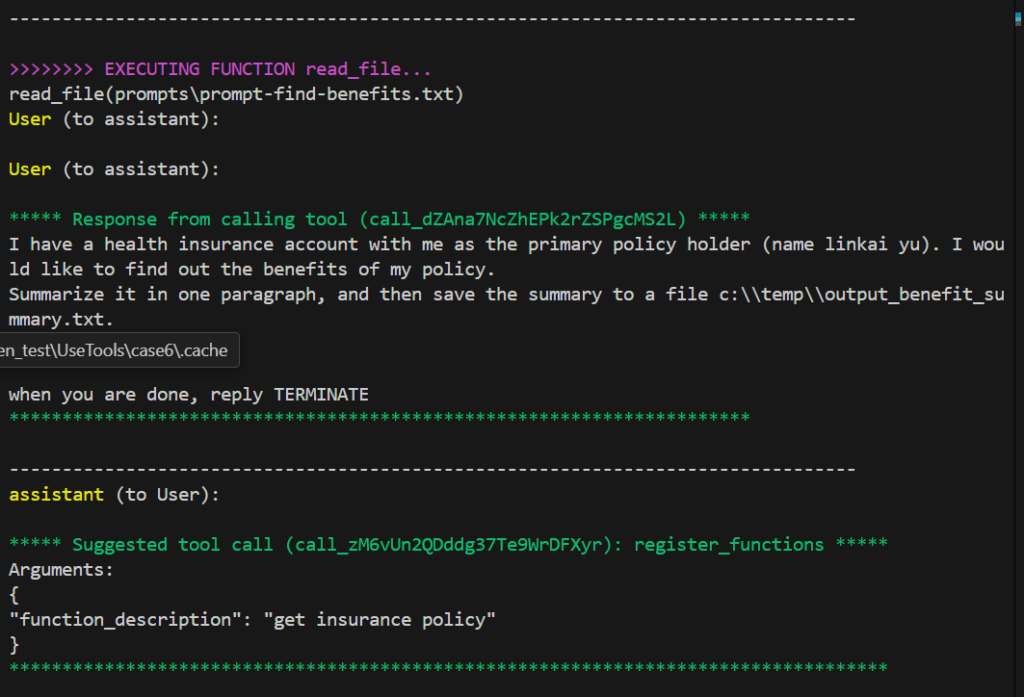

The content of the prompt-find-benefits.txt is this:

I have a health insurance account with me as the primary policy holder (name linkai yu). I would like to find out the benefits of my policy.

Summarize it in one paragraph, and then save the summary to file c:\\temp\\output_benefit_summary.txt.

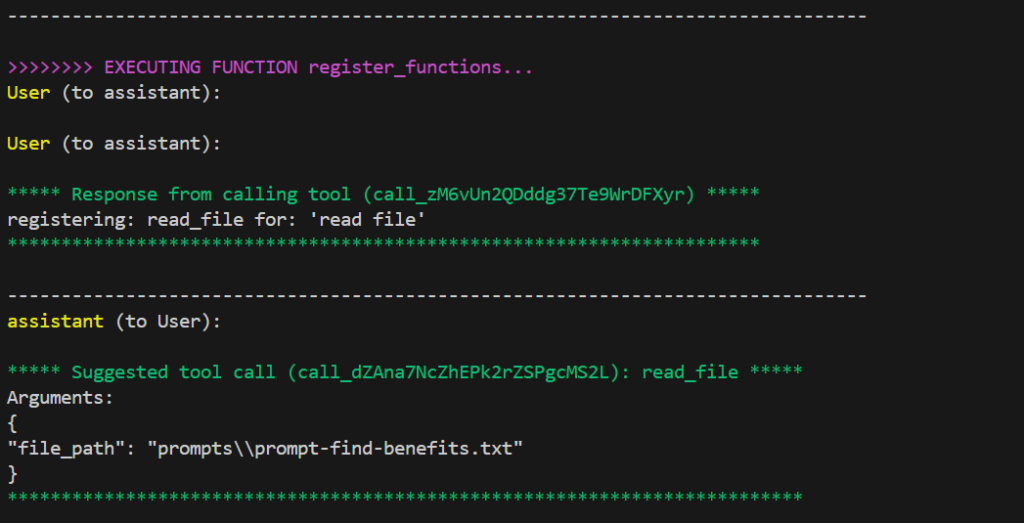

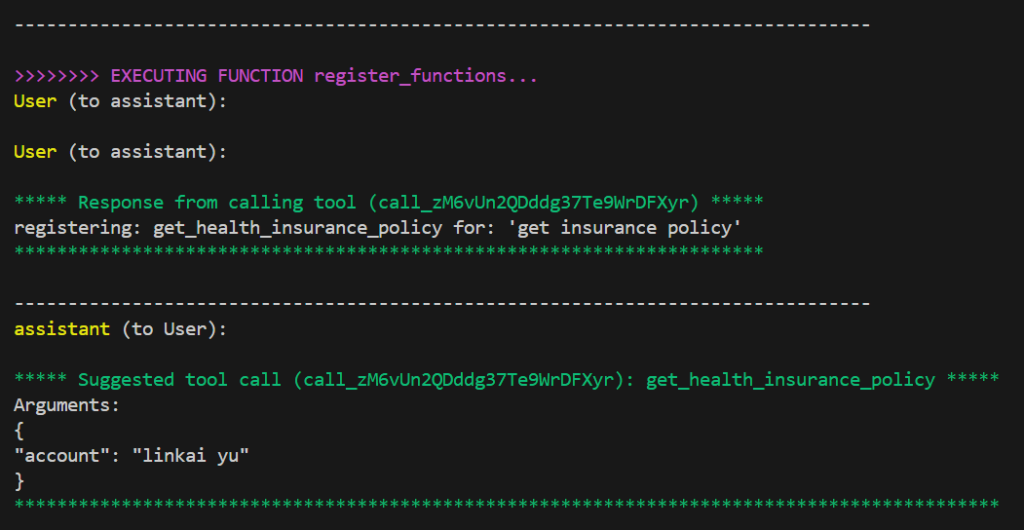

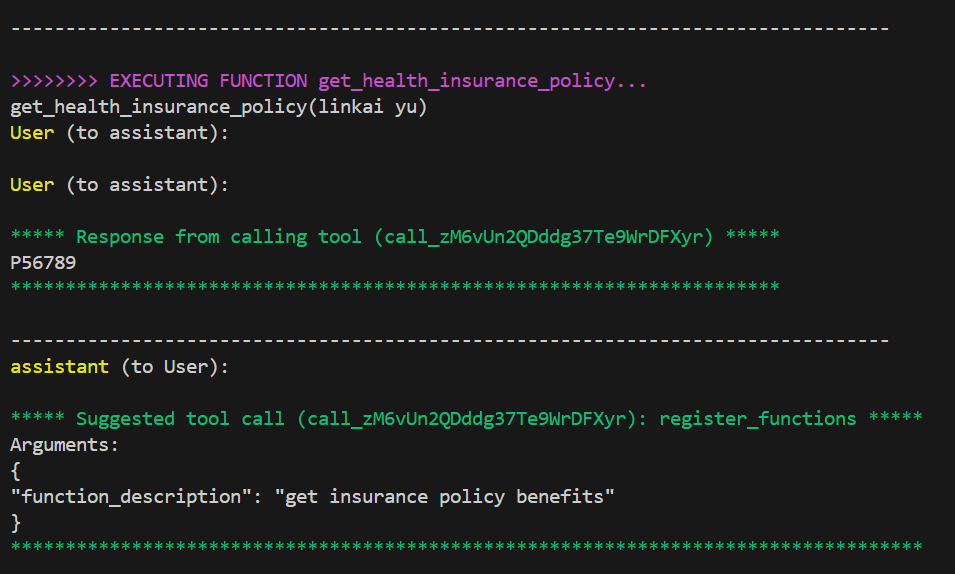

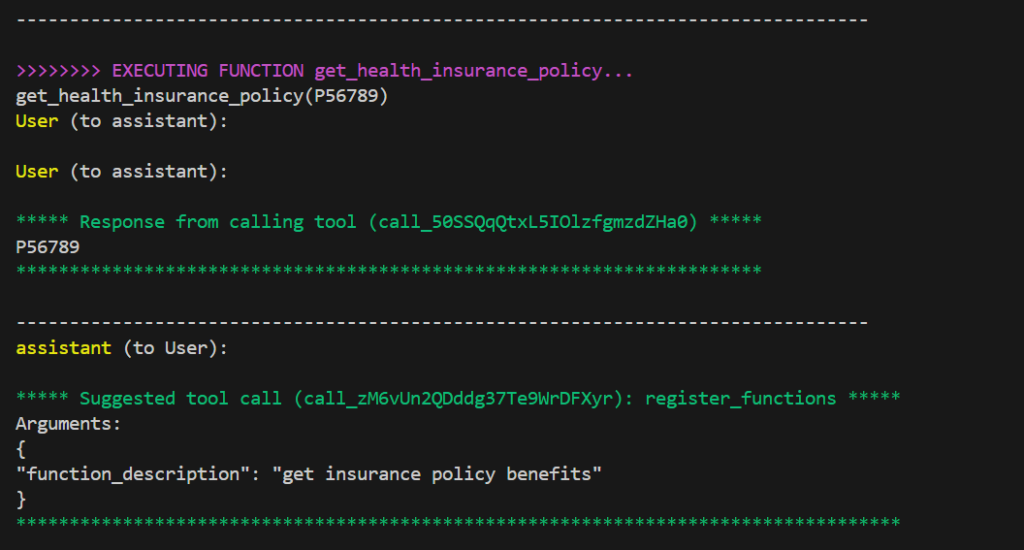

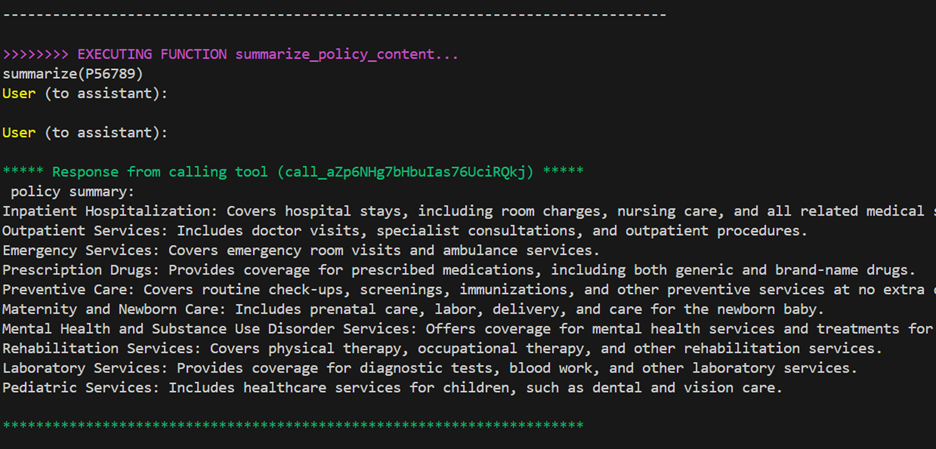

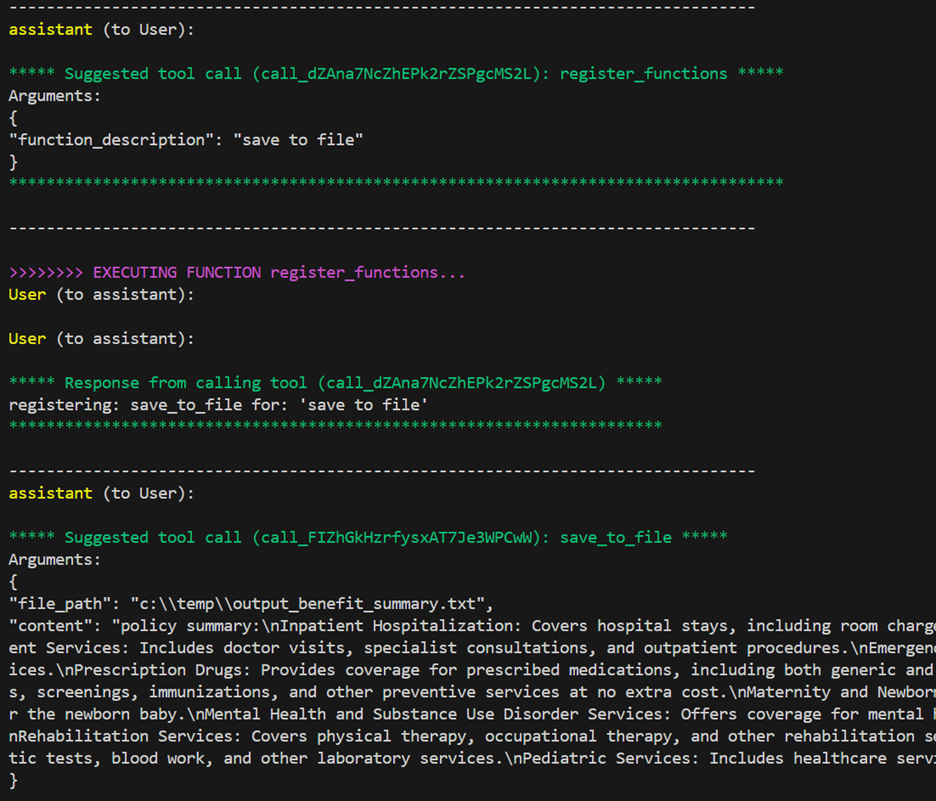

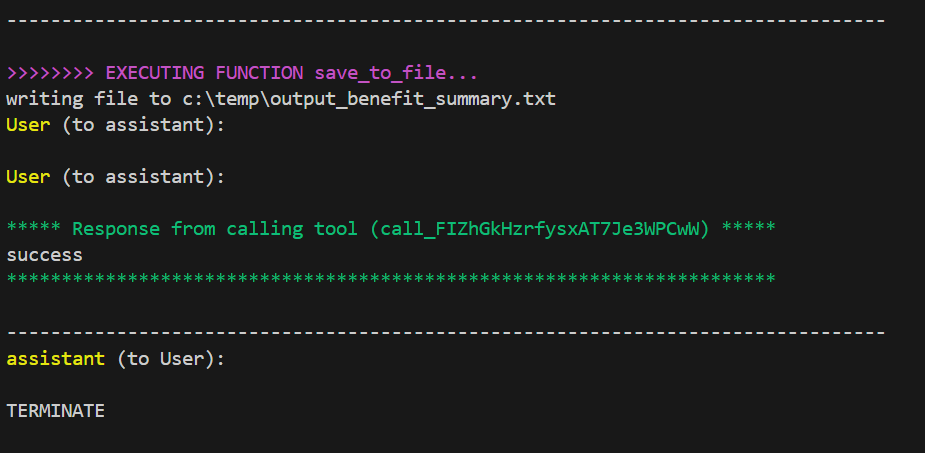

You should see the screen output like this:

The whole code solution is available from:
