If you’re familiar with C#, then you most likely heard that you should always override Equals and GetHashCode for custom structs for performance reasons. To better understand the importance and the rationale behind this advice we’re going to look at the default behavior to see why and where the performance hit comes from. Then we’ll look at a performance bug that occurred in my project and at the end we’ll discuss some tools that can help to avoid the issue altogether.

How important the issue is?

Not every potential performance issue affects an end-to-end time of your application. Enum.HasFlag is not very efficient (*), but unless it is used on a very hot path, it would not cause a severe issue for your product. The same is true for defensive copies caused by non-readonly structs in the readonly contexts. The issues are real but they’re unlikely to be visible in regular applications.

(*) The implementation was fixed in .NET Core 2.1 and as I’ve mentioned in the previous post, now you can mitigate the issue with a custom HasFlag implementation for the older runtimes.

But the issue we’re talking about today is different. If a struct does not provide Equalsand GetHashCode, then the default versions of these methods from System.ValueType are used. The versions that could easily affect an application’s end-to-end performance in a very significant way.

Why the default implementations are so slow?

The CLR authors tried their best to make the default implementations of Equals and GetHashCode for value types as efficient as possible. But there are a couple of reasons why they won’t be as efficient as a custom version written by hand (or generated by a compiler) for a specific type.

- Boxing allocation. The way the CLR is designed, every call to a member defined in

System.ValueTypeorSystem.Enumtypes cause a boxing allocation (**).

(**) Unless the method is a JIT intrinsic. For instance, in Core CLR 2.1 the JIT compiler knows about Enum.HasFlag and emits a very optimal code that causes no boxing allocations.

- Potential collisions of the default

GetHashCodeimplementation. An implementer of a hash function faces a dilemma: make a good distribution of the hash function or to make it fast. In some cases, it’s possible to achieve them both, but it is hard to do this generically inValueType.GetHashCode.

The canonical hash function of a struct “combines” hash codes of all the fields. But the only way to get a hash code of a field in a ValueType method is to use reflection. So, the CLR authors decided to trade speed over the distribution and the default GetHashCode version just returns a hash code of a first non-null field and “munges” it with a type id (***) (for more details see RegularGetValueTypeHashCode in coreclr repo at github).

(***) Based on the comment in the CoreCLR repo, this behavior may change in the future.

public readonly struct Location

{

public string Path { get; }

public int Position { get; }

public Location(string path, int position) => (Path, Position) = (path, position);

}

var hash1 = new Location(path: "", position: 42).GetHashCode();

var hash2 = new Location(path: "", position: 1).GetHashCode();

var hash3 = new Location(path: "1", position: 42).GetHashCode();

// hash1 and hash2 are the same and hash1 is different from hash3

This is a reasonable behavior unless it’s not. For instance, if you’re unlucky enough and the first field of your struct has the same value for most instances, then a hash function will provide the same result all the time. And, as you may imagine, this will cause a drastic performance impact if these instances are stored in a hash set or a hash table.

- Reflection-based implementation is slow. Very slow. Reflection is a powerful tool when used correctly. But it is horrible if it’s used on an application’s hot path.

Let’s see how a poor hash function that you can get because of (2) and the reflection-based implementation affects the performance:

public readonly struct Location1

{

public string Path { get; }

public int Position { get; }

public Location1(string path, int position) => (Path, Position) = (path, position);

}

public readonly struct Location2

{

// The order matters!

// The default GetHashCode version will get a hashcode of the first field

public int Position { get; }

public string Path { get; }

public Location2(string path, int position) => (Path, Position) = (path, position);

}

public readonly struct Location3 : IEquatable<Location3>

{

public string Path { get; }

public int Position { get; }

public Location3(string path, int position) => (Path, Position) = (path, position);

public override int GetHashCode() => (Path, Position).GetHashCode();

public override bool Equals(object other) => other is Location3 l && Equals(l);

public bool Equals(Location3 other) => Path == other.Path && Position == other.Position;

}

private HashSet<Location1> _locations1;

private HashSet<Location2> _locations2;

private HashSet<Location3> _locations3;

[Params(1, 10, 1000)]

public int NumberOfElements { get; set; }

[GlobalSetup]

public void Init()

{

_locations1 = new HashSet<Location1>(Enumerable.Range(1, NumberOfElements).Select(n => new Location1("", n)));

_locations2 = new HashSet<Location2>(Enumerable.Range(1, NumberOfElements).Select(n => new Location2("", n)));

_locations3 = new HashSet<Location3>(Enumerable.Range(1, NumberOfElements).Select(n => new Location3("", n)));

_locations4 = new HashSet<Location4>(Enumerable.Range(1, NumberOfElements).Select(n => new Location4("", n)));

}

[Benchmark]

public bool Path_Position_DefaultEquality()

{

var first = new Location1("", 0);

return _locations1.Contains(first);

}

[Benchmark]

public bool Position_Path_DefaultEquality()

{

var first = new Location2("", 0);

return _locations2.Contains(first);

}

[Benchmark]

public bool Path_Position_OverridenEquality()

{

var first = new Location3("", 0);

return _locations3.Contains(first);

}

Method | NumOfElements | Mean | Gen 0 | Allocated | -------------------------------- |-------------- |--------------:|--------:|----------:| Path_Position_DefaultEquality | 1 | 885.63 ns | 0.0286 | 92 B | Position_Path_DefaultEquality | 1 | 127.80 ns | 0.0050 | 16 B | Path_Position_OverridenEquality | 1 | 47.99 ns | - | 0 B | Path_Position_DefaultEquality | 10 | 6,214.02 ns | 0.2441 | 776 B | Position_Path_DefaultEquality | 10 | 130.04 ns | 0.0050 | 16 B | Path_Position_OverridenEquality | 10 | 47.67 ns | - | 0 B | Path_Position_DefaultEquality | 1000 | 589,014.52 ns | 23.4375 | 76025 B | Position_Path_DefaultEquality | 1000 | 133.74 ns | 0.0050 | 16 B | Path_Position_OverridenEquality | 1000 | 48.51 ns | - | 0 B |

If the first field is always the same, the default hash function returns the same value for all the elements. This effectively transforms a hash set into a linked list with O(N) for insertion and lookup operations. And the operation that populates the collection becomes O(N^2) (N insertions with O(N) complexity per insertion). It means that the insertion of 1000 elements into a set will cause almost 500_000 calls to ValueType.Equals. A method, that uses reflection under the hood!

And as you can see from the benchmark, the performance may be tolerable if you’re lucky and the first element of the struct is unique (Position_Path_DefaultEqualitycase). But the performance may be horrible if this is not the case.

Real-world issue

Now you can guess what kind of issue I’ve faced recently. A couple of weeks ago, I received a bug report that the end-to-end time for an app I’m working on increased from 10 seconds to 60. Luckily the report was very detailed and contained an ETW trace that immediately showed the bottleneck: ValueType.Equals was taking 50 seconds.

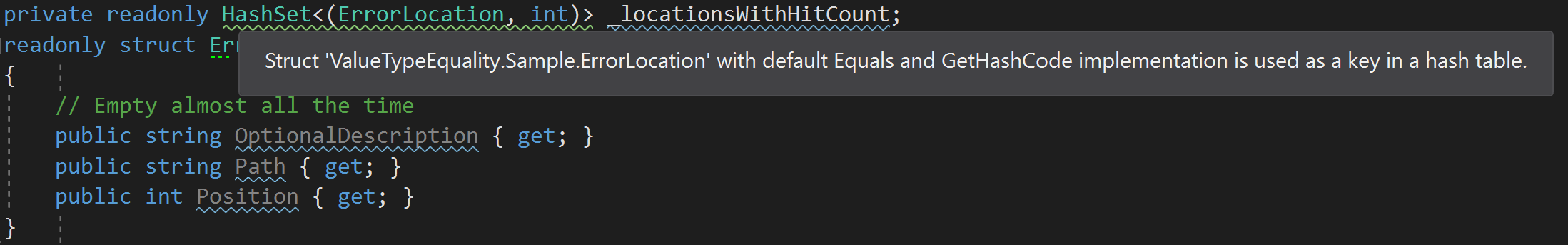

After a very quick look at the code it was clear what was the problem:

private readonly HashSet<(ErrorLocation, int)> _locationsWithHitCount;

readonly struct ErrorLocation

{

// Empty almost all the time

public string OptionalDescription { get; }

public string Path { get; }

public int Position { get; }

}

We used a tuple that contained a custom struct with default equality implementation. And unfortunately, the struct had an optional first field that was almost always equals to string.Equals. The performance was OK until the number of elements in the set increased significantly causing a real performance issue, taking minutes to initialize a collection with tens of thousands of items.

Is the default implementation of ValueType.Equals/GetHashCode always slow?

Both ValueType.Equals and ValueType.GetHashCode have a special optimization. If a type does not have “pointers” and is properly packed (I’ll show an example in a minute) then more optimal versions are used: GethashCode iterates over an instance and XORs blocks of 4 bytes and Equals method compares two instances using memcmp.

// Optimized ValueType.GetHashCode implementation

static INT32 FastGetValueTypeHashCodeHelper(MethodTable *mt, void *pObjRef)

{

INT32 hashCode = 0;

INT32 *pObj = (INT32*)pObjRef;

// this is a struct with no refs and no "strange" offsets, just go through the obj and xor the bits

INT32 size = mt->GetNumInstanceFieldBytes();

for (INT32 i = 0; i < (INT32)(size / sizeof(INT32)); i++)

hashCode ^= *pObj++;

return hashCode;

}

// Optimized ValueType.Equals implementation

FCIMPL2(FC_BOOL_RET, ValueTypeHelper::FastEqualsCheck, Object* obj1, Object* obj2)

{

TypeHandle pTh = obj1->GetTypeHandle();

FC_RETURN_BOOL(memcmp(obj1->GetData(), obj2->GetData(), pTh.GetSize()) == 0);

}

The check itself is implemented in ValueTypeHelper::CanCompareBits and it is called from both ValueType.Equals and from ValueType.GetHashCodeimplementations.

But the optimization is very tricky.

First, it is hard to know when the optimization is enabled and even minor changes in the code can turn it “on” and “off”:

public struct Case1

{

// Optimization is "on", because the struct is properly "packed"

public int X { get; }

public byte Y { get; }

}

public struct Case2

{

// Optimization is "off", because struct has a padding between byte and int

public byte Y { get; }

public int X { get; }

}

For more information about memory layout see my blogpost “Managed object internals, Part 4. Fields layout”.

Second, a memory comparison will not necessarily give you the right results. Here is a simple example:

public struct MyDouble

{

public double Value { get; }

public MyDouble(double value) => Value = value;

}

double d1 = -0.0;

double d2 = +0.0;

// True

bool b1 = d1.Equals(d2);

// False!

bool b2 = new MyDouble(d1).Equals(new MyDouble(d2));

-0.0 and +0.0 are equal but have different binary representations. This means that Double.Equals returns true, but MyDouble.Equals returns false. For most cases the difference is non-substantial, but just imagine how many hours you may potentially spend trying to fix an issue caused by this difference.

And how can we avoid the issue in the future?

You may wonder, how the issue mentioned above may appear in the real world? One obvious way to enforce Equals and GetHashCode for structs is to use FxCop rule CA1815. But there is an issue with this approach: it is a bit too strict.

A performance critical application may have hundreds of structs that are not necessarily designed to be used in hash sets or dictionaries. This may cause app developers to suppress the rule that can backfire ones a struct use cases changes.

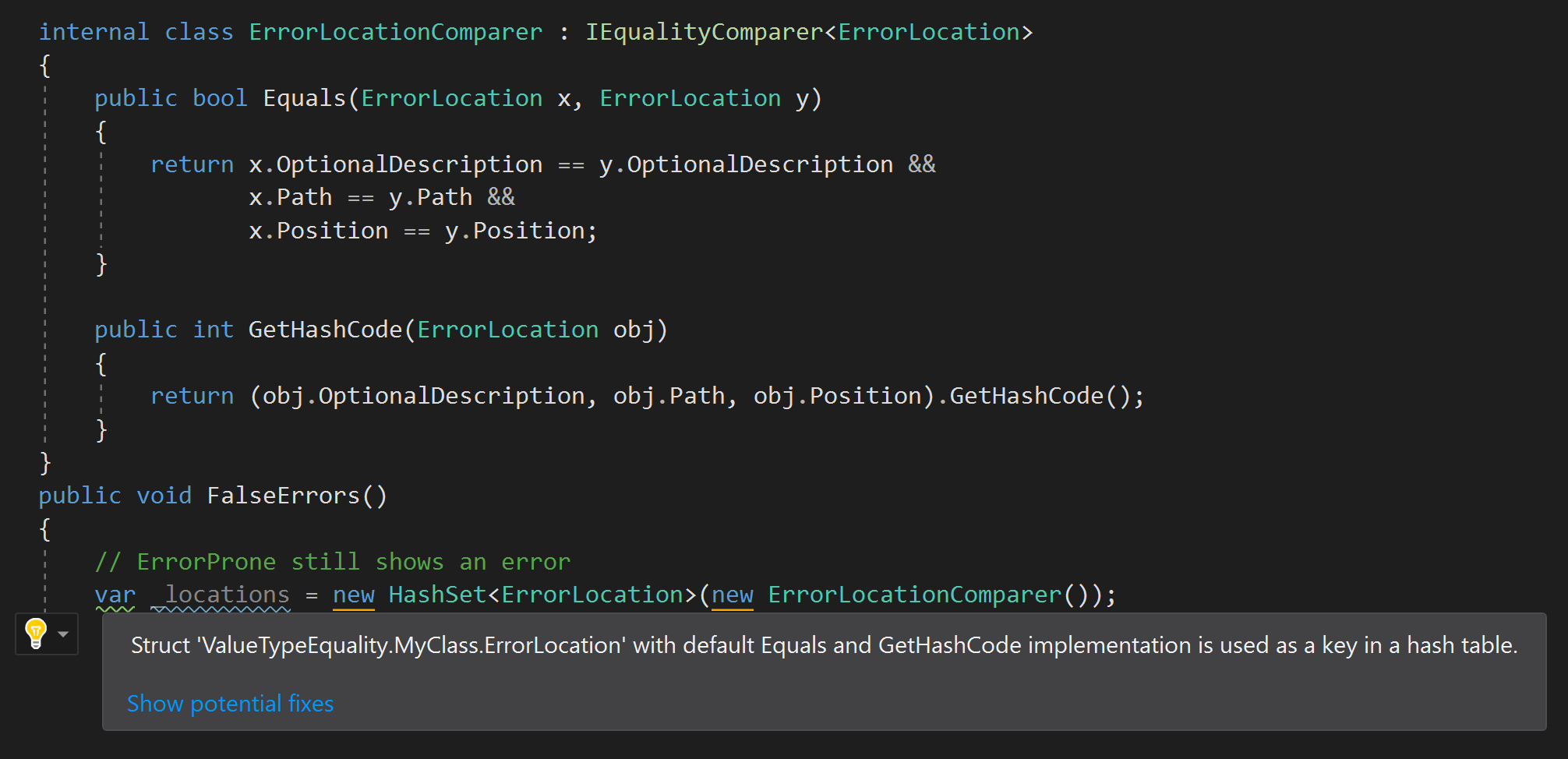

A more appropriate approach is to warn a developer when “inappropriate” struct with default equality members (defined in the app or in a third-party library) is stored in a hash set. Of course, I’m talking about ErrorProne.NET and the rule that I’ve added there ones I’ve faced this issue:

The ErrorProne.NET version is not perfect and will “blame” a valid code if a custom equality comparer is provided in a constructor:

But I think it still valid to warn when a struct with default equality members is used by not when it is produced. For instance, when I tested my rule I’ve realized that System.Collections.Generic.KeyValuePair<TKey, TValue> defined in mscorlib, does not override Equals and GetHashCode. Today it is unlikely that someone will define a variable of type HashSet<KeyValuePair<string, int>> but my point is that even BCL can violate the rule and it is useful to catch it until its not too late.

Conclusion

- The default equality implementation for structs may easily cause a severe performance impact for your application. The issue is real, not a theoretical one.

- The default equliaty members for value types are reflection-based.

- The default

GetHashCodeimplementation may provide a very poor distribution if a first field of many instances is the same. - There is an optimized default version for

EqualsandGetHashCodebut you should never rely on it because you may stop hitting it with an innocent code change. - You may rely on FxCop rule to make sure that every struct overrides equality members, but a better approach is to catch the issue when the “wrong” struct is stored in a hash set or in a hash table using an analyzer.

Hey Sergey,

Thanks for a comprehensive explanation, really enjoyed reading this nice article!

BTW, there is a typo in the text: “And unfortunately, the struct had an optional first field that was almost always equals to string.Equals” – I assume you wanted to mention string.Empty, not string.Equals