DeepSeek-R1 has been announced on GitHub Models as well as on Azure AI Foundry, and the goal of this blog post is to demonstrate how to use it with LangChain4j and Java.

We concentrate here on GitHub Models because they are easier to use (you just need a GitHub token, no Azure subscription required), but Azure AI Foundry uses the same model and infrastructure.

Demo project

The demo project is fully Open Source and available on GitHub at https://github.com/Azure-Samples/DeepSeek-on-Azure-with-LangChain4j.

It contains the following examples:

- Text Generation: Learn how to generate structured text using DeepSeek-R1.

- Reasoning Test: Evaluate the model’s ability to perform logical reasoning.

- Conversational Memory: Utilize a chat interface that retains contextual memory.
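To illustrate what "retaining contextual memory" means in practice, here is a minimal plain-Java sketch of a sliding-window memory that keeps only the most recent messages and would be resent to the model on each turn. The class `WindowedChatMemory` and its methods are hypothetical names made up for this illustration. This is not the demo project's code, which relies on LangChain4j for this.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

// Hypothetical illustration: keeps the last N messages of a conversation.
class WindowedChatMemory {

    private final int maxMessages;
    private final Deque<String> messages = new ArrayDeque<>();

    WindowedChatMemory(int maxMessages) {
        if (maxMessages < 1) {
            throw new IllegalArgumentException("maxMessages must be at least 1");
        }
        this.maxMessages = maxMessages;
    }

    // Appends a message and evicts the oldest ones once the window is full.
    void add(String role, String text) {
        messages.addLast(role + ": " + text);
        while (messages.size() > maxMessages) {
            messages.removeFirst();
        }
    }

    // Returns an immutable snapshot, oldest message first.
    List<String> history() {
        return List.copyOf(messages);
    }

    public static void main(String[] args) {
        WindowedChatMemory memory = new WindowedChatMemory(3);
        memory.add("user", "My name is Ada.");
        memory.add("assistant", "Nice to meet you, Ada.");
        memory.add("user", "What is my name?");
        memory.add("assistant", "Your name is Ada.");
        // Only the three most recent messages remain in the window.
        memory.history().forEach(System.out::println);
    }
}
```

The trade-off of a fixed window is that facts stated early in a long conversation eventually fall out of the context sent to the model.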

Please note that despite being called a Chat Model, DeepSeek-R1 is better at reasoning than at holding a real conversation, so the most interesting demo is the reasoning test.

These demos can be executed either locally (using Ollama) or in the cloud (using GitHub Models).

Configuration

The project supports two Spring Boot profiles: one for local execution and another for running with GitHub Models.

Option 1: Running DeepSeek-R1 Locally

This configuration uses Ollama with DeepSeek-R1:14b, a light model that can run on a local machine.

We’re using the 14b version of DeepSeek-R1 for this demo: it normally provides a good balance between performance and quality. If you have fewer resources, you can try the 7b version, which is lighter, but the reasoning test will usually fail. With GitHub Models (see Option 2 below), you’ll have the full 671b model, which can handle much more complex reasoning.

To enable this setup, use the local Spring Boot profile. Set it in src/main/resources/application.properties:

spring.profiles.active=local

Running DeepSeek-R1 Locally with Ollama

To run DeepSeek-R1:14b on your local machine, install Ollama.

Start Ollama with:

ollama serve

Download DeepSeek-R1 from Ollama:

ollama pull deepseek-r1:14b
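Before starting the Spring Boot application, it can help to check that Ollama actually answers with the pulled model. The sketch below is one way to do that with the JDK's built-in HTTP client. It assumes Ollama listens on its default local address (`http://localhost:11434`) and uses Ollama's `/api/generate` endpoint. The class name `OllamaSmokeTest` and the prompt are hypothetical, and this is not how the demo project talks to the model (it goes through LangChain4j).

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical smoke test: sends one prompt to a local Ollama instance.
class OllamaSmokeTest {

    // Assumption: Ollama runs on its default host and port.
    static final String GENERATE_URL = "http://localhost:11434/api/generate";

    // Minimal JSON string escaping for backslashes, quotes and newlines.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // Builds a non-streaming request body for the generate endpoint.
    static String requestBody(String model, String prompt) {
        return "{\"model\":\"" + escape(model) + "\",\"prompt\":\""
                + escape(prompt) + "\",\"stream\":false}";
    }

    public static void main(String[] args) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(GENERATE_URL))
                .timeout(Duration.ofMinutes(5)) // local reasoning models can be slow
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(
                        requestBody("deepseek-r1:14b", "Say hello in one sentence.")))
                .build();
        try {
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode() + " " + response.body());
        } catch (Exception e) {
            // Typically means "ollama serve" is not running.
            System.out.println("Could not reach Ollama: " + e);
        }
    }
}
```

If the call fails with a connection error, check that `ollama serve` is still running in another terminal.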

Option 2: Running in the Cloud with GitHub Models

This configuration uses GitHub Models with DeepSeek-R1:671b, the most advanced model, which needs substantial GPU and memory resources.

To enable this setup, use the github Spring Boot profile. Set it in src/main/resources/application.properties:

spring.profiles.active=github

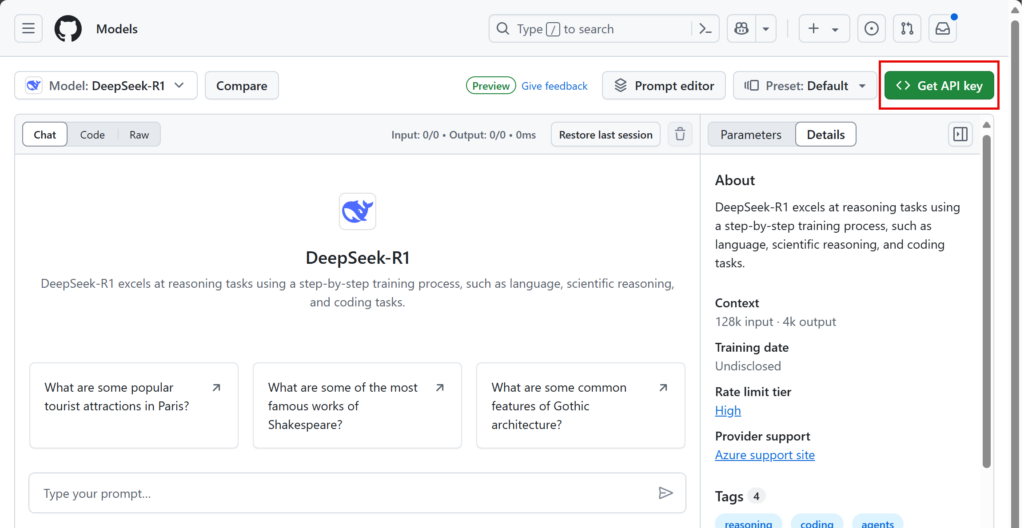

Configuring GitHub Models

Visit GitHub Marketplace and follow the documentation to create a GitHub token.

Set up an environment variable with your GitHub token:

export GITHUB_TOKEN=<your_token>

This token is required to access GitHub-hosted models.
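A missing or empty token is an easy mistake to make, for example when the variable was exported in a different shell. As a sketch, a small check like the following can confirm that the Java process sees `GITHUB_TOKEN`. The class `GitHubTokenCheck` is a hypothetical helper for illustration and is not part of the demo project.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical helper: verifies that GITHUB_TOKEN is visible to the JVM.
class GitHubTokenCheck {

    // Returns the trimmed token, or empty if the variable is missing or blank.
    static Optional<String> readToken(Map<String, String> env) {
        String value = env.get("GITHUB_TOKEN");
        if (value == null || value.isBlank()) {
            return Optional.empty();
        }
        return Optional.of(value.trim());
    }

    public static void main(String[] args) {
        Optional<String> token = readToken(System.getenv());
        if (token.isPresent()) {
            // Print only the length so the secret never ends up in logs.
            System.out.println("GITHUB_TOKEN is set (" + token.get().length() + " characters)");
        } else {
            System.out.println("GITHUB_TOKEN is missing: export it before using the github profile");
        }
    }
}
```

Passing the environment in as a `Map` keeps the check easy to test without touching the real environment.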

Running the Demos

Once the resources (local or GitHub Models) are configured, start the application:

./mvnw spring-boot:run

Access the web UI at:

The demos are available in the top menu for easy navigation.

Don’t forget to try demo 2, the reasoning test.

Great marks for timeliness. But I have a question. I ran local with 14b. Consider the method `getAnswerWithSystemMessage`. Why does the answer come back in English when the system message requests French? I asked GitHub Copilot and it had this answer:

```

The answer likely comes back in English despite the system message requesting French because the chat model (`chatLanguageModel`) may not be configured to handle or prioritize language instructions from system messages correctly. You may need to verify if the `ChatLanguageModel` implementation used supports language instructions and if additional configuration is required to ensure it generates responses in the requested...