In the dynamic world of AI and data science, developing production-level solutions for corporate environments comes with its own set of challenges and lessons. As a data science team working within Microsoft, we recently completed an engagement for a large company where we leveraged cutting-edge technologies: OpenAI tools such as GPT-4o for generating syntactic datasets and embedding models like text_embedding_3, as well as Azure AI Search for implementing both text and hybrid search solutions. Here are 10 key lessons we learned along the way.

Clarifying Key Terms:

- Generating Syntactic Datasets: This process involves creating datasets with variations in language structure, such as common misspellings and different phrasing, to help models handle a range of real-world inputs.

- Embeddings: These are vector representations of text that capture the meaning and context of words or phrases, allowing our model to understand semantic relationships, not just keywords.

- Hybrid Search: A search method that combines traditional keyword search with semantic search (using embeddings) to improve accuracy, especially with complex queries.

1. Start with Clear Business Objectives

The first step in any data science project is aligning your goals with the business objectives. In our case, it was important to understand what the company wanted to achieve with the AI-powered search. Was it improving accuracy, better handling of natural language queries, or providing faster results? Setting clear, measurable objectives early on helped us define the direction and prioritize the features.

2. Synthetic Data Generation with GPT-4o: A Game Changer

Creating synthetic datasets using GPT-4o proved invaluable, especially when dealing with domain-specific language and scenarios. We used GPT-4o to generate misspelled and syntactically varied queries, ensuring our search models could handle real-world user inputs. The synthetic datasets enhanced the robustness of our evaluation pipeline by simulating a variety of search scenarios. It is worth mentioning that GPT-4o performed significantly better than GPT-3.5 at this task.
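
As a rough illustration of the idea, the sketch below builds a prompt that asks for misspelled and rephrased variants of a query, then cleans up the reply. The prompt wording, the JSON-array reply format, and the helper names (`build_variation_prompt`, `parse_variants`, `generate_variants`) are assumptions made for this example, not the prompts or code used in the engagement. The API call itself requires the `openai` package and credentials, so it is kept in a separate function.

```python
import json


def build_variation_prompt(query: str, n_variants: int = 5) -> list[dict]:
    """Build chat messages asking for misspelled and rephrased variants of a query."""
    system = (
        "You generate test queries for a search engine. "
        "Return a JSON array of strings and nothing else."
    )
    user = (
        f"Write {n_variants} variations of the search query below. "
        "Include common misspellings and different phrasings, "
        "but keep the original intent.\n\n"
        f"Query: {query}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def parse_variants(raw: str, original: str) -> list[str]:
    """Parse the model reply, dropping blanks, duplicates, and the original query."""
    try:
        items = json.loads(raw)
    except json.JSONDecodeError:
        return []
    if not isinstance(items, list):
        return []
    seen = {original.strip().lower()}
    variants = []
    for item in items:
        if not isinstance(item, str):
            continue
        text = item.strip()
        if text and text.lower() not in seen:
            seen.add(text.lower())
            variants.append(text)
    return variants


def generate_variants(query: str, n_variants: int = 5, model: str = "gpt-4o") -> list[str]:
    """Call the chat completions API (needs the `openai` package and an API key)."""
    from openai import OpenAI  # imported lazily so the helpers above work offline

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=build_variation_prompt(query, n_variants),
    )
    return parse_variants(response.choices[0].message.content or "", query)
```

Keeping the parsing step separate from the API call makes it easy to unit-test the cleanup logic and to review generated queries before they enter an evaluation set.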

3. Leveraging Embedding Models for Deep Understanding

We incorporated OpenAI’s text_embedding_3 to improve the semantic understanding of queries. This model allowed us to capture the nuances of language and return more relevant results, even when the queries were not an exact match. Embedding models are powerful for moving beyond keyword-based search to truly understanding intent.
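
To make the idea of semantic matching concrete, here is a minimal sketch of ranking documents by cosine similarity between embedding vectors. The three-dimensional vectors and document names are made up for illustration; real embeddings have many more dimensions and would come from the embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(
    query_vec: list[float], doc_vecs: dict[str, list[float]]
) -> list[tuple[str, float]]:
    """Return (doc_id, similarity) pairs, most similar first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for real embeddings (hypothetical values).
docs = {
    "vacation-policy": [0.9, 0.1, 0.0],
    "expense-report": [0.1, 0.9, 0.1],
    "vpn-setup": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.1]  # imagined embedding of "how many days off do I get"

ranking = rank_by_similarity(query, docs)
print(ranking[0][0])  # the document whose vector points in the most similar direction
```

The query shares no keywords with the document name it matches; the match comes entirely from the vectors pointing in similar directions.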

4. Hybrid Search for Superior Results

One of the key takeaways from our project was the superior performance of hybrid search, especially when dealing with misspellings, logic operators and syntactic variations in queries. Combining traditional text search with embedding-based search allowed us to capture both precise keyword matches and broader semantic similarities. The hybrid approach consistently outperformed text-only search in our evaluations.
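
One common way to merge a keyword ranking with an embedding-based ranking is Reciprocal Rank Fusion (RRF), which Azure AI Search documents as its approach for hybrid queries. The sketch below shows only the arithmetic, under the assumption of two already-ranked lists of document IDs; the service performs this step internally, and the document IDs and the constant `k=60` here are illustrative.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one list.

    Each document scores 1 / (k + rank) for every list it appears in
    (rank starts at 1), and documents are sorted by total score.
    Ties are broken alphabetically so the output is deterministic.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results for the same query from two retrieval methods.
keyword_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)  # documents found by both methods rise to the top
```

Because RRF works on ranks rather than raw scores, it does not need keyword scores and vector similarities to be on the same scale.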

5. Building an Evaluation Pipeline in Python

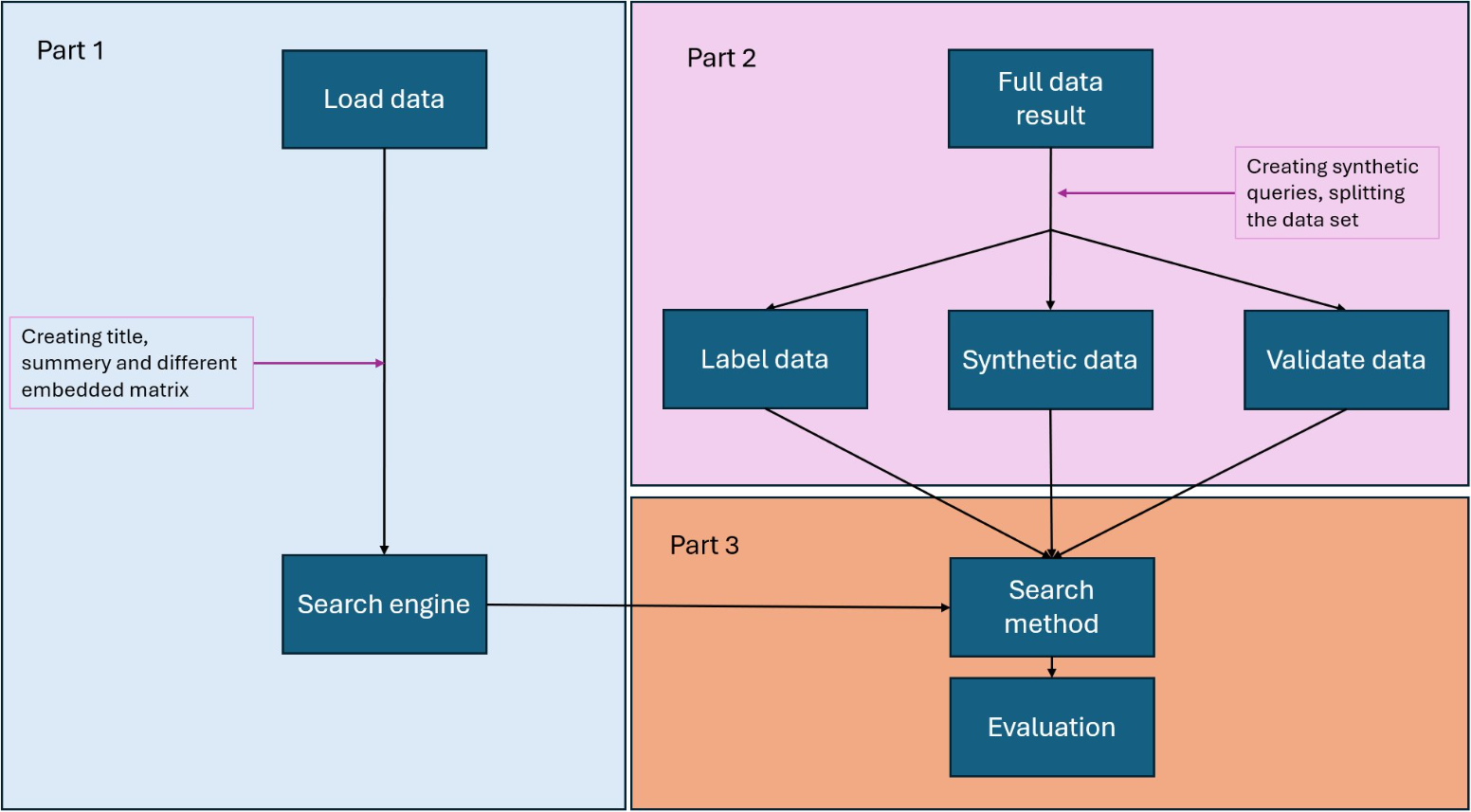

This flowchart provides a high-level view of the data processing and evaluation stages involved in our search solution.

Loading Data and Preprocessing

In this phase, we load raw data, including documents and metadata, which serve as the foundation for our search engine. Preprocessing is critical here: it involves creating titles, summaries, and various embedding matrices. These embeddings are derived from models like text_embedding_3, and they convert text into a form that the search engine can index and query.

Labeling, Synthesizing, and Validating Data:

This phase splits the data into three main categories: labeled data, synthetic data, and validation data.

- Labeled Data: This includes any data that has been manually tagged with the correct outputs, allowing us to benchmark the performance of our search engine.

- Synthetic Data: We used GPT-4o to generate synthetic queries, especially for testing misspellings and variations in syntax. This helped create more comprehensive datasets that mirrored real-world scenarios.

- Validation Data: This data is crucial for verifying that the search model generalizes well to unseen data. Validation ensures that our models aren’t overfitting to the training data but can handle diverse queries.

These three types of data come together to produce a full dataset, which is then passed to the search method.

Search Method and Evaluation

The search method, using both traditional and hybrid approaches, interacts with the search engine. Once we have the search results, the evaluation process begins. Here, we measure metrics such as recall (top K) and MRR (Mean Reciprocal Rank). The evaluation pipeline is automated, enabling us to quickly assess how different search methods perform on various datasets.

As seen in the diagram, the search method and evaluation work in tandem, allowing us to continuously refine the search model based on the results. By following this structured pipeline, we were able to systematically develop, test, and optimize the AI-powered search solution, ensuring it was production-ready for the corporate environment.
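
The shape of such an automated loop can be sketched as follows. Everything here is a stand-in: the two search functions, the tiny in-memory `INDEX`, the dataset names, and the simple hit-rate metric are hypothetical and only show how several search methods can be scored against several datasets in one pass.

```python
from typing import Callable, Dict, List

# A search function takes a query and top_k, and returns ranked document IDs.
SearchFn = Callable[[str, int], List[str]]

# Hypothetical in-memory "index" used by the stand-in search functions.
INDEX = {
    "doc_1": "password reset instructions",
    "doc_2": "vacation policy overview",
}


def exact_word_search(query: str, top_k: int) -> List[str]:
    """Stand-in for text search: match documents sharing at least one exact word."""
    words = set(query.lower().split())
    return [doc_id for doc_id, text in INDEX.items() if words & set(text.split())][:top_k]


def return_everything(query: str, top_k: int) -> List[str]:
    """Stand-in baseline: return the first top_k documents regardless of the query."""
    return list(INDEX)[:top_k]


def evaluate(
    search_methods: Dict[str, SearchFn],
    datasets: Dict[str, List[dict]],
    top_k: int = 5,
) -> List[dict]:
    """Run every search method on every dataset and report the hit rate."""
    rows = []
    for method_name, search in search_methods.items():
        for dataset_name, examples in datasets.items():
            hits = sum(
                1
                for example in examples
                if example["expected_id"] in search(example["query"], top_k)[:top_k]
            )
            rows.append(
                {
                    "method": method_name,
                    "dataset": dataset_name,
                    "queries": len(examples),
                    "hit_rate": hits / len(examples) if examples else 0.0,
                }
            )
    return rows


datasets = {
    "labeled": [{"query": "password reset", "expected_id": "doc_1"}],
    "synthetic": [{"query": "pasword resett", "expected_id": "doc_1"}],
}
report = evaluate({"text": exact_word_search, "baseline": return_everything}, datasets)
for row in report:
    print(row)
```

In this toy run the exact-word search finds the labeled query but misses the misspelled synthetic one, which is the kind of gap a per-dataset breakdown is meant to surface.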

6. The Importance of Robust Evaluation Metrics

Evaluation isn’t just about accuracy. We tracked two metrics to better understand the business impact and potential downsides of the search index: recall (top K) and MRR (Mean Reciprocal Rank).

- Recall (Top K): This metric evaluates how well our search model can retrieve relevant documents within the top K results. For instance, if a user expects the correct document to appear within the first five results, we calculate how often the model successfully retrieves it within that range. A high recall score indicates that our search method is effective at returning relevant results, even if they aren’t ranked first.

- MRR (Mean Reciprocal Rank): MRR focuses on the position of the first relevant result in the search output. It measures how quickly users find what they’re looking for. A high MRR score means that users are likely to find the most relevant information at the top of the search results, improving user satisfaction and efficiency.

These metrics gave us a more nuanced understanding of our search index’s performance. While recall provided insight into the comprehensiveness of our search index, MRR helped us assess how well we were ranking the most relevant results. We learned that optimizing both metrics was crucial for balancing business needs, as high recall without good ranking could overwhelm users with too many irrelevant results, while a high MRR without strong recall could mean missing out on valuable information further down the list.
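
Both metrics can be computed in a few lines. The sketch below uses the standard definitions: recall at K is the share of relevant documents that appear in the top K results, and the reciprocal rank is 1 divided by the position of the first relevant result (0 if none is found), averaged over all queries to get MRR. The result lists and relevant sets are invented for illustration.

```python
def recall_at_k(results: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top k results."""
    if not relevant:
        return 0.0
    return len(set(results[:k]) & relevant) / len(relevant)


def reciprocal_rank(results: list[str], relevant: set[str]) -> float:
    """1 / position of the first relevant result, or 0.0 if none is returned."""
    for rank, doc_id in enumerate(results, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def mean(values: list[float]) -> float:
    """Arithmetic mean, defined as 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


# Hypothetical (ranked results, relevant documents) pairs for three queries.
runs = [
    (["d3", "d1", "d7"], {"d1"}),  # relevant document at position 2
    (["d2", "d9", "d4"], {"d4"}),  # relevant document at position 3
    (["d5", "d6", "d8"], {"d0"}),  # relevant document not returned
]

recall_at_2 = mean([recall_at_k(results, relevant, 2) for results, relevant in runs])
recall_at_3 = mean([recall_at_k(results, relevant, 3) for results, relevant in runs])
mrr = mean([reciprocal_rank(results, relevant) for results, relevant in runs])

print(f"recall@2={recall_at_2:.3f} recall@3={recall_at_3:.3f} MRR={mrr:.3f}")
```

In this toy data, widening K from 2 to 3 raises recall because the second query's relevant document sits at position 3, while MRR stays unchanged because it depends only on where the first relevant result appears.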

This approach helped us communicate to stakeholders not only the strengths of our search engine but also the trade-offs we were managing. By focusing on these metrics, we could show them how the search solution aligned with their business objectives while being transparent about any limitations.

7. Latency Matters

Latency is often overlooked when building AI-powered search solutions, but it significantly impacts user experience. Even the most accurate search results can frustrate users if they take too long to load. We worked hard to balance the trade-off between high accuracy and low latency by optimizing our infrastructure and carefully tuning our search models. We also leveraged Azure AI Search’s scaling capabilities to ensure the solution was performant under heavy load. It’s important to note that hybrid search was significantly slower than text-based search, and this trade-off needed to be discussed with stakeholders.
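
A simple way to put numbers behind that discussion is to time each search method on the same queries and compare percentiles. The sketch below is a minimal example, assuming each search method can be wrapped as a function that takes a query string; `fast_search` and `slow_search` are hypothetical stand-ins, with the slow one simulating extra work through a short sleep.

```python
import statistics
import time
from typing import Callable, List


def measure_latency(search: Callable[[str], object], queries: List[str], repeats: int = 3) -> dict:
    """Time every query `repeats` times and summarize the latencies in milliseconds."""
    timings_ms = []
    for _ in range(repeats):
        for query in queries:
            start = time.perf_counter()
            search(query)
            timings_ms.append((time.perf_counter() - start) * 1000.0)
    if len(timings_ms) < 2:
        raise ValueError("need at least two timings to compute percentiles")
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(timings_ms, n=100, method="inclusive")
    return {
        "count": len(timings_ms),
        "p50_ms": statistics.median(timings_ms),
        "p95_ms": cuts[94],
        "max_ms": max(timings_ms),
    }


def fast_search(query: str) -> List[str]:
    """Stand-in for a quick text-only search."""
    return [query]


def slow_search(query: str) -> List[str]:
    """Stand-in for a slower search; the sleep simulates extra processing time."""
    time.sleep(0.002)
    return [query]


queries = ["password reset", "vacation policy", "vpn setup"]
fast_stats = measure_latency(fast_search, queries)
slow_stats = measure_latency(slow_search, queries)
print("fast:", fast_stats)
print("slow:", slow_stats)
```

Reporting a high percentile alongside the median helps show stakeholders the slow tail of requests, which averages tend to hide.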

8. Challenges of Creating Evaluation Sets with Stakeholders

One of the unexpected challenges was gathering a representative evaluation set. Engaging stakeholders to provide real-world queries and feedback was crucial, but it required clear communication. Many stakeholders didn’t initially understand the technical requirements, so we had to simplify the process and explain how their input would directly impact the system’s performance. Establishing a shared understanding with stakeholders was a key step in building trust and ensuring the evaluation set was realistic.

9. Communication is Key with Corporate Stakeholders

While it’s easy to get lost in the technical details, it’s essential to remember that corporate stakeholders are focused on measurable business impact. We prioritized non-technical business outcomes by aligning our technical goals with broader objectives, such as:

- User Satisfaction: By focusing on relevance, we ensured that users would find accurate and valuable information quickly, minimizing frustration and improving engagement.

- Efficiency Gains: We targeted faster query response times, which translated into time savings for users—an important metric for productivity-focused stakeholders.

- Data-Driven Insights for Business Decisions: By optimizing recall and MRR, we not only improved the search experience but also provided valuable insights that helped stakeholders make more informed decisions based on user search patterns.

By focusing on these specific business outcomes, we gained buy-in from stakeholders and demonstrated the practical effectiveness of our solution beyond technical metrics. This approach helped us show the real-world value of our AI search model, aligning it with the company’s broader strategic goals.

10. Iterate, Measure, and Improve

The final lesson is that building AI search for production is an iterative process. Continuous evaluation, stakeholder feedback, and iterative improvements were critical to our success. After launching the solution, we actively monitored its real-world performance and made necessary adjustments. AI solutions evolve as users interact with them, and it’s essential to have a framework for ongoing improvements.

In summary, building AI search solutions for corporate environments requires a combination of technical expertise, business alignment, and continuous iteration. By leveraging powerful tools like OpenAI’s models and Azure AI Search, along with strong communication, we were able to create a solution that met both the technical and business needs. These lessons underscore the importance of aligning technical innovations with business objectives, ensuring both technical success and measurable business outcomes.

Resources

The feature image was generated using Bing Image Creator. Terms can be found here.