This code story outlines a new end-to-end video tagging tool, built on top of the Microsoft Cognitive Toolkit (CNTK), that enables developers to more easily create, review, and iterate on their own object detection models.

Background

We recently worked with Insoundz, an Israeli startup that captures sound at live sports events using audio-tracking technology. In order to map this captured audio, they needed a way to identify areas of interest in live video feeds. For this purpose, we worked with them to build an object detection model with CNTK.

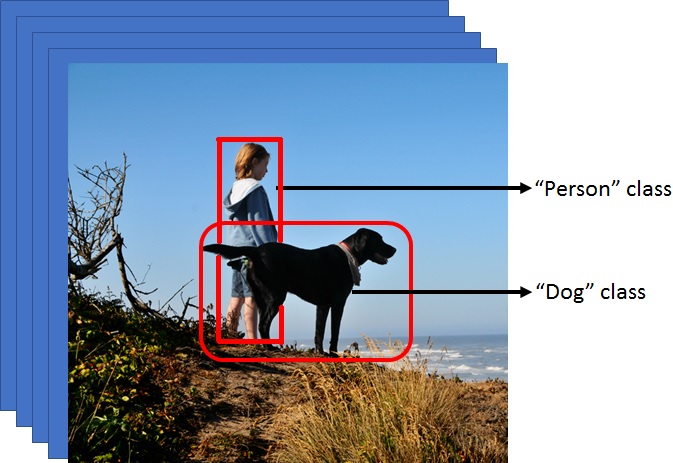

Object detection models require a large quantity of tagged image data to work in production. The training data for an object detection model consists of a set of images, where each image is associated with a group of bounding boxes surrounding the objects in the image, and each bounding box is assigned a label that describes the object.
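One way to picture a single training example is as an image plus a list of labeled boxes. The sketch below is a hypothetical JavaScript representation: the field names `imagePath`, `regions`, `x1`…`y2`, and `label` are illustrative assumptions, not taken from the tool. It also includes a small check that each box is well formed, lies inside the image, and carries a label.

```javascript
// Hypothetical shape of one tagged training image: an image plus labeled boxes.
// Coordinates are pixel corners: (x1, y1) top-left, (x2, y2) bottom-right.
const example = {
  imagePath: "frames/frame_0042.jpg", // illustrative path
  width: 1280,
  height: 720,
  regions: [
    { x1: 100, y1: 80, x2: 220, y2: 300, label: "player" },
    { x1: 640, y1: 400, x2: 700, y2: 460, label: "ball" },
  ],
};

// Returns a list of problems; an empty list means every box is usable for training.
function validateExample(ex) {
  const problems = [];
  ex.regions.forEach((r, i) => {
    if (!(r.x1 < r.x2 && r.y1 < r.y2)) problems.push(`region ${i}: empty or inverted box`);
    if (r.x1 < 0 || r.y1 < 0 || r.x2 > ex.width || r.y2 > ex.height) {
      problems.push(`region ${i}: box outside image bounds`);
    }
    if (typeof r.label !== "string" || r.label.length === 0) {
      problems.push(`region ${i}: missing label`);
    }
  });
  return problems;
}

console.log(validateExample(example)); // []
```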

Having quality training data is one of the most important aspects of the model-building process. However, in most existing object detection training pipelines, image datasets are compiled and expanded independently of training. As a result, models can only be optimized by fine-tuning hyperparameters and by hoping that requests for additional training data happen to cover the edge cases of an existing model.

Since Insoundz’s capture solution supports a wide set of event scenarios, each with unique areas of interest, they needed a scalable way to aggregate data as they train, validate, and iterate on new object detection models.

The Solution

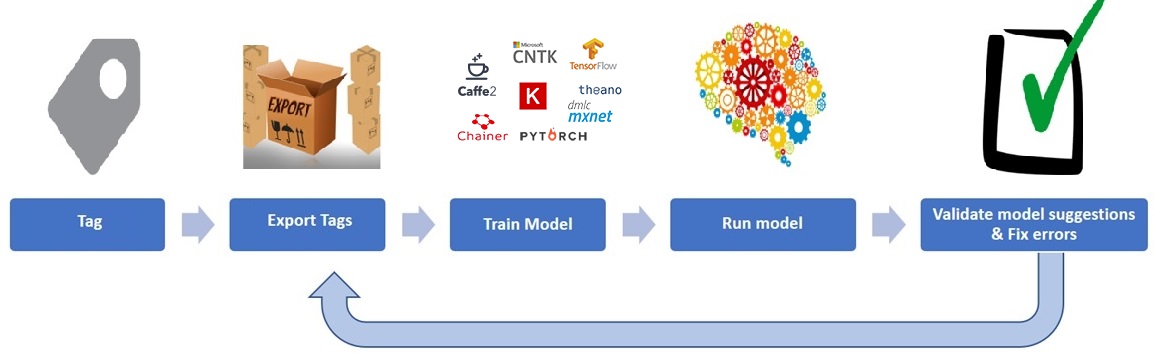

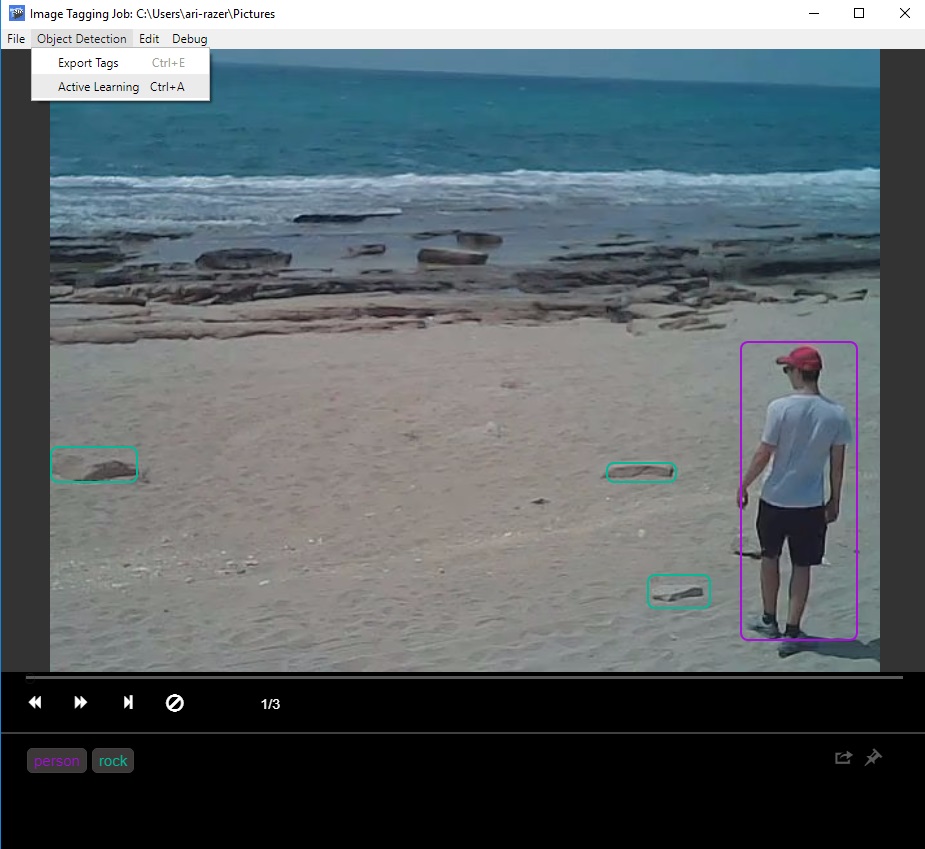

To enable Insoundz to generate large quantities of high-quality tagged image data quickly, we developed a semi-automated, cross-platform Electron application. This video tagging application exports data directly to CNTK format and lets users run and validate a trained model on new videos to generate stronger models. The application supports Windows, OS X, and Linux.

One major advantage of this object detection training architecture over other publicly available architectures is the validation feedback loop. A trained model is run on new videos, and its predictions are reviewed and corrected in the tool to produce additional training data. This loop improves model performance with each iteration while reducing the number of samples needed for training.
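A single turn of that loop can be sketched as follows. This is a hypothetical illustration, not the tool's code: the function names `applyReview` and `correctedFrames`, the prediction fields, and the decision values (`"accept"`, `"reject"`, or a replacement label) are all assumptions. It shows how reviewed predictions on a new video become new training samples. It also shows why frames the reviewer had to correct are likely to be the most useful additions: they point at cases the current model gets wrong.

```javascript
// Hypothetical sketch of one turn of the validation feedback loop.
// decisions[i] is "accept", "reject", or a replacement label for predictions[i].
function applyReview(predictions, decisions, addedByReviewer) {
  const reviewed = [];
  predictions.forEach((p, i) => {
    const d = decisions[i];
    if (d === "reject") return; // false positive: drop it
    const label = d === "accept" ? p.label : d; // keep as-is or relabel
    reviewed.push({ frame: p.frame, box: p.box, label });
  });
  // Objects the model missed are drawn by the reviewer and added directly.
  return reviewed.concat(addedByReviewer);
}

// Frames where the reviewer changed something (rejected, relabeled, or added).
function correctedFrames(predictions, decisions, addedByReviewer) {
  const frames = new Set();
  predictions.forEach((p, i) => {
    if (decisions[i] !== "accept") frames.add(p.frame);
  });
  addedByReviewer.forEach((r) => frames.add(r.frame));
  return [...frames].sort((a, b) => a - b);
}

const predictions = [
  { frame: 10, box: [0, 0, 50, 50], label: "hat", score: 0.91 },
  { frame: 11, box: [5, 5, 40, 40], label: "hat", score: 0.42 },
  { frame: 12, box: [8, 8, 60, 60], label: "ball", score: 0.77 },
];
const decisions = ["accept", "reject", "player"];
const added = [{ frame: 13, box: [30, 30, 90, 90], label: "hat" }];

const newTrainingData = applyReview(predictions, decisions, added);
console.log(newTrainingData.length); // 3
console.log(correctedFrames(predictions, decisions, added)); // [ 11, 12, 13 ]
```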

How the tool works

The Visual Object Tagging Tool supports the following features:

- Semi-automated video tagging: computer-assisted tagging and tracking of objects in videos using the CAMSHIFT tracking algorithm

- Export to CNTK: export tags and assets to CNTK format for training a CNTK object detection model

- Model validation: run and validate a trained CNTK object detection model on new videos to generate stronger models

The tagging control in our tool is built on an existing video tagging component, with some critical improvements, including the ability to:

- Resize the control to maximize screen real estate

- Create rectangular bounding regions

- Resize regions

- Reposition regions

- Monitor visited frames

- Track regions between frames in a continuous scene
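The region operations listed above can be sketched in a few lines, assuming regions are stored as pixel corner coordinates. The names `moveRegion`, `resizeRegion`, `visitFrame`, and `unvisitedFrames` are hypothetical. The sketch clamps moves and resizes so a region never leaves the frame, and records visited frames so unvisited ones can be reported (presumably useful for spotting gaps in a tagging job).

```javascript
// Hypothetical region operations for a tagging control. Regions use pixel
// corner coordinates: (x1, y1) top-left and (x2, y2) bottom-right.
function clamp(v, lo, hi) {
  return Math.min(Math.max(v, lo), hi);
}

// Reposition: shift by (dx, dy) but keep the whole region inside the frame.
function moveRegion(r, dx, dy, frameW, frameH) {
  const w = r.x2 - r.x1;
  const h = r.y2 - r.y1;
  const x1 = clamp(r.x1 + dx, 0, frameW - w);
  const y1 = clamp(r.y1 + dy, 0, frameH - h);
  return { ...r, x1, y1, x2: x1 + w, y2: y1 + h };
}

// Resize by dragging the bottom-right corner, with a minimum size.
function resizeRegion(r, newX2, newY2, frameW, frameH, minSize = 4) {
  return {
    ...r,
    x2: clamp(newX2, r.x1 + minSize, frameW),
    y2: clamp(newY2, r.y1 + minSize, frameH),
  };
}

// Monitor visited frames so unvisited ones can be reported.
const visitedFrames = new Set();
function visitFrame(index) {
  visitedFrames.add(index);
}
function unvisitedFrames(frameCount) {
  const out = [];
  for (let i = 0; i < frameCount; i++) if (!visitedFrames.has(i)) out.push(i);
  return out;
}

const region = { x1: 10, y1: 10, x2: 60, y2: 40, label: "hat" };
console.log(moveRegion(region, 100, 0, 100, 100)); // { x1: 50, y1: 10, x2: 100, y2: 40, label: 'hat' }
console.log(resizeRegion(region, 5, 500, 100, 100).x2); // 14 (minimum width kept)
visitFrame(0);
visitFrame(2);
console.log(unvisitedFrames(4)); // [ 1, 3 ]
```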

Tagging a Video

- Click and drag a bounding box around the desired area

- Move or resize the region until it fits the object

- Click on a region and select the desired tag from the labeling toolbar at the bottom of the tagging control

- Selected regions appear in red and unselected regions appear in blue

- Click the clear button to clear all tags on a given frame
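The tagging steps above map onto a few small operations, sketched here with hypothetical names (`dragToRegion`, `regionColor`, `clearFrame`, `tagsByFrame`). This is not the tool's code. A mouse drag can go in any direction, so the two points are normalized into corners. Regions are colored by selection state, and clearing a frame empties its tag list.

```javascript
// Hypothetical helpers for the tagging steps.

// A drag can go in any direction, so normalize the two points into corners.
function dragToRegion(start, end) {
  return {
    x1: Math.min(start.x, end.x),
    y1: Math.min(start.y, end.y),
    x2: Math.max(start.x, end.x),
    y2: Math.max(start.y, end.y),
    label: null, // assigned later from the labeling toolbar
  };
}

// Selected regions are drawn in red, unselected ones in blue.
function regionColor(region, selectedRegion) {
  return region === selectedRegion ? "red" : "blue";
}

// Tags keyed by frame index; clearing a frame removes all of its regions.
const tagsByFrame = new Map();
function clearFrame(frameIndex) {
  tagsByFrame.set(frameIndex, []);
}

const box = dragToRegion({ x: 200, y: 150 }, { x: 120, y: 60 }); // dragged up and left
box.label = "hat";
tagsByFrame.set(7, [box]);
console.log(box); // { x1: 120, y1: 60, x2: 200, y2: 150, label: 'hat' }
console.log(regionColor(box, box)); // red
clearFrame(7);
console.log(tagsByFrame.get(7).length); // 0
```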

Navigation

- Users can navigate between video frames by using:

  - the frame navigation buttons

  - the left/right arrow keys

  - the video skip bar

- Tags are auto-saved each time a frame is changed
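The navigation behavior can be sketched as a small state holder in which every way of changing frames goes through one function, so auto-saving happens in a single place. This is a hypothetical sketch: `createNavigator`, the `saveTags` callback, and the 0-to-1 skip bar position are assumptions. The only DOM detail it relies on is the standard `KeyboardEvent.key` values `ArrowLeft` and `ArrowRight`.

```javascript
// Hypothetical frame navigator: arrow keys and the skip bar both go through
// goToFrame, which saves the current frame's tags before moving.
function createNavigator(frameCount, saveTags) {
  let current = 0;
  function goToFrame(index) {
    const target = Math.min(Math.max(index, 0), frameCount - 1);
    if (target === current) return current; // no frame change, nothing to save
    saveTags(current); // auto-save whenever the frame changes
    current = target;
    return current;
  }
  function onKeyDown(event) {
    if (event.key === "ArrowRight") return goToFrame(current + 1);
    if (event.key === "ArrowLeft") return goToFrame(current - 1);
    return current;
  }
  // The skip bar is assumed to report a position between 0 and 1.
  function onSeek(fraction) {
    return goToFrame(Math.round(fraction * (frameCount - 1)));
  }
  return {
    goToFrame,
    onKeyDown,
    onSeek,
    get current() {
      return current;
    },
  };
}

const saved = [];
const nav = createNavigator(100, (frame) => saved.push(frame));
nav.onKeyDown({ key: "ArrowRight" }); // 0 -> 1, saves frame 0
nav.onKeyDown({ key: "ArrowLeft" }); // 1 -> 0, saves frame 1
nav.onKeyDown({ key: "ArrowLeft" }); // already at frame 0: no move, no save
nav.onSeek(0.5); // jumps to frame 50, saves frame 0
console.log(nav.current, saved); // 50 [ 0, 1, 0 ]
```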

Tracking

Tracking support reduces the need to redraw and re-tag regions on every frame in a scene. For example, in our test video, we were able to track the location of a hat in over 600 consecutive frames with only one manual correction.

New regions are tracked by default until the scene changes. Because CAMSHIFT has known limitations (it follows a region by its color distribution, so it can drift when the background has similar colors), users can disable tracking for certain sets of frames. To toggle tracking on and off, use the File menu setting or the keyboard shortcut Ctrl/Cmd + T.
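For readers unfamiliar with CAMSHIFT, the core idea fits in a short sketch. This is a simplified, illustrative JavaScript version, not the tool's implementation, and `camShift`/`windowMoments` are hypothetical names. It assumes a per-pixel probability map, which in practice comes from back-projecting the target's color histogram into the frame. The sketch repeatedly moves the search window to the centroid of the probability mass inside it (mean shift), then sizes the next search window from the zeroth moment. Orientation estimation from second moments is omitted.

```javascript
// Simplified CAMShift on a probability map prob[y][x] with values in [0, 1].
// Sum the zeroth and first moments of the probability inside the window.
function windowMoments(prob, win) {
  let m00 = 0, m10 = 0, m01 = 0;
  const rows = prob.length, cols = prob[0].length;
  for (let y = Math.max(0, win.y); y < Math.min(rows, win.y + win.h); y++) {
    for (let x = Math.max(0, win.x); x < Math.min(cols, win.x + win.w); x++) {
      const p = prob[y][x];
      m00 += p;
      m10 += x * p;
      m01 += y * p;
    }
  }
  return { m00, m10, m01 };
}

function camShift(prob, start, maxIter = 20) {
  let win = { ...start };
  for (let i = 0; i < maxIter; i++) {
    const m = windowMoments(prob, win);
    if (m.m00 === 0) return null; // target lost: no probability mass in the window
    // Mean-shift step: re-center the window on the centroid of the mass.
    const nx = Math.round(m.m10 / m.m00 - (win.w - 1) / 2);
    const ny = Math.round(m.m01 / m.m00 - (win.h - 1) / 2);
    if (nx === win.x && ny === win.y) break; // converged
    win = { ...win, x: nx, y: ny };
  }
  const fin = windowMoments(prob, win);
  if (fin.m00 === 0) return null;
  const cx = fin.m10 / fin.m00, cy = fin.m01 / fin.m00;
  // CAMShift step: size the next search window from the zeroth moment
  // (Bradski's s = 2 * sqrt(M00 / 256) for 0-255 values; here values are 0-1).
  const s = Math.max(1, Math.round(2 * Math.sqrt(fin.m00)));
  return {
    center: { x: cx, y: cy },
    window: { x: Math.round(cx - (s - 1) / 2), y: Math.round(cy - (s - 1) / 2), w: s, h: s },
  };
}

// Synthetic 64x64 map with a 10x10 target at x 20..29, y 30..39.
const prob = Array.from({ length: 64 }, (_, y) =>
  Array.from({ length: 64 }, (_, x) => (x >= 20 && x < 30 && y >= 30 && y < 40 ? 1 : 0))
);
const result = camShift(prob, { x: 14, y: 26, w: 12, h: 12 });
console.log(result.center); // { x: 24.5, y: 34.5 }
console.log(result.window); // { x: 15, y: 25, w: 20, h: 20 }
```

In a tracker, `result.window` would seed the search on the next frame. A `null` result is one natural signal to stop tracking and ask the user to re-tag.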

CNTK Integration

- Export tags for a video tagging job to CNTK format using Ctrl/Cmd + E

- Apply the model to a new video for review using Ctrl/Cmd + R
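To make "CNTK format" concrete, here is a hypothetical per-frame exporter (`toCntkAnnotations` is an illustrative name, not the tool's API). It assumes the per-image annotation layout used by CNTK's Fast R-CNN object detection examples. In that layout, each image `name.jpg` is accompanied by `name.bboxes.tsv`, with one tab-separated `x1 y1 x2 y2` box per line, and `name.bboxes.labels.tsv`, with the matching label on the same line number. Check this against the CNTK version you target.

```javascript
// Hypothetical exporter for one frame, assuming CNTK Fast R-CNN's per-image
// <name>.bboxes.tsv / <name>.bboxes.labels.tsv annotation files.
function toCntkAnnotations(frameName, regions) {
  const base = frameName.replace(/\.[^.]+$/, ""); // drop the image extension
  const tagged = regions.filter((r) => r.label); // untagged regions are skipped
  const boxLines = tagged.map((r) =>
    [r.x1, r.y1, r.x2, r.y2].map((v) => Math.round(v)).join("\t")
  );
  const labelLines = tagged.map((r) => r.label);
  const toFile = (lines) => (lines.length ? lines.join("\n") + "\n" : "");
  return {
    [`${base}.bboxes.tsv`]: toFile(boxLines),
    [`${base}.bboxes.labels.tsv`]: toFile(labelLines),
  };
}

const files = toCntkAnnotations("frame_0042.jpg", [
  { x1: 100, y1: 80, x2: 220.4, y2: 300, label: "player" },
  { x1: 10, y1: 10, x2: 20, y2: 20, label: null }, // not yet tagged
]);
console.log(JSON.stringify(files["frame_0042.bboxes.tsv"])); // "100\t80\t220\t300\n"
console.log(JSON.stringify(files["frame_0042.bboxes.labels.tsv"])); // "player\n"
```

Writing these strings to disk next to the exported frame images is left out so the sketch stays independent of Electron's file APIs.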

Code

You can download the tool or evaluate the code for your own use on GitHub.

Opportunities for Reuse

The tool outlined in this code story is adaptable to any object detection/recognition scenario.

Since we wrote the CNTK video tagging tool in JavaScript and used independent components for tracking and CNTK integration, the work here is cross-platform and can be abstracted and embedded into a web application or an existing workflow.
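To illustrate what keeping tracking behind an independent component can look like, here is a hypothetical plug-in boundary. The names `registerTracker` and `propagateRegions` and the `track(frame, regions)` contract are illustrative assumptions, not the tool's API. The tagging code depends only on that one method, so a CAMSHIFT tracker, or any other, could be swapped in without touching it. This is roughly the kind of configurable interface described under Future Plans.

```javascript
// Hypothetical plug-in boundary: tagging code only calls track(), so
// tracker implementations can be swapped without changing the caller.
const trackers = new Map();

function registerTracker(name, impl) {
  if (!impl || typeof impl.track !== "function") {
    throw new TypeError(`tracker "${name}" must implement track(frame, regions)`);
  }
  trackers.set(name, impl);
}

// Called when the user moves to the next frame in a continuous scene.
function propagateRegions(trackerName, nextFrame, regions, trackingEnabled) {
  if (!trackingEnabled) return []; // tracking toggled off: the user tags by hand
  const tracker = trackers.get(trackerName);
  if (!tracker) throw new Error(`unknown tracker "${trackerName}"`);
  return tracker.track(nextFrame, regions);
}

// A trivial stand-in tracker that assumes objects do not move between frames.
registerTracker("static", {
  track: (_frame, regions) => regions.map((r) => ({ ...r })),
});

const out = propagateRegions("static", {}, [{ x1: 1, y1: 2, x2: 3, y2: 4, label: "hat" }], true);
console.log(out.length); // 1
```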

Future Plans

In the future, we plan to support tagging image directories and to add project management support for handling multiple tagging jobs in parallel. We also hope to investigate additional tracking algorithms to automate the tagging process even further. In the next version, we will include configurable interfaces so that interested contributors can integrate their own object detection frameworks and tracking algorithms into the tool.