When modernizing legacy systems, the user interface often becomes the make-or-break factor for project success. Backend improvements matter, but users interact with the frontend, and that’s where trust is built or broken. Pairing AI-powered tools like Stagehand with Microsoft’s Playwright helps protect the user experience during modernization efforts such as Hyper Velocity Engineering and monolith-to-microservices migrations. This post shares our journey using these tools on complex modernization projects.

Why UI Testing Matters in Modernization

Modernization is the process of upgrading legacy systems to newer, more efficient technologies, frameworks, and architectures so they can better support current and future business needs. This often includes moving applications from outdated platforms to cloud environments, adopting modern frameworks, or breaking down monolithic applications into microservices for improved scalability, resilience, and agility.

Here’s the challenge: while modernization brings technical improvements, what truly matters is the experience users have when interacting with the system. If familiar features stop working—like navigation, data access, or forms—it can quickly erode trust and slow adoption.

UI tests play a crucial role in safeguarding that experience. They ensure users can continue completing their tasks smoothly and consistently, while catching integration issues that other tests might miss. In short, UI testing helps modernization deliver its benefits without disrupting usability or customer confidence. This is where AI-powered testing tools become invaluable, offering new ways to create and maintain comprehensive UI test coverage more efficiently than traditional approaches.

Our Journey: From Traditional to AI-Powered Testing

Our Testing Evolution: From Playwright to Stagehand and Back

Initially, our approach to UI testing relied on traditional Playwright scripts. These required manual test creation and maintenance. While effective, this method was time-intensive. It also required deep knowledge of selectors and user flows.

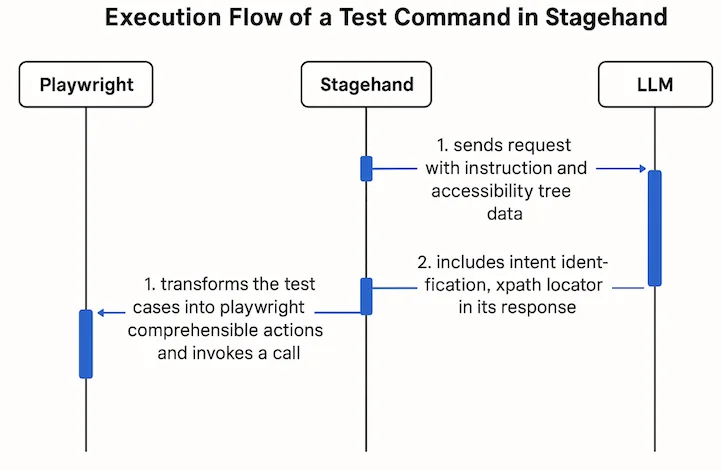

The team then transitioned to Stagehand, an LLM-driven automation tool. Stagehand could generate UI tests through natural language instructions. This significantly reduced the complexity of test creation. The AI capabilities allowed teams to describe user scenarios in plain English. The tool would then automatically generate the corresponding test logic.

However, the team found that Stagehand became most useful when integrated with Playwright. Teams could leverage Stagehand’s AI-generated test logic and seamlessly convert it back to standard Playwright code. This hybrid approach gave the best of both worlds. Teams gained the speed and simplicity of AI-generated tests while keeping the reliability, debugging capabilities, and ecosystem support of Playwright’s established framework.

This evolution shows how teams moved from traditional manual approaches to AI-powered generation, ultimately settling on a hybrid model that combines the best aspects of both approaches for modernization projects.

Understanding the Tools

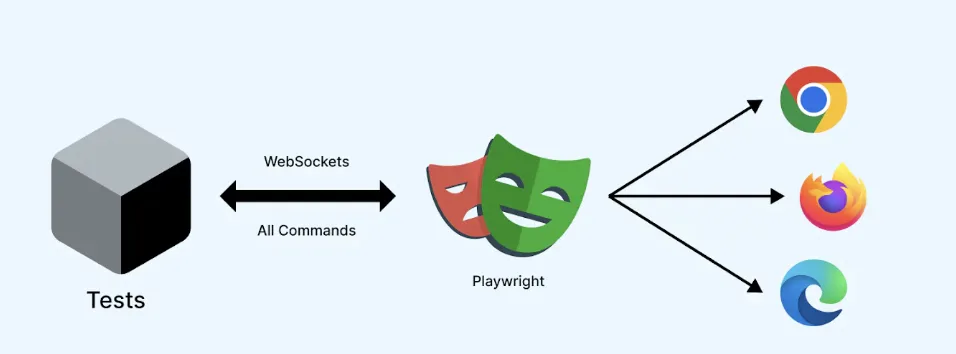

What is Playwright?

Playwright is an open-source end-to-end testing framework developed by Microsoft that enables reliable automation of modern web applications across multiple browsers. For modernization projects, Playwright’s strength lies in its ability to validate user workflows consistently, handle complex interactions automatically, and provide detailed debugging insights when tests fail. This makes it particularly valuable when migrating legacy systems where maintaining user experience continuity is critical.

Why Use Playwright in Dev Containers

Adopting a Dev Container–based approach was very useful for quickly bootstrapping local environments and enabling fast iteration by making environments reproducible and easy to share across distributed teams. This is especially valuable in modernization projects where team members may be working with different legacy system configurations and need consistent testing environments. This benefit holds true regardless of which UI testing framework is used. However, introducing Dev Containers also brought new challenges specifically for browser-based UI testing. These challenges required additional considerations to ensure tests remained reliable and the development workflow stayed smooth.

Generating Tests with Playwright

Playwright provides a codegen feature to record tests instead of writing everything manually:

npx playwright codegen https://your-app-url.com

This opens a browser window where you can interact with the application.

As you click, type, or navigate, Playwright auto-generates the test script.

You can save and edit the generated test for future use.

Setup for Browser Visibility & Test Recording

To run Playwright tests in headed mode (with a visible browser), record tests with codegen (npx playwright codegen), or debug tests visually from inside a Dev Container, you’ll need to set up X11 forwarding:

WSL (Windows Subsystem for Linux):

- Runs out of the box – no additional setup required

macOS:

- Install XQuartz:

  - Option 1 (Homebrew):

    brew install --cask xquartz

  - Option 2 (Manual Download):

    - Download and install XQuartz from https://www.xquartz.org/

    - Restart your computer after installation

- Configure X11 forwarding:

    # Allow localhost connections to X11
    xhost +localhost
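Headed mode only works when an X server is reachable, so it can help to let the test configuration fall back to headless automatically. The following is a minimal sketch rather than the team's actual setup: `shouldRunHeaded` and `EnvMap` are hypothetical names, and the sketch assumes the X11 forwarding described above exposes the display through the standard `DISPLAY` environment variable.

```typescript
// Hypothetical helper: decide whether a visible browser window can be shown.
type EnvMap = Record<string, string | undefined>;

function shouldRunHeaded(env: EnvMap): boolean {
  // Assumption: CI runners have no display, so stay headless there.
  if (env.CI === "true") {
    return false;
  }
  // An X server is considered reachable when DISPLAY is set and non-empty.
  const display = env.DISPLAY;
  return display !== undefined && display.trim() !== "";
}
```

In `playwright.config.ts`, the result could feed Playwright's `headless` option, for example `use: { headless: !shouldRunHeaded(process.env) }`.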

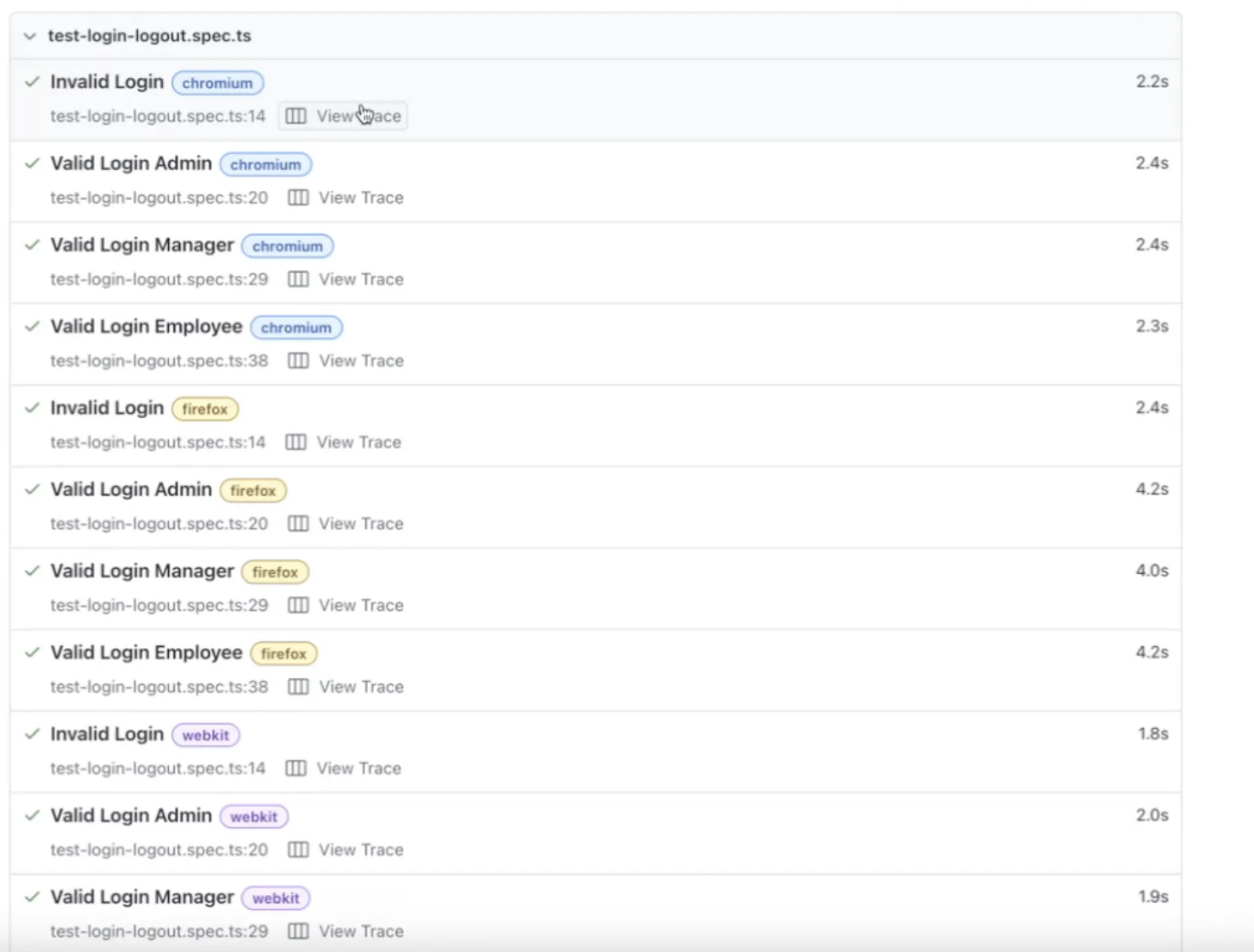

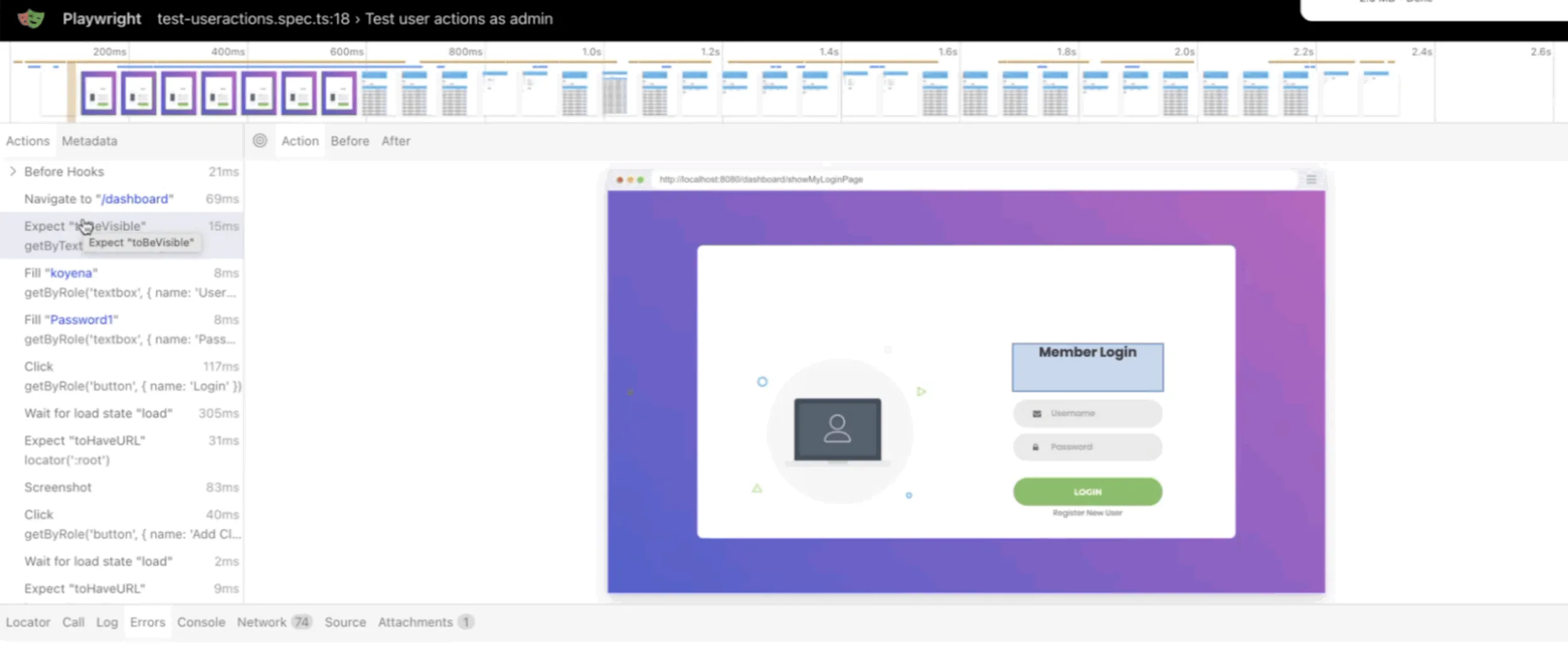

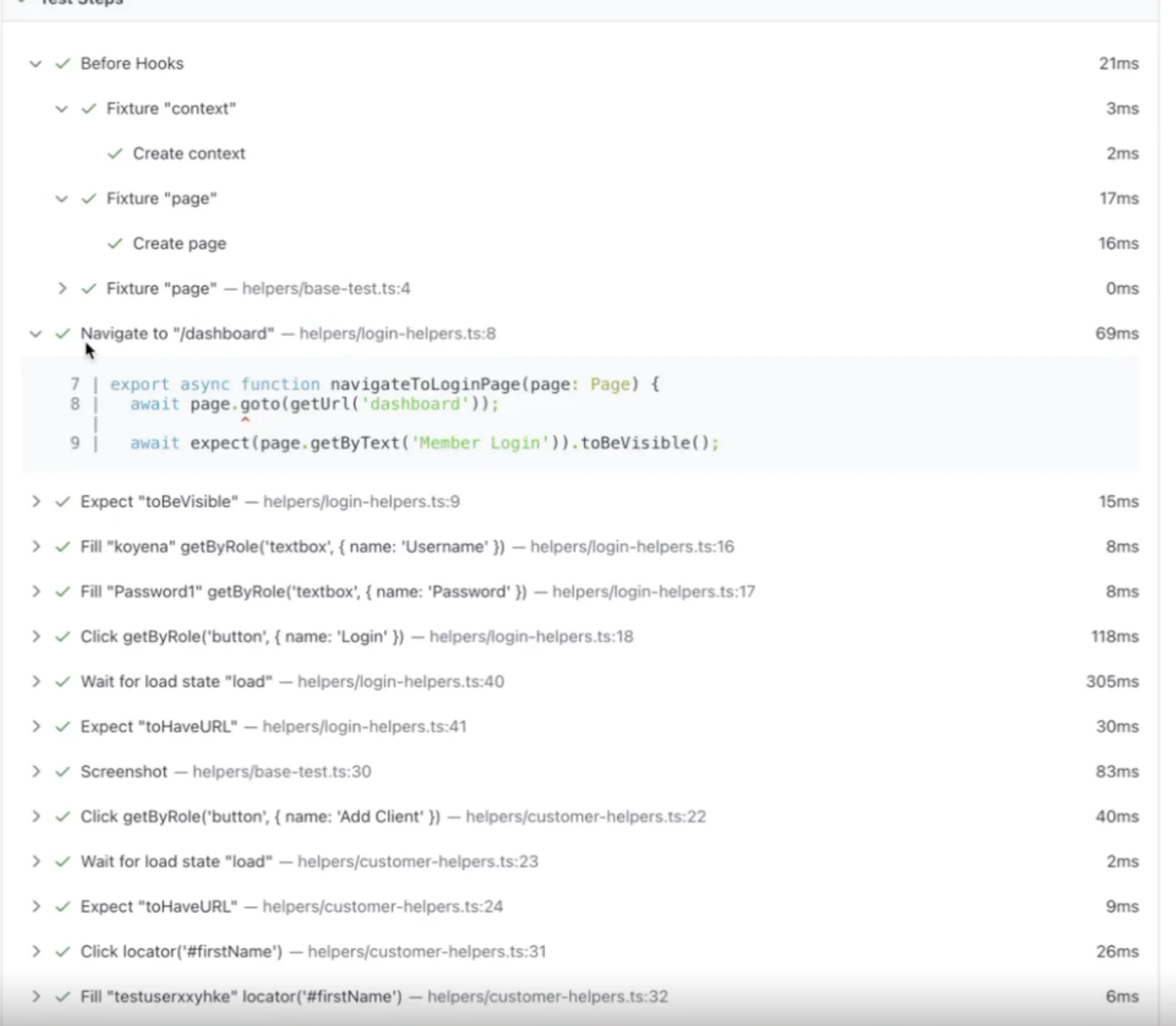

Test Reporting and Tracing in Playwright

Playwright’s reporting and tracing capabilities provide deep insight into test execution. With the HTML reporter enabled, each test run produces a detailed report with test results, failure screenshots, and performance metrics. The built-in trace viewer captures every action along with DOM snapshots, network requests, console logs, and timeline events. When tests fail, you can replay the exact sequence and inspect application state at any point.

HTML Report Example: A failed login test shows a red-highlighted test case, a screenshot of the login page at the failure point, and a stack trace pointing to the exact assertion that failed.

This makes it easier to quickly identify and communicate test failures to development teams during modernization sprints.

Trace Viewer Example: A trace of a checkout flow allows you to step through each browser action (clicks, navigations, inputs) and inspect DOM snapshots, network calls, and console logs at every step.

This capability is crucial for diagnosing complex integration issues that often surface when migrating legacy workflows to modern architectures.

Performance Metrics Example: Reports may include execution time per test and resource usage, helping identify bottlenecks in slower tests.
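Reports, screenshots, and traces are controlled by the Playwright Test configuration. The sketch below shows one plausible combination of settings; `reportingOptions` is an illustrative name, and the chosen values are assumptions rather than the team's real configuration. The option names (`reporter`, `retries`, `use.trace`, `use.screenshot`) are standard Playwright Test settings.

```typescript
// Illustrative settings; the same keys and values can be written directly
// inside defineConfig({ ... }) in playwright.config.ts.
const reportingOptions = {
  // Write an HTML report to ./playwright-report without opening it automatically.
  reporter: [["html", { open: "never", outputFolder: "playwright-report" }]],
  // At least one retry is needed, otherwise 'on-first-retry' never records a trace.
  retries: 1,
  use: {
    // Record a trace only when a failed test is retried for the first time.
    trace: "on-first-retry",
    // Attach a screenshot to the report for failing tests only.
    screenshot: "only-on-failure",
  },
};
```

After a run, `npx playwright show-report` opens the HTML report, and `npx playwright show-trace` followed by the path to a trace archive opens the trace viewer.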

The AI Revolution in Testing

What is Stagehand?

Stagehand is an AI-powered browser automation tool that simplifies web testing by letting you write tests as natural language instructions. Built on top of Playwright, Stagehand uses large language models to interpret human-readable commands and translate them into browser automation steps. Instead of manually writing complex selectors and interaction logic, you describe what you want to test, such as “click the login button” or “fill out the registration form with valid data”, and Stagehand’s AI engine handles the technical implementation. This lowers the barrier to entry for UI testing, making it accessible to team members who may not have deep automation expertise. Stagehand is a good fit for modernization projects where business users and QA teams need to quickly validate that critical workflows still function after system changes, without extensive knowledge of DOM manipulation or CSS selectors.

Basic Stagehand Operations

Stagehand provides three core functions that make testing intuitive:

ACT – Performs user interactions on the page using natural language commands. Instead of finding specific CSS selectors, you simply describe the action you want to perform. Stagehand’s AI identifies the correct elements and executes the interaction.

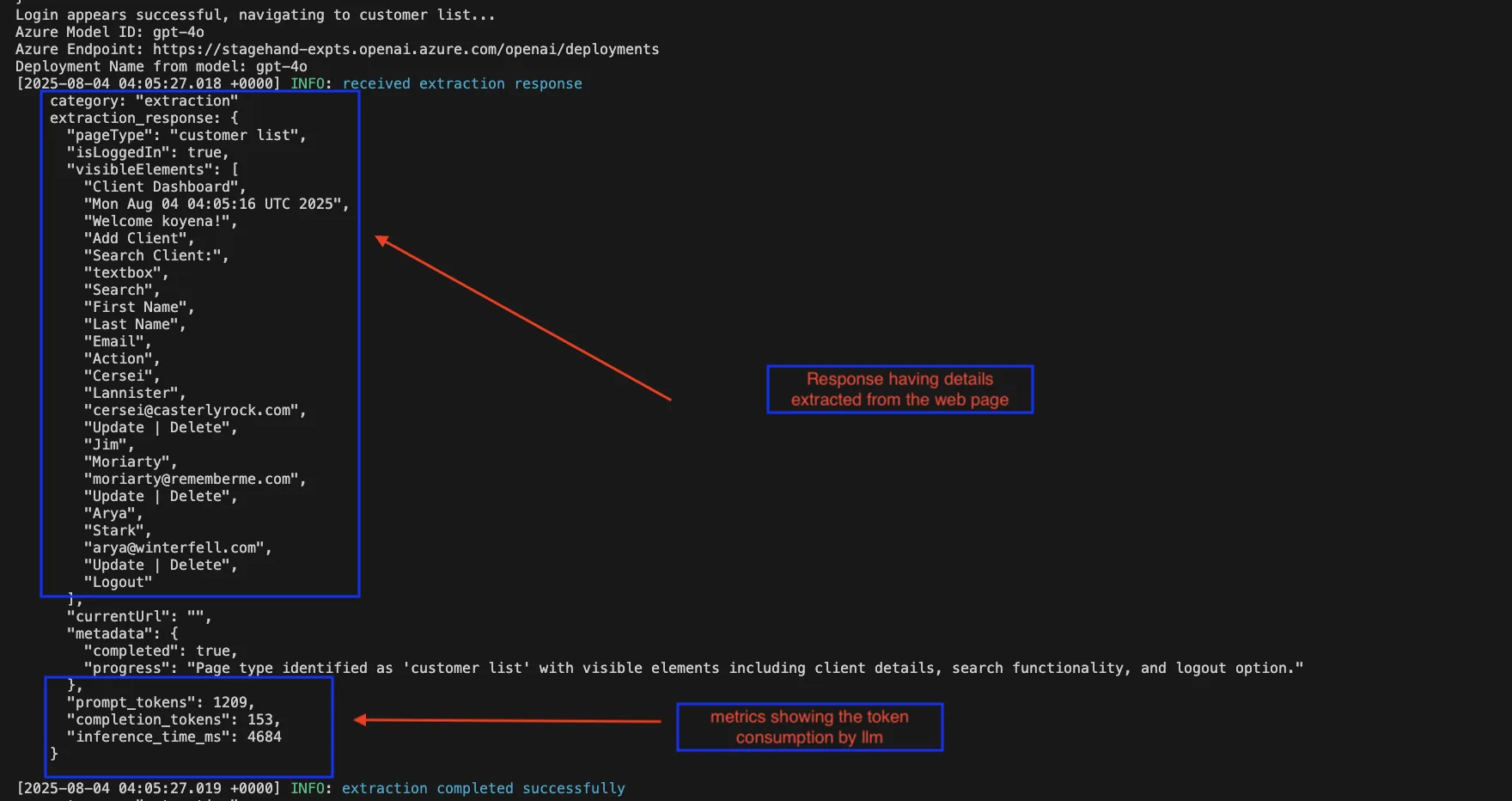

EXTRACT – Retrieves data from the webpage based on descriptive instructions. Rather than querying DOM elements manually, you describe what information you need, and Stagehand returns the relevant data in a usable format.

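OBSERVE – Inspects the current page and reports the elements or candidate actions that match a description. In a test it can serve as a checkpoint that an expected page state, such as a loaded dashboard, is present before continuing, replacing hand-written lookup logic with a simple description of what you expect to see on the page.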

    import { Stagehand } from "@browserbasehq/stagehand";

    const stagehand = new Stagehand();
    await stagehand.init();

    // Note: depending on the Stagehand version, act/extract/observe are exposed
    // on stagehand.page rather than on the Stagehand instance itself.

    // ACT: Perform actions using natural language
    await stagehand.act("click the login button");
    await stagehand.act("type 'user@example.com' in the email field");

    // EXTRACT: Get information from the page
    const userInfo = await stagehand.extract("get the user's name from the profile section");

    // OBSERVE: Check for the expected page state
    await stagehand.observe("wait for the dashboard to load");

Logs showing the three operations flow

Azure OpenAI Integration Challenge

At the time of writing, Stagehand does not integrate with Azure OpenAI out of the box. To work with enterprise Azure OpenAI deployments, the team implemented a custom client built on the AI SDK (the ai package): a class that extends Stagehand’s LLMClient and implements its createChatCompletion method:

    import { LLMClient } from "@browserbasehq/stagehand";
    import type { AvailableModel, CreateChatCompletionOptions } from "@browserbasehq/stagehand";
    import type { LanguageModel } from "ai";
    import type { ChatCompletion } from "openai/resources";

    export class AISdkClient extends LLMClient {
      // The AI SDK model that requests are forwarded to
      private model: LanguageModel;

      constructor({ model }: { model: LanguageModel }) {
        super(model.modelId as AvailableModel);
        this.model = model;
      }

      async createChatCompletion<T = ChatCompletion>({
        options,
      }: CreateChatCompletionOptions): Promise<T> {
        // Format messages and handle Azure-specific logic
        // ... implementation details
      }
    }

The client is then created from an Azure-backed model and passed to Stagehand. The snippet below is a sketch that assumes the AI SDK’s Azure provider package (@ai-sdk/azure); the resource and deployment names are placeholders:

    import { Stagehand } from "@browserbasehq/stagehand";
    import { createAzure } from "@ai-sdk/azure";

    // Custom Azure OpenAI client configuration.
    // resourceName is the first label of https://your-azure-endpoint.openai.azure.com
    const azure = createAzure({
      resourceName: "your-azure-endpoint",
      apiKey: process.env.AZURE_OPENAI_API_KEY,
      apiVersion: "2024-02-15-preview", // assumes a provider release that accepts apiVersion
    });

    // The argument is the Azure deployment name, not the underlying model family
    const azureModel = azure("your-model");

    const aiSdkClient = new AISdkClient({ model: azureModel });

    const stagehand = new Stagehand({
      llmClient: aiSdkClient,
      // other configuration options
    });

This requires additional setup and configuration compared to using standard OpenAI endpoints, but enables integration with enterprise Azure OpenAI deployments that many organizations prefer for compliance and data governance reasons.
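Azure OpenAI deployments are addressed by a resource name and a deployment name, and a missing API key tends to surface only as an opaque failure deep inside a test run. A small helper can assemble the endpoint and fail fast instead. This is a sketch under stated assumptions: `azureOpenAISettings`, `AzureOpenAISettings`, and `EnvVars` are hypothetical names, and the URL pattern is the standard Azure OpenAI deployment endpoint.

```typescript
type EnvVars = Record<string, string | undefined>;

interface AzureOpenAISettings {
  baseURL: string;
  apiKey: string;
}

// Hypothetical helper: build endpoint settings from a resource name, a
// deployment name, and an environment map (for example process.env).
function azureOpenAISettings(
  resourceName: string,
  deploymentName: string,
  env: EnvVars,
): AzureOpenAISettings {
  const apiKey = env.AZURE_OPENAI_API_KEY;
  if (!apiKey) {
    // Fail before any browser is launched or any test step runs.
    throw new Error("AZURE_OPENAI_API_KEY is not set");
  }
  const deployment = encodeURIComponent(deploymentName);
  return {
    baseURL: `https://${resourceName}.openai.azure.com/openai/deployments/${deployment}`,
    apiKey,
  };
}
```

Calling the helper once in a shared test setup file keeps the endpoint logic in one place and makes a misconfigured environment obvious at startup.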

Implementing the Hybrid Approach

Experimenting with a Stagehand-First Approach

The team experimented with a “Stagehand-first” approach to accelerate test creation and democratize test writing across the team. The workflow starts with writing tests in Stagehand’s natural language format, which allows people without coding experience, such as business analysts and QA professionals, to create comprehensive test scenarios. Once the Stagehand tests are validated and working correctly, teams convert them to standard Playwright code for production use.

This approach offers several key benefits:

- Faster test creation – Natural language tests are quicker to write and modify

- Team accessibility – People who don’t have coding experience can contribute to test coverage

- Cost optimization – Generated Playwright tests run without LLM API calls, reducing operational costs

- Maintainability – Standard Playwright code integrates seamlessly with existing CI/CD pipelines

Architecture: Stagehand to Playwright Conversion Flow

    ┌─────────────────┐    ┌──────────────────┐    ┌─────────────────┐
    │ Business User   │    │ Developer/       │    │ Developer       │
    │                 │    │ QA Engineer      │    │                 │
    │ Writes natural  │───▶│ Validates tests  │───▶│ Converts to     │
    │ language tests  │    │ in Stagehand     │    │ Playwright      │
    │ in Stagehand    │    │                  │    │                 │
    └─────────────────┘    └──────────────────┘    └─────────────────┘
             │                      │                       │
             ▼                      ▼                       ▼
    ┌─────────────────┐    ┌──────────────────┐    ┌─────────────────┐
    │ Natural Lang.   │    │ Stagehand Test   │    │ Playwright Test │
    │ Test Scenarios  │    │ Execution        │    │ Code Generation │
    └─────────────────┘    └──────────────────┘    └─────────────────┘
                                    │                       │
                                    ▼                       ▼
                           ┌──────────────────┐    ┌─────────────────┐
                           │ Quick Validation │    │ Production CI/CD│
                           │ & Iteration      │    │ Pipeline        │
                           └──────────────────┘    └─────────────────┘

This hybrid workflow maximizes team productivity while ensuring production-ready, cost-effective test automation for modernization projects.

Converting Stagehand to Playwright with GitHub Copilot

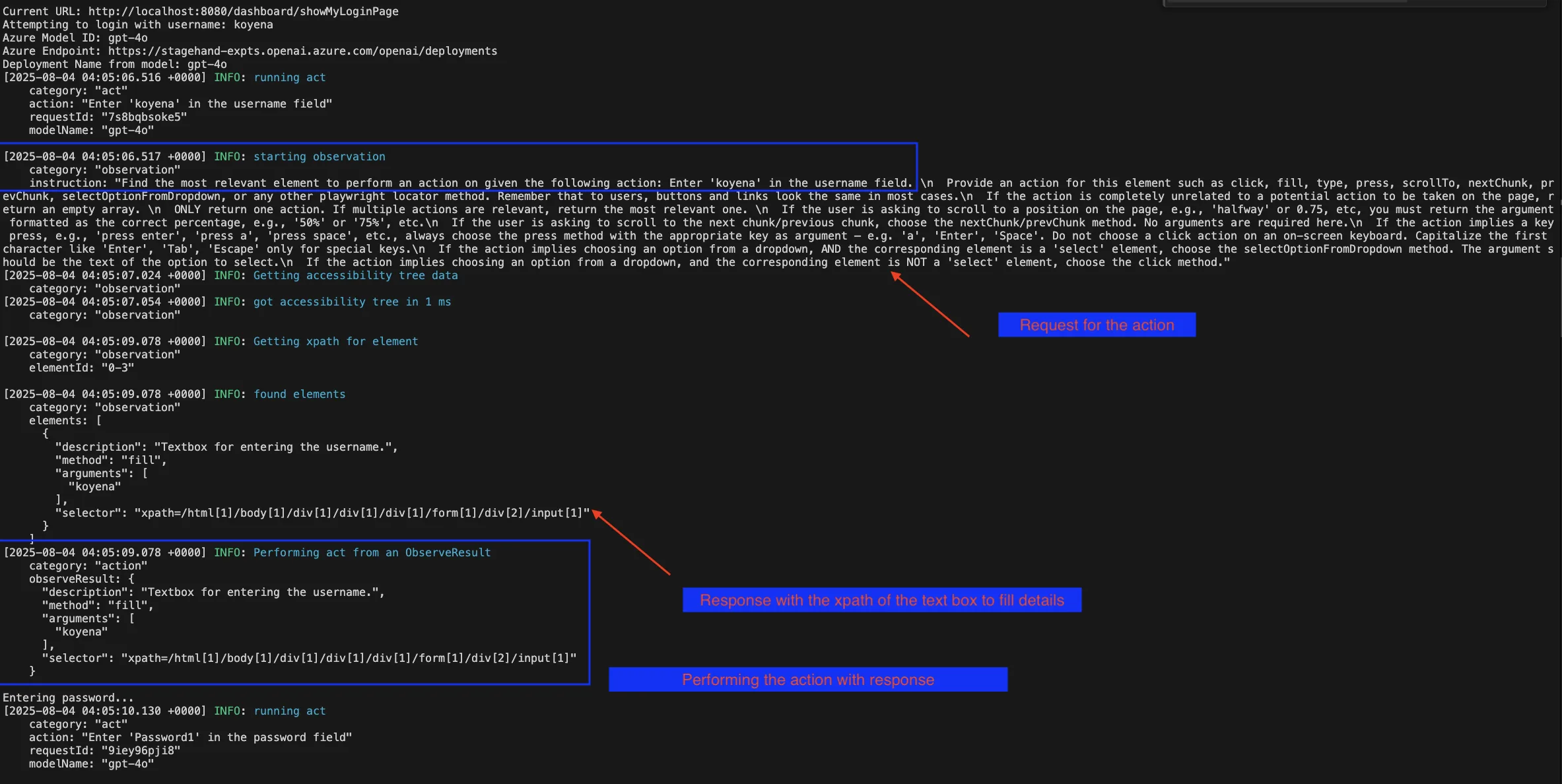

Running a Stagehand test dynamically on every execution can be expensive because each run consumes LLM tokens, so teams use it selectively. Its key advantage is that non-technical team members with strong product knowledge can create tests, which lowers the barrier to contribution. To keep that benefit without the recurring cost, the team converted Stagehand tests to Playwright code using GitHub Copilot with carefully crafted prompts. Running the Stagehand tests with detailed info logs enabled captured the underlying browser interactions and element identifications that Stagehand performed.

These logs, combined with XPath validations, provided GitHub Copilot with the necessary context to generate accurate Playwright test code. Stagehand plays a role similar to Playwright’s recording functionality, but instead of just raw clicks and inputs, it produces more structured, semantically clear steps that translate into robust, repeatable Playwright tests. While recording-to-test creation is possible, the Stagehand-first approach balances accessibility for non-technical contributors with stronger automation outcomes.

Sample Code: Stagehand to Playwright Conversion

Here’s a real example showing the conversion process:

Stagehand Test Code:

    await test.step('Navigate to application', async () => {
      console.log("Navigating to SpringDashboard application...");
      await page.goto("http://localhost:8080/dashboard");
      await page.waitForTimeout(2000);
    });

    await test.step('Perform login', async () => {
      const currentUrl = page.url();
      console.log("Current URL:", currentUrl);

      // If not already on login page, try to find and click login link
      if (!currentUrl.includes('login') && !currentUrl.includes('Login')) {
        console.log("Looking for login link...");
        await page.act("Click on the login link or navigate to login page");
        await page.waitForTimeout(1000);
      }

      // Perform login
      console.log(`Attempting to login with username: ${TEST_USER.username}`);
      await page.act(`Enter '${TEST_USER.username}' in the username field`);
      await page.waitForTimeout(500);

      console.log("Entering password...");
      await page.act(`Enter '${TEST_USER.password}' in the password field`);
      await page.waitForTimeout(500);

      console.log("Clicking login button...");
      await page.act("Click the login or submit button");

      // Wait for navigation after login
      await page.waitForTimeout(3000);
    });

    await test.step('Verify login success', async () => {
      const postLoginUrl = page.url();
      console.log("URL after login attempt:", postLoginUrl);
      // ... (remainder of this step is not shown in the excerpt)
    });

Converted Playwright Code:

    test('Complete CRUD workflow: Login, Create and Delete Customer', async ({ page }) => {
      await test.step('Navigate to application', async () => {
        console.log("Navigating to SpringDashboard application...");
        await page.goto("http://localhost:8080/dashboard");
        await page.waitForTimeout(2000);
      });

      await test.step('Perform login', async () => {
        // Wait for login page to load
        await page.waitForURL('**/showMyLoginPage');
        console.log("Current URL:", page.url());

        // Fill username using exact selector from Stagehand
        console.log(`Entering username: ${TEST_USER.username}`);
        await page.locator('xpath=/html[1]/body[1]/div[1]/div[1]/div[1]/form[1]/div[2]/input[1]').fill(TEST_USER.username);

        // Fill password using exact selector from Stagehand
        console.log("Entering password...");
        await page.locator('xpath=/html[1]/body[1]/div[1]/div[1]/div[1]/form[1]/div[3]/input[1]').fill(TEST_USER.password);

        // Click login button using exact selector from Stagehand
        console.log("Clicking login button...");
        await page.locator('xpath=/html[1]/body[1]/div[1]/div[1]/div[1]/form[1]/div[4]/button[1]').click();

        // Wait for navigation after login
        await page.waitForURL('**/customer/list');
      });

      // ... (customer create and delete steps are not shown in the excerpt)
    });

The team also tried adding prompts to extract locator names instead of XPath, but this did not produce accurate results. The XPath approach proved more reliable for conversion.

The locator-extraction approach relied on semantic selection, which only works well when the application uses clear, consistent accessible names and labels. It uses Playwright’s semantic selectors such as getByRole, with fallback options:

    await test.step('Navigate to application', async () => {
      console.log("Navigating to SpringDashboard application...");
      await page.goto("http://localhost:8080/dashboard");
      await page.waitForLoadState('networkidle');
    });

    await test.step('Perform login', async () => {
      console.log(`Attempting to login with username: ${TEST_USER.username}`);

      // Wait for login page to load
      await expect(page).toHaveURL(/.*login.*/i);

      // Fill username - using getByRole for semantic selection
      const usernameField = page.getByRole('textbox', { name: /username/i })
        .or(page.locator('input[name="username"]'))
        .or(page.locator('input[type="text"]').first());
      await usernameField.fill(TEST_USER.username);

      // Fill password - password inputs are not exposed with the 'textbox' role,
      // so getByLabel is used here as the semantic selector instead of getByRole
      const passwordField = page.getByLabel(/password/i)
        .or(page.locator('input[name="password"]'))
        .or(page.locator('input[type="password"]'));
      await passwordField.fill(TEST_USER.password);
    });

While this approach creates more maintainable and readable tests, it requires developers to have deeper knowledge of accessibility attributes and semantic naming patterns, making it less suitable for automated conversion from Stagehand logs.

The process involved:

- Running Stagehand tests with verbose logging to capture all browser interactions

- Extracting XPath selectors and element validation patterns from the logs

- Prompting GitHub Copilot with the logged interactions and validation requirements

- Generating equivalent Playwright code that replicated the same test behavior

This approach ensured that the converted Playwright tests maintained the same reliability and accuracy as the original Stagehand tests while providing the performance and cost benefits of native Playwright execution.
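The team used GitHub Copilot for the conversion, but the mechanical core of the steps above can be illustrated deterministically. The sketch below assumes the logged interactions have already been reduced to a hypothetical `LoggedAction` record; Stagehand's real log format is not reproduced here, and `toPlaywrightLines` is an illustrative name rather than part of either tool.

```typescript
// Hypothetical record of one interaction recovered from the Stagehand logs.
interface LoggedAction {
  description: string;      // the natural language instruction that was run
  method: "fill" | "click"; // the Playwright method that replays it
  xpath: string;            // the XPath Stagehand resolved for the element
  value?: string;           // text to enter, used by "fill" only
}

// Turn logged actions into Playwright source lines that use XPath locators.
function toPlaywrightLines(actions: LoggedAction[]): string[] {
  const lines: string[] = [];
  for (const action of actions) {
    // JSON.stringify yields a safely quoted string literal for generated code.
    const locator = "page.locator(" + JSON.stringify("xpath=" + action.xpath) + ")";
    const value = action.value === undefined ? "" : action.value;
    const call = action.method === "fill"
      ? ".fill(" + JSON.stringify(value) + ")"
      : ".click()";
    lines.push("// " + action.description);
    lines.push("await " + locator + call + ";");
  }
  return lines;
}
```

Each generated statement keeps the original instruction as a comment, which preserves the link between the natural language step and the selector when the test is later reviewed or refactored.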

The GitHub Copilot Conversion Experience

The specific prompt used with GitHub Copilot was:

“Generate Playwright tests using the logging detailed information by running Stagehand tests. Convert the natural language actions to specific element selectors and interactions based on the captured browser automation logs.”

Results: The generated Playwright code was approximately 85% correct and required only minor changes and tweaks. Most of the element selectors, test logic, and flow were accurately translated from the Stagehand logs.

Evaluating the Approach

Pros of Stagehand-to-Playwright Approach

- Rapid Prototyping – Natural language tests enable quick test scenario validation

- Democratized Testing – Non-coders can create comprehensive test coverage

- Cost Optimization – Production tests run without LLM API costs

- Faster Development – Reduced time from test conception to implementation

- Better Collaboration – Business stakeholders can directly contribute to test scenarios

Cons and Challenges

- Additional Conversion Step – Requires manual or semi-automated conversion process

- Learning Curve – Teams need to understand both Stagehand and Playwright

- Maintenance Overhead – Changes require updates in both Stagehand and Playwright versions

- Limited Complex Scenarios – Some advanced testing patterns may not translate well, especially when dealing with dialogs outside the DOM.

Technical Considerations

XPath Considerations

Advantages:

- Precise Element Targeting – XPath provides exact element location from Stagehand logs

- Flexibility – Can traverse complex DOM structures effectively

- Debugging – Clear path from natural language to exact element selection

Disadvantages:

- Fragility – XPath selectors can break easily with UI changes

- Performance – Generally slower than CSS selectors

- Maintenance – Hard-coded paths require updates when DOM structure changes

- Readability – Long XPath expressions are difficult to understand and modify

Recommendation: Use XPath selectors from Stagehand logs as a starting point, then refactor to more robust CSS selectors or data attributes for production tests when possible.
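One way to act on this recommendation is to flag the selectors most likely to break so they can be refactored first. The heuristic below is a sketch, not an established rule: `isBrittleXPath` is a hypothetical helper, and the threshold of three positional steps is an arbitrary assumption.

```typescript
// Heuristic: absolute paths and long chains of positional steps such as
// div[1]/div[2] tend to break when the page layout changes.
function isBrittleXPath(selector: string): boolean {
  // Accept both raw XPath and Playwright's "xpath=" prefixed form.
  const xpath = selector.replace(/^xpath=/, "");
  const isAbsolute = xpath.indexOf("/html") === 0;
  const positionalSteps = (xpath.match(/\[\d+\]/g) || []).length;
  return isAbsolute || positionalSteps >= 3;
}
```

Selectors flagged this way are candidates for replacement with a stable hook, for example `page.getByTestId('username')` if the application exposes a test id attribute (the `username` id here is hypothetical).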

Alternative Prototype Approach: Stagehand in Playwright Record Mode

Another approach explored as a prototype was running Stagehand in Playwright record mode to automatically capture the generated test code. However, this method spawned two browser instances simultaneously, which created complexity in managing the testing environment. While this approach shows promise for direct code generation without manual conversion, it requires significant one-time engineering effort to properly coordinate the dual-instance setup and handle the synchronization between Stagehand’s AI-driven actions and Playwright’s recording mechanism. Despite the additional complexity, this remains a good area to explore for teams looking to fully automate the conversion process.

Conclusion

The hybrid Stagehand-to-Playwright approach offers a compelling solution for modernization projects where rapid test creation and team accessibility are priorities. While the approach introduces some complexity, the benefits of democratized test writing and cost optimization make it valuable for teams looking to accelerate their UI testing workflows while maintaining production-ready automation.