We recently worked with Ascoderu, a small tech-for-good non-profit registered in Canada and the Democratic Republic of the Congo (DRC). Ascoderu is currently developing the Lokole project, which includes a hardware access point and a suite of open-source Linux cloud services that enable communities to have rich communications via email for just $1 per community per day. Ascoderu is targeting rural communities in sub-Saharan Africa with this technology. Access to efficient communication in rural African areas has been recognized as an important factor for sustainable development by the United Nations’ Sustainable Development Goal 9. The African Innovation Foundation also recently recognized Lokole’s importance for the continent, calling it one of the top 10 innovations solving Africa’s problems.

Ascoderu is a small non-profit and relies on volunteer development through tech-for-good hackathons, open-source events, university clubs, and so forth. As a result, the average tenure of developers is short and continuity between contributors is limited, which makes it essential that tasks such as deploying to production be as simple as possible.

We previously wrote a code story about how we simplified infrastructure management with Ascoderu using Azure Service Fabric and Docker Compose. This article follows up on that work and describes how we leveraged Service Fabric, Docker Compose, GitHub, and Travis CI to build a one-click continuous delivery pipeline that deploys Ascoderu’s open-source Linux cloud services.

Continuous delivery for Azure Service Fabric via Travis CI

In our previous article, we covered how to set up a Linux Service Fabric cluster and how to deploy an application to the cluster using Docker Compose. This article assumes that you already have both in place, as described in the earlier article. We will show how to build on that work and set up continuous delivery for Service Fabric via GitHub and Travis CI so that whenever a new tag is created in the project (e.g., by authoring a release on the project’s GitHub page), the changes are automatically built and deployed to the Service Fabric cluster.

We’re using Travis CI as the continuous delivery service in this article since it’s very popular and free for open-source projects; however, the approach outlined below also applies to any continuous delivery service that has support for secrets and custom Bash scripts.

Note that Service Fabric already has a continuous delivery integration with Visual Studio Team Services but, like many open-source projects, Ascoderu’s code is hosted on GitHub, so we cannot leverage this integration.

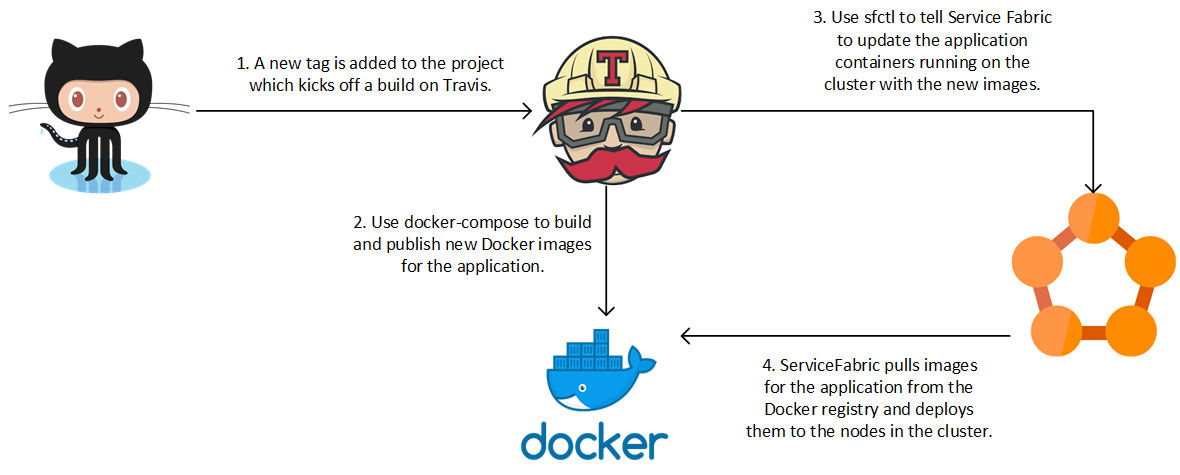

The diagram below shows the workflow based on Github and Travis CI that we’ll implement:

Travis CI has built-in support for publishing to a number of systems when a build tagged as a release is successful. For example, Travis supports publishing a Python package to PyPI or publishing a Node.js module to npm. Currently, there is no off-the-shelf support for publishing to a Service Fabric cluster, but it’s easy to implement it ourselves, as shown in the rest of this section.

First, ensure that the .travis.yml file that configures the Travis CI environment installs an up-to-date version of Docker and runs our script to publish to Service Fabric whenever a build for a tag succeeds:

    # the snippet below is an excerpt from the file .travis.yml

    # ensure that docker is available on the travis machine
    services:
      - docker

    # ensure that the docker version is up to date so that we're sure that
    # we can build any sort of docker-compose file that may be in the project
    # e.g., the default ubuntu docker version can't build v3 compose files
    sudo: required
    before_deploy:
      - sudo apt-get update
      - sudo apt-get -y -o Dpkg::Options::="--force-confnew" install docker-ce

    # wire-up the script to build the application and deploy it to service fabric
    # the script is run whenever a tag is added to the application's repository
    deploy:
      - provider: script
        script: deploy-to-service-fabric.sh
        on:
          repo: your_github_organization/your_repository_name
          tags: true
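The `tags: true` condition above restricts deployments to tag builds. As an extra safeguard, the deployment script referenced in the configuration could check this itself. The following is a minimal sketch, not part of the original script: it assumes the `TRAVIS_TAG` environment variable, which Travis CI sets to the tag name on tag builds and leaves empty otherwise, and the function name `require_tag_build` is hypothetical.

```shell
# hypothetical helper: succeed only when the current build is a tag build
# TRAVIS_TAG holds the tag name on tag builds and is empty for other builds
require_tag_build() {
  if [ -z "${TRAVIS_TAG:-}" ]; then
    echo "Not a tag build, skipping deployment" >&2
    return 1
  fi
  echo "Deploying release ${TRAVIS_TAG}"
}
```

A script could call `require_tag_build || exit 0` near the top so that an accidental invocation on a regular branch build ends without touching the cluster.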

Next, create the deploy-to-service-fabric.sh script referenced in the .travis.yml file. The script builds our application, publishes the built Docker images, and deploys the changes to the Service Fabric cluster:

    #!/usr/bin/env bash
    # the snippet below is an excerpt from the file deploy-to-service-fabric.sh

    # stop at the first failing command so that a broken build is never deployed
    set -e

    # double check that the details for how to connect to docker and the service
    # fabric cluster are provided; you can set these in the travis ui at
    # https://travis-ci.org/your_github_organization/your_repository_name/settings
    # the variables SERVICE_FABRIC_NAME and SERVICE_FABRIC_LOCATION should be set
    # to the values of $cluster_name and $location created during the initial
    # cluster setup described in our earlier article
    if [ -z "$DOCKER_USERNAME" ] || [ -z "$DOCKER_PASSWORD" ]; then
      echo "No docker credentials configured" >&2; exit 1
    fi
    if [ -z "$SERVICE_FABRIC_NAME" ] || [ -z "$SERVICE_FABRIC_LOCATION" ]; then
      echo "No service fabric credentials configured" >&2; exit 1
    fi

    # install the service fabric command line tool (sfctl), re-using the travis
    # python virtual environment to avoid having to set up a new one
    py_env="$HOME/virtualenv/python$TRAVIS_PYTHON_VERSION"
    pip="$py_env/bin/pip"
    sfctl="$py_env/bin/sfctl"
    ${pip} install sfctl

    # as during the initial deployment to the cluster, we must materialize
    # all environment variables used in the docker compose file
    compose_file="$(mktemp)"
    docker-compose config > "$compose_file"

    # create a new build of the application and publish it to the docker registry so
    # that the nodes in the service fabric cluster can find the updated application
    # this assumes that the travis build step didn't already create these assets
    # the password is passed via stdin to keep it out of the process list
    echo "$DOCKER_PASSWORD" | docker login --username "$DOCKER_USERNAME" --password-stdin
    docker-compose -f "$compose_file" build
    docker-compose -f "$compose_file" push

    # connect to the service fabric cluster using the certificate provided via the
    # travis encrypted file, expected as cert.pem in the working directory
    cert_file="cert.pem"
    sf_host="$SERVICE_FABRIC_NAME.$SERVICE_FABRIC_LOCATION.cloudapp.azure.com"
    REQUESTS_CA_BUNDLE="$cert_file" ${sfctl} cluster select \
      --endpoint "https://$sf_host:19080" \
      --pem "$cert_file" \
      --no-verify

    # deploy the new build to the service fabric cluster, in rolling-upgrade mode to
    # ensure up-time; the deployment will run asynchronously and you can monitor the
    # progress in the cluster management ui
    ${sfctl} compose upgrade \
      --deployment-name "$SERVICE_FABRIC_NAME" \
      --file-path "$compose_file"

The script above is general-purpose so it can be re-used for any application that is hosted on GitHub, uses Travis CI and deploys to Service Fabric. The script assumes two preconditions:

- The certificate required to connect to the Service Fabric cluster is made available under the name cert.pem in the script’s working directory

- Username and password credentials to publish Docker images are provided via environment variables
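Both preconditions can be checked up front so that a misconfigured build fails with a clear message before anything is built or pushed. The sketch below is one possible way to do this, not part of the original script; the function name `check_preconditions` is hypothetical, and the certificate path defaults to the `cert.pem` name mentioned above.

```shell
# hypothetical helper: report every unmet precondition and return non-zero if
# any is missing; the first argument is the certificate path (default cert.pem)
check_preconditions() {
  local cert_file="${1:-cert.pem}"
  local missing=0

  # the certificate must exist and must not be empty
  if [ ! -s "$cert_file" ]; then
    echo "Missing certificate: $cert_file" >&2
    missing=1
  fi

  # the docker credentials must be provided via environment variables
  if [ -z "${DOCKER_USERNAME:-}" ]; then
    echo "Missing environment variable: DOCKER_USERNAME" >&2
    missing=1
  fi
  if [ -z "${DOCKER_PASSWORD:-}" ]; then
    echo "Missing environment variable: DOCKER_PASSWORD" >&2
    missing=1
  fi

  return "$missing"
}
```

Reporting all missing items at once, rather than stopping at the first, saves a round trip through the CI system when several settings were forgotten.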

To satisfy the first precondition and make the Service Fabric certificate available to the continuous delivery script, we can use the Travis command line tool to encrypt the certificate and then commit the encrypted file to the GitHub repository, whose contents are always available during the Travis CI build:

    # encrypt the certificate using travis' key; this command will also modify
    # the .travis.yml configuration file to automatically decrypt the certificate
    # whenever a build is run
    travis encrypt-file cert.pem --add

    # make sure to never commit the original certificate!
    echo "cert.pem" >> .gitignore

    # commit the encrypted certificate to make it available to travis
    git add cert.pem.enc .travis.yml
    git commit -m "Adding deployment secret"
    git push

More information about encrypting files for Travis CI is available in the Travis documentation.
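Because the `echo "cert.pem" >> .gitignore` step appends unconditionally, repeating the setup adds duplicate entries. A small sketch of an idempotent variant follows; the function name `ignore_secret` and its optional second argument are hypothetical and only serve the illustration.

```shell
# hypothetical helper: add an entry to the ignore file unless it is already
# listed, so that re-running the setup doesn't produce duplicate entries
ignore_secret() {
  local secret="$1"
  local ignore_file="${2:-.gitignore}"

  # make sure the ignore file exists before searching it
  touch "$ignore_file"

  # -x matches whole lines only, -F treats the entry as a literal string
  if ! grep -qxF -- "$secret" "$ignore_file"; then
    echo "$secret" >> "$ignore_file"
  fi
}
```

Calling `ignore_secret cert.pem` from the repository root would then have the same effect as the `echo` command, no matter how often it is run.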

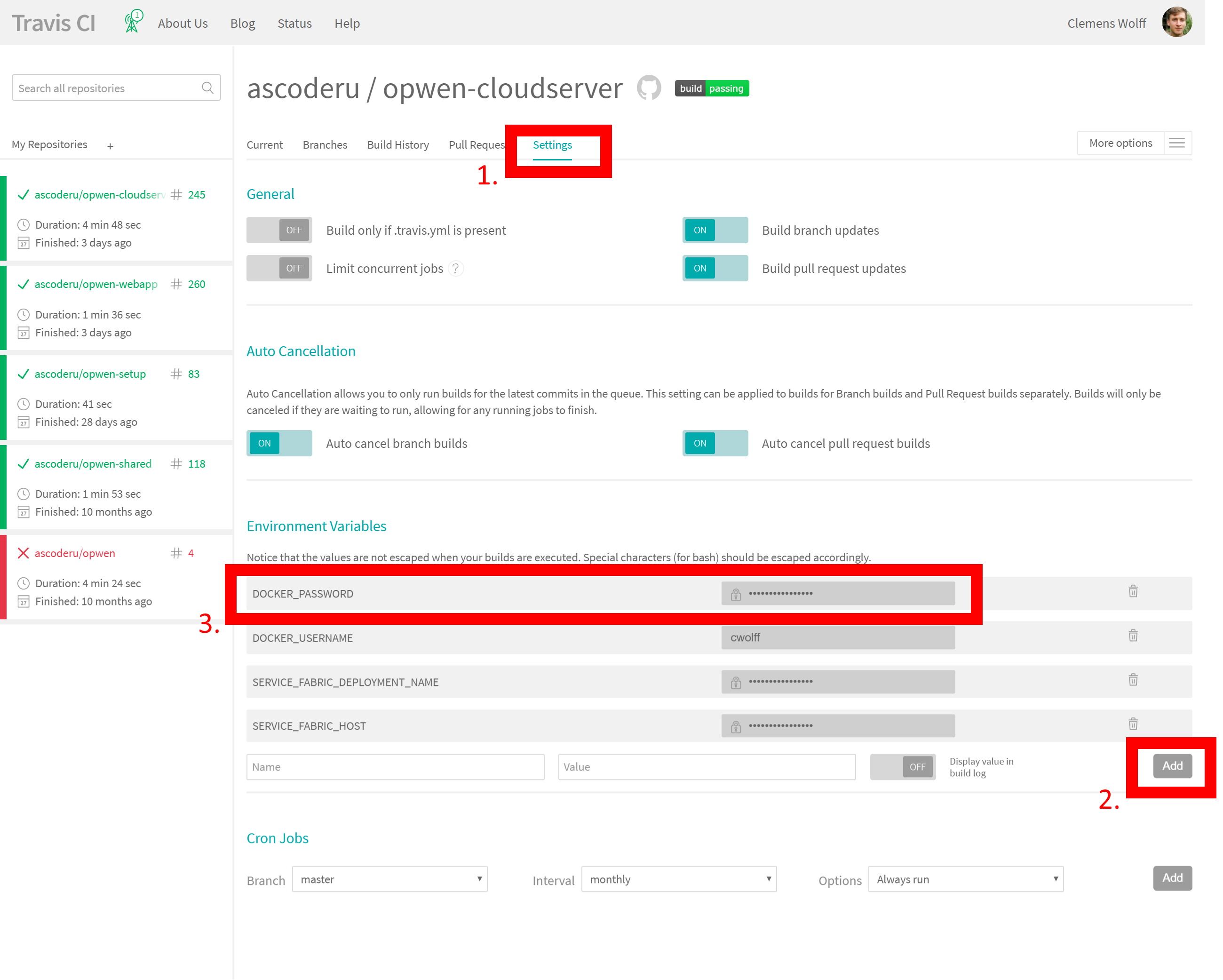

To satisfy the second precondition and make the Docker credentials available to the script, we use the Travis website to configure the required environment variables as shown in the screenshot below.

More information about providing environment variables to Travis CI is available in the Travis documentation.

With the deployment script set up, the cluster certificate made available to Travis CI, and Docker credentials provided via environment variables, our continuous delivery pipeline is complete. Whenever a tag gets added to the repository (e.g., by authoring a release on GitHub via https://github.com/your_github_organization/your_repository_name/releases/new), the changes will be automatically built, new Docker images will be published, and Service Fabric will take care of upgrading the running applications to reflect the new images (see the sample log for a release build in the Ascoderu project). Note that Service Fabric uses a rolling upgrade mechanism to ensure availability of the service during the deployment.
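Since the pipeline is driven by tags, a release can also be started from the command line by pushing a tag, assuming tag builds are not excluded in the Travis settings. The following sketch illustrates this; the `release` function, the remote name `origin`, and the `GIT` override used for dry runs are assumptions made for the example.

```shell
# hypothetical helper: create an annotated tag and push it to the remote named
# "origin" (an assumption); set GIT=echo to print the git arguments instead
release() {
  local version="$1"
  local git="${GIT:-git}"

  # only push when the tag was created successfully
  "$git" tag -a "$version" -m "Release $version" &&
    "$git" push origin "$version"
}
```

For instance, `release v1.0.0` would tag the current commit and push the tag, which is the same event that authoring a release on the GitHub page produces.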

Summary

This code story covered how to create a continuous delivery pipeline for Service Fabric via GitHub and Travis CI. Using this setup, the Ascoderu non-profit is now able to deploy changes to production with a simple one-click workflow driven by GitHub releases. This method enables anyone in Ascoderu to easily and confidently author releases, including developers new to the project.

The approach outlined in this article is general-purpose and therefore reusable for any project that is hosted on GitHub and uses Docker Compose with Azure Service Fabric. Try it in your project and tell us about your results in the comments below!

Resources

- Service Fabric-enabled Travis CI continuous delivery script for the Ascoderu project

- Article on how to deploy Linux Python applications to Service Fabric via Docker Compose

- More information about Service Fabric