Introduction

In late 2021, a large customer from the process manufacturing industry approached CSE with an interesting challenge that led to the findings summarized in this blog post. Their development team was implementing a custom lab device management tool that would both orchestrate the deployment of their Azure IoT Edge workloads and manage the host systems. While deploying containerized applications was a well-understood problem, keeping the underlying operating systems properly patched and configured was a different matter altogether.

Prior to our engagement, the customer had selected Azure IoT with OSConfig as the preferred solution for host-level configuration. As you may have guessed, they asked us to help them design a way to use the same tool for package management. But why use Azure IoT and OSConfig instead of one of the many well-established server configuration management tools out there?

This post will guide you through the train of thought that led us to the development of a custom OSConfig module named Package Manager Configuration.

Why OSConfig?

OSConfig’s prime focus is to allow device-specific host-level configuration and observability. It uses an agent-based approach that piggybacks on the secure bidirectional communication channel established by Azure IoT Hub clients. As a consequence, IoT devices running OSConfig can receive commands relayed through Azure IoT Hub without exposing any additional inbound communication port.

Unlike servers hosted in controlled environments, IoT devices are generally expected to cope with intermittent network connectivity by design. Another difference specific to IoT scenarios is the sheer number of devices to be managed. OSConfig’s native use of desired and reported twin properties is an invaluable feature in both respects. By using the asynchronous messaging channels powered by IoT Hub, OSConfig provides the same host-level access as established SSH-based tools, with the added bonus of offline resiliency, IoT-grade scalability, and uncompromising security.

Implementing desired states

As opposed to image-based approaches to device management, any incremental strategy requires a carefully designed concept for capturing a desired system state. Defining and reaching a desired state goes well beyond running a sequence of apt install package-x commands, also known as the imperative approach. Since it is not reasonable to expect every IoT device to be in the same state at the same time, an idempotent and declarative way to describe a desired state becomes indispensable.
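The difference between the two approaches can be illustrated with a small, purely in-memory Python sketch. Instead of issuing install commands, the sketch compares a declarative desired state with the current inventory and acts only on the differences. Running the same reconciliation a second time yields an empty plan, which is what idempotence means in practice. The names plan_actions and apply_actions and the dictionary format are hypothetical, and the sketch does not call APT or OSConfig.

```python
from typing import Dict, List, Tuple

# Hypothetical model: a desired state maps package names to exact versions.
PackageState = Dict[str, str]
Action = Tuple[str, str, str]  # (action, package, version)


def plan_actions(desired: PackageState, installed: PackageState) -> List[Action]:
    """Compute the steps that move `installed` towards `desired`.

    Packages the desired state does not mention are left untouched.
    """
    actions: List[Action] = []
    for name, version in sorted(desired.items()):
        current = installed.get(name)
        if current is None:
            actions.append(("install", name, version))
        elif current != version:
            actions.append(("change", name, version))
    return actions


def apply_actions(installed: PackageState, actions: List[Action]) -> PackageState:
    """Simulate the plan on an in-memory inventory (no APT calls are made)."""
    result = dict(installed)
    for _action, name, version in actions:
        result[name] = version
    return result


if __name__ == "__main__":
    desired = {"foo": "1.1.1", "bar": "1.2.3"}
    device = {"foo": "1.0.0"}
    plan = plan_actions(desired, device)
    print(plan)  # [('install', 'bar', '1.2.3'), ('change', 'foo', '1.1.1')]
    device = apply_actions(device, plan)
    print(plan_actions(desired, device))  # [] : reconciling again changes nothing
```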

Installing individual packages with APT gives no guarantee of idempotence by default. When resolving package dependencies automatically, APT installs the highest available version by default. The version that actually ends up on a device therefore depends on which packages are visible to that device at the moment the installation command runs. As we progressed through the engagement, we determined that achieving true idempotence with APT required a combination of three different techniques:

- the version of all packages to be installed, including their dependencies, can be specified explicitly, e.g. by running `apt-get install foo=1.1.1 bar=1.2.3`

- the package source repositories and their contents can be controlled by hosting private package repositories using tools like aptly or Artifactory

- APT preferences can be configured to force APT to install specific package versions regardless of what other packages are available
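Technique #1 boils down to building an install command in which every package carries an explicit version, including all transitive dependencies. The following Python sketch assumes a hypothetical desired state given as a name-to-version mapping. It only builds the argument list and does not execute it.

```python
import shlex
from typing import Dict, List


def pinned_install_command(packages: Dict[str, str]) -> List[str]:
    """Build an apt-get invocation that requests every package at an exact version.

    `packages` must list *all* packages, including transitive dependencies;
    otherwise APT is still free to pick the highest visible version for the rest.
    """
    if not packages:
        raise ValueError("at least one package is required")
    # Sorting keeps the command stable: the same desired state yields the same command.
    specs = [f"{name}={version}" for name, version in sorted(packages.items())]
    return ["apt-get", "install", "--yes", *specs]


if __name__ == "__main__":
    cmd = pinned_install_command({"foo": "1.1.1", "bar": "1.2.3"})
    print(shlex.join(cmd))  # apt-get install --yes bar=1.2.3 foo=1.1.1
```

Because the specifications are sorted, identical desired states always produce identical commands. This makes the generated commands easy to compare across devices.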

Combining the strategies above makes incremental, package-based updates idempotent, but at the price of a complexity that grows with every technique added.

Applying technique #1 is the easiest way to declare desired packages using OSConfig. However, the list of packages might grow over time until it exceeds the maximum allowed device twin size. This is where we came up with an approach that makes use of a feature inherent to DEB packages: dependency relationships. Every package can declare dependencies that need to be resolved during its installation. This concept can be used to capture a desired system state by listing all required packages in a special metadata-only package, which we called the “dependency package”. With this declarative approach, desired states are no longer limited by the size restrictions of the underlying communication channel.

Even though dependencies can, in theory, be pinned to exact versions in the control file of a dependency package, APT does not use these exact versions to steer its dependency resolution during installation. This raises the question of how to nudge the resolution in the right direction. This is where technique #2 comes to the rescue and makes the installation of dependencies deterministic.
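A dependency package needs little more than a DEBIAN/control file. The Python sketch below renders one. The package name, version, and maintainer are made-up placeholders. The exact-version constraints in the Depends field only become effective in combination with technique #2 or #3, as explained above.

```python
from typing import Dict


def dependency_control(name: str, version: str, depends: Dict[str, str],
                       maintainer: str = "Device Team <devices@example.com>") -> str:
    """Render a DEBIAN/control file for a metadata-only "dependency package".

    All values here are hypothetical placeholders for illustration.
    """
    depends_field = ", ".join(f"{pkg} (= {ver})" for pkg, ver in sorted(depends.items()))
    fields = [
        ("Package", name),
        ("Version", version),
        ("Architecture", "all"),  # no binaries inside, so architecture-independent
        ("Maintainer", maintainer),
        ("Depends", depends_field),
        ("Description", "Desired package state for edge devices"),
    ]
    return "".join(f"{key}: {value}\n" for key, value in fields)


if __name__ == "__main__":
    print(dependency_control("edge-desired-state", "1.0.0",
                             {"foo": "1.1.1", "bar": "1.2.3"}), end="")
```

Saved as DEBIAN/control in an otherwise empty package directory, the file can be turned into a .deb with dpkg-deb --build <directory>.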

In our concrete case, a self-hosted instance of Artifactory was already in use as a repository for build artifacts. This made it an ideal solution for hosting dependency packages and all referenced dependencies. Before they can access private package repositories, edge devices need to be configured accordingly. On Debian-based systems, this is best achieved by adding a source file to /etc/apt/sources.list.d/ as described here.
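A source file of this kind typically contains a single deb line. The Python sketch below renders such an entry and writes it to a directory of your choice. The Artifactory URL, suite, component, and keyring path are hypothetical placeholders. On a real device, the file would go to /etc/apt/sources.list.d/, which requires root, followed by an apt-get update.

```python
import tempfile
from pathlib import Path
from typing import Iterable

# Hypothetical repository coordinates; replace with the real Artifactory repository.
REPO_URL = "https://artifactory.example.com/artifactory/debian-local"
KEYRING = "/usr/share/keyrings/private-repo.gpg"


def apt_source_line(url: str, suite: str, components: Iterable[str], keyring: str) -> str:
    """Render a one-line-style APT source entry restricted to a single signing key."""
    return f"deb [signed-by={keyring}] {url} {suite} {' '.join(components)}\n"


def write_source_file(directory: Path, name: str, line: str) -> Path:
    """Write the entry as <name>.list, the suffix APT expects for one-line-style files."""
    path = directory / f"{name}.list"
    path.write_text(line)
    return path


if __name__ == "__main__":
    line = apt_source_line(REPO_URL, "focal", ["main"], KEYRING)
    with tempfile.TemporaryDirectory() as tmp:  # stand-in for /etc/apt/sources.list.d/
        print(write_source_file(Path(tmp), "private-repo", line).read_text(), end="")
```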

With private repositories at our disposal, controlling their contents could have been enough to fulfil our customer’s immediate requirements. But what happens if any of the customer’s edge devices were to source their packages from a public package repository (or a synced mirror) in the future? This would again break the idempotence of the update procedure described so far. Enter technique #3, the ultimate lever to make incremental updates deterministic with both private and public package repositories.

By modifying the preferences that govern APT’s dependency resolution, each package listed in a dependency package can be pinned to an exact version. These preferences can be deployed through a custom “pinning package”, which essentially contains an APT preferences file that is placed under /etc/apt/preferences.d/.
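The preferences file shipped by a pinning package consists of one stanza per package. The following Python sketch generates it from a hypothetical name-to-version mapping. The priority of 1001 is our choice for this sketch. According to the apt_preferences(5) manual page, a priority of 1000 or more makes APT install the pinned version even if that means a downgrade.

```python
from typing import Dict

# Priority >= 1000 lets APT install the pinned version even if it is a downgrade.
PIN_PRIORITY = 1001


def pinning_preferences(versions: Dict[str, str], priority: int = PIN_PRIORITY) -> str:
    """Render an APT preferences file that pins each package to an exact version."""
    stanzas = [
        f"Package: {name}\nPin: version {version}\nPin-Priority: {priority}\n"
        for name, version in sorted(versions.items())
    ]
    # Stanzas in an APT preferences file are separated by blank lines.
    return "\n".join(stanzas)


if __name__ == "__main__":
    print(pinning_preferences({"foo": "1.1.1", "bar": "1.2.3"}), end="")
```

Inside the pinning package, the generated text would be installed as a file under /etc/apt/preferences.d/. APT only reads files there that have no extension or a .pref extension.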

While packages can easily be added through dependency relationships, removing packages needs special consideration. Packages that were installed explicitly, rather than as a dependency of another package, are not covered by APT’s “autoremove” option. Such packages are typically the ones that come preinstalled with the base image. This is where conflict relationships can be leveraged: declaring packages to be removed as “conflicting packages” makes APT remove them automatically while installing the latest dependency package.
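Deciding which packages to declare as conflicting can be partly automated. The hypothetical helper below is not part of any tool mentioned here. It compares the packages marked as manually installed, as listed by apt-mark showmanual, with the desired ones. A plain set difference is naive because it does not know which manually installed packages are still needed as dependencies. A keep list is therefore required, and the result should be reviewed before it ends up in a Conflicts field.

```python
from typing import Iterable


def conflicts_field(manually_installed: Iterable[str], desired: Iterable[str],
                    keep: Iterable[str] = ()) -> str:
    """Build a Conflicts field for manually installed packages that should be removed.

    manually_installed: package names, e.g. one per line from `apt-mark showmanual`.
    desired: packages the dependency package lists under Depends.
    keep: packages that must survive anyway (e.g. dependencies of desired packages).
    """
    to_remove = set(manually_installed) - set(desired) - set(keep)
    if not to_remove:
        return ""
    return "Conflicts: " + ", ".join(sorted(to_remove)) + "\n"


if __name__ == "__main__":
    showmanual = "foo\nnano\nopenssh-server\nvim\n"  # made-up sample output
    print(conflicts_field(showmanual.split(), ["foo", "bar"], keep=["openssh-server"]), end="")
```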

An example implementation of dependency and pinning packages can be found here.

Why build a new OSConfig module?

OSConfig’s CommandRunner module is readily available and allows the execution of any ad hoc shell command, which raises the question of whether building a new module is required at all. To answer it, let us have a look at the entire process involved in updating an edge device.

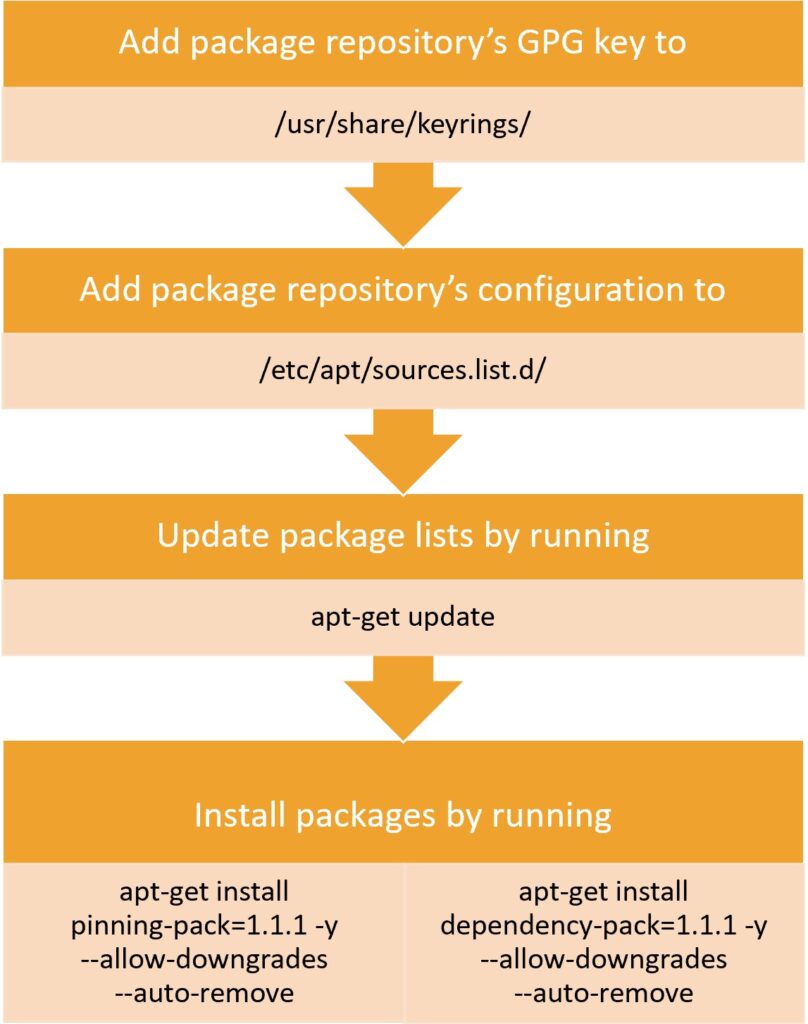

As the installation process above shows, four distinct commands are required to configure the package sources and to install the pinning and dependency packages. Since every communication round-trip can be delayed by network interruptions, a workflow engine is required to keep track of the progress of every command on every targeted device.
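To give an idea of what such a workflow engine has to track, here is a minimal in-memory Python sketch. The four step names are hypothetical labels for the commands in the diagram. A real implementation would persist this state and relay the commands to the devices. The point is that late or duplicated acknowledgements must never advance a device twice.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical labels for the four commands of the update process.
STEPS: List[str] = [
    "add-package-source",
    "refresh-package-index",
    "install-pinning-package",
    "install-dependency-package",
]


@dataclass
class DeviceProgress:
    completed: int = 0                 # number of steps acknowledged as successful
    failed_step: Optional[str] = None  # set once a step reports failure


@dataclass
class RolloutTracker:
    devices: Dict[str, DeviceProgress] = field(default_factory=dict)

    def next_step(self, device_id: str) -> Optional[str]:
        """Return the command to send next, or None if the device is done or failed."""
        progress = self.devices.setdefault(device_id, DeviceProgress())
        if progress.failed_step is not None or progress.completed == len(STEPS):
            return None
        return STEPS[progress.completed]

    def acknowledge(self, device_id: str, step: str, succeeded: bool) -> None:
        """Record a (possibly late or duplicated) result reported by a device."""
        progress = self.devices.setdefault(device_id, DeviceProgress())
        if step != self.next_step(device_id):
            return  # stale or duplicate acknowledgement: ignore it
        if succeeded:
            progress.completed += 1
        else:
            progress.failed_step = step


if __name__ == "__main__":
    tracker = RolloutTracker()
    tracker.acknowledge("device-a", "add-package-source", succeeded=True)
    tracker.acknowledge("device-a", "add-package-source", succeeded=True)  # duplicate, ignored
    print(tracker.next_step("device-a"))  # refresh-package-index
```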

Another aspect to consider is observability. Monitoring a device’s package state could also be done through a sequence of suitable CommandRunner commands. However, this would mean actively sending requests to, and receiving responses from, each and every device. The process becomes much simpler if every device actively reports its own state.
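On the device side, collecting the package state is cheap. The following Python sketch assumes a Debian-based system, and its helper names are hypothetical. It reads the dpkg database with dpkg-query and keeps only fully installed packages. The resulting mapping is what a device could report on its own instead of being polled.

```python
import subprocess
from typing import Dict

# One line per package: name, version and the abbreviated dpkg status (e.g. "ii ").
QUERY = [
    "dpkg-query",
    "--show",
    "--showformat=${Package}\t${Version}\t${db:Status-Abbrev}\n",
]


def parse_inventory(output: str) -> Dict[str, str]:
    """Turn dpkg-query output into {package: version}, keeping installed packages only."""
    inventory: Dict[str, str] = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        name, version, status = line.split("\t")
        if status.startswith("ii"):  # selected for install and fully installed
            inventory[name] = version
    return inventory


def read_inventory() -> Dict[str, str]:
    """Query the local dpkg database; only works on Debian-based systems."""
    result = subprocess.run(QUERY, check=True, capture_output=True, text=True)
    return parse_inventory(result.stdout)


if __name__ == "__main__":
    sample = "foo\t1.1.1\tii \nbar\t1.2.3\trc \n"  # made-up sample output
    print(parse_inventory(sample))  # {'foo': '1.1.1'}
```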

For the reasons mentioned above, extending OSConfig with a purpose-built module was justified, which resulted in the creation of the Package Manager Configuration (PMC) module. Note our use of space-saving fingerprints (hashes) to reflect a device’s current state in terms of installed packages and configured package repositories. These fingerprints make it possible to monitor large fleets of devices for version drift: any diverging package version or source entry can be identified by comparing the reported fingerprints against the expected ones. Naturally, the expected fingerprints need to be precomputed on a reference or canary device before the latest desired state is deployed.
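The post does not detail how PMC computes its fingerprints, so the following Python sketch rests on an assumption. It hashes a canonical, sorted name=version listing with SHA-256, which makes the result independent of the order in which packages are reported. It then flags devices whose reported fingerprint differs from the one computed on the reference device. The device IDs are made up, and the same approach would work for the list of configured source entries.

```python
import hashlib
from typing import Dict, List


def fingerprint(entries: Dict[str, str]) -> str:
    """Hash a {name: version} mapping; the result does not depend on input order."""
    canonical = "\n".join(f"{name}={version}" for name, version in sorted(entries.items()))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def drifted_devices(reported: Dict[str, str], expected: str) -> List[str]:
    """Return the IDs of devices whose reported fingerprint differs from the expected one."""
    return sorted(device for device, value in reported.items() if value != expected)


if __name__ == "__main__":
    # Expected value computed on a (hypothetical) reference device.
    expected = fingerprint({"foo": "1.1.1", "bar": "1.2.3"})
    reported = {
        "device-a": fingerprint({"bar": "1.2.3", "foo": "1.1.1"}),
        "device-b": fingerprint({"foo": "1.1.1", "bar": "1.2.4"}),
    }
    print(drifted_devices(reported, expected))  # ['device-b']
```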

Keeping the initial image consistent

Over time, different situations might call for reflashing a device:

- a repurposed edge device requires a hard reset

- a malfunctioning device needs replacing

- a new batch of devices is added to the fleet

While package manager-based updates can bring a device into the desired state even from an outdated base image, the update process can become rather lengthy as the version gap grows. Base images should therefore be updated occasionally to keep these deltas small.

Through the use of dependency packages, any immutable base image can be updated to the latest system state as found in the field. You can find instructions on how to automate the Ubuntu image generation for Raspberry Pis here. The referenced approach can easily be extended with custom installation steps that make use of pinning and dependency packages.

Conclusion

The most pivotal questions when implementing a declarative configuration strategy are:

- how to describe a desired state

- how to send the state description to targeted devices

- how to interpret the state description in an idempotent way

This blog post suggests using dependency packages to capture desired states and pinning packages to ensure idempotence. While many agent- and SSH-based server management solutions exist, the seamless integration with IoT Hub gives OSConfig a decisive edge for IoT scenarios that have strict requirements in terms of scalability, security and offline resiliency.

Team Members

The following CSE members (alphabetical order) contributed to the success of the solution described in this blog post:

- Alexander Gassmann (blog post author)

- Anitha Venugopal

- Francesca Longoni

- Ioana Amarande

- Machteld Bögels

- Magda Baran

- Ossi Ottka

- Simon Jäger

- Syed Hassaan Ahmed

- Ville Rantala

- Zoya Alexeeva