TL;DR

Models: GPT‑5 family now in Azure AI Foundry (gpt‑5 requires registration; launch regions: East US 2, Sweden Central). Also new: Sora API updates (image→video, inpainting; Global Standard in East US 2 and Sweden Central), Mistral Document AI (OCR), Black Forest Labs FLUX.1 Kontext [pro] and FLUX1.1 [pro], OpenAI gpt‑oss (with Foundry Local support), and VibeVoice long‑form TTS (coming soon).

Agents: Browser Automation tool (public preview) and expanded Agent Service regional availability (Brazil South, Germany West Central, Italy North, South Central US).

Tools: Browser Automation integrates with Microsoft Playwright Testing Workspaces; refreshed MCP samples; updated Deep Research guidance and samples.

Platform: Model Router adds GPT‑5 support (limited access); Responses API is GA. August updates across Python, .NET, Java, and JavaScript/TypeScript; Agent Service Java SDK enters public preview. Doc updates include a new status dashboard (Preview), API lifecycle v1 guidance, updated quotas/limits (incl. GPT‑5 and Model Router), tracing/observability, CMK clarifications, an enterprise chat web app tutorial, and region/model availability matrices.

Models

GPT‑5 arrives: models, pricing, regions, and access

gpt-5, gpt-5-mini, gpt-5-nano, and gpt-5-chat are now available in Azure AI Foundry. Registration is required for gpt-5 access; mini, nano, and chat do not require registration.

Details

- Roles and context windows:

  - gpt-5: next‑gen reasoning with long horizon tasks; up to ~272K tokens context.
  - gpt-5-chat: multimodal conversational model; ~128K tokens context.
  - gpt-5-mini: fast, tool‑calling/real‑time friendly baseline.
  - gpt-5-nano: ultra‑low‑latency Q&A and lightweight tasks.

- Provisioned throughput: gpt-5 supports provisioned throughput (PTUs); mini/nano/chat are available as standard deployments.

- Deployment options: Global and Data Zone (US, EU).

- API: Available via the v1 Responses API (recommended) and Chat Completions.

Freeform tool calling

Freeform tool calling in GPT‑5 enables the model to send raw text payloads like Python scripts, SQL queries, or configuration files directly to external tools without needing to wrap them in structured JSON—eliminating rigid schemas and reducing integration overhead. In this episode, April shows how the model executes model‑generated SQL to compute results, routes the output to Python for formatting and visualization, and returns reproducible artifacts (CSVs, charts) from a natural‑language prompt.
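To make the idea concrete, here is a minimal sketch of the tool side of a freeform call: the handler receives a raw SQL string rather than a JSON arguments object. The `"type": "custom"` tool shape follows OpenAI's custom-tool format as we understand it and should be checked against the current Azure docs. The tool name `run_sql`, the guardrail, and the sample table are hypothetical, and the model's payload is replaced by a hard-coded string so the example runs offline.

```python
import sqlite3

# Assumed tool definition: a "custom" tool takes raw text instead of JSON
# arguments. The name and description here are hypothetical.
SQL_TOOL = {
    "type": "custom",
    "name": "run_sql",
    "description": "Runs a single SQLite SELECT query and returns rows as CSV.",
}


def run_sql(conn: sqlite3.Connection, raw_sql: str) -> str:
    """Execute the model's raw SQL payload and return the result as CSV text."""
    statement = raw_sql.strip().rstrip(";")
    # Guardrail: accept one SELECT only, because the payload is model-generated.
    if ";" in statement or not statement.lower().startswith("select"):
        raise ValueError("only a single SELECT statement is allowed")
    cursor = conn.execute(statement)
    header = ",".join(column[0] for column in cursor.description)
    rows = [",".join(str(value) for value in row) for row in cursor.fetchall()]
    return "\n".join([header, *rows])


def demo() -> str:
    """Build a tiny in-memory table and run a stand-in freeform payload on it."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("east", 120.0), ("west", 80.0), ("east", 30.0)],
    )
    # Stand-in for the text the model would send in a freeform tool call.
    payload = (
        "SELECT region, SUM(amount) AS total FROM sales "
        "GROUP BY region ORDER BY region;"
    )
    return run_sql(conn, payload)


if __name__ == "__main__":
    print(demo())
```

In a real integration, the CSV string would be returned to the model as the tool output, and `SQL_TOOL` would be passed in the request's `tools` list.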

Try it yourself in Azure AI Foundry:

Pricing (per 1M tokens)

| Model | Input (G) | Cached input (G) | Output (G) | Input (DZ) | Cached input (DZ) | Output (DZ) |
|---|---|---|---|---|---|---|
| gpt‑5 | $1.25 | $0.125 | $10.00 | $1.375 | $0.1375 | $11.00 |
| gpt‑5‑mini | $0.25 | $0.025 | $2.00 | $0.275 | $0.0275 | $2.20 |
| gpt‑5‑nano | $0.05 | $0.005 | $0.40 | $0.055 | $0.0055 | $0.44 |
| gpt‑5‑chat | $1.25 | $0.125 | $10.00 | N/A | N/A | N/A |

Legend: G = Global. DZ = Data Zone (US, EU).
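As a rough worked example of how these rates combine, the sketch below estimates the cost of one request on a Global deployment. It assumes cached tokens are a subset of the input tokens and are billed at the cached-input rate instead of the regular input rate. The function name and the token counts are hypothetical, and actual billing may differ.

```python
# USD per 1M tokens for Global deployments, copied from the pricing table.
GLOBAL_PRICES = {
    "gpt-5": {"input": 1.25, "cached_input": 0.125, "output": 10.00},
    "gpt-5-mini": {"input": 0.25, "cached_input": 0.025, "output": 2.00},
    "gpt-5-nano": {"input": 0.05, "cached_input": 0.005, "output": 0.40},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  cached_tokens: int = 0) -> float:
    """Estimate the USD cost of one request.

    cached_tokens is the portion of input_tokens served from the prompt cache
    (an assumption about how the cached rate applies).
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    price = GLOBAL_PRICES[model]
    uncached_tokens = input_tokens - cached_tokens
    total_per_million = (
        uncached_tokens * price["input"]
        + cached_tokens * price["cached_input"]
        + output_tokens * price["output"]
    )
    return total_per_million / 1_000_000


# 100K input tokens (40K of them cached) and 10K output tokens on gpt-5.
print(f"${estimate_cost('gpt-5', 100_000, 10_000, cached_tokens=40_000):.2f}")
```

With these numbers, the output tokens account for more than half of the estimate even though they are a tenth of the volume, which is why trimming verbose outputs often matters more than trimming prompts.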

Regions & access

- Regions (at launch): eastus2 and swedencentral

- Access: Registration is required for gpt-5 (request access). If you already have o3 access, no additional request is required. gpt‑5‑mini, gpt‑5‑nano, and gpt‑5‑chat do not require registration.

Model router: GPT‑5 series support

The model router now supports dynamic selection across the GPT‑5 family for cost and quality optimization. Access is limited for the latest router version—request via the GPT‑5 access form (if you already have o3 access, no additional request is required). When the router selects a reasoning model, some parameters (for example, temperature/top_p) may be ignored; reasoning_effort isn’t settable through the router. Billing is based on the underlying model the router selects.
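Because billing follows the underlying model, it can help to log which model handled each request. The sketch below assumes each response exposes the selected model in its `model` field and token counts in `usage.total_tokens`, as Chat Completions responses do. The helper name is hypothetical, and the stand-in objects and model names are illustrative only.

```python
from collections import Counter
from types import SimpleNamespace


def tally_tokens_by_model(responses):
    """Sum total tokens per underlying model reported on each response."""
    totals = Counter()
    for response in responses:
        totals[response.model] += response.usage.total_tokens
    return dict(totals)


# Stand-in objects shaped like Chat Completions responses; the model names
# are illustrative, not values the router is guaranteed to return.
fake_responses = [
    SimpleNamespace(model="gpt-5-mini", usage=SimpleNamespace(total_tokens=120)),
    SimpleNamespace(model="gpt-5", usage=SimpleNamespace(total_tokens=900)),
    SimpleNamespace(model="gpt-5-mini", usage=SimpleNamespace(total_tokens=80)),
]

print(tally_tokens_by_model(fake_responses))
```

Passing real responses from a router deployment in place of `fake_responses` would give a per-model token breakdown to compare against your bill.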

Quickstart: route with Chat Completions (Python)

    import os

    from openai import OpenAI

    # The OpenAI client targets the Azure OpenAI v1 endpoint (note the /openai/v1/ path).
    client = OpenAI(
        api_key=os.getenv("AZURE_OPENAI_API_KEY"),
        base_url="https://YOUR-RESOURCE-NAME.openai.azure.com/openai/v1/",
    )

    # Replace with your model router deployment name
    response = client.chat.completions.create(
        model="model-router",
        messages=[
            {"role": "user", "content": "Write a two-sentence product description for a smart thermostat."}
        ],
    )

    print(response.choices[0].message.content)

Watch: Model Router demo

Black Forest Labs FLUX image models

FLUX.1 Kontext [pro] and FLUX1.1 [pro] are now available “Direct from Azure” in Foundry Models. Kontext is multimodal—text-to-image plus in‑context image editing—supporting edit instructions, character/style/object reference without fine‑tuning, and robust multi‑step refinements with minimal drift (up to ~8× faster at 1024×1024). FLUX1.1 [pro] focuses on text‑to‑image generation only, achieves top-tier Elo on Artificial Analysis (evaluated as “blueberry”), and is significantly faster than earlier FLUX releases, with an Ultra mode up to 4 MP.

Model details

| Model | Performance notes | Resolution / modes | Regions | Pricing (per 1K images) |
|---|---|---|---|---|
| FLUX.1 Kontext [pro] | Up to 8× faster at 1024×1024; robust multi‑edit consistency | 1024×1024 (default) | swedencentral, eastus2 | $40 |
| FLUX1.1 [pro] | High Elo on Artificial Analysis; ~10s for 4 MP; up to 6× faster than FLUX 1‑pro* | Up to 4 MP (Ultra mode) | swedencentral, eastus2 | $40 |

*Performance depends on configuration.

Get started

- Find them under Foundry Models → “Direct from Azure”; deploy to get an endpoint/key and try them in the Image Playground.

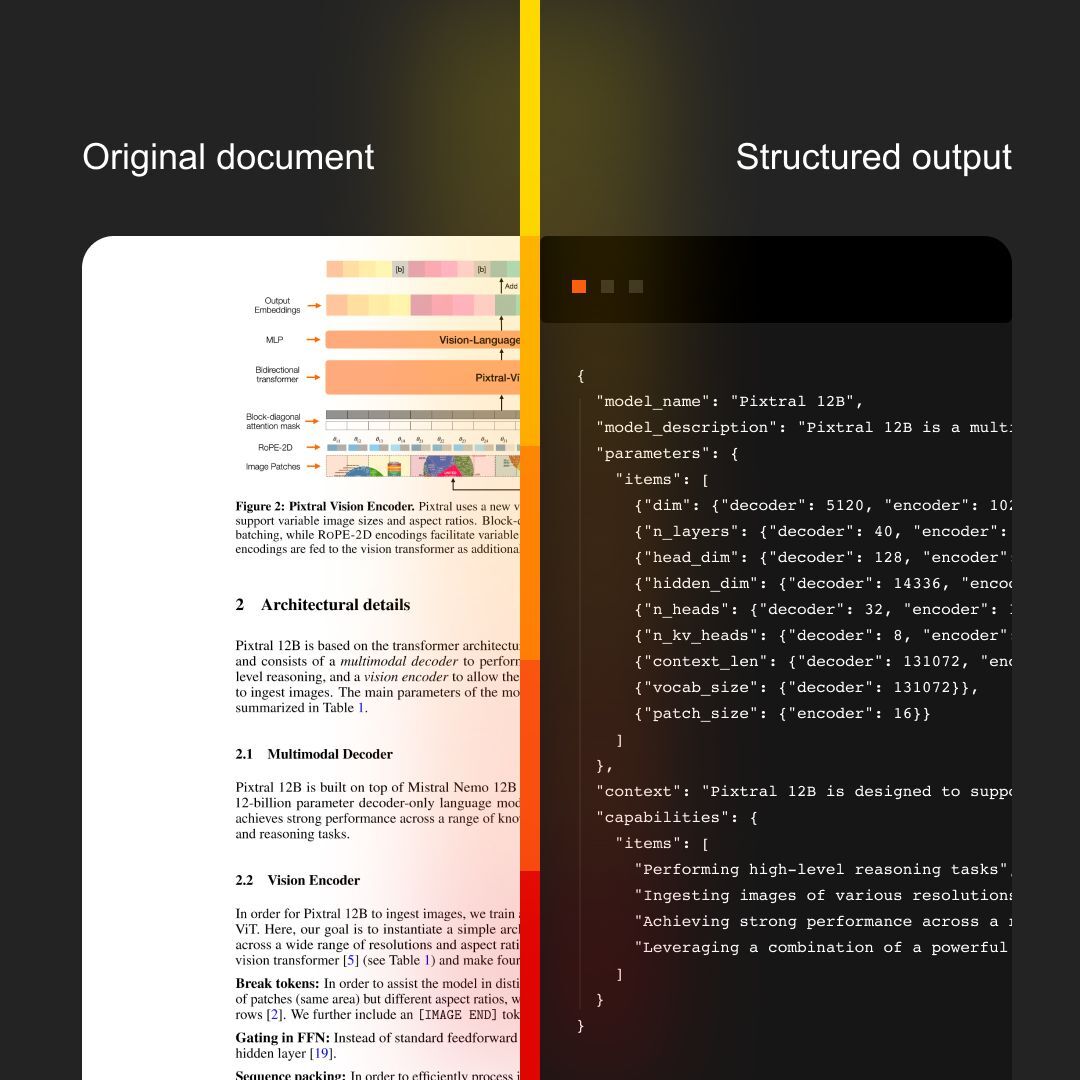

Mistral Document AI (OCR) — serverless in Foundry

Unlock high‑fidelity, layout‑aware document understanding with structured outputs. Mistral Document AI combines vision + language to preserve tables, figures, and headings; returns structured JSON and markdown‑like tables; and supports multilingual scans, PDFs, and complex forms. It’s sold “Direct from Azure” and deploys in one click as a serverless endpoint in Azure AI Foundry.

Source: @MistralAI post 2025-08-18

Watch: Mistral Document AI demo

See how Mistral Document AI parses complex PDFs, preserves layout, and returns structured JSON — including tables, headings, and figures. The demo walks through serverless inference in Azure AI Foundry and exporting results for downstream RAG and automation workflows.

Details

| Model | Global | Data Zone | Regions |
|---|---|---|---|
| mistral-document-ai-2505 | $3.00 | $3.30 | eastus2, swedencentral |

Quickstart (Python, serverless REST)

    import base64
    import os

    import requests

    endpoint = os.environ["MISTRAL_DOC_AI_ENDPOINT"]  # e.g., https://{project}.eastus2.models.ai.azure.com/inference
    api_key = os.environ["MISTRAL_DOC_AI_KEY"]

    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }

    # Send the PDF inline as a base64 data URL
    with open("sample.pdf", "rb") as f:
        encoded = base64.b64encode(f.read()).decode("utf-8")

    payload = {
        "model": "mistral-document-ai-2505",
        "document": {
            "type": "document_url",
            "document_url": f"data:application/pdf;base64,{encoded}",
        },
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=120)
    response.raise_for_status()  # fail early on HTTP errors
    print(response.json()["pages"][0]["markdown"])  # first page as markdown

Get started with these mistral/python Foundry samples in our GitHub repo.
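The quickstart prints only the first page. For downstream RAG or export, you will usually want the whole document. The sketch below assumes every entry in `pages` carries a `markdown` field, as the quickstart's indexing suggests. The helper name and the page separator are hypothetical choices.

```python
def pages_to_markdown(ocr_result: dict, separator: str = "\n\n---\n\n") -> str:
    """Join the markdown of every page, in order, into one document string."""
    pages = ocr_result.get("pages", [])
    # Pages without a "markdown" field contribute an empty string.
    return separator.join(page.get("markdown", "") for page in pages)


# Hand-written stand-in for response.json(); a real result has more fields.
sample_result = {
    "pages": [
        {"markdown": "# Invoice 42"},
        {"markdown": "| Item | Qty |\n|---|---|\n| Widget | 3 |"},
    ]
}

print(pages_to_markdown(sample_result))
```

In the quickstart, `pages_to_markdown(response.json())` would replace the final `print` call's argument.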

Sora API — new updates (Preview)

We’ve rolled out powerful new capabilities in Sora that unlock even more creative potential:

What’s new

- Image‑to‑Video support via API, including frame indexing and region‑specific inpainting.

- Global Standard expansion: Sora is now available in East US 2 and Sweden Central under the Global Standard SKU.

Get started

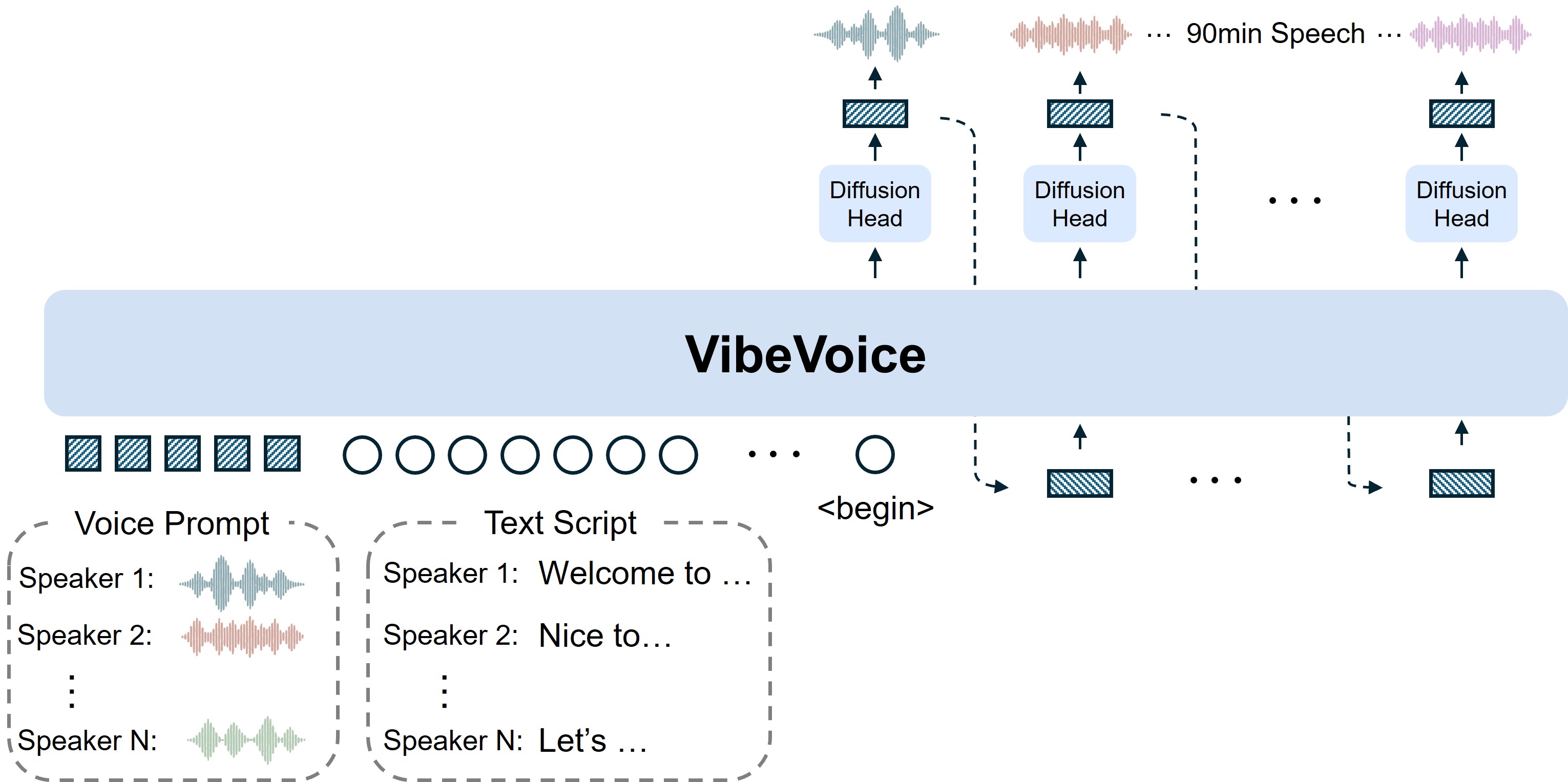

VibeVoice — long conversational TTS (Coming soon to Foundry Models)

An open-source, frontier text-to-speech framework for expressive, long-form, multi-speaker audio (think podcasts and panel shows).

Source: VibeVoice GitHub Page

Overview

VibeVoice synthesizes expressive, long‑form, multi‑speaker audio: up to ~90 minutes per session, with natural turn‑taking across as many as four distinct voices. It supports context‑aware expression (including spontaneous emotion and singing), cross‑lingual transfer, and occasional background music. Under the hood, it pairs efficient continuous acoustic and semantic tokenizers operating at 7.5 Hz with a next‑token diffusion head guided by an LLM for dialogue flow. Status: Coming soon to Foundry Models; in the meantime you can try the open weights in a live demo playground made with Gradio.

Models (open weights)

| Model | Parameters | Context length | Generation length | Weights |
|---|---|---|---|---|
| VibeVoice-1.5B | 1.5 billion | 64K tokens | ~90 min | HF link |
| VibeVoice-Large | 7 billion | 32K tokens | ~45 min | HF link |
| VibeVoice-0.5B-Streaming | 0.5 billion | TBD | TBD | On the way |
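The 7.5 Hz tokenizer rate helps explain how such long sessions fit in the listed context lengths. The back-of-the-envelope sketch below assumes one tokenizer frame occupies one position in the context window and treats "64K" and "32K" as 64,000 and 32,000. Both are simplifications, and the script's text tokens also consume context.

```python
FRAME_RATE_HZ = 7.5  # tokenizer frame rate stated in the overview


def speech_frames(minutes: float, frame_rate_hz: float = FRAME_RATE_HZ) -> int:
    """Number of tokenizer frames needed to represent `minutes` of audio."""
    return round(minutes * 60 * frame_rate_hz)


# (model, generation length in minutes, assumed context size in tokens)
models = [
    ("VibeVoice-1.5B", 90, 64_000),
    ("VibeVoice-Large", 45, 32_000),
]

for name, minutes, context_tokens in models:
    frames = speech_frames(minutes)
    share = frames / context_tokens
    print(f"{name}: {frames} frames for {minutes} min ({share:.0%} of context)")
```

Under these assumptions, the audio frames for a full-length session take up well under the whole window in both cases, leaving the remainder for the text script and speaker prompts.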

Get started

OpenAI gpt‑oss

OpenAI’s first open‑weight models since GPT‑2—gpt‑oss‑120b and gpt‑oss‑20b—are available in Azure AI Foundry. gpt‑oss‑20b also runs locally via Foundry Local and Windows AI Foundry.

NOTE: gpt‑oss‑20b is only available for Hub-based projects with managed compute. gpt‑oss‑120b can be deployed from either Hub-based projects with managed compute or serverless with Foundry projects. Managed compute deployments in Foundry projects are coming soon!

Models

- gpt‑oss‑120b: reasoning‑focused, data‑center class GPU (single‑GPU capable in cloud deployments).

- gpt‑oss‑20b: lightweight, tool‑savvy; optimized for local/edge including modern Windows PCs with 16GB+ VRAM.

- API compatibility with the Responses API is coming, so you can swap into existing apps with minimal changes.

Run locally with Foundry Local

- Install Foundry Local (preview). On Windows: winget install Microsoft.FoundryLocal

- Requirements for gpt‑oss‑20b: Foundry Local 0.6.87+ and NVIDIA GPU with 16GB+ VRAM.

- Quick CLI: foundry model run gpt-oss-20b

Hello world (Python, Foundry Local)

    import openai
    from foundry_local import FoundryLocalManager

    # Use the GPT-OSS 20B model locally.
    alias = "gpt-oss-20b"  # Requires Foundry Local >= 0.6.87 and ~16GB+ VRAM GPU

    # Start Foundry Local (if not running) and load the model
    manager = FoundryLocalManager(alias)

    # Point OpenAI SDK to the local Foundry endpoint (no real key needed locally)
    client = openai.OpenAI(
        base_url=manager.endpoint,
        api_key=manager.api_key,
    )

    # Minimal chat request
    resp = client.chat.completions.create(
        model=manager.get_model_info(alias).id,
        messages=[{"role": "user", "content": "Say hello from gpt-oss locally."}],
    )

    print(resp.choices[0].message.content)

Learn more

Agents

Foundry Agent Service expands to four new regions

Agent Service is now available in brazilsouth, germanywestcentral, italynorth, and southcentralus, bringing total region support to 17 Azure regions.

NOTE: The file_search_tool is currently unavailable in the following regions: italynorth and brazilsouth.

Tools

Browser Automation tool (Public Preview)

Ship agents that drive a real browser—search, navigate, fill forms, and even book appointments—using natural language. Browser Automation runs inside your Azure subscription using your Microsoft Playwright Testing Workspace, so you don’t manage VMs or standalone browsers. Because it reasons over the page’s DOM (roles/labels) instead of pixels, it’s much more resilient than click‑by‑coordinates flows. Learn how it works in the Browser Automation docs and what a Playwright Workspace is here: Browser Automation · Playwright Workspaces.

Where it shines: automating multi‑step bookings and calendars, product discovery with criteria‑based navigation and summaries, and robust web form interactions (submissions, profile updates, document uploads). Multi‑turn conversations mean you can correct and iterate without restarting the flow.

Setup is straightforward: create a Playwright Workspace and token, add a Serverless connection to its wss:// regional endpoint in your Foundry project (Management center → Connected resources), and grant the project identity the Contributor role on the Workspace. Then attach the tool to your agent by referencing the connection ID.

Heads‑up: review the transparency notes and warnings before pointing the tool at production sites; prefer low‑privilege, isolated environments.

NOTE: Before running the code sample, ensure you have installed the latest pre-release of azure-ai-agents.

    pip install --pre "azure-ai-agents>=1.2.0b2"

Add the tool to an agent (Agents REST):

    {
      "model": "MODEL_DEPLOYMENT_NAME",
      "instructions": "You can browse and fill forms on the specified site to accomplish the user's goal.",
      "tools": [
        {
          "type": "browser_automation",
          "connection_id": "AZURE_PLAYWRIGHT_CONNECTION_NAME"
        }
      ]
    }

Quickstart (Python SDK):

    import os

    from azure.identity import DefaultAzureCredential
    from azure.ai.projects import AIProjectClient
    from azure.ai.agents.models import MessageRole, BrowserAutomationTool

    project_client = AIProjectClient(
        endpoint=os.environ["PROJECT_ENDPOINT"],
        credential=DefaultAzureCredential(),
    )

    connection_id = os.environ["AZURE_PLAYWRIGHT_CONNECTION_ID"]  # from Connected resources (wss://...)
    model = os.environ["MODEL_DEPLOYMENT_NAME"]

    browser_tool = BrowserAutomationTool(connection_id=connection_id)

    with project_client:
        agent = project_client.agents.create_agent(
            model=model,
            name="browser-agent",
            instructions="Use the browser tool to complete the task.",
            tools=browser_tool.definitions,
        )

        thread = project_client.agents.threads.create()

        project_client.agents.messages.create(
            thread_id=thread.id,
            role=MessageRole.USER,
            content=(
                "Go to https://finance.yahoo.com, search for MSFT, switch the chart to YTD, "
                "and report the percent change."
            ),
        )

        run = project_client.agents.runs.create_and_process(thread_id=thread.id, agent_id=agent.id)
        print("Run status:", run.status)

Learn more in the docs and the announcement post: Docs · Blog

Platform (API, SDK, UI, and more)

Responses API — Generally Available (GA)

Build intelligent, tool‑using agents with stateful, multi‑turn conversations in a single API call. Now GA, the Responses API automatically maintains conversation state, stitches multiple tool calls with model reasoning and outputs in one flow, supports popular Azure OpenAI models—including the GPT‑5 series and fine‑tuned variants—for predictable, structured outputs, and scales on Azure’s enterprise‑grade identity, security, and compliance.
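One way to picture the stateful behavior: a follow-up turn can point at the previous response by ID instead of resending the transcript. The sketch below uses the `previous_response_id` parameter of `responses.create`. The helper name and the `FakeResponses` stub are hypothetical and exist only so the example runs offline; swap in a real `OpenAI` client configured for your resource to try it live.

```python
from types import SimpleNamespace


def ask_with_followup(client, model: str, first: str, followup: str):
    """Send two turns, linking the second to the first by response ID."""
    first_response = client.responses.create(model=model, input=first)
    second_response = client.responses.create(
        model=model,
        input=followup,
        # The service looks up the earlier turn, so no transcript is resent.
        previous_response_id=first_response.id,
    )
    return first_response, second_response


class FakeResponses:
    """Offline stand-in that records each call and returns a numbered ID."""

    def __init__(self):
        self.calls = []

    def create(self, **kwargs):
        self.calls.append(kwargs)
        return SimpleNamespace(id=f"resp_{len(self.calls)}", output_text="(stubbed)")


fake_client = SimpleNamespace(responses=FakeResponses())
first, second = ask_with_followup(
    fake_client, "MODEL_DEPLOYMENT_NAME", "Name a planet.", "Why that one?"
)
print(fake_client.responses.calls[1]["previous_response_id"])
```

With a live client, `second.output_text` would contain an answer that takes the first turn into account.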

Tools (built‑in and current limits)

- Built‑in: File Search, Function Calling, Code Interpreter (Python), Computer Use, Image Generation, and Remote MCP Server.

- Not supported: Web search tool. Use Grounding with Bing Search instead.

- Coming soon: Image generation multi‑turn editing/streaming and image uploads referenced from prompts.

API support

- v1 API is required for access to the latest features. Learn more: https://learn.microsoft.com/en-us/azure/ai-foundry/openai/api-version-lifecycle#api-evolution

Regions

- Available in: australiaeast, eastus, eastus2, francecentral, japaneast, norwayeast, polandcentral, southindia, swedencentral, switzerlandnorth, uaenorth, uksouth, westus, westus3.

- Note: Not every model is available in every region. See model region availability: https://learn.microsoft.com/en-us/azure/ai-foundry/openai/concepts/models

Model support

- GPT‑5, GPT‑4o, GPT‑4.1, and o‑series model families, plus gpt‑image‑1 and computer‑use‑preview.

Notes & known limits

- PDFs as input are supported, but file upload purpose user_data isn’t currently supported.

- Background mode with streaming may show performance issues (fix coming).

Quickstart (Python, API key)

    import os

    from openai import OpenAI

    client = OpenAI(
        api_key=os.getenv("AZURE_OPENAI_API_KEY"),
        base_url="https://YOUR-RESOURCE-NAME.openai.azure.com/openai/v1/",
    )

    response = client.responses.create(
        model="MODEL_DEPLOYMENT_NAME",  # e.g., gpt-4.1-nano or your deployment name
        input="This is a test.",
    )

    print(response.model_dump_json(indent=2))

Get started

Python SDK release highlights

- Agents: 1.1.0 stable is based on 1.0.2 (excludes beta features); 1.2.0b1 beta continues the experimental line and adds tool_resources support for async runs, with multiple fixes and public type promotions.

- AI Evaluation: 1.10.0 adds evaluate_query for RAI evaluators (default False) and delivers significant performance/variance improvements plus new grader and threshold controls.

- AI Projects: 1.0.0 stable removes preview features and renames classes/APIs; 1.1.0b1 adds Evaluations cancel/delete; 1.1.0b2 fixes a Red‑Team regression.

.NET SDK release highlights

- AI Agents Persistent 1.1.0 adds tracing for Agents, an include parameter to CreateRunStreaming/CreateRunStreamingAsync, and a tool_resources parameter to CreateRun/CreateRunAsync.

- AI Agents Persistent 1.2.0-beta.1 fixes an issue where the after parameter was ignored when retrieving pageable lists.

- OpenAI Inference 2.3.0-beta.1 carries forward a substantial number of features from the OpenAI library (see OpenAI 2.3.0 release notes for details).

Java SDK Updates

Agent Service Java SDK (Public Preview)

Public preview of the Agent Service Java SDK with Quickstart and tool samples. Get started.

Tool samples (Java)

JavaScript/TypeScript release highlights

- AI Agents 1.1.0-beta.1 adds MCP tool, Deep Research tool and sample, and brings back SharepointGroundingTool, BingCustomSearchTool, MicrosoftFabricTool, and SharepointTool; includes a breaking change to DeepResearchDetails field names.

- AI Agents 1.1.0-beta.2 fixes message image upload type error and stream event deserialization in runs.create.

- AI Agents 1.1.0-beta.3 fixes missing required parameter json_schema in runs.createAndPoll.

- AI Agents 1.1.0 (stable) removes preview-only tools (MCP, Deep Research, Sharepoint, BingCustomSearch, MicrosoftFabric) from the stable line.

- AI Projects 1.0.0 includes breaking changes removing redTeams/evaluations and legacy inference helpers, plus renames for telemetry and Azure OpenAI client access.

Documentation Updates

- [New] Capability hosts — Concept: package tools/resources for agents; guidance on hosting, security, and deployment (link)

- [New] Cost management for fine‑tuning — Track/limit spend, clean up artifacts, and optimize job configurations (link)

- [Updated] Deep Research tool — How to enable and use the Deep Research tool in Agent Service (link)

- [Updated] Deep Research samples — End‑to‑end examples for Deep Research flows (link)

- [New] Evaluations storage account setup — Configure storage for evaluations in projects (link)

- [New] Migrate from hubs to Foundry projects — Step‑by‑step migration guidance and considerations (link)

- [New] Serverless API inference for Foundry Models — Code patterns for calling serverless “Direct from Azure” models (link)

- [New] Create Foundry resources with Terraform — IaC guide for consistent provisioning (link)

- [Updated] Responses API — Latest how‑to for GA Responses API with v1 semantics (link)

- [Updated] MCP tool samples — Model Context Protocol samples and patterns (link)

- [Updated] Customer‑managed keys — Concepts and setup for CMK across projects/hubs (link)

- [Updated] Evaluate apps — Portal guide for evaluating generative AI applications (link)

- [Updated] Evaluate agents locally — SDK guide for local agent evaluation workflows (link)

- [Updated] Evaluate apps locally — SDK guide for app evaluation workflows (link)

- [Updated] Foundry Models and capabilities — Catalog concepts, capabilities, and model families (link)

- [New] Evaluation simulators — Preview simulators to generate synthetic/adversarial data with end‑to‑end samples, multi‑turn flows, regions, and JSONL helpers (link)

- [Updated] SharePoint tool samples — Samples for the SharePoint tool (link)

- [Updated] View evaluation results in the portal — Portal views and interpretation tips (link)

- [Updated] Managed network for hubs — Clarifies isolation modes (Internet outbound vs Approved outbound), hub‑based only, irreversible once enabled, Azure Firewall FQDN rules (cost), and adds the Enterprise Network Connection Approver role (link)

- [Updated] Role‑based access control — Expanded role definitions (Azure AI User, Project Manager, Account Owner) with JSON permission blocks and conditional delegation; Contributor can deploy models (link)

- [Updated] Customer‑enabled disaster recovery — No automatic failover; guidance for hot/hot, hot/warm, hot/cold; hub‑based only; paired regions and replication responsibilities table (link)

- [Updated] Deploy Azure OpenAI models — Refined portal flows from Catalog/Project, updated inference guidance, and quota/region pointers (link)

- [New] Azure AI Foundry status dashboard (Preview) — Live status, incident timelines/RCAs, historical uptime; subscribe via email/SMS/webhook (link)

- [Updated] Azure OpenAI API lifecycle (v1) — GA endpoints; preview opt‑in via headers or path; use OpenAI client with base_url to /openai/v1; APIM OpenAPI 3.1 caveat (link)

- [Updated] Quotas and limits — Adds GPT‑5 TPM/RPM tables, model‑router tier limits, o‑series capacity unit RPM/TPM ratios; capacity API to check regional availability (link)

- [Updated] Tracing & observability — How to instrument agents/apps with OpenTelemetry, view traces in portal, and export to Azure Monitor (link)

- [Updated] Deploy an enterprise chat web app — Deploy from the playground to App Service with Microsoft Entra authentication and key setup tips (link)

- [Updated] Model availability & regions — Canonical region support across Foundry features and model matrices with per‑region availability (link)

Happy building—let us know what you ship with #AzureAIFoundry over in Discord or GitHub Discussions!
