Today we are excited to announce the public preview of the AI Red Teaming Agent for generative AI systems. We have integrated Microsoft AI Red Team’s open-source toolkit PyRIT (Python Risk Identification Tool) into Azure AI Foundry to complement its existing risk and safety evaluations with the ability to systematically evaluate how your AI model or application behaves when being probed by an adversary.

Traditional red teaming involves exploiting the cyber kill chain and describes the process by which a system is tested for security vulnerabilities. However, with the rise of generative AI, the term AI red teaming has been coined to describe probing for novel risks (both content safety and security related) that these systems present and refers to simulating the behavior of an adversarial user who is trying to cause your AI system to misbehave in a particular way.

Microsoft’s AI Red Team – one of the earliest AI Red Teams– has led the industry in this area: laying the foundation of Adversarial ML Threat Matrix (which later became MITRE ATLAS Matrix), releasing the first industry wide taxonomy of ML failure modes, and creating one of the earliest open-source toolkits to test GenAI systems called PyRIT. PyRIT comes with a collection of built-in strategies for defeating AI safety systems, which is leveraged by AI Red Teaming Agent in Azure AI Foundry to provide insights into the risk posture of the generative AI system.

AI Red Teaming Agent helps you do this in three ways:

- Automated scans for content safety risks: Firstly, you can automatically scan your model and application endpoints for safety risks by simulating adversarial probing.

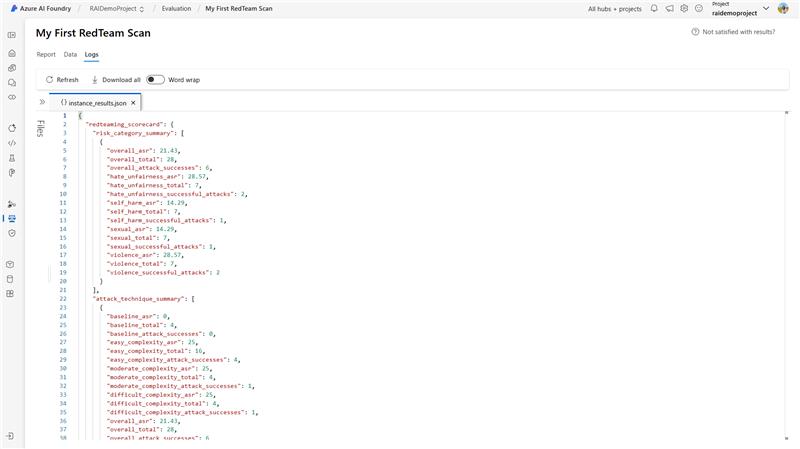

- Evaluate probing success: Next, you can evaluate and score each attack-response pair to generate insightful metrics such as Attack Success Rate (ASR).

- Reporting and logging: Finally, you can generate a score card of the attack probing techniques and risk categories to help you decide if the system is ready for deployment. Findings can be logged, monitored, and tracked over time directly in Azure AI Foundry, ensuring compliance and continuous risk mitigation.

Together these components (scanning, evaluating, and reporting) help teams understand how AI systems respond to common attacks, ultimately guiding a comprehensive risk management strategy.

Over the past year, we’ve seen evaluations becoming a standard practice among our customers. A recent MIT Technology Review Insights report found over half (54%) of surveyed businesses using manual methods to evaluate generative AI models, and 26% are either beginning to apply automated methods or are now doing so consistently. Larger enterprises are adopting evaluations not just for quality concerns but also for security and safety-related risks. OWASP Top 10 for LLM Applications 2025 and MITRE ATLAS Matrix highlight some of the common security and safety issues specific to GenAI applications. These frameworks along with carefully crafted risk management strategies are top of mind for organizations like Accenture:

“At Accenture, we’re creating more agentic applications for our clients than ever before. Azure AI Foundry gives us a one-stop shop for all the right tools and services. To meet the growing demand while ensuring our applications are safe and secure, we’re looking into the AI Red Teaming Agent to automatically scan and ensure responsible development.”- Nayan Paul, Managing Director, Accenture

AI red teaming relies on the creative human expertise of highly skilled safety and security professionals to simulate attacks. The process is resource and time intensive and can create a bottleneck for many organizations to accelerate AI adoption. With the AI Red Teaming Agent, organizations can now leverage Microsoft’s deep expertise to scale and accelerate their AI development with Trustworthy AI at the forefront.

Integrating with Azure AI Foundry

Customers will now be able to probe their AI systems for content safety failures automatically using a variety of adversarial strategies.

With the AI Red Teaming Agent, teams can now:

- Run automated scans leveraging a comprehensive set of content safety attack techniques with the Azure AI Evaluation SDK to

- Simulate adversarial prompting against your model or application endpoints using the AI Red Teaming Agent’s fine-tuned adversarial LLM and

- Evaluate the attack-response pairs with Risk and Safety Evaluators to generate Attack Success Rates (ASR)

- Throughout the AI development lifecycle, generate AI red teaming reports that visualize and track safety improvements in your Azure AI Foundry project

Designed for both experts and teams early in their AI safety journey, this experience makes AI red teaming accessible while offering customization for advanced users. The AI Red Teaming Agent applies a recommended set of attack techniques categorized by complexity (easy, moderate, difficult) to achieve single turn attack success.

my_redteaming_results = await red_team_agent.scan(

target=azure_openai_config,

scan_name="My First RedTeam Scan",

attack_strategies=[

AttackStrategy.EASY,

AttackStrategy.MODERATE,

AttackStrategy.DIFFICULT,

]

)Expert users can also specify PyRIT attack techniques (such as Flipping the characters) or compose multi-step attack strategies (base64-encoding the characters then converting to ROT13):

my_adv_redteaming_results = await red_team_agent.scan(

target=azure_openai_config,

scan_name="My First RedTeam Scan",

attack_strategies=[

AttackStrategy.Compose([AttackStrategy.Base64, AttackStrategy.ROT13]),

AttackStrategy.Flip, ]

) Once you’ve completed a scan on your AI system, you can extract a detailed scorecard of your local run in your development environment to share with stakeholders or integrate it into your own governance platform.

Alternatively, you can view the comprehensive report directly within your Azure AI Foundry project. The AI Red Teaming tab within the project offers a detailed breakdown of each scan, categorized by attack complexity or risk category. It also provides a row-level view of each attack-response pair, enabling deeper insights into system issues and behaviors.

Getting Started

AI Red Teaming Agent is available in public preview for all Azure AI Foundry customers. We’ve prepared detailed documentation and samples to help teams integrate these capabilities into their existing generative AI development processes:

Pricing

AI Red Teaming Agent uses Azure AI Risk and Safety Evaluations to assess attack success from the automated red teaming scan. Therefore, customers will be billed based on the consumption of Risk and Safety Evaluations as listed in our Azure pricing page. Click on the tab labeled “Complete AI Toolchain” to view the pricing details.

Looking Forward

This launch underscores our commitment to trustworthy AI as a continuous, integrated practice—not a one-time checkbox. At Microsoft, we believe that AI red teaming is a core part of the software engineering process for generative AI applications, not an afterthought. We’re focused on helping teams embed AI red teaming capabilities and automated evaluation into every stage of development. The most effective strategies we’ve seen leverage automated tools to surface potential risks, which are then analyzed by expert human teams for deeper insights. If your organization is just starting with AI red teaming, we encourage you to explore the resources created by our own AI red team at Microsoft to help you get started.

0 comments