In today’s fast-moving tech landscape, developers need clear, efficient tooling to keep pace. Forrester tells us that 85 percent of enterprises now run multi-model AI strategies, so rapid testing and deployment are essential. Yet with seemingly endless models, platforms and providers to choose from, teams still lose precious cycles on guesswork—tuning prompts, wiring orchestration, and chasing performance issues. Azure AI Foundry removes that friction. The latest release streamlines model selection, customization, and monitoring and brings the Foundry Agent Service to general availability, so you can customize, deploy, and run single or multiagent solutions at scale from one familiar platform.

Azure AI Foundry at a glance

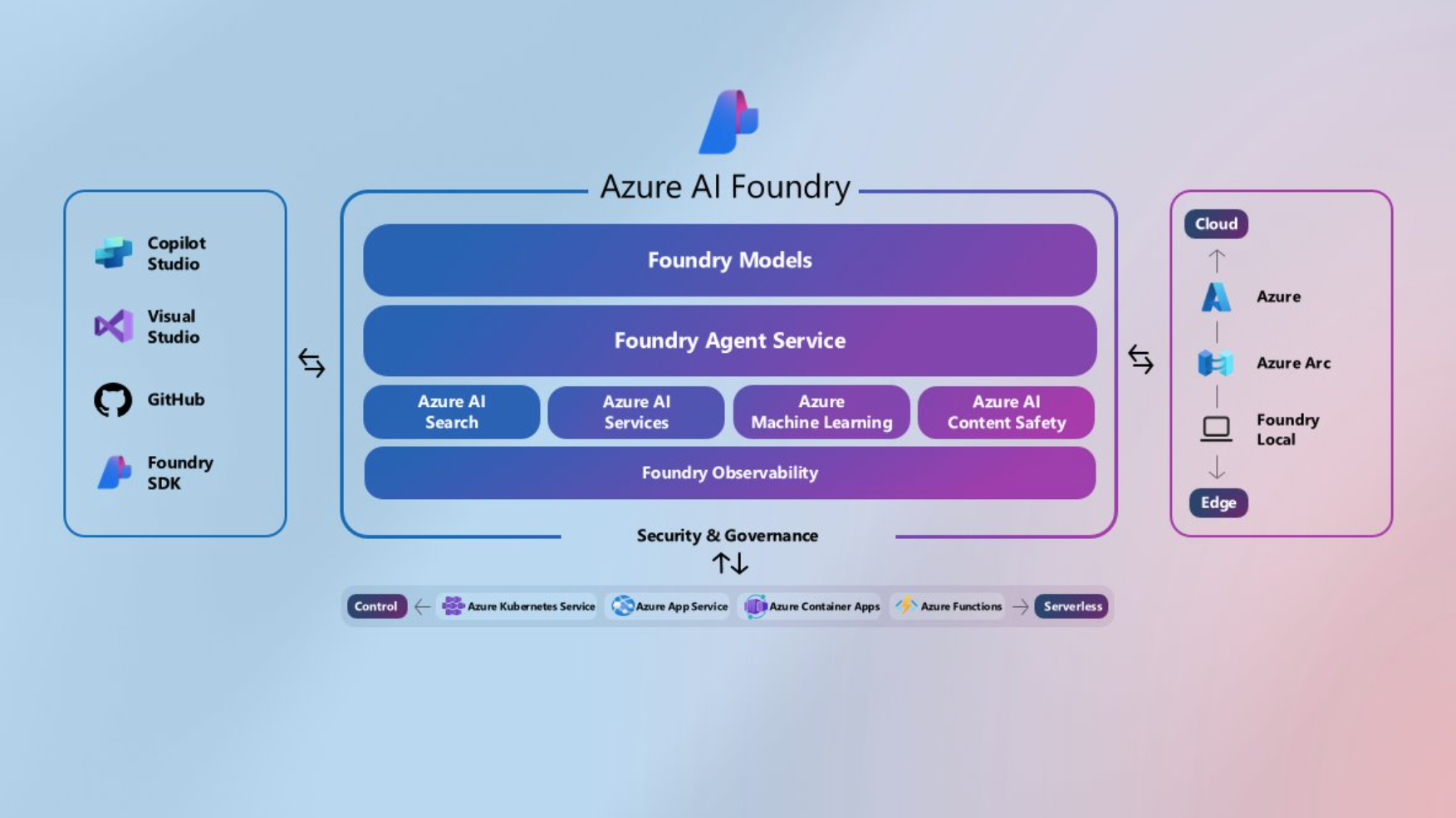

Azure AI Foundry is Microsoft’s secure, flexible platform for designing, customizing, and managing AI apps and agents. Everything—models, agents, tools, and observability—lives behind a single portal, SDK, and REST endpoint, so you can ship to cloud or edge with governance and cost controls in place from day one.

Why the new release matters

With this update, Foundry becomes a one-stop shop. In addition to giving you access to more than 11,000 Foundry Models, we are expanding the set of models hosted and sold by Microsoft: models from xAI are available today, and select models from Meta, Mistral AI, DeepSeek, and Black Forest Labs are coming soon in preview. Reserved capacity and provisioned throughput give every model the same predictable performance and billing experience. Foundry Agent Service is now GA. You can host a single agent or orchestrate a group of agents, expose them through the Agent-to-Agent (A2A) protocol, and connect them to other services using Model Context Protocol (MCP) or OpenAPI. Quick-start templates, VS Code integration, and GitHub workflows shorten the journey from idea to production from weeks to minutes. All of this arrives wrapped in enterprise-grade trust: RBAC, customer-managed keys, network isolation, and a responsible AI toolchain are built in rather than bolted on.

What’s new in detail

Foundry Models

Select model families hosted and sold directly by Microsoft (Llama 3, Llama 4, Grok 3, Mistral OCR, Codestral, and Flux) now sit alongside Azure OpenAI in Foundry Models. These models carry the SLAs, security, and compliance Azure customers expect from any Microsoft product. Starting next month, you will also be able to use reserved capacity with Foundry Provisioned Throughput (PTU) across both Azure OpenAI models and the new models hosted and sold by Microsoft. This shared capacity model makes it easier to operate multiple models under a unified, predictable performance and billing framework.

We are also expanding our partnership with Hugging Face to provide access to a wide range of frontier and open-source Foundry Models. Soon, expect over 11,000 models and hundreds of pre-built agentic tools. To make model choice easier, a new model leaderboard ranks models by quality, cost, and throughput, and a smart model router automatically picks the best model for each request based on your latency and budget constraints. In our tests comparing the model router with direct use of GPT-4.1 in Foundry Models, we saw up to 60% cost savings with similar accuracy.

Foundry Local enables you to run a growing catalog of edge-optimized models and agents directly on Windows or macOS, which is ideal for offline or privacy-sensitive workloads.
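To make the routing idea concrete, here is a minimal sketch of constraint-based model selection in plain Python. It is not the Foundry model router and does not call any Azure API; the `ModelProfile` type, the `route` function, the catalog entries, and all scores, prices, and latencies are hypothetical values chosen for illustration. The sketch assumes a simple policy: keep the models that meet the quality, latency, and budget limits, then pick the cheapest one.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model statistics; every number here is made up."""
    name: str
    quality: float             # score in [0, 1]
    cost_per_1k_tokens: float  # USD
    p95_latency_ms: int


def route(models: List[ModelProfile],
          min_quality: float,
          max_latency_ms: int,
          max_cost_per_1k: float) -> Optional[ModelProfile]:
    """Return the cheapest model that satisfies all constraints, or None."""
    eligible = [
        m for m in models
        if m.quality >= min_quality
        and m.p95_latency_ms <= max_latency_ms
        and m.cost_per_1k_tokens <= max_cost_per_1k
    ]
    if not eligible:
        return None
    # Cheapest first; on a cost tie, prefer the higher-quality model.
    return min(eligible, key=lambda m: (m.cost_per_1k_tokens, -m.quality))


# Hypothetical catalog, not real Foundry models or prices.
CATALOG = [
    ModelProfile("large-model", quality=0.92, cost_per_1k_tokens=0.010, p95_latency_ms=1200),
    ModelProfile("medium-model", quality=0.85, cost_per_1k_tokens=0.004, p95_latency_ms=600),
    ModelProfile("small-model", quality=0.70, cost_per_1k_tokens=0.001, p95_latency_ms=200),
]

if __name__ == "__main__":
    choice = route(CATALOG, min_quality=0.8, max_latency_ms=1000, max_cost_per_1k=0.01)
    print(choice.name if choice else "no model satisfies the constraints")
```

A policy like this saves money only when many requests can be served by a cheaper model that still clears the quality bar. A production router would likely estimate per-request difficulty rather than rely on fixed per-model scores.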

Fine-tuning and distillation

Developers can now fine-tune GPT-4.1-nano, o4-mini, and Llama 4 in Foundry Models, with reinforcement fine-tuning available for advanced reasoning tasks. A new low-cost Developer Tier removes hosting fees during experimentation.

Agent development

Foundry Agent Service is now GA. You can host a single agent or orchestrate a group of agents and expose them through the Agent-to-Agent (A2A) protocol. The service simplifies agent development by integrating with data sources such as Microsoft Bing, Microsoft SharePoint, Azure AI Search, and Microsoft Fabric through knowledge tools, and it supports task automation through action tools such as Azure Logic Apps, Azure Functions, and custom tools built with OpenAPI and Model Context Protocol (MCP). Behind the scenes, Semantic Kernel and AutoGen are merging into one unified SDK, giving you a single, composable API for defining, chaining, and deploying agents locally or in the cloud with identical behavior. Foundry Agent Service also connects with a centralized catalog of agent code samples that developers can easily customize. These include predefined instructions, actions, APIs, knowledge, and tools, allowing developers to quickly create and deploy agents with Azure AI Foundry. Meanwhile, Azure AI Search has introduced agentic retrieval, now in public preview, to handle automated multi-turn planning, retrieval, and synthesis. And if you prefer to talk rather than type, the new Voice Live API brings real-time speech input and output in more than 150 locales, all through the same endpoint.
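The split between knowledge tools (which fetch information) and action tools (which do something) can be sketched in a few lines of plain Python. This is a conceptual illustration only: it does not use the Foundry Agent Service SDK, A2A, or MCP, and the `Agent` class, the tool functions, and the sample data are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# A tool is modeled as a function from a text argument to a text result.
Tool = Callable[[str], str]


@dataclass
class Agent:
    """Hypothetical agent: a name, instructions, and a registry of tools."""
    name: str
    instructions: str
    tools: Dict[str, Tool] = field(default_factory=dict)

    def call_tool(self, tool_name: str, argument: str) -> str:
        if tool_name not in self.tools:
            raise KeyError(f"{self.name} has no tool named {tool_name!r}")
        return self.tools[tool_name](argument)


def search_documents(query: str) -> str:
    """Stand-in for a knowledge tool; the corpus is invented sample data."""
    corpus = {"vacation policy": "Employees accrue 2 days per month."}
    return corpus.get(query.lower(), "no result")


def create_ticket(summary: str) -> str:
    """Stand-in for an action tool such as a workflow or function call."""
    return f"ticket created: {summary}"


def run_pipeline(researcher: Agent, operator: Agent, question: str) -> str:
    """Two-agent hand-off: one agent retrieves, the other acts on the result."""
    finding = researcher.call_tool("search", question)
    return operator.call_tool("ticket", finding)


if __name__ == "__main__":
    researcher = Agent("researcher", "Answer from company documents.",
                       {"search": search_documents})
    operator = Agent("operator", "File follow-up tickets.",
                     {"ticket": create_ticket})
    print(run_pipeline(researcher, operator, "Vacation policy"))
```

In a real deployment a model would decide which tool to call and with what arguments. The fixed hand-off here only shows how tools attach to agents and how one agent's output becomes another's input.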

Content & media

A new video playground, coming soon, lets you try Sora in Foundry Models (also coming soon) and other cutting-edge generation models without provisioning any resources. Once you have a prompt you like, you can export ready-to-run code snippets straight into VS Code. For document-heavy workflows, the Pro mode in Azure AI Content Understanding collapses what used to be multiple extraction calls and human validation into one streamlined operation, for example comparing insurance claims against contract terms in a single step.

Developer productivity

Onboarding now takes seconds and produces a proof-of-concept in minutes. The GA version of the Foundry REST API unifies model inference, agent operations, and evaluations behind one endpoint, with SDKs for Python, C#, JavaScript, and Java. The updated VS Code extension offers YAML IntelliSense, full agent CRUD, and a new “Open in VS Code” button in the portal that preloads keys and sample code. We have also published AI templates for common scenarios and released updates to the Microsoft 365 Agents Toolkit so you can publish agents created with Foundry to Microsoft Copilot, Teams, and beyond.

Deployment & observability

Dynamic capacity allocation scales on demand. For massive AI workloads, Batch Global and DataZone deployments offer a dynamic token quota, and new enhancements let you queue up to 1 trillion tokens. Built-in dashboards in Foundry Observability cover quality, cost, safety, and ROI from the first playground test to production, and they plug into GitHub Actions and Azure DevOps so evaluations run automatically on every commit. In addition, Foundry Agent Service includes AgentOps capabilities such as tracing, evaluation, and monitoring, which help developers validate, observe, and optimize agent behavior.
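Running evaluations on every commit usually comes down to a gate: compare evaluation scores against thresholds and fail the build when any metric falls short. The sketch below shows that pattern in plain Python. It is not the Foundry Observability integration; the `evaluation_gate` function, the metric names, and the threshold values are assumptions made for illustration, and the scores would come from whatever evaluation step your pipeline runs.

```python
import sys
from statistics import mean
from typing import Dict, List


def evaluation_gate(scores: Dict[str, List[float]],
                    thresholds: Dict[str, float]) -> List[str]:
    """Return one failure message per metric whose mean score is below its threshold."""
    failures = []
    for metric, minimum in thresholds.items():
        values = scores.get(metric, [])
        if not values:
            # A missing metric counts as a failure so gaps are not silently ignored.
            failures.append(f"{metric}: no scores recorded")
            continue
        average = mean(values)
        if average < minimum:
            failures.append(
                f"{metric}: mean {average:.2f} below threshold {minimum:.2f}"
            )
    return failures


if __name__ == "__main__":
    # Hypothetical scores and thresholds; a real pipeline would load these
    # from its evaluation output.
    scores = {"quality": [0.9, 0.8, 0.85], "safety": [1.0, 1.0, 0.9]}
    thresholds = {"quality": 0.8, "safety": 0.95}
    failures = evaluation_gate(scores, thresholds)
    for message in failures:
        print(message)
    # A non-zero exit code makes the CI job fail.
    sys.exit(1 if failures else 0)
```

Both GitHub Actions and Azure DevOps treat a non-zero exit code as a failed step, so a script like this can block a merge without any tool-specific plumbing.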

Customers innovating with Azure AI Foundry

More than 70,000 enterprises and digital natives have adopted Azure AI Foundry. A couple of examples:

- Heineken used Azure AI Foundry to create a multi-agent platform to help employees access data and information across the company in their native language. Tasks that used to take 10 to 15 minutes now take 5 to 10 seconds.

- Accenture used Azure AI Foundry to develop a centralized solution for secure generative AI development, including Azure AI Search, Azure AI Content Safety, and Azure Machine Learning. Azure Machine Learning was used for custom model training, and Azure AI Foundry Models were used for fine-tuning. Accenture has also deployed more than 75 generative AI use cases across clients, with over 16 solutions in full production.

Get started today

The easiest way to explore is through the Azure AI Foundry portal. From there you can install the SDK, follow the documentation and Microsoft Learn courses, and add the VS Code extension, all in a few clicks. Sample repositories walk you through everything from a simple chatbot to a fully managed agent fleet.

If you’re attending Microsoft Build 2025, drop by one of my sessions—“Azure AI Foundry: The Agent Factory,” “Developer Essentials for Agents and Apps in Azure AI Foundry,” and “Foundry Agent Service: Transforming Workflows with Azure AI Foundry.”

Happy coding!
