A year ago, I published Performance Improvements in .NET 6, following on the heels of similar posts for .NET 5, .NET Core 3.0, .NET Core 2.1, and .NET Core 2.0. I enjoy writing these posts and love reading developers’ responses to them. One comment in particular last year resonated with me. The commenter cited the Die Hard movie quote, “‘When Alexander saw the breadth of his domain, he wept for there were no more worlds to conquer’,” and questioned whether .NET performance improvements were similar. Has the well run dry? Are there no more “[performance] worlds to conquer”? I’m a bit giddy to say that, even with how fast .NET 6 is, .NET 7 definitively highlights how much more can be and has been done.

As with previous versions of .NET, performance is a key focus that pervades the entire stack, whether it be features created explicitly for performance or non-performance-related features that are still designed and implemented with performance keenly in mind. And now that a .NET 7 release candidate is just around the corner, it’s a good time to discuss much of it. Over the course of the last year, every time I’ve reviewed a PR that might positively impact performance, I’ve copied that link to a journal I maintain for the purposes of writing this post. When I sat down to write this a few weeks ago, I was faced with a list of almost 1000 performance-impacting PRs (out of more than 7000 PRs that went into the release), and I’m excited to share almost 500 of them here with you.

One thought before we dive in. In past years, I’ve received the odd piece of negative feedback about the length of some of my performance-focused write-ups, and while I disagree with the criticism, I respect the opinion. So, this year, consider this a “choose your own adventure.” If you’re here just looking for a super short adventure, one that provides the top-level summary and a core message to take away from your time here, I’m happy to oblige:

TL;DR: .NET 7 is fast. Really fast. A thousand performance-impacting PRs went into runtime and core libraries this release, never mind all the improvements in ASP.NET Core and Windows Forms and Entity Framework and beyond. It’s the fastest .NET ever. If your manager asks you why your project should upgrade to .NET 7, you can say “in addition to all the new functionality in the release, .NET 7 is super fast.”

Or, if you prefer a slightly longer adventure, one filled with interesting nuggets of performance-focused data, consider skimming through the post, looking for the small code snippets and corresponding tables showing a wealth of measurable performance improvements. At that point, you, too, may walk away with your head held high and my thanks.

Both noted paths achieve one of my primary goals for spending the time to write these posts, to highlight the greatness of the next release and to encourage everyone to give it a try. But, I have other goals for these posts, too. I want everyone interested to walk away from this post with an upleveled understanding of how .NET is implemented, why various decisions were made, tradeoffs that were evaluated, techniques that were employed, algorithms that were considered, and valuable tools and approaches that were utilized to make .NET even faster than it was previously. I want developers to learn from our own learnings and find ways to apply this new-found knowledge to their own codebases, thereby further increasing the overall performance of code in the ecosystem. I want developers to take an extra beat, think about reaching for a profiler the next time they’re working on a gnarly problem, think about looking at the source for the component they’re using in order to better understand how to work with it, and think about revisiting previous assumptions and decisions to determine whether they’re still accurate and appropriate. And I want developers to be excited at the prospect of submitting PRs to improve .NET not only for themselves but for every developer around the globe using .NET. If any of that sounds interesting, then I encourage you to choose the last adventure: prepare a carafe of your favorite hot beverage, get comfortable, and please enjoy.

Oh, and please don’t print this to paper. “Print to PDF” tells me it would take a third of a ream. If you would like a nicely formatted PDF, one is available for download here.

Table of Contents

- Setup

- JIT

- GC

- Native AOT

- Mono

- Reflection

- Interop

- Threading

- Primitive Types and Numerics

- Arrays, Strings, and Spans

- Regex

- Collections

- LINQ

- File I/O

- Compression

- Networking

- JSON

- XML

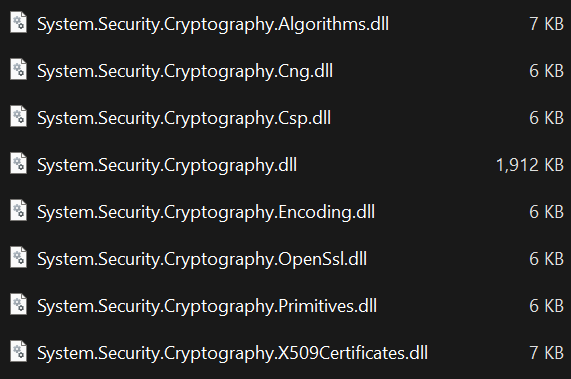

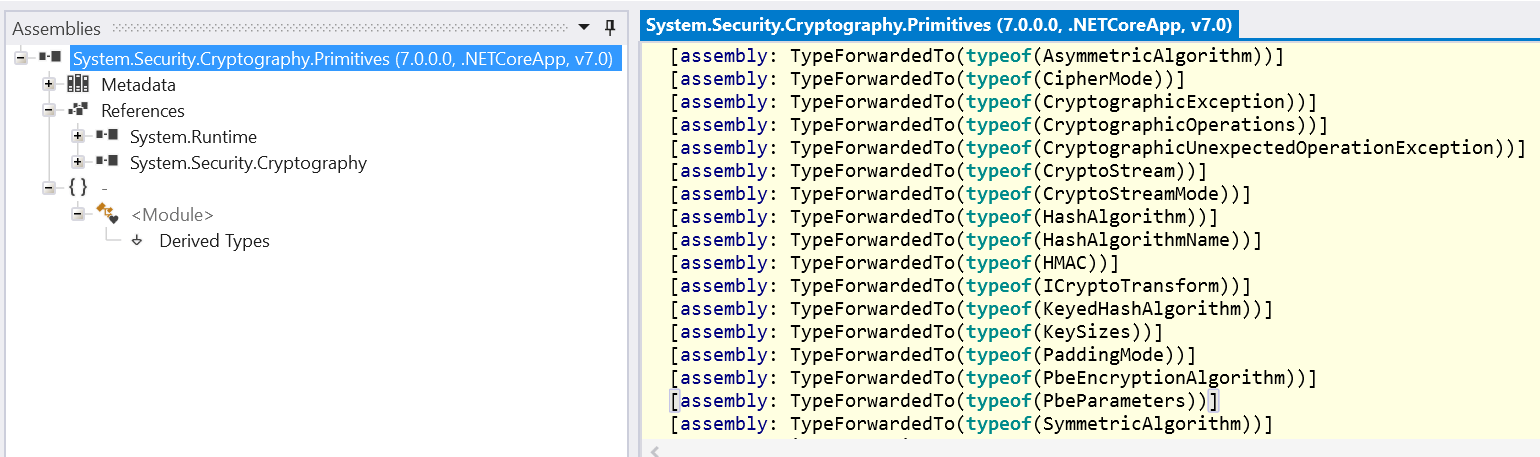

- Cryptography

- Diagnostics

- Exceptions

- Registry

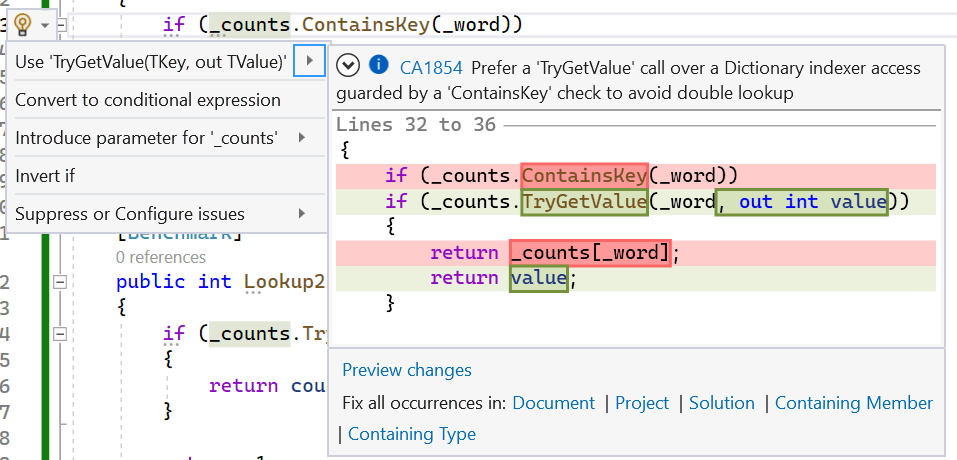

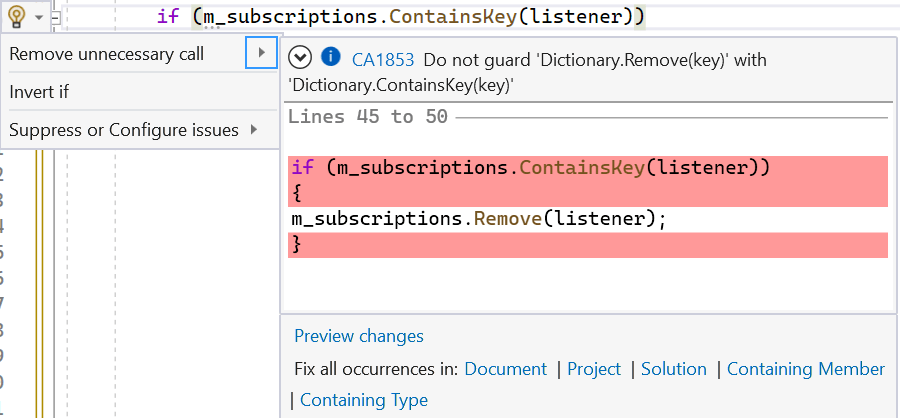

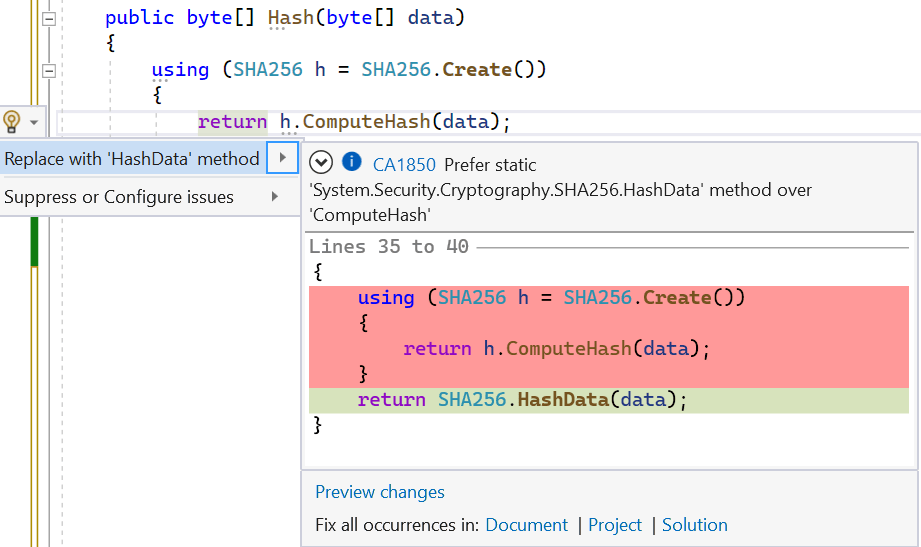

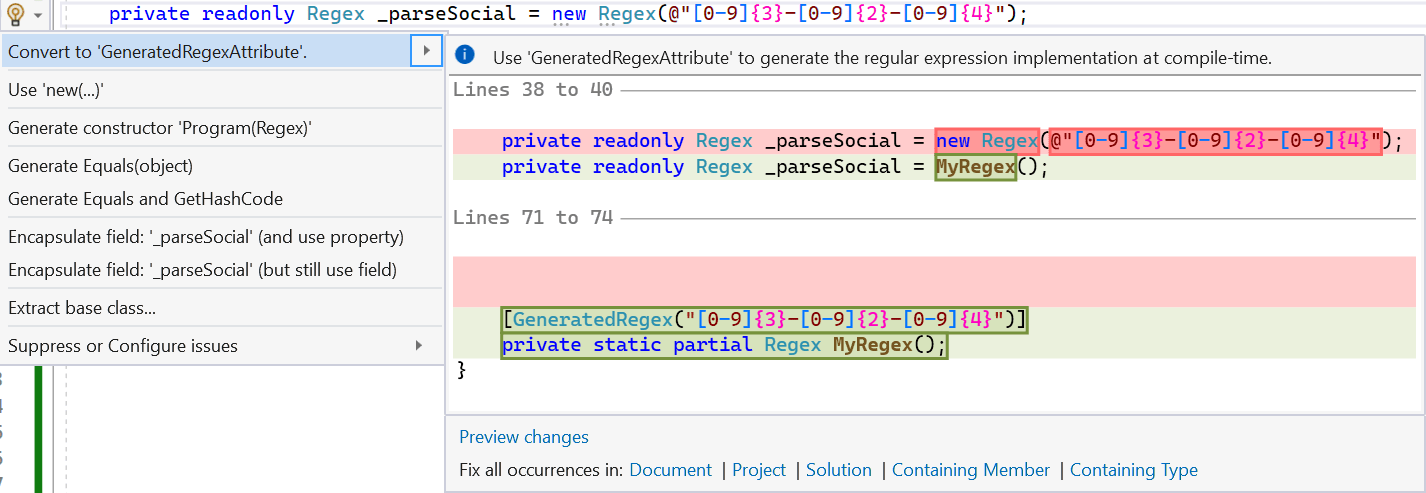

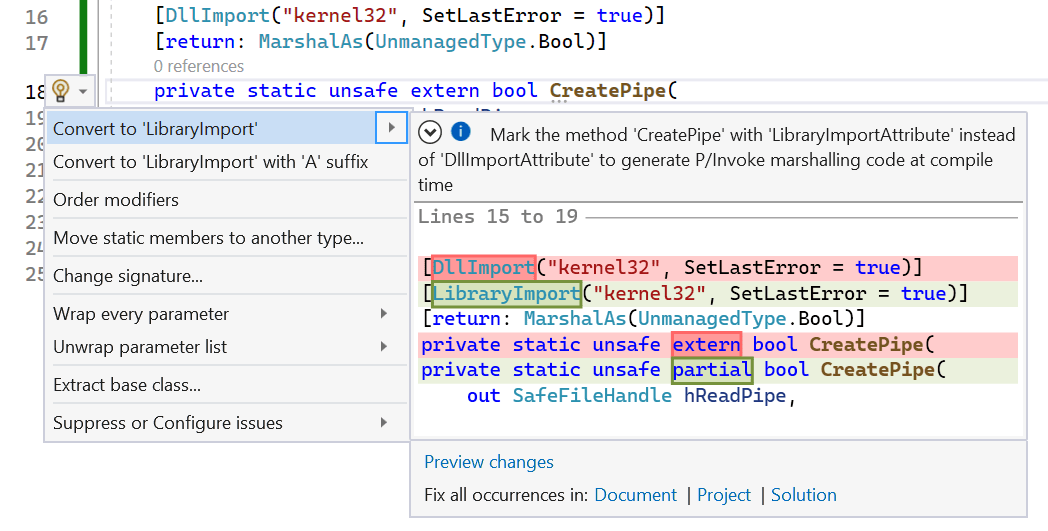

- Analyzers

- What’s Next?

Setup

The microbenchmarks throughout this post utilize benchmarkdotnet. To make it easy for you to follow along with your own validation, I have a very simple setup for the benchmarks I use. Create a new C# project:

dotnet new console -o benchmarks

cd benchmarks

Your new benchmarks directory will contain a benchmarks.csproj file and a Program.cs file. Replace the contents of benchmarks.csproj with this:

<Project Sdk="Microsoft.NET.Sdk">

<PropertyGroup>

<OutputType>Exe</OutputType>

<TargetFrameworks>net7.0;net6.0</TargetFrameworks>

<LangVersion>Preview</LangVersion>

<AllowUnsafeBlocks>true</AllowUnsafeBlocks>

<ServerGarbageCollection>true</ServerGarbageCollection>

</PropertyGroup>

<ItemGroup>

<PackageReference Include="benchmarkdotnet" Version="0.13.2" />

</ItemGroup>

</Project>

and the contents of Program.cs with this:

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

using Microsoft.Win32;

using System;

using System.Buffers;

using System.Collections.Generic;

using System.Collections.Immutable;

using System.ComponentModel;

using System.Diagnostics;

using System.IO;

using System.IO.Compression;

using System.IO.MemoryMappedFiles;

using System.IO.Pipes;

using System.Linq;

using System.Net;

using System.Net.Http;

using System.Net.Http.Headers;

using System.Net.Security;

using System.Net.Sockets;

using System.Numerics;

using System.Reflection;

using System.Runtime.CompilerServices;

using System.Runtime.InteropServices;

using System.Runtime.Intrinsics;

using System.Security.Authentication;

using System.Security.Cryptography;

using System.Security.Cryptography.X509Certificates;

using System.Text;

using System.Text.Json;

using System.Text.RegularExpressions;

using System.Threading;

using System.Threading.Tasks;

using System.Xml;

[MemoryDiagnoser(displayGenColumns: false)]

[DisassemblyDiagnoser]

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

public partial class Program

{

static void Main(string[] args) => BenchmarkSwitcher.FromAssembly(typeof(Program).Assembly).Run(args);

// ... copy [Benchmark]s here

}

For each benchmark included in this write-up, you can then just copy and paste the code into this test class, and run the benchmarks. For example, to run a benchmark comparing performance on .NET 6 and .NET 7, do:

dotnet run -c Release -f net6.0 --filter '**' --runtimes net6.0 net7.0

This command says “build the benchmarks in release configuration targeting the .NET 6 surface area, and then run all of the benchmarks on both .NET 6 and .NET 7.” Or to run just on .NET 7:

dotnet run -c Release -f net7.0 --filter '**' --runtimes net7.0

which instead builds targeting the .NET 7 surface area and then only runs once against .NET 7. You can do this on any of Windows, Linux, or macOS. Unless otherwise called out (e.g. where the improvements are specific to Unix and I run the benchmarks on Linux), the results I share were recorded on Windows 11 64-bit but aren’t Windows-specific and should show similar relative differences on the other operating systems as well.

The release of the first .NET 7 release candidate is right around the corner. All of the measurements in this post were gathered with a recent daily build of .NET 7 RC1.

Also, my standard caveat: These are microbenchmarks. It is expected that different hardware, different versions of operating systems, and the way in which the wind is currently blowing can affect the numbers involved. Your mileage may vary.

JIT

I’d like to kick off a discussion of performance improvements in the Just-In-Time (JIT) compiler by talking about something that itself isn’t actually a performance improvement. Being able to understand exactly what assembly code is generated by the JIT is critical when fine-tuning lower-level, performance-sensitive code. There are multiple ways to get at that assembly code. The online tool sharplab.io is incredibly useful for this (thanks to @ashmind for this tool); however it currently only targets a single release, so as I write this I’m only able to see the output for .NET 6, which makes it difficult to use for A/B comparisons. godbolt.org is also valuable for this, with C# support added in compiler-explorer/compiler-explorer#3168 from @hez2010, with similar limitations. The most flexible solutions involve getting at that assembly code locally, as it enables comparing whatever versions or local builds you desire with whatever configurations and switches set that you need.

One common approach is to use the [DisassemblyDiagnoser] in benchmarkdotnet. Simply slap the [DisassemblyDiagnoser] attribute onto your test class: benchmarkdotnet will find the assembly code generated for your tests and some depth of functions they call, and dump out the found assembly code in a human-readable form. For example, if I run this test:

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

using System;

[DisassemblyDiagnoser]

public partial class Program

{

static void Main(string[] args) => BenchmarkSwitcher.FromAssembly(typeof(Program).Assembly).Run(args);

private int _a = 42, _b = 84;

[Benchmark]

public int Min() => Math.Min(_a, _b);

}

with:

dotnet run -c Release -f net7.0 --filter '**'

in addition to doing all of its normal test execution and timing, benchmarkdotnet also outputs a Program-asm.md file that contains this:

; Program.Min()

mov eax,[rcx+8]

mov edx,[rcx+0C]

cmp eax,edx

jg short M00_L01

mov edx,eax

M00_L00:

mov eax,edx

ret

M00_L01:

jmp short M00_L00

; Total bytes of code 17

Pretty neat. This support was recently improved further in dotnet/benchmarkdotnet#2072, which allows passing a filter list on the command-line to benchmarkdotnet to tell it exactly which methods’ assembly code should be dumped.

If you can get your hands on a “debug” or “checked” build of the .NET runtime (“checked” is a build that has optimizations enabled but also still includes asserts), and specifically of clrjit.dll, another valuable approach is to set an environment variable that causes the JIT itself to spit out a human-readable description of all of the assembly code it emits. This can be used with any kind of application, as it’s part of the JIT itself rather than part of any specific tool or other environment; it supports showing the code the JIT generates each time it generates code (e.g. if it first compiles a method without optimization and then later recompiles it with optimization); and overall it’s the most accurate picture of the assembly code, as it comes “straight from the horse’s mouth,” as it were. The (big) downside of course is that it requires a non-release build of the runtime, which typically means you need to build it yourself from the sources in the dotnet/runtime repo.

… until .NET 7, that is. As of dotnet/runtime#73365, this assembly dumping support is now available in release builds as well, which means it’s simply part of .NET 7 and you don’t need anything special to use it. To see this, try creating a simple “hello world” app like:

using System;

class Program

{

public static void Main() => Console.WriteLine("Hello, world!");

}

and building it (e.g. dotnet build -c Release). Then, set the DOTNET_JitDisasm environment variable to the name of the method we care about, in this case “Main” (the exact syntax allowed is more permissive and allows for some use of wildcards, optional namespace and class names, etc.). As I’m using PowerShell, that means:

$env:DOTNET_JitDisasm="Main"

and then running the app. You should see code like this output to the console:

; Assembly listing for method Program:Main()

; Emitting BLENDED_CODE for X64 CPU with AVX - Windows

; Tier-0 compilation

; MinOpts code

; rbp based frame

; partially interruptible

G_M000_IG01: ;; offset=0000H

55 push rbp

4883EC20 sub rsp, 32

488D6C2420 lea rbp, [rsp+20H]

G_M000_IG02: ;; offset=000AH

48B9D820400A8E010000 mov rcx, 0x18E0A4020D8

488B09 mov rcx, gword ptr [rcx]

FF1583B31000 call [Console:WriteLine(String)]

90 nop

G_M000_IG03: ;; offset=001EH

4883C420 add rsp, 32

5D pop rbp

C3 ret

; Total bytes of code 36

Hello, world!

This is immeasurably helpful for performance analysis and tuning, even for questions as simple as “did my function get inlined” or “is this code I expected to be optimized away actually getting optimized away.” Throughout the rest of this post, I’ll include assembly snippets generated by one of these two mechanisms, in order to help exemplify concepts.
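As a minimal sketch of the “did my function get inlined” question, consider the small program below. It is purely illustrative and not one of the benchmarks in this post; the names SumOfSquares and Square are made up for the example. The outer method is marked NoInlining so that it remains its own compilation unit, which means setting DOTNET_JitDisasm to “SumOfSquares” should produce a listing for it. In a Tier-0 listing you would expect to still see calls to Square, since Tier-0 code is unoptimized. If you additionally set DOTNET_TieredCompilation to 0 (a runtime setting not otherwise covered so far, which has the JIT produce optimized code the first time it compiles a method), the helper is likely, though not guaranteed, to be inlined, in which case the call disappears from the listing.

```csharp
using System;
using System.Runtime.CompilerServices;

public static class Program
{
    public static void Main()
    {
        int total = 0;
        for (int i = 0; i < 1_000; i++)
        {
            total += SumOfSquares(i, 3);
        }

        // Print the result so the work above is observable.
        Console.WriteLine(total); // prints 332842500
    }

    // Hypothetical method to inspect. NoInlining keeps it from being folded
    // into Main, so DOTNET_JitDisasm="SumOfSquares" has a listing to print.
    [MethodImpl(MethodImplOptions.NoInlining)]
    public static int SumOfSquares(int a, int b) => Square(a) + Square(b);

    // Tiny helper that the JIT may choose to inline into SumOfSquares
    // when it compiles that method with optimizations enabled.
    private static int Square(int x) => x * x;
}
```

Comparing the listing with and without DOTNET_TieredCompilation=0 is one quick way to see the difference between unoptimized and optimized code for the same method.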

Note that it can sometimes be a little confusing figuring out what name to specify as the value for DOTNET_JitDisasm, especially when the method you care about is one that the C# compiler names or name mangles (since the JIT only sees the IL and metadata, not the original C#), e.g. the name of the entry point method for a program with top-level statements, the names of local functions, etc. To both help with this and to provide a really valuable top-level view of the work the JIT is doing, .NET 7 also supports the new DOTNET_JitDisasmSummary environment variable (introduced in dotnet/runtime#74090). Set that to “1”, and it’ll result in the JIT emitting a line every time it compiles a method, including the name of that method which is copy/pasteable with DOTNET_JitDisasm. This feature is useful in-and-of-itself, however, as it can quickly highlight for you what’s being compiled, when, and with what settings. For example, if I set the environment variable and then run a “hello, world” console app, I get this output:

1: JIT compiled CastHelpers:StelemRef(Array,long,Object) [Tier1, IL size=88, code size=93]

2: JIT compiled CastHelpers:LdelemaRef(Array,long,long):byref [Tier1, IL size=44, code size=44]

3: JIT compiled SpanHelpers:IndexOfNullCharacter(byref):int [Tier1, IL size=792, code size=388]

4: JIT compiled Program:Main() [Tier0, IL size=11, code size=36]

5: JIT compiled ASCIIUtility:NarrowUtf16ToAscii(long,long,long):long [Tier0, IL size=490, code size=1187]

Hello, world!

We can see for “hello, world” there are only 5 methods that actually get JIT compiled. There are of course many more methods that get executed as part of a simple “hello, world,” but almost all of them have precompiled native code available as part of the “Ready To Run” (R2R) images of the core libraries. The first three in the above list (StelemRef, LdelemaRef, and IndexOfNullCharacter) don’t because they explicitly opted out of R2R via use of the [MethodImpl(MethodImplOptions.AggressiveOptimization)] attribute (despite the name, this attribute should almost never be used, and is only used for very specific reasons in a few very specific places in the core libraries). Then there’s our Main method. And lastly there’s the NarrowUtf16ToAscii method, which doesn’t have R2R code, either, due to using the variable-width Vector<T> (more on that later). Every other method that’s run doesn’t require JIT’ing. If we instead first set the DOTNET_ReadyToRun environment variable to 0, the list is much longer, and gives you a very good sense of what the JIT needs to do on startup (and why technologies like R2R are important for startup time). Note how many methods get compiled before “hello, world” is output:

1: JIT compiled CastHelpers:StelemRef(Array,long,Object) [Tier1, IL size=88, code size=93]

2: JIT compiled CastHelpers:LdelemaRef(Array,long,long):byref [Tier1, IL size=44, code size=44]

3: JIT compiled AppContext:Setup(long,long,int) [Tier0, IL size=68, code size=275]

4: JIT compiled Dictionary`2:.ctor(int):this [Tier0, IL size=9, code size=40]

5: JIT compiled Dictionary`2:.ctor(int,IEqualityComparer`1):this [Tier0, IL size=102, code size=444]

6: JIT compiled Object:.ctor():this [Tier0, IL size=1, code size=10]

7: JIT compiled Dictionary`2:Initialize(int):int:this [Tier0, IL size=56, code size=231]

8: JIT compiled HashHelpers:GetPrime(int):int [Tier0, IL size=83, code size=379]

9: JIT compiled HashHelpers:.cctor() [Tier0, IL size=24, code size=102]

10: JIT compiled HashHelpers:GetFastModMultiplier(int):long [Tier0, IL size=9, code size=37]

11: JIT compiled Type:GetTypeFromHandle(RuntimeTypeHandle):Type [Tier0, IL size=8, code size=14]

12: JIT compiled Type:op_Equality(Type,Type):bool [Tier0, IL size=38, code size=143]

13: JIT compiled NonRandomizedStringEqualityComparer:GetStringComparer(Object):IEqualityComparer`1 [Tier0, IL size=39, code size=170]

14: JIT compiled NonRandomizedStringEqualityComparer:.cctor() [Tier0, IL size=46, code size=232]

15: JIT compiled EqualityComparer`1:get_Default():EqualityComparer`1 [Tier0, IL size=6, code size=36]

16: JIT compiled EqualityComparer`1:.cctor() [Tier0, IL size=26, code size=125]

17: JIT compiled ComparerHelpers:CreateDefaultEqualityComparer(Type):Object [Tier0, IL size=235, code size=949]

18: JIT compiled CastHelpers:ChkCastClass(long,Object):Object [Tier0, IL size=22, code size=72]

19: JIT compiled RuntimeHelpers:GetMethodTable(Object):long [Tier0, IL size=11, code size=33]

20: JIT compiled CastHelpers:IsInstanceOfClass(long,Object):Object [Tier0, IL size=97, code size=257]

21: JIT compiled GenericEqualityComparer`1:.ctor():this [Tier0, IL size=7, code size=31]

22: JIT compiled EqualityComparer`1:.ctor():this [Tier0, IL size=7, code size=31]

23: JIT compiled CastHelpers:ChkCastClassSpecial(long,Object):Object [Tier0, IL size=87, code size=246]

24: JIT compiled OrdinalComparer:.ctor(IEqualityComparer`1):this [Tier0, IL size=8, code size=39]

25: JIT compiled NonRandomizedStringEqualityComparer:.ctor(IEqualityComparer`1):this [Tier0, IL size=14, code size=52]

26: JIT compiled StringComparer:get_Ordinal():StringComparer [Tier0, IL size=6, code size=49]

27: JIT compiled OrdinalCaseSensitiveComparer:.cctor() [Tier0, IL size=11, code size=71]

28: JIT compiled OrdinalCaseSensitiveComparer:.ctor():this [Tier0, IL size=8, code size=33]

29: JIT compiled OrdinalComparer:.ctor(bool):this [Tier0, IL size=14, code size=43]

30: JIT compiled StringComparer:.ctor():this [Tier0, IL size=7, code size=31]

31: JIT compiled StringComparer:get_OrdinalIgnoreCase():StringComparer [Tier0, IL size=6, code size=49]

32: JIT compiled OrdinalIgnoreCaseComparer:.cctor() [Tier0, IL size=11, code size=71]

33: JIT compiled OrdinalIgnoreCaseComparer:.ctor():this [Tier0, IL size=8, code size=36]

34: JIT compiled OrdinalIgnoreCaseComparer:.ctor(IEqualityComparer`1):this [Tier0, IL size=8, code size=39]

35: JIT compiled CastHelpers:ChkCastAny(long,Object):Object [Tier0, IL size=38, code size=115]

36: JIT compiled CastHelpers:TryGet(long,long):int [Tier0, IL size=129, code size=308]

37: JIT compiled CastHelpers:TableData(ref):byref [Tier0, IL size=7, code size=31]

38: JIT compiled MemoryMarshal:GetArrayDataReference(ref):byref [Tier0, IL size=7, code size=24]

39: JIT compiled CastHelpers:KeyToBucket(byref,long,long):int [Tier0, IL size=38, code size=87]

40: JIT compiled CastHelpers:HashShift(byref):int [Tier0, IL size=3, code size=16]

41: JIT compiled BitOperations:RotateLeft(long,int):long [Tier0, IL size=17, code size=23]

42: JIT compiled CastHelpers:Element(byref,int):byref [Tier0, IL size=15, code size=33]

43: JIT compiled Volatile:Read(byref):int [Tier0, IL size=6, code size=16]

44: JIT compiled String:Ctor(long):String [Tier0, IL size=57, code size=155]

45: JIT compiled String:wcslen(long):int [Tier0, IL size=7, code size=31]

46: JIT compiled SpanHelpers:IndexOfNullCharacter(byref):int [Tier1, IL size=792, code size=388]

47: JIT compiled String:get_Length():int:this [Tier0, IL size=7, code size=17]

48: JIT compiled Buffer:Memmove(byref,byref,long) [Tier0, IL size=59, code size=102]

49: JIT compiled RuntimeHelpers:IsReferenceOrContainsReferences():bool [Tier0, IL size=2, code size=8]

50: JIT compiled Buffer:Memmove(byref,byref,long) [Tier0, IL size=480, code size=678]

51: JIT compiled Dictionary`2:Add(__Canon,__Canon):this [Tier0, IL size=11, code size=55]

52: JIT compiled Dictionary`2:TryInsert(__Canon,__Canon,ubyte):bool:this [Tier0, IL size=675, code size=2467]

53: JIT compiled OrdinalComparer:GetHashCode(String):int:this [Tier0, IL size=7, code size=37]

54: JIT compiled String:GetNonRandomizedHashCode():int:this [Tier0, IL size=110, code size=290]

55: JIT compiled BitOperations:RotateLeft(int,int):int [Tier0, IL size=17, code size=20]

56: JIT compiled Dictionary`2:GetBucket(int):byref:this [Tier0, IL size=29, code size=90]

57: JIT compiled HashHelpers:FastMod(int,int,long):int [Tier0, IL size=20, code size=70]

58: JIT compiled Type:get_IsValueType():bool:this [Tier0, IL size=7, code size=39]

59: JIT compiled RuntimeType:IsValueTypeImpl():bool:this [Tier0, IL size=54, code size=158]

60: JIT compiled RuntimeType:GetNativeTypeHandle():TypeHandle:this [Tier0, IL size=12, code size=48]

61: JIT compiled TypeHandle:.ctor(long):this [Tier0, IL size=8, code size=25]

62: JIT compiled TypeHandle:get_IsTypeDesc():bool:this [Tier0, IL size=14, code size=38]

63: JIT compiled TypeHandle:AsMethodTable():long:this [Tier0, IL size=7, code size=17]

64: JIT compiled MethodTable:get_IsValueType():bool:this [Tier0, IL size=20, code size=32]

65: JIT compiled GC:KeepAlive(Object) [Tier0, IL size=1, code size=10]

66: JIT compiled Buffer:_Memmove(byref,byref,long) [Tier0, IL size=25, code size=279]

67: JIT compiled Environment:InitializeCommandLineArgs(long,int,long):ref [Tier0, IL size=75, code size=332]

68: JIT compiled Environment:.cctor() [Tier0, IL size=11, code size=163]

69: JIT compiled StartupHookProvider:ProcessStartupHooks() [Tier-0 switched to FullOpts, IL size=365, code size=1053]

70: JIT compiled StartupHookProvider:get_IsSupported():bool [Tier0, IL size=18, code size=60]

71: JIT compiled AppContext:TryGetSwitch(String,byref):bool [Tier0, IL size=97, code size=322]

72: JIT compiled ArgumentException:ThrowIfNullOrEmpty(String,String) [Tier0, IL size=16, code size=53]

73: JIT compiled String:IsNullOrEmpty(String):bool [Tier0, IL size=15, code size=58]

74: JIT compiled AppContext:GetData(String):Object [Tier0, IL size=64, code size=205]

75: JIT compiled ArgumentNullException:ThrowIfNull(Object,String) [Tier0, IL size=10, code size=42]

76: JIT compiled Monitor:Enter(Object,byref) [Tier0, IL size=17, code size=55]

77: JIT compiled Dictionary`2:TryGetValue(__Canon,byref):bool:this [Tier0, IL size=39, code size=97]

78: JIT compiled Dictionary`2:FindValue(__Canon):byref:this [Tier0, IL size=391, code size=1466]

79: JIT compiled EventSource:.cctor() [Tier0, IL size=34, code size=80]

80: JIT compiled EventSource:InitializeIsSupported():bool [Tier0, IL size=18, code size=60]

81: JIT compiled RuntimeEventSource:.ctor():this [Tier0, IL size=55, code size=184]

82: JIT compiled Guid:.ctor(int,short,short,ubyte,ubyte,ubyte,ubyte,ubyte,ubyte,ubyte,ubyte):this [Tier0, IL size=86, code size=132]

83: JIT compiled EventSource:.ctor(Guid,String):this [Tier0, IL size=11, code size=90]

84: JIT compiled EventSource:.ctor(Guid,String,int,ref):this [Tier0, IL size=58, code size=187]

85: JIT compiled EventSource:get_IsSupported():bool [Tier0, IL size=6, code size=11]

86: JIT compiled TraceLoggingEventHandleTable:.ctor():this [Tier0, IL size=20, code size=67]

87: JIT compiled EventSource:ValidateSettings(int):int [Tier0, IL size=37, code size=147]

88: JIT compiled EventSource:Initialize(Guid,String,ref):this [Tier0, IL size=418, code size=1584]

89: JIT compiled Guid:op_Equality(Guid,Guid):bool [Tier0, IL size=10, code size=39]

90: JIT compiled Guid:EqualsCore(byref,byref):bool [Tier0, IL size=132, code size=171]

91: JIT compiled ActivityTracker:get_Instance():ActivityTracker [Tier0, IL size=6, code size=49]

92: JIT compiled ActivityTracker:.cctor() [Tier0, IL size=11, code size=71]

93: JIT compiled ActivityTracker:.ctor():this [Tier0, IL size=7, code size=31]

94: JIT compiled RuntimeEventSource:get_ProviderMetadata():ReadOnlySpan`1:this [Tier0, IL size=13, code size=91]

95: JIT compiled ReadOnlySpan`1:.ctor(long,int):this [Tier0, IL size=51, code size=115]

96: JIT compiled RuntimeHelpers:IsReferenceOrContainsReferences():bool [Tier0, IL size=2, code size=8]

97: JIT compiled ReadOnlySpan`1:get_Length():int:this [Tier0, IL size=7, code size=17]

98: JIT compiled OverrideEventProvider:.ctor(EventSource,int):this [Tier0, IL size=22, code size=68]

99: JIT compiled EventProvider:.ctor(int):this [Tier0, IL size=46, code size=194]

100: JIT compiled EtwEventProvider:.ctor():this [Tier0, IL size=7, code size=31]

101: JIT compiled EventProvider:Register(EventSource):this [Tier0, IL size=48, code size=186]

102: JIT compiled MulticastDelegate:CtorClosed(Object,long):this [Tier0, IL size=23, code size=70]

103: JIT compiled EventProvider:EventRegister(EventSource,EtwEnableCallback):int:this [Tier0, IL size=53, code size=154]

104: JIT compiled EventSource:get_Name():String:this [Tier0, IL size=7, code size=18]

105: JIT compiled EventSource:get_Guid():Guid:this [Tier0, IL size=7, code size=41]

106: JIT compiled EtwEventProvider:System.Diagnostics.Tracing.IEventProvider.EventRegister(EventSource,EtwEnableCallback,long,byref):int:this [Tier0, IL size=19, code size=71]

107: JIT compiled Advapi32:EventRegister(byref,EtwEnableCallback,long,byref):int [Tier0, IL size=53, code size=374]

108: JIT compiled Marshal:GetFunctionPointerForDelegate(__Canon):long [Tier0, IL size=17, code size=54]

109: JIT compiled Marshal:GetFunctionPointerForDelegate(Delegate):long [Tier0, IL size=18, code size=53]

110: JIT compiled EventPipeEventProvider:.ctor():this [Tier0, IL size=18, code size=41]

111: JIT compiled EventListener:get_EventListenersLock():Object [Tier0, IL size=41, code size=157]

112: JIT compiled List`1:.ctor(int):this [Tier0, IL size=47, code size=275]

113: JIT compiled Interlocked:CompareExchange(byref,__Canon,__Canon):__Canon [Tier0, IL size=9, code size=50]

114: JIT compiled NativeRuntimeEventSource:.cctor() [Tier0, IL size=11, code size=71]

115: JIT compiled NativeRuntimeEventSource:.ctor():this [Tier0, IL size=63, code size=184]

116: JIT compiled Guid:.ctor(int,ushort,ushort,ubyte,ubyte,ubyte,ubyte,ubyte,ubyte,ubyte,ubyte):this [Tier0, IL size=88, code size=132]

117: JIT compiled NativeRuntimeEventSource:get_ProviderMetadata():ReadOnlySpan`1:this [Tier0, IL size=13, code size=91]

118: JIT compiled EventPipeEventProvider:System.Diagnostics.Tracing.IEventProvider.EventRegister(EventSource,EtwEnableCallback,long,byref):int:this [Tier0, IL size=44, code size=118]

119: JIT compiled EventPipeInternal:CreateProvider(String,EtwEnableCallback):long [Tier0, IL size=43, code size=320]

120: JIT compiled Utf16StringMarshaller:GetPinnableReference(String):byref [Tier0, IL size=13, code size=50]

121: JIT compiled String:GetPinnableReference():byref:this [Tier0, IL size=7, code size=24]

122: JIT compiled EventListener:AddEventSource(EventSource) [Tier0, IL size=175, code size=560]

123: JIT compiled List`1:get_Count():int:this [Tier0, IL size=7, code size=17]

124: JIT compiled WeakReference`1:.ctor(__Canon):this [Tier0, IL size=9, code size=42]

125: JIT compiled WeakReference`1:.ctor(__Canon,bool):this [Tier0, IL size=15, code size=60]

126: JIT compiled List`1:Add(__Canon):this [Tier0, IL size=60, code size=124]

127: JIT compiled String:op_Inequality(String,String):bool [Tier0, IL size=11, code size=46]

128: JIT compiled String:Equals(String,String):bool [Tier0, IL size=36, code size=114]

129: JIT compiled ReadOnlySpan`1:GetPinnableReference():byref:this [Tier0, IL size=23, code size=57]

130: JIT compiled EventProvider:SetInformation(int,long,int):int:this [Tier0, IL size=38, code size=131]

131: JIT compiled ILStubClass:IL_STUB_PInvoke(long,int,long,int):int [FullOpts, IL size=62, code size=170]

132: JIT compiled Program:Main() [Tier0, IL size=11, code size=36]

133: JIT compiled Console:WriteLine(String) [Tier0, IL size=12, code size=59]

134: JIT compiled Console:get_Out():TextWriter [Tier0, IL size=20, code size=113]

135: JIT compiled Console:.cctor() [Tier0, IL size=11, code size=71]

136: JIT compiled Volatile:Read(byref):__Canon [Tier0, IL size=6, code size=21]

137: JIT compiled Console:<get_Out>g__EnsureInitialized|26_0():TextWriter [Tier0, IL size=63, code size=209]

138: JIT compiled ConsolePal:OpenStandardOutput():Stream [Tier0, IL size=34, code size=130]

139: JIT compiled Console:get_OutputEncoding():Encoding [Tier0, IL size=72, code size=237]

140: JIT compiled ConsolePal:get_OutputEncoding():Encoding [Tier0, IL size=11, code size=200]

141: JIT compiled NativeLibrary:LoadLibraryCallbackStub(String,Assembly,bool,int):long [Tier0, IL size=63, code size=280]

142: JIT compiled EncodingHelper:GetSupportedConsoleEncoding(int):Encoding [Tier0, IL size=53, code size=186]

143: JIT compiled Encoding:GetEncoding(int):Encoding [Tier0, IL size=340, code size=1025]

144: JIT compiled EncodingProvider:GetEncodingFromProvider(int):Encoding [Tier0, IL size=51, code size=232]

145: JIT compiled Encoding:FilterDisallowedEncodings(Encoding):Encoding [Tier0, IL size=29, code size=84]

146: JIT compiled LocalAppContextSwitches:get_EnableUnsafeUTF7Encoding():bool [Tier0, IL size=16, code size=46]

147: JIT compiled LocalAppContextSwitches:GetCachedSwitchValue(String,byref):bool [Tier0, IL size=22, code size=76]

148: JIT compiled LocalAppContextSwitches:GetCachedSwitchValueInternal(String,byref):bool [Tier0, IL size=46, code size=168]

149: JIT compiled LocalAppContextSwitches:GetSwitchDefaultValue(String):bool [Tier0, IL size=32, code size=98]

150: JIT compiled String:op_Equality(String,String):bool [Tier0, IL size=8, code size=39]

151: JIT compiled Encoding:get_Default():Encoding [Tier0, IL size=6, code size=49]

152: JIT compiled Encoding:.cctor() [Tier0, IL size=12, code size=73]

153: JIT compiled UTF8EncodingSealed:.ctor(bool):this [Tier0, IL size=8, code size=40]

154: JIT compiled UTF8Encoding:.ctor(bool):this [Tier0, IL size=14, code size=43]

155: JIT compiled UTF8Encoding:.ctor():this [Tier0, IL size=12, code size=36]

156: JIT compiled Encoding:.ctor(int):this [Tier0, IL size=42, code size=152]

157: JIT compiled UTF8Encoding:SetDefaultFallbacks():this [Tier0, IL size=64, code size=212]

158: JIT compiled EncoderReplacementFallback:.ctor(String):this [Tier0, IL size=110, code size=360]

159: JIT compiled EncoderFallback:.ctor():this [Tier0, IL size=7, code size=31]

160: JIT compiled String:get_Chars(int):ushort:this [Tier0, IL size=29, code size=61]

161: JIT compiled Char:IsSurrogate(ushort):bool [Tier0, IL size=17, code size=43]

162: JIT compiled Char:IsBetween(ushort,ushort,ushort):bool [Tier0, IL size=12, code size=52]

163: JIT compiled DecoderReplacementFallback:.ctor(String):this [Tier0, IL size=110, code size=360]

164: JIT compiled DecoderFallback:.ctor():this [Tier0, IL size=7, code size=31]

165: JIT compiled Encoding:get_CodePage():int:this [Tier0, IL size=7, code size=17]

166: JIT compiled Encoding:get_UTF8():Encoding [Tier0, IL size=6, code size=49]

167: JIT compiled UTF8Encoding:.cctor() [Tier0, IL size=12, code size=76]

168: JIT compiled Volatile:Write(byref,__Canon) [Tier0, IL size=6, code size=32]

169: JIT compiled ConsolePal:GetStandardFile(int,int,bool):Stream [Tier0, IL size=50, code size=183]

170: JIT compiled ConsolePal:get_InvalidHandleValue():long [Tier0, IL size=7, code size=41]

171: JIT compiled IntPtr:.ctor(int):this [Tier0, IL size=9, code size=25]

172: JIT compiled ConsolePal:ConsoleHandleIsWritable(long):bool [Tier0, IL size=26, code size=68]

173: JIT compiled Kernel32:WriteFile(long,long,int,byref,long):int [Tier0, IL size=46, code size=294]

174: JIT compiled Marshal:SetLastSystemError(int) [Tier0, IL size=7, code size=40]

175: JIT compiled Marshal:GetLastSystemError():int [Tier0, IL size=6, code size=34]

176: JIT compiled WindowsConsoleStream:.ctor(long,int,bool):this [Tier0, IL size=37, code size=90]

177: JIT compiled ConsoleStream:.ctor(int):this [Tier0, IL size=31, code size=71]

178: JIT compiled Stream:.ctor():this [Tier0, IL size=7, code size=31]

179: JIT compiled MarshalByRefObject:.ctor():this [Tier0, IL size=7, code size=31]

180: JIT compiled Kernel32:GetFileType(long):int [Tier0, IL size=27, code size=217]

181: JIT compiled Console:CreateOutputWriter(Stream):TextWriter [Tier0, IL size=50, code size=230]

182: JIT compiled Stream:.cctor() [Tier0, IL size=11, code size=71]

183: JIT compiled NullStream:.ctor():this [Tier0, IL size=7, code size=31]

184: JIT compiled EncodingExtensions:RemovePreamble(Encoding):Encoding [Tier0, IL size=25, code size=118]

185: JIT compiled UTF8EncodingSealed:get_Preamble():ReadOnlySpan`1:this [Tier0, IL size=24, code size=99]

186: JIT compiled UTF8Encoding:get_PreambleSpan():ReadOnlySpan`1 [Tier0, IL size=12, code size=87]

187: JIT compiled ConsoleEncoding:.ctor(Encoding):this [Tier0, IL size=14, code size=52]

188: JIT compiled Encoding:.ctor():this [Tier0, IL size=8, code size=33]

189: JIT compiled Encoding:SetDefaultFallbacks():this [Tier0, IL size=23, code size=65]

190: JIT compiled EncoderFallback:get_ReplacementFallback():EncoderFallback [Tier0, IL size=6, code size=49]

191: JIT compiled EncoderReplacementFallback:.cctor() [Tier0, IL size=11, code size=71]

192: JIT compiled EncoderReplacementFallback:.ctor():this [Tier0, IL size=12, code size=44]

193: JIT compiled DecoderFallback:get_ReplacementFallback():DecoderFallback [Tier0, IL size=6, code size=49]

194: JIT compiled DecoderReplacementFallback:.cctor() [Tier0, IL size=11, code size=71]

195: JIT compiled DecoderReplacementFallback:.ctor():this [Tier0, IL size=12, code size=44]

196: JIT compiled StreamWriter:.ctor(Stream,Encoding,int,bool):this [Tier0, IL size=201, code size=564]

197: JIT compiled Task:get_CompletedTask():Task [Tier0, IL size=6, code size=49]

198: JIT compiled Task:.cctor() [Tier0, IL size=76, code size=316]

199: JIT compiled TaskFactory:.ctor():this [Tier0, IL size=7, code size=31]

200: JIT compiled Task`1:.ctor(bool,VoidTaskResult,int,CancellationToken):this [Tier0, IL size=21, code size=75]

201: JIT compiled Task:.ctor(bool,int,CancellationToken):this [Tier0, IL size=70, code size=181]

202: JIT compiled <>c:.cctor() [Tier0, IL size=11, code size=71]

203: JIT compiled <>c:.ctor():this [Tier0, IL size=7, code size=31]

204: JIT compiled TextWriter:.ctor(IFormatProvider):this [Tier0, IL size=36, code size=124]

205: JIT compiled TextWriter:.cctor() [Tier0, IL size=26, code size=108]

206: JIT compiled NullTextWriter:.ctor():this [Tier0, IL size=7, code size=31]

207: JIT compiled TextWriter:.ctor():this [Tier0, IL size=29, code size=103]

208: JIT compiled String:ToCharArray():ref:this [Tier0, IL size=52, code size=173]

209: JIT compiled MemoryMarshal:GetArrayDataReference(ref):byref [Tier0, IL size=7, code size=24]

210: JIT compiled ConsoleStream:get_CanWrite():bool:this [Tier0, IL size=7, code size=18]

211: JIT compiled ConsoleEncoding:GetEncoder():Encoder:this [Tier0, IL size=12, code size=57]

212: JIT compiled UTF8Encoding:GetEncoder():Encoder:this [Tier0, IL size=7, code size=63]

213: JIT compiled EncoderNLS:.ctor(Encoding):this [Tier0, IL size=37, code size=102]

214: JIT compiled Encoder:.ctor():this [Tier0, IL size=7, code size=31]

215: JIT compiled Encoding:get_EncoderFallback():EncoderFallback:this [Tier0, IL size=7, code size=18]

216: JIT compiled EncoderNLS:Reset():this [Tier0, IL size=24, code size=92]

217: JIT compiled ConsoleStream:get_CanSeek():bool:this [Tier0, IL size=2, code size=12]

218: JIT compiled StreamWriter:set_AutoFlush(bool):this [Tier0, IL size=25, code size=72]

219: JIT compiled StreamWriter:CheckAsyncTaskInProgress():this [Tier0, IL size=19, code size=47]

220: JIT compiled Task:get_IsCompleted():bool:this [Tier0, IL size=16, code size=40]

221: JIT compiled Task:IsCompletedMethod(int):bool [Tier0, IL size=11, code size=25]

222: JIT compiled StreamWriter:Flush(bool,bool):this [Tier0, IL size=272, code size=1127]

223: JIT compiled StreamWriter:ThrowIfDisposed():this [Tier0, IL size=15, code size=43]

224: JIT compiled Encoding:get_Preamble():ReadOnlySpan`1:this [Tier0, IL size=12, code size=70]

225: JIT compiled ConsoleEncoding:GetPreamble():ref:this [Tier0, IL size=6, code size=27]

226: JIT compiled Array:Empty():ref [Tier0, IL size=6, code size=49]

227: JIT compiled EmptyArray`1:.cctor() [Tier0, IL size=12, code size=52]

228: JIT compiled ReadOnlySpan`1:op_Implicit(ref):ReadOnlySpan`1 [Tier0, IL size=7, code size=79]

229: JIT compiled ReadOnlySpan`1:.ctor(ref):this [Tier0, IL size=33, code size=81]

230: JIT compiled MemoryMarshal:GetArrayDataReference(ref):byref [Tier0, IL size=7, code size=24]

231: JIT compiled ConsoleEncoding:GetMaxByteCount(int):int:this [Tier0, IL size=13, code size=63]

232: JIT compiled UTF8EncodingSealed:GetMaxByteCount(int):int:this [Tier0, IL size=20, code size=50]

233: JIT compiled Span`1:.ctor(long,int):this [Tier0, IL size=51, code size=115]

234: JIT compiled ReadOnlySpan`1:.ctor(ref,int,int):this [Tier0, IL size=65, code size=147]

235: JIT compiled Encoder:GetBytes(ReadOnlySpan`1,Span`1,bool):int:this [Tier0, IL size=44, code size=234]

236: JIT compiled MemoryMarshal:GetNonNullPinnableReference(ReadOnlySpan`1):byref [Tier0, IL size=30, code size=54]

237: JIT compiled ReadOnlySpan`1:get_Length():int:this [Tier0, IL size=7, code size=17]

238: JIT compiled MemoryMarshal:GetNonNullPinnableReference(Span`1):byref [Tier0, IL size=30, code size=54]

239: JIT compiled Span`1:get_Length():int:this [Tier0, IL size=7, code size=17]

240: JIT compiled EncoderNLS:GetBytes(long,int,long,int,bool):int:this [Tier0, IL size=92, code size=279]

241: JIT compiled ArgumentNullException:ThrowIfNull(long,String) [Tier0, IL size=12, code size=45]

242: JIT compiled Encoding:GetBytes(long,int,long,int,EncoderNLS):int:this [Tier0, IL size=57, code size=187]

243: JIT compiled EncoderNLS:get_HasLeftoverData():bool:this [Tier0, IL size=35, code size=105]

244: JIT compiled UTF8Encoding:GetBytesFast(long,int,long,int,byref):int:this [Tier0, IL size=33, code size=119]

245: JIT compiled Utf8Utility:TranscodeToUtf8(long,int,long,int,byref,byref):int [Tier0, IL size=1446, code size=3208]

246: JIT compiled Math:Min(int,int):int [Tier0, IL size=8, code size=28]

247: JIT compiled ASCIIUtility:NarrowUtf16ToAscii(long,long,long):long [Tier0, IL size=490, code size=1187]

248: JIT compiled WindowsConsoleStream:Flush():this [Tier0, IL size=26, code size=56]

249: JIT compiled ConsoleStream:Flush():this [Tier0, IL size=1, code size=10]

250: JIT compiled TextWriter:Synchronized(TextWriter):TextWriter [Tier0, IL size=28, code size=121]

251: JIT compiled SyncTextWriter:.ctor(TextWriter):this [Tier0, IL size=14, code size=52]

252: JIT compiled SyncTextWriter:WriteLine(String):this [Tier0, IL size=13, code size=140]

253: JIT compiled StreamWriter:WriteLine(String):this [Tier0, IL size=20, code size=110]

254: JIT compiled String:op_Implicit(String):ReadOnlySpan`1 [Tier0, IL size=31, code size=171]

255: JIT compiled String:GetRawStringData():byref:this [Tier0, IL size=7, code size=24]

256: JIT compiled ReadOnlySpan`1:.ctor(byref,int):this [Tier0, IL size=15, code size=39]

257: JIT compiled StreamWriter:WriteSpan(ReadOnlySpan`1,bool):this [Tier0, IL size=368, code size=1036]

258: JIT compiled MemoryMarshal:GetReference(ReadOnlySpan`1):byref [Tier0, IL size=8, code size=17]

259: JIT compiled Buffer:MemoryCopy(long,long,long,long) [Tier0, IL size=21, code size=83]

260: JIT compiled Unsafe:ReadUnaligned(long):long [Tier0, IL size=10, code size=17]

261: JIT compiled ASCIIUtility:AllCharsInUInt64AreAscii(long):bool [Tier0, IL size=16, code size=38]

262: JIT compiled ASCIIUtility:NarrowFourUtf16CharsToAsciiAndWriteToBuffer(byref,long) [Tier0, IL size=107, code size=171]

263: JIT compiled Unsafe:WriteUnaligned(byref,int) [Tier0, IL size=11, code size=22]

264: JIT compiled Unsafe:ReadUnaligned(long):int [Tier0, IL size=10, code size=16]

265: JIT compiled ASCIIUtility:AllCharsInUInt32AreAscii(int):bool [Tier0, IL size=11, code size=25]

266: JIT compiled ASCIIUtility:NarrowTwoUtf16CharsToAsciiAndWriteToBuffer(byref,int) [Tier0, IL size=24, code size=35]

267: JIT compiled Span`1:Slice(int,int):Span`1:this [Tier0, IL size=39, code size=135]

268: JIT compiled Span`1:.ctor(byref,int):this [Tier0, IL size=15, code size=39]

269: JIT compiled Span`1:op_Implicit(Span`1):ReadOnlySpan`1 [Tier0, IL size=19, code size=90]

270: JIT compiled ReadOnlySpan`1:.ctor(byref,int):this [Tier0, IL size=15, code size=39]

271: JIT compiled WindowsConsoleStream:Write(ReadOnlySpan`1):this [Tier0, IL size=35, code size=149]

272: JIT compiled WindowsConsoleStream:WriteFileNative(long,ReadOnlySpan`1,bool):int [Tier0, IL size=107, code size=272]

273: JIT compiled ReadOnlySpan`1:get_IsEmpty():bool:this [Tier0, IL size=10, code size=24]

Hello, world!

274: JIT compiled AppContext:OnProcessExit() [Tier0, IL size=43, code size=161]

275: JIT compiled AssemblyLoadContext:OnProcessExit() [Tier0, IL size=101, code size=442]

276: JIT compiled EventListener:DisposeOnShutdown() [Tier0, IL size=150, code size=618]

277: JIT compiled List`1:.ctor():this [Tier0, IL size=18, code size=133]

278: JIT compiled List`1:.cctor() [Tier0, IL size=12, code size=129]

279: JIT compiled List`1:GetEnumerator():Enumerator:this [Tier0, IL size=7, code size=162]

280: JIT compiled Enumerator:.ctor(List`1):this [Tier0, IL size=39, code size=64]

281: JIT compiled Enumerator:MoveNext():bool:this [Tier0, IL size=81, code size=159]

282: JIT compiled Enumerator:get_Current():__Canon:this [Tier0, IL size=7, code size=22]

283: JIT compiled WeakReference`1:TryGetTarget(byref):bool:this [Tier0, IL size=24, code size=66]

284: JIT compiled List`1:AddWithResize(__Canon):this [Tier0, IL size=39, code size=85]

285: JIT compiled List`1:Grow(int):this [Tier0, IL size=53, code size=121]

286: JIT compiled List`1:set_Capacity(int):this [Tier0, IL size=86, code size=342]

287: JIT compiled CastHelpers:StelemRef_Helper(byref,long,Object) [Tier0, IL size=34, code size=104]

288: JIT compiled CastHelpers:StelemRef_Helper_NoCacheLookup(byref,long,Object) [Tier0, IL size=26, code size=111]

289: JIT compiled Enumerator:MoveNextRare():bool:this [Tier0, IL size=57, code size=80]

290: JIT compiled Enumerator:Dispose():this [Tier0, IL size=1, code size=14]

291: JIT compiled EventSource:Dispose():this [Tier0, IL size=14, code size=54]

292: JIT compiled EventSource:Dispose(bool):this [Tier0, IL size=124, code size=236]

293: JIT compiled EventProvider:Dispose():this [Tier0, IL size=14, code size=54]

294: JIT compiled EventProvider:Dispose(bool):this [Tier0, IL size=90, code size=230]

295: JIT compiled EventProvider:EventUnregister(long):this [Tier0, IL size=14, code size=50]

296: JIT compiled EtwEventProvider:System.Diagnostics.Tracing.IEventProvider.EventUnregister(long):int:this [Tier0, IL size=7, code size=181]

297: JIT compiled GC:SuppressFinalize(Object) [Tier0, IL size=18, code size=53]

298: JIT compiled EventPipeEventProvider:System.Diagnostics.Tracing.IEventProvider.EventUnregister(long):int:this [Tier0, IL size=13, code size=187]

With that out of the way, let’s move on to actual performance improvements, starting with on-stack replacement.

On-Stack Replacement

On-stack replacement (OSR) is one of the coolest features to hit the JIT in .NET 7. But to really understand OSR, we first need to understand tiered compilation, so a quick recap…

One of the issues a managed environment with a JIT compiler has to deal with is tradeoffs between startup and throughput. Historically, the job of an optimizing compiler is to, well, optimize, in order to enable the best possible throughput of the application or service once running. But such optimization takes analysis, takes time, and performing all of that work then leads to increased startup time, as all of the code on the startup path (e.g. all of the code that needs to be run before a web server can serve the first request) needs to be compiled. So a JIT compiler needs to make tradeoffs: better throughput at the expense of longer startup time, or better startup time at the expense of decreased throughput. For some kinds of apps and services, the tradeoff is an easy call, e.g. if your service starts up once and then runs for days, several extra seconds of startup time doesn’t matter, or if you’re a console application that’s going to do a quick computation and exit, startup time is all that matters. But how can the JIT know which scenario it’s in, and do we really want every developer having to know about these kinds of settings and tradeoffs and configure every one of their applications accordingly? One answer to this has been ahead-of-time compilation, which has taken various forms in .NET. For example, all of the core libraries are “crossgen”’d, meaning they’ve been run through a tool that produces the previously mentioned R2R format, yielding binaries that contain assembly code that needs only minor tweaks to actually execute; not every method can have code generated for it, but enough that it significantly reduces startup time. Of course, such approaches have their own downsides, e.g. one of the promises of a JIT compiler is it can take advantage of knowledge of the current machine / process in order to best optimize, so for example the R2R images have to assume a certain baseline instruction set (e.g. what vectorizing instructions are available) whereas the JIT can see what’s actually available and use the best. “Tiered compilation” provides another answer, one that’s usable with or without these other ahead-of-time (AOT) compilation solutions.

Tiered compilation enables the JIT to have its proverbial cake and eat it, too. The idea is simple: allow the JIT to compile the same code multiple times. The first time, the JIT can use as few optimizations as make sense (a handful of optimizations can actually make the JIT’s own throughput faster, so those still make sense to apply), producing fairly unoptimized assembly code but doing so really quickly. And when it does so, it can add some instrumentation into the assembly to track how often the methods are called. As it turns out, many functions used on a startup path are invoked once or maybe only a handful of times, and it would take more time to optimize them than it does to just execute them unoptimized. Then, when the method’s instrumentation triggers some threshold, for example a method having been executed 30 times, a work item gets queued to recompile that method, but this time with all the optimizations the JIT can throw at it. This is lovingly referred to as “tiering up.” Once that recompilation has completed, call sites to the method are patched with the address of the newly highly optimized assembly code, and future invocations will then take the fast path. So, we get faster startup and faster sustained throughput. At least, that’s the hope.

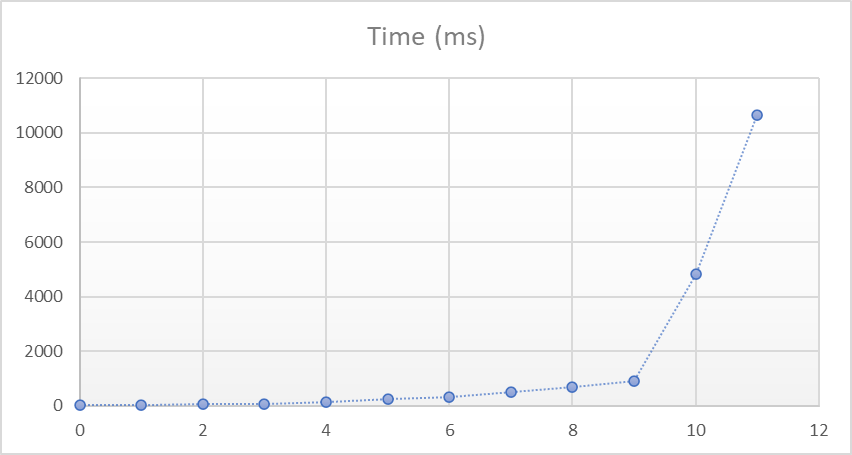
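To make the “tiering up” flow a little more concrete, here’s a toy model in plain C#. It’s only a sketch of the idea, not how the runtime implements it: the `TieredMethod` type and its members are names I made up for illustration, the “call site” is just a delegate field, and the swap happens synchronously and without any thread safety, whereas the real JIT queues the recompilation as background work and patches actual machine-code call sites.

```csharp
using System;

// Toy model of tiering up: calls go through a slot that starts out pointing at
// a slow, call-counting "tier-0" body and is re-pointed at a fast "tier-1" body
// once a call-count threshold is reached. Hypothetical type; not thread-safe.
public sealed class TieredMethod
{
    public const int CallCountThreshold = 30; // the example threshold from the text

    private readonly Func<int, int> _optimized;
    private Func<int, int> _entryPoint;       // stands in for the patched call sites
    private int _callCount;

    public TieredMethod(Func<int, int> unoptimized, Func<int, int> optimized)
    {
        _optimized = optimized;
        // Tier-0 wrapper: count the call, then run the slow body.
        _entryPoint = x =>
        {
            if (++_callCount == CallCountThreshold)
                _entryPoint = _optimized;     // "patch" future calls to the fast body
            return unoptimized(x);
        };
    }

    public bool IsTier1 => ReferenceEquals(_entryPoint, _optimized);

    public int Invoke(int x) => _entryPoint(x);
}

public static class Program
{
    public static void Main()
    {
        var square = new TieredMethod(
            // Deliberately slow body (valid for non-negative x).
            unoptimized: x => { int r = 0; for (int i = 0; i < x; i++) r += x; return r; },
            optimized: x => x * x);

        for (int call = 1; call <= 30; call++)
        {
            square.Invoke(5);
            if (call >= 29)
                Console.WriteLine($"after call {call}: tier-1 = {square.IsTier1}");
        }
    }
}
```

Notice that a method invoked fewer than 30 times never pays for the “optimized” version at all, which is exactly the startup win described above.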

A problem, however, is methods that don’t fit this mold. While it’s certainly the case that many performance-sensitive methods are relatively quick and executed many, many, many times, there’s also a large number of performance-sensitive methods that are executed just a handful of times, or maybe even only once, but that take a very long time to execute, maybe even the duration of the whole process: methods with loops. As a result, by default tiered compilation hasn’t applied to loops, though it can be enabled by setting the DOTNET_TC_QuickJitForLoops environment variable to 1. We can see the effect of this by trying this simple console app with .NET 6. With the default settings, run this app:

    using System;

    class Program
    {
        static void Main()
        {
            var sw = new System.Diagnostics.Stopwatch();
            while (true)
            {
                sw.Restart();
                for (int trial = 0; trial < 10_000; trial++)
                {
                    int count = 0;
                    for (int i = 0; i < char.MaxValue; i++)
                        if (IsAsciiDigit((char)i))
                            count++;
                }
                sw.Stop();
                Console.WriteLine(sw.Elapsed);
            }

            // Local function, trivially inlineable once the JIT optimizes Main.
            static bool IsAsciiDigit(char c) => (uint)(c - '0') <= 9;
        }
    }

I get numbers printed out like:

00:00:00.5734352

00:00:00.5526667

00:00:00.5675267

00:00:00.5588724

00:00:00.5616028

Now, try setting DOTNET_TC_QuickJitForLoops to 1. When I then run it again, I get numbers like this:

00:00:01.2841397

00:00:01.2693485

00:00:01.2755646

00:00:01.2656678

00:00:01.2679925

In other words, with DOTNET_TC_QuickJitForLoops enabled, it’s taking roughly 2.3x as long as without (the default in .NET 6). That’s because this Main method never gets optimizations applied to it. By setting DOTNET_TC_QuickJitForLoops to 1, we’re saying “JIT, please apply tiering to methods with loops as well,” but this method with a loop is only ever invoked once, so for the duration of the process it ends up remaining at “tier-0,” aka unoptimized. Now, let’s try the same thing with .NET 7. Regardless of whether that environment variable is set, I again get numbers like this:

00:00:00.5528889

00:00:00.5562563

00:00:00.5622086

00:00:00.5668220

00:00:00.5589112

but importantly, this method was still participating in tiering. In fact, we can get confirmation of that by using the aforementioned DOTNET_JitDisasmSummary=1 environment variable. When I set that and run again, I see these lines in the output:

4: JIT compiled Program:Main() [Tier0, IL size=83, code size=319]

...

6: JIT compiled Program:Main() [Tier1-OSR @0x27, IL size=83, code size=380]

highlighting that Main was indeed compiled twice. How is that possible? On-stack replacement.
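If you want to repeat these runs without changing the environment of your whole shell, one option is a tiny launcher that sets the variables only for the child process. This is just a sketch under a few assumptions: the `JitExperiment` class and its `Build` method are names I invented, `dotnet` is assumed to be on the PATH, and the app is assumed to have been built to a .dll you pass on the command line. The two environment variable names are the ones used above.

```csharp
using System;
using System.Diagnostics;

public static class JitExperiment
{
    // Builds (but does not start) a "dotnet <appDll>" launch whose environment
    // carries the JIT knobs discussed in the text. Hypothetical helper.
    public static ProcessStartInfo Build(string appDll, bool quickJitForLoops, bool disasmSummary)
    {
        var psi = new ProcessStartInfo("dotnet") { UseShellExecute = false };
        psi.ArgumentList.Add(appDll);

        // These settings affect only the child process, not the launcher itself.
        if (quickJitForLoops) psi.Environment["DOTNET_TC_QuickJitForLoops"] = "1";
        if (disasmSummary) psi.Environment["DOTNET_JitDisasmSummary"] = "1";
        return psi;
    }

    public static void Main(string[] args)
    {
        if (args.Length == 0)
        {
            Console.WriteLine("usage: <launcher> <path-to-app.dll>");
            return;
        }

        // The child inherits this console, so its output shows up here.
        using var child = Process.Start(Build(args[0], quickJitForLoops: true, disasmSummary: true));
        child?.WaitForExit();
    }
}
```

Keep in mind the sample app above loops forever, so the launcher will wait until you stop it (e.g. with Ctrl+C).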

The idea behind on-stack replacement is a method can be replaced not just between invocations but even while it’s executing, while it’s “on the stack.” In addition to the tier-0 code being instrumented for call counts, loops are also instrumented for iteration counts. When the iterations surpass a certain limit, the JIT compiles a new highly optimized version of that method, transfers all the local/register state from the current invocation to the new invocation, and then jumps to the appropriate location in the new method. We can see this in action by using the previously discussed DOTNET_JitDisasm environment variable. Set that to Program:* in order to see the assembly code generated for all of the methods in the Program class, and then run the app again. You should see output like the following:

; Assembly listing for method Program:Main()

; Emitting BLENDED_CODE for X64 CPU with AVX - Windows

; Tier-0 compilation

; MinOpts code

; rbp based frame

; partially interruptible

G_M000_IG01: ;; offset=0000H

55 push rbp

4881EC80000000 sub rsp, 128

488DAC2480000000 lea rbp, [rsp+80H]

C5D857E4 vxorps xmm4, xmm4

C5F97F65B0 vmovdqa xmmword ptr [rbp-50H], xmm4

33C0 xor eax, eax

488945C0 mov qword ptr [rbp-40H], rax

G_M000_IG02: ;; offset=001FH

48B9002F0B50FC7F0000 mov rcx, 0x7FFC500B2F00

E8721FB25F call CORINFO_HELP_NEWSFAST

488945B0 mov gword ptr [rbp-50H], rax

488B4DB0 mov rcx, gword ptr [rbp-50H]

FF1544C70D00 call [Stopwatch:.ctor():this]

488B4DB0 mov rcx, gword ptr [rbp-50H]

48894DC0 mov gword ptr [rbp-40H], rcx

C745A8E8030000 mov dword ptr [rbp-58H], 0x3E8

G_M000_IG03: ;; offset=004BH

8B4DA8 mov ecx, dword ptr [rbp-58H]

FFC9 dec ecx

894DA8 mov dword ptr [rbp-58H], ecx

837DA800 cmp dword ptr [rbp-58H], 0

7F0E jg SHORT G_M000_IG05

G_M000_IG04: ;; offset=0059H

488D4DA8 lea rcx, [rbp-58H]

BA06000000 mov edx, 6

E8B985AB5F call CORINFO_HELP_PATCHPOINT

G_M000_IG05: ;; offset=0067H

488B4DC0 mov rcx, gword ptr [rbp-40H]

3909 cmp dword ptr [rcx], ecx

FF1585C70D00 call [Stopwatch:Restart():this]

33C9 xor ecx, ecx

894DBC mov dword ptr [rbp-44H], ecx

33C9 xor ecx, ecx

894DB8 mov dword ptr [rbp-48H], ecx

EB20 jmp SHORT G_M000_IG08

G_M000_IG06: ;; offset=007FH

8B4DB8 mov ecx, dword ptr [rbp-48H]

0FB7C9 movzx rcx, cx

FF152DD40B00 call [Program:<Main>g__IsAsciiDigit|0_0(ushort):bool]

85C0 test eax, eax

7408 je SHORT G_M000_IG07

8B4DBC mov ecx, dword ptr [rbp-44H]

FFC1 inc ecx

894DBC mov dword ptr [rbp-44H], ecx

G_M000_IG07: ;; offset=0097H

8B4DB8 mov ecx, dword ptr [rbp-48H]

FFC1 inc ecx

894DB8 mov dword ptr [rbp-48H], ecx

G_M000_IG08: ;; offset=009FH

8B4DA8 mov ecx, dword ptr [rbp-58H]

FFC9 dec ecx

894DA8 mov dword ptr [rbp-58H], ecx

837DA800 cmp dword ptr [rbp-58H], 0

7F0E jg SHORT G_M000_IG10

G_M000_IG09: ;; offset=00ADH

488D4DA8 lea rcx, [rbp-58H]

BA23000000 mov edx, 35

E86585AB5F call CORINFO_HELP_PATCHPOINT

G_M000_IG10: ;; offset=00BBH

817DB800CA9A3B cmp dword ptr [rbp-48H], 0x3B9ACA00

7CBB jl SHORT G_M000_IG06

488B4DC0 mov rcx, gword ptr [rbp-40H]

3909 cmp dword ptr [rcx], ecx

FF1570C70D00 call [Stopwatch:get_ElapsedMilliseconds():long:this]

488BC8 mov rcx, rax

FF1507D00D00 call [Console:WriteLine(long)]

E96DFFFFFF jmp G_M000_IG03

; Total bytes of code 222

; Assembly listing for method Program:<Main>g__IsAsciiDigit|0_0(ushort):bool

; Emitting BLENDED_CODE for X64 CPU with AVX - Windows

; Tier-0 compilation

; MinOpts code

; rbp based frame

; partially interruptible

G_M000_IG01: ;; offset=0000H

55 push rbp

488BEC mov rbp, rsp

894D10 mov dword ptr [rbp+10H], ecx

G_M000_IG02: ;; offset=0007H

8B4510 mov eax, dword ptr [rbp+10H]

0FB7C0 movzx rax, ax

83C0D0 add eax, -48

83F809 cmp eax, 9

0F96C0 setbe al

0FB6C0 movzx rax, al

G_M000_IG03: ;; offset=0019H

5D pop rbp

C3 ret

A few relevant things to notice here. First, the comments at the top highlight how this code was compiled:

; Tier-0 compilation

; MinOpts code

So, we know this is the initial version (“Tier-0”) of the method compiled with minimal optimization (“MinOpts”). Second, note this line of the assembly:

FF152DD40B00 call [Program:<Main>g__IsAsciiDigit|0_0(ushort):bool]

Our IsAsciiDigit helper method is trivially inlineable, but it’s not getting inlined; instead, the assembly has a call to it, and indeed we can see below the generated code (also “MinOpts”) for IsAsciiDigit. Why? Because inlining is an optimization (a really important one) that’s disabled as part of tier-0 (because the analysis for doing inlining well is also quite costly). Third, we can see the code the JIT is outputting to instrument this method. This is a bit more involved, but I’ll point out the relevant parts. First, we see:

C745A8E8030000 mov dword ptr [rbp-58H], 0x3E8

That 0x3E8 is the hex value for the decimal 1,000, which is the default number of iterations a loop needs to iterate before the JIT will generate the optimized version of the method (this is configurable via the DOTNET_TC_OnStackReplacement_InitialCounter environment variable). So we see 1,000 being stored into this stack location. Then a bit later in the method we see this:

G_M000_IG03: ;; offset=004BH

8B4DA8 mov ecx, dword ptr [rbp-58H]

FFC9 dec ecx

894DA8 mov dword ptr [rbp-58H], ecx

837DA800 cmp dword ptr [rbp-58H], 0

7F0E jg SHORT G_M000_IG05

G_M000_IG04: ;; offset=0059H

488D4DA8 lea rcx, [rbp-58H]

BA06000000 mov edx, 6

E8B985AB5F call CORINFO_HELP_PATCHPOINT

G_M000_IG05: ;; offset=0067H

The generated code is loading that counter into the ecx register, decrementing it, storing it back, and then seeing whether the counter dropped to 0. If it didn’t, the code skips to G_M000_IG05, which is the label for the actual code in the rest of the loop. But if the counter did drop to 0, the code proceeds to store relevant state into the rcx and edx registers and then calls the CORINFO_HELP_PATCHPOINT helper method. That helper is responsible for triggering the creation of the optimized method if it doesn’t yet exist, fixing up all appropriate tracking state, and jumping to the new method. And indeed, if you look again at your console output from running the program, you’ll see yet another output for the Main method:

; Assembly listing for method Program:Main()

; Emitting BLENDED_CODE for X64 CPU with AVX - Windows

; Tier-1 compilation

; OSR variant for entry point 0x23

; optimized code

; rsp based frame

; fully interruptible

; No PGO data

; 1 inlinees with PGO data; 8 single block inlinees; 0 inlinees without PGO data

G_M000_IG01: ;; offset=0000H

4883EC58 sub rsp, 88

4889BC24D8000000 mov qword ptr [rsp+D8H], rdi

4889B424D0000000 mov qword ptr [rsp+D0H], rsi

48899C24C8000000 mov qword ptr [rsp+C8H], rbx

C5F877 vzeroupper

33C0 xor eax, eax

4889442428 mov qword ptr [rsp+28H], rax

4889442420 mov qword ptr [rsp+20H], rax

488B9C24A0000000 mov rbx, gword ptr [rsp+A0H]

8BBC249C000000 mov edi, dword ptr [rsp+9CH]

8BB42498000000 mov esi, dword ptr [rsp+98H]

G_M000_IG02: ;; offset=0041H

EB45 jmp SHORT G_M000_IG05

align [0 bytes for IG06]

G_M000_IG03: ;; offset=0043H

33C9 xor ecx, ecx

488B9C24A0000000 mov rbx, gword ptr [rsp+A0H]

48894B08 mov qword ptr [rbx+08H], rcx

488D4C2428 lea rcx, [rsp+28H]

48B87066E68AFD7F0000 mov rax, 0x7FFD8AE66670

G_M000_IG04: ;; offset=0060H

FFD0 call rax ; Kernel32:QueryPerformanceCounter(long):int

488B442428 mov rax, qword ptr [rsp+28H]

488B9C24A0000000 mov rbx, gword ptr [rsp+A0H]

48894310 mov qword ptr [rbx+10H], rax

C6431801 mov byte ptr [rbx+18H], 1

33FF xor edi, edi

33F6 xor esi, esi

833D92A1E55F00 cmp dword ptr [(reloc 0x7ffcafe1ae34)], 0

0F85CA000000 jne G_M000_IG13

G_M000_IG05: ;; offset=0088H

81FE00CA9A3B cmp esi, 0x3B9ACA00

7D17 jge SHORT G_M000_IG09

G_M000_IG06: ;; offset=0090H

0FB7CE movzx rcx, si

83C1D0 add ecx, -48

83F909 cmp ecx, 9

7702 ja SHORT G_M000_IG08

G_M000_IG07: ;; offset=009BH

FFC7 inc edi

G_M000_IG08: ;; offset=009DH

FFC6 inc esi

81FE00CA9A3B cmp esi, 0x3B9ACA00

7CE9 jl SHORT G_M000_IG06

G_M000_IG09: ;; offset=00A7H

488B6B08 mov rbp, qword ptr [rbx+08H]

48899C24A0000000 mov gword ptr [rsp+A0H], rbx

807B1800 cmp byte ptr [rbx+18H], 0

7436 je SHORT G_M000_IG12

G_M000_IG10: ;; offset=00B9H

488D4C2420 lea rcx, [rsp+20H]

48B87066E68AFD7F0000 mov rax, 0x7FFD8AE66670

G_M000_IG11: ;; offset=00C8H

FFD0 call rax ; Kernel32:QueryPerformanceCounter(long):int

488B4C2420 mov rcx, qword ptr [rsp+20H]

488B9C24A0000000 mov rbx, gword ptr [rsp+A0H]

482B4B10 sub rcx, qword ptr [rbx+10H]

4803E9 add rbp, rcx

833D2FA1E55F00 cmp dword ptr [(reloc 0x7ffcafe1ae34)], 0

48899C24A0000000 mov gword ptr [rsp+A0H], rbx

756D jne SHORT G_M000_IG14

G_M000_IG12: ;; offset=00EFH

C5F857C0 vxorps xmm0, xmm0

C4E1FB2AC5 vcvtsi2sd xmm0, rbp

C5FB11442430 vmovsd qword ptr [rsp+30H], xmm0

48B9F04BF24FFC7F0000 mov rcx, 0x7FFC4FF24BF0

BAE7070000 mov edx, 0x7E7

E82E1FB25F call CORINFO_HELP_GETSHARED_NONGCSTATIC_BASE

C5FB10442430 vmovsd xmm0, qword ptr [rsp+30H]

C5FB5905E049F6FF vmulsd xmm0, xmm0, qword ptr [(reloc 0x7ffc4ff25720)]

C4E1FB2CD0 vcvttsd2si rdx, xmm0

48B94B598638D6C56D34 mov rcx, 0x346DC5D63886594B

488BC1 mov rax, rcx

48F7EA imul rdx:rax, rdx

488BCA mov rcx, rdx

48C1E93F shr rcx, 63

48C1FA0B sar rdx, 11

4803CA add rcx, rdx

FF1567CE0D00 call [Console:WriteLine(long)]

E9F5FEFFFF jmp G_M000_IG03

G_M000_IG13: ;; offset=014EH

E8DDCBAC5F call CORINFO_HELP_POLL_GC

E930FFFFFF jmp G_M000_IG05

G_M000_IG14: ;; offset=0158H

E8D3CBAC5F call CORINFO_HELP_POLL_GC

EB90 jmp SHORT G_M000_IG12

; Total bytes of code 351

Here, again, we notice a few interesting things. First, in the header we see this:

; Tier-1 compilation

; OSR variant for entry point 0x23

; optimized code

so we know this is both optimized “tier-1” code and is the “OSR variant” for this method. Second, notice there’s no longer a call to the IsAsciiDigit helper. Instead, where that call would have been, we see this:

G_M000_IG06: ;; offset=0090H

0FB7CE movzx rcx, si

83C1D0 add ecx, -48

83F909 cmp ecx, 9

7702 ja SHORT G_M000_IG08

This is loading a value into rcx, subtracting 48 from it (48 is the decimal ASCII value of the '0' character) and comparing the resulting value to 9. Sounds an awful lot like our IsAsciiDigit implementation ((uint)(c - '0') <= 9), doesn’t it? That’s because it is. The helper was successfully inlined in this now-optimized code.
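If the single unsigned comparison in that helper looks too good to be true, it’s easy to convince yourself it’s equivalent to the usual two-comparison range check. The sketch below (the `RangeCheckDemo` class and the “naive” variant are mine, added purely for comparison) walks every possible char value and counts disagreements. It assumes the default unchecked arithmetic context, so a char below '0' wraps around to a very large uint rather than throwing.

```csharp
using System;

public static class RangeCheckDemo
{
    // The single-comparison form from the text.
    public static bool IsAsciiDigit(char c) => (uint)(c - '0') <= 9;

    // The conventional two-comparison form, for reference only.
    public static bool IsAsciiDigitNaive(char c) => c >= '0' && c <= '9';

    // Counts the char values on which the two forms disagree.
    public static int CountMismatches()
    {
        int mismatches = 0;
        for (int i = 0; i <= char.MaxValue; i++)
            if (IsAsciiDigit((char)i) != IsAsciiDigitNaive((char)i))
                mismatches++;
        return mismatches;
    }

    public static void Main()
    {
        char slash = '/';                          // the char just below '0'
        Console.WriteLine((uint)(slash - '0'));    // prints 4294967295, which is > 9
        Console.WriteLine(CountMismatches());      // prints 0
    }
}
```

The subtraction maps '0'..'9' onto 0..9 and everything else onto a value greater than 9 once reinterpreted as unsigned, which is why the optimized assembly needs only an add, a cmp, and a single ja.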

Great, so now in .NET 7, we can largely avoid the tradeoffs between startup and throughput, as OSR enables tiered compilation to apply to all methods, even those that are long-running. A multitude of PRs went into enabling this, including many over the last few years, but all of the functionality was disabled in the shipping bits. Thanks to improvements like dotnet/runtime#62831 which implemented support for OSR on Arm64 (previously only x64 support was implemented), and dotnet/runtime#63406 and dotnet/runtime#65609 which revised how OSR imports and epilogs are handled, dotnet/runtime#65675 enables OSR (and as a result DOTNET_TC_QuickJitForLoops) by default.
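For anyone who finds the assembly hard to follow, the patchpoint mechanics can be modelled in ordinary C#. To be clear, this is an analogy and not how the runtime does it: real OSR transfers the live frame at the machine level and jumps into the middle of the new code, whereas here I simply pass the loop’s locals to a second method. The `OsrModel` class and its members are hypothetical names; the 1,000 budget is the default cited above.

```csharp
using System;

public static class OsrModel
{
    public const int InitialCounter = 1_000; // default patchpoint budget cited in the text

    public static bool IsAsciiDigit(char c) => (uint)(c - '0') <= 9;

    // "Tier-0" body: counts ASCII digits below limit, paying for a patchpoint
    // check on every iteration. tier0Iterations reports how many iterations
    // completed here before control moved to the "OSR" version (if it did).
    public static int CountDigits(int limit, out int tier0Iterations)
    {
        int patchpointCounter = InitialCounter;
        int count = 0;
        for (int i = 0; i < limit; i++)
        {
            if (--patchpointCounter <= 0)
            {
                // Patchpoint: hand the live locals (i, count) to the optimized
                // continuation, which resumes the loop where this one stopped.
                tier0Iterations = i;
                return CountDigitsOsr(i, count, limit);
            }

            if (IsAsciiDigit((char)i)) count++;
        }

        tier0Iterations = limit;
        return count;
    }

    // "OSR variant": starts mid-loop from the captured state, with the helper
    // written inline to mirror the inlining seen in the optimized listing.
    private static int CountDigitsOsr(int i, int count, int limit)
    {
        for (; i < limit; i++)
            if ((uint)((char)i - '0') <= 9) count++;
        return count;
    }

    public static void Main()
    {
        int digits = CountDigits(char.MaxValue, out int tier0Iterations);
        Console.WriteLine($"digits={digits}, iterations run in tier-0={tier0Iterations}");
    }
}
```

In this model the first 999 iterations run in the slow body and the rest run in the fast one, yet the caller sees a single invocation with a single result, which is the essence of replacing a method while it’s “on the stack.”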

But, tiered compilation and OSR aren’t just about startup (though they’re of course very valuable there). They’re also about further improving throughput. Even though tiered compilation was originally envisioned as a way to optimize startup while not hurting throughput, it’s become much more than that. There are various things the JIT can learn about a method during tier-0 that it can then use for tier-1. For example, the very fact that the tier-0 code executed means that any statics accessed by the method will have been initialized, and that means that any readonly statics will not only have been initialized by the time the tier-1 code executes but their values won’t ever change. And that in turn means that any readonly statics of primitive types (e.g. bool, int, etc.) can be treated like consts instead of static readonly fields, and during tier-1 compilation the JIT can optimize them just as it would have optimized a const. For example, try running this simple program after setting DOTNET_JitDisasm to Program:Test:

    using System;
    using System.Runtime.CompilerServices;

    class Program
    {
        static readonly bool Is64Bit = Environment.Is64BitProcess;

        static int Main()
        {
            int count = 0;
            for (int i = 0; i < 1_000_000_000; i++)
                if (Test())
                    count++;
            return count;
        }

        // NoInlining keeps Test as its own method so its assembly is visible.
        [MethodImpl(MethodImplOptions.NoInlining)]
        static bool Test() => Is64Bit;
    }

When I do so, I get this output:

; Assembly listing for method Program:Test():bool

; Emitting BLENDED_CODE for X64 CPU with AVX - Windows

; Tier-0 compilation

; MinOpts code

; rbp based frame

; partially interruptible

G_M000_IG01: ;; offset=0000H

55 push rbp

4883EC20 sub rsp, 32

488D6C2420 lea rbp, [rsp+20H]

G_M000_IG02: ;; offset=000AH

48B9B8639A3FFC7F0000 mov rcx, 0x7FFC3F9A63B8

BA01000000 mov edx, 1

E8C220B25F call CORINFO_HELP_GETSHARED_NONGCSTATIC_BASE

0FB60545580C00 movzx rax, byte ptr [(reloc 0x7ffc3f9a63ea)]

G_M000_IG03: ;; offset=0025H

4883C420 add rsp, 32

5D pop rbp

C3 ret

; Total bytes of code 43

; Assembly listing for method Program:Test():bool

; Emitting BLENDED_CODE for X64 CPU with AVX - Windows

; Tier-1 compilation

; optimized code

; rsp based frame

; partially interruptible

; No PGO data

G_M000_IG01: ;; offset=0000H

G_M000_IG02: ;; offset=0000H

B801000000 mov eax, 1

G_M000_IG03: ;; offset=0005H

C3 ret

; Total bytes of code 6

Note, again, we see two outputs for Program:Test. First, we see the “Tier-0” code, which is accessing a static (note the call CORINFO_HELP_GETSHARED_NONGCSTATIC_BASE instruction). But then we see the “Tier-1” code, where all of that overhead has vanished and is instead replaced simply by mov eax, 1. Since the “Tier-0” code had to have executed in order for it to tier up, the “Tier-1” code was generated knowing that the value of the static readonly bool Is64Bit field was true (1), and so the entirety of this method is storing the value 1 into the eax register used for the return value.
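The same shape works for your own code. Here’s a minimal sketch, assuming a made-up MYAPP_VERBOSE environment variable and an invented `AppFlags` class: the flag is read once into a static readonly bool, and hot paths branch on it. Whether the branch actually disappears depends on the method reaching tier-1 on a runtime that performs the optimization described above, so treat that as something to verify with DOTNET_JitDisasm rather than a guarantee.

```csharp
using System;

public static class AppFlags
{
    // Hypothetical switch; read exactly once, when the type is initialized.
    private static readonly bool s_verbose =
        Environment.GetEnvironmentVariable("MYAPP_VERBOSE") == "1";

    public static int Sum(int[] values)
    {
        int sum = 0;
        foreach (int v in values)
        {
            // Candidate for being folded to "if (false)" in optimized code
            // when the flag was initialized to false.
            if (s_verbose)
                Console.WriteLine($"adding {v}");
            sum += v;
        }
        return sum;
    }

    public static void Main()
    {
        Console.WriteLine(Sum(new[] { 1, 2, 3 }));
    }
}
```

A const would fold too, of course, but it would have to be known at compile time; the static readonly form lets the value be decided at startup and still be treated as a constant afterwards.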

This is so useful that components are now written with tiering in mind. Consider the new Regex source generator, which is discussed later in this post. (Roslyn source generators were introduced a couple of years ago; just as Roslyn analyzers are able to plug into the compiler and surface additional diagnostics based on all of the data the compiler learns from the source code, Roslyn source generators are able to analyze that same data and then further augment the compilation unit with additional source.) The Regex source generator applies a technique based on this in dotnet/runtime#67775. Regex supports setting a process-wide timeout that gets applied to Regex instances that don’t explicitly set a timeout. That means, even though it’s super rare for such a process-wide timeout to be set, the Regex source generator still needs to output timeout-related code just in case it’s needed. It does so by outputting some helpers like this:

static class Utilities
{
    internal static readonly TimeSpan s_defaultTimeout = AppContext.GetData("REGEX_DEFAULT_MATCH_TIMEOUT") is TimeSpan timeout ? timeout : Timeout.InfiniteTimeSpan;
    internal static readonly bool s_hasTimeout = s_defaultTimeout != Timeout.InfiniteTimeSpan;
}

which it then uses at call sites like this:

if (Utilities.s_hasTimeout)
{
    base.CheckTimeout();
}

In tier-0, these checks will still be emitted in the assembly code, but in tier-1 where throughput matters, if the relevant AppContext switch hasn’t been set, then s_defaultTimeout will be Timeout.InfiniteTimeSpan, at which point s_hasTimeout will be false. And since s_hasTimeout is a static readonly bool, the JIT will be able to treat that as a const, and all conditions like if (Utilities.s_hasTimeout) will be treated equal to if (false) and be eliminated from the assembly code entirely as dead code.
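For context, an app opts into that process-wide timeout by storing a TimeSpan under the same REGEX_DEFAULT_MATCH_TIMEOUT key before any Regex is used. The following is a minimal sketch rather than anything taken from the source generator: the RegexTimeoutDemo class and its ConfigureAndRead method are hypothetical names used only for illustration, and the two-second value is arbitrary.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper, for illustration only.
static class RegexTimeoutDemo
{
    public static TimeSpan ConfigureAndRead()
    {
        // This needs to run before the first use of Regex in the process,
        // because the default timeout is read once and then cached.
        AppDomain.CurrentDomain.SetData("REGEX_DEFAULT_MATCH_TIMEOUT", TimeSpan.FromSeconds(2));

        // No timeout is passed here, so the process-wide default applies.
        var regex = new Regex("a+b");
        return regex.MatchTimeout;
    }
}
```

In an app that never does this, s_hasTimeout in the generated helpers stays false, which is the case the tier-1 code gets to optimize for.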

But, this is somewhat old news. The JIT has been able to do such an optimization since tiered compilation was introduced in .NET Core 3.0. Now in .NET 7, though, with OSR it’s also able to do so by default for methods with loops (and thus enable cases like the regex one). However, the real magic of OSR comes into play when combined with another exciting feature: dynamic PGO.

PGO

I wrote about profile-guided optimization (PGO) in my Performance Improvements in .NET 6 post, but I’ll cover it again here as it’s seen a multitude of improvements for .NET 7.

PGO has been around for a long time, in any number of languages and compilers. The basic idea is you compile your app, asking the compiler to inject instrumentation into the application to track various pieces of interesting information. You then put your app through its paces, running through various common scenarios, causing that instrumentation to “profile” what happens when the app is executed, and the results of that are then saved out. The app is then recompiled, feeding those instrumentation results back into the compiler, and allowing it to optimize the app for exactly how it’s expected to be used. This approach to PGO is referred to as “static PGO,” as the information is all gleaned ahead of actual deployment, and it’s something .NET has been doing in various forms for years. From my perspective, though, the really interesting development in .NET is “dynamic PGO,” which was introduced in .NET 6, but off by default.

Dynamic PGO takes advantage of tiered compilation. I noted that the JIT instruments the tier-0 code to track how many times the method is called, or in the case of loops, how many times the loop executes. It can instrument it for other things as well. For example, it can track exactly which concrete types are used as the target of an interface dispatch, and then in tier-1 specialize the code to expect the most common types (this is referred to as “guarded devirtualization,” or GDV). You can see this in this little example. Set the DOTNET_TieredPGO environment variable to 1, and then run this on .NET 7:

class Program
{
    static void Main()
    {
        IPrinter printer = new Printer();
        for (int i = 0; ; i++)
        {
            DoWork(printer, i);
        }
    }

    static void DoWork(IPrinter printer, int i)
    {
        printer.PrintIfTrue(i == int.MaxValue);
    }

    interface IPrinter
    {
        void PrintIfTrue(bool condition);
    }

    class Printer : IPrinter
    {
        public void PrintIfTrue(bool condition)
        {
            if (condition) Console.WriteLine("Print!");
        }
    }
}

The tier-0 code for DoWork ends up looking like this:

G_M000_IG01:                ;; offset=0000H
       55                   push     rbp
       4883EC30             sub      rsp, 48
       488D6C2430           lea      rbp, [rsp+30H]
       33C0                 xor      eax, eax
       488945F8             mov      qword ptr [rbp-08H], rax
       488945F0             mov      qword ptr [rbp-10H], rax
       48894D10             mov      gword ptr [rbp+10H], rcx
       895518               mov      dword ptr [rbp+18H], edx

G_M000_IG02:                ;; offset=001BH
       FF059F220F00         inc      dword ptr [(reloc 0x7ffc3f1b2ea0)]
       488B4D10             mov      rcx, gword ptr [rbp+10H]
       48894DF8             mov      gword ptr [rbp-08H], rcx
       488B4DF8             mov      rcx, gword ptr [rbp-08H]
       48BAA82E1B3FFC7F0000 mov      rdx, 0x7FFC3F1B2EA8
       E8B47EC55F           call     CORINFO_HELP_CLASSPROFILE32
       488B4DF8             mov      rcx, gword ptr [rbp-08H]
       48894DF0             mov      gword ptr [rbp-10H], rcx
       488B4DF0             mov      rcx, gword ptr [rbp-10H]
       33D2                 xor      edx, edx
       817D18FFFFFF7F       cmp      dword ptr [rbp+18H], 0x7FFFFFFF
       0F94C2               sete     dl
       49BB0800F13EFC7F0000 mov      r11, 0x7FFC3EF10008
       41FF13               call     [r11]IPrinter:PrintIfTrue(bool):this
       90                   nop

G_M000_IG03:                ;; offset=0062H
       4883C430             add      rsp, 48
       5D                   pop      rbp
       C3                   ret

and most notably, you can see the call [r11]IPrinter:PrintIfTrue(bool):this doing the interface dispatch. But, then look at the code generated for tier-1. We still see the call [r11]IPrinter:PrintIfTrue(bool):this, but we also see this:

G_M000_IG02:                ;; offset=0020H
       48B9982D1B3FFC7F0000 mov      rcx, 0x7FFC3F1B2D98
       48390F               cmp      qword ptr [rdi], rcx
       7521                 jne      SHORT G_M000_IG05
       81FEFFFFFF7F         cmp      esi, 0x7FFFFFFF
       7404                 je       SHORT G_M000_IG04

G_M000_IG03:                ;; offset=0037H
       FFC6                 inc      esi
       EBE5                 jmp      SHORT G_M000_IG02

G_M000_IG04:                ;; offset=003BH
       48B9D820801A24020000 mov      rcx, 0x2241A8020D8
       488B09               mov      rcx, gword ptr [rcx]
       FF1572CD0D00         call     [Console:WriteLine(String)]
       EBE7                 jmp      SHORT G_M000_IG03

That first block is checking the concrete type of the IPrinter (stored in rdi) and comparing it against the known type for Printer (0x7FFC3F1B2D98). If they’re different, it just jumps to the same interface dispatch it was doing in the unoptimized version. But if they’re the same, it then jumps directly to an inlined version of Printer.PrintIfTrue (you can see the call to Console:WriteLine right there in this method). Thus, the common case (the only case in this example) is super efficient at the expense of a single comparison and branch.
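Expressed back in C#, the shape of that tier-1 code is roughly the following. This is only a hand-written sketch of the idea, not what the JIT literally produces: the real guard compares the object's type handle directly rather than calling GetType(), and the CountingPrinter type and the bool return value are hypothetical additions that exist only to make the two paths observable.

```csharp
using System;

interface IPrinter
{
    void PrintIfTrue(bool condition);
}

sealed class Printer : IPrinter
{
    public void PrintIfTrue(bool condition)
    {
        if (condition) Console.WriteLine("Print!");
    }
}

// Hypothetical second implementation, present only to exercise the fallback path.
sealed class CountingPrinter : IPrinter
{
    public int Calls;
    public void PrintIfTrue(bool condition) => Calls++;
}

static class GuardedDevirtualizationSketch
{
    // Returns true when the guarded fast path was taken (illustration only).
    public static bool DoWork(IPrinter printer, int i)
    {
        // Guard: is this the type the profile said was most common?
        if (printer.GetType() == typeof(Printer))
        {
            // Fast path: the body of Printer.PrintIfTrue, inlined by hand.
            if (i == int.MaxValue) Console.WriteLine("Print!");
            return true;
        }

        // Slow path: the ordinary interface dispatch.
        printer.PrintIfTrue(i == int.MaxValue);
        return false;
    }
}
```

Passing a Printer takes the guarded path with no interface call at all, while any other IPrinter still behaves correctly through the normal dispatch.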

That all existed in .NET 6, so why are we talking about it now? Several things have improved. First, PGO now works with OSR, thanks to improvements like dotnet/runtime#61453. That’s a big deal, as it means hot long-running methods that do this kind of interface dispatch (which are fairly common) can get these kinds of devirtualization/inlining optimizations. Second, while PGO isn’t currently enabled by default, we’ve made it much easier to turn on. Between dotnet/runtime#71438 and dotnet/sdk#26350, it’s now possible to simply put <TieredPGO>true</TieredPGO> into your .csproj, and it’ll have the same effect as if you set DOTNET_TieredPGO=1 prior to every invocation of the app, enabling dynamic PGO (note that it doesn’t disable use of R2R images, so if you want the entirety of the core libraries also employing dynamic PGO, you’ll also need to set DOTNET_ReadyToRun=0). Third, dynamic PGO has been taught how to instrument and optimize additional things.
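As a sketch, the relevant part of a project file might look like the following. The surrounding Project element and the OutputType and TargetFramework values are just typical console-app defaults assumed for illustration; only the TieredPGO line comes from the text above.

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <OutputType>Exe</OutputType>
    <TargetFramework>net7.0</TargetFramework>
    <!-- Same effect as setting DOTNET_TieredPGO=1 before each run of the app -->
    <TieredPGO>true</TieredPGO>
  </PropertyGroup>

</Project>
```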

PGO already knew how to instrument virtual dispatch. Now in .NET 7, thanks in large part to dotnet/runtime#68703, it can do so for delegates as well (at least for delegates to instance methods). Consider this simple console app:

using System.Runtime.CompilerServices;

class Program
{
    static int[] s_values = Enumerable.Range(0, 1_000).ToArray();

    static void Main()
    {
        for (int i = 0; i < 1_000_000; i++)
            Sum(s_values, i => i * 42);
    }

    [MethodImpl(MethodImplOptions.NoInlining)]
    static int Sum(int[] values, Func<int, int> func)
    {
        int sum = 0;
        foreach (int value in values)
            sum += func(value);
        return sum;
    }
}

Without PGO enabled, I get generated optimized assembly like this:

; Assembly listing for method Program:Sum(ref,Func`2):int
; Emitting BLENDED_CODE for X64 CPU with AVX - Windows
; Tier-1 compilation
; optimized code
; rsp based frame
; partially interruptible
; No PGO data

G_M000_IG01:                ;; offset=0000H
       4156                 push     r14
       57                   push     rdi
       56                   push     rsi
       55                   push     rbp
       53                   push     rbx
       4883EC20             sub      rsp, 32
       488BF2               mov      rsi, rdx

G_M000_IG02:                ;; offset=000DH
       33FF                 xor      edi, edi
       488BD9               mov      rbx, rcx
       33ED                 xor      ebp, ebp
       448B7308             mov      r14d, dword ptr [rbx+08H]
       4585F6               test     r14d, r14d
       7E16                 jle      SHORT G_M000_IG04

G_M000_IG03:                ;; offset=001DH
       8BD5                 mov      edx, ebp
       8B549310             mov      edx, dword ptr [rbx+4*rdx+10H]
       488B4E08             mov      rcx, gword ptr [rsi+08H]
       FF5618               call     [rsi+18H]Func`2:Invoke(int):int:this
       03F8                 add      edi, eax
       FFC5                 inc      ebp
       443BF5               cmp      r14d, ebp
       7FEA                 jg       SHORT G_M000_IG03

G_M000_IG04:                ;; offset=0033H
       8BC7                 mov      eax, edi

G_M000_IG05:                ;; offset=0035H
       4883C420             add      rsp, 32
       5B                   pop      rbx
       5D                   pop      rbp
       5E                   pop      rsi
       5F                   pop      rdi
       415E                 pop      r14
       C3                   ret

; Total bytes of code 64

Note the call [rsi+18H]Func`2:Invoke(int):int:this in there that’s invoking the delegate. Now with PGO enabled:

; Assembly listing for method Program:Sum(ref,Func`2):int
; Emitting BLENDED_CODE for X64 CPU with AVX - Windows
; Tier-1 compilation
; optimized code
; optimized using profile data
; rsp based frame
; fully interruptible
; with Dynamic PGO: edge weights are valid, and fgCalledCount is 5628
; 0 inlinees with PGO data; 1 single block inlinees; 0 inlinees without PGO data

G_M000_IG01:                ;; offset=0000H
       4157                 push     r15
       4156                 push     r14
       57                   push     rdi
       56                   push     rsi
       55                   push     rbp
       53                   push     rbx
       4883EC28             sub      rsp, 40
       488BF2               mov      rsi, rdx

G_M000_IG02:                ;; offset=000FH
       33FF                 xor      edi, edi
       488BD9               mov      rbx, rcx
       33ED                 xor      ebp, ebp
       448B7308             mov      r14d, dword ptr [rbx+08H]
       4585F6               test     r14d, r14d
       7E27                 jle      SHORT G_M000_IG05

G_M000_IG03:                ;; offset=001FH
       8BC5                 mov      eax, ebp
       8B548310             mov      edx, dword ptr [rbx+4*rax+10H]
       4C8B4618             mov      r8, qword ptr [rsi+18H]
       48B8A0C2CF3CFC7F0000 mov      rax, 0x7FFC3CCFC2A0
       4C3BC0               cmp      r8, rax
       751D                 jne      SHORT G_M000_IG07
       446BFA2A             imul     r15d, edx, 42

G_M000_IG04:                ;; offset=003CH
       4103FF               add      edi, r15d
       FFC5                 inc      ebp
       443BF5               cmp      r14d, ebp
       7FD9                 jg       SHORT G_M000_IG03

G_M000_IG05:                ;; offset=0046H
       8BC7                 mov      eax, edi

G_M000_IG06:                ;; offset=0048H
       4883C428             add      rsp, 40
       5B                   pop      rbx
       5D                   pop      rbp
       5E                   pop      rsi
       5F                   pop      rdi
       415E                 pop      r14
       415F                 pop      r15
       C3                   ret

G_M000_IG07:                ;; offset=0055H
       488B4E08             mov      rcx, gword ptr [rsi+08H]
       41FFD0               call     r8
       448BF8               mov      r15d, eax
       EBDB                 jmp      SHORT G_M000_IG04

I chose the 42 constant in i => i * 42 to make it easy to see in the assembly, and sure enough, there it is:

G_M000_IG03:                ;; offset=001FH
       8BC5                 mov      eax, ebp
       8B548310             mov      edx, dword ptr [rbx+4*rax+10H]
       4C8B4618             mov      r8, qword ptr [rsi+18H]
       48B8A0C2CF3CFC7F0000 mov      rax, 0x7FFC3CCFC2A0
       4C3BC0               cmp      r8, rax
       751D                 jne      SHORT G_M000_IG07