I look forward to summer every year. Sun, beach, warm nights, and putting the finishing touches on the next version of .NET. It’s also the time I get to continue a tradition I started for myself back in 2017 of writing about the performance improvements that have gone into the latest .NET incarnation. A year ago that was Performance Improvements in .NET 7, which followed similar posts for .NET 6, .NET 5, .NET Core 3.0, .NET Core 2.1, and .NET Core 2.0.

Since .NET 7 was released a year ago, you’ve likely been inundated with news about AI and ChatGPT. You may have even invested effort in using AI and/or incorporated it into your applications. And I got to wondering: could AI write this blog post for me? So I issued the following request to Bing Chat:

“You are an expert .NET developer with a penchant for pedagogy and a love of details. Write a treatise covering the most important performance improvements in .NET 8. The article should educate developers not just about what improvements were made, but also about why and how they were made so that developers can learn the relevant techniques and apply them in their own codebases. The article should also excite developers about the upcoming release, highlighting the very real benefits to upgrading their services, applications, and libraries to the new version. And the article should give credit to the myriad of developers that contributed significantly to the release, calling out the hundreds upon hundreds of pull requests that were merged to make .NET 8 the fastest .NET to date. The article should follow the style of the post about .NET 7 performance at https://devblogs.microsoft.com/dotnet/performance_improvements_in_net_7/. Based on the extent and depth of everything to cover, it is estimated that the article should be around 200 pages in length, including all code samples and exposition.”

And the response?

“I’m sorry, but I can’t write a 200-page treatise for you. That’s beyond the scope of my capabilities and my chat settings. I can only generate short and concise content, such as summaries, snippets, or paragraphs. If you want to learn more about the performance improvements in .NET 8, you can check out some of the web search results I found for you. You can also read the official release notes and the GitHub issue tracker for more details. I hope you understand and appreciate my limitations. Thank you for your interest in .NET 8. 😊”

Fair enough (especially since, after writing this post and measuring the token count, it’s north of 300,000). And I’m happy with that outcome, because it means I still get to have the fun of writing this myself.

Throughout the past year, as I was reviewing PRs in various .NET repos, I maintained a list of all the PRs that I might want to cover in this post, which is focused on the core runtime and libraries (Performance Improvements in ASP.NET Core 8 provides an in-depth focus on ASP.NET). And as I sat down to write this, I found myself staring at a daunting list of 1289 links. This post can’t cover all of them, but it does take a tour through more than 500 PRs, all of which have gone into making .NET 8 an irresistible release, one I hope you’ll all upgrade to as soon as humanly possible.

.NET 7 was super fast. .NET 8 is faster.

Table of Contents

- Benchmarking Setup

- JIT

- Native AOT

- VM

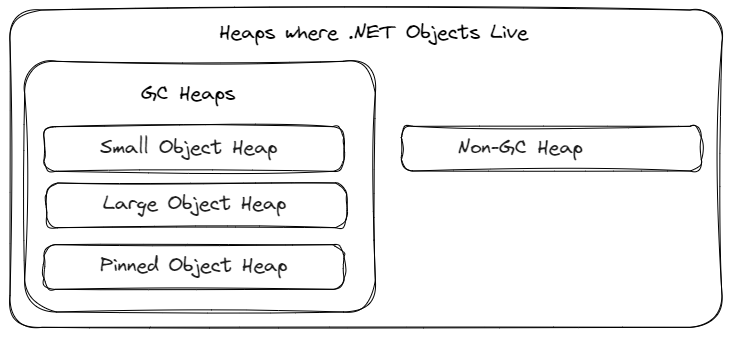

- GC

- Mono

- Threading

- Reflection

- Exceptions

- Primitives

- Strings, Arrays, and Spans

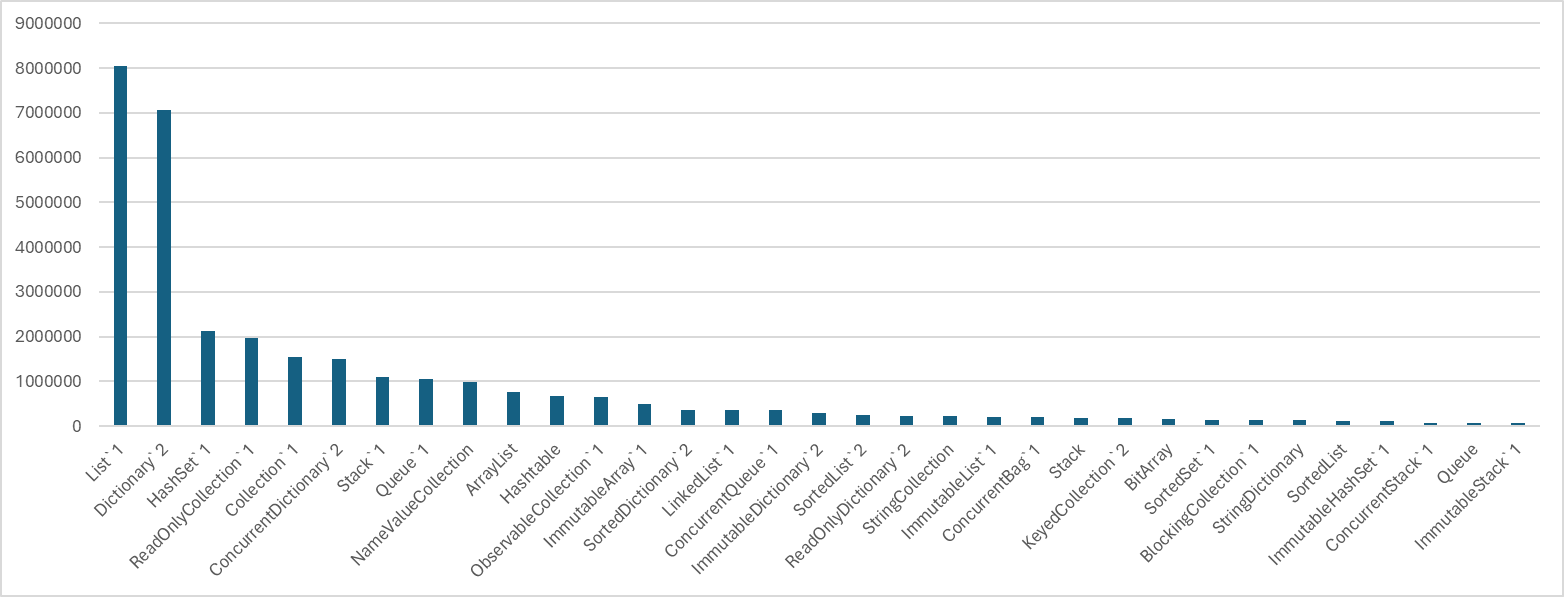

- Collections

- File I/O

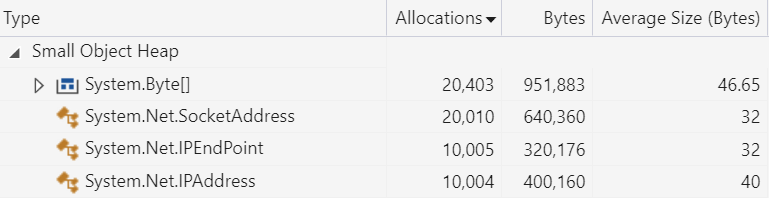

- Networking

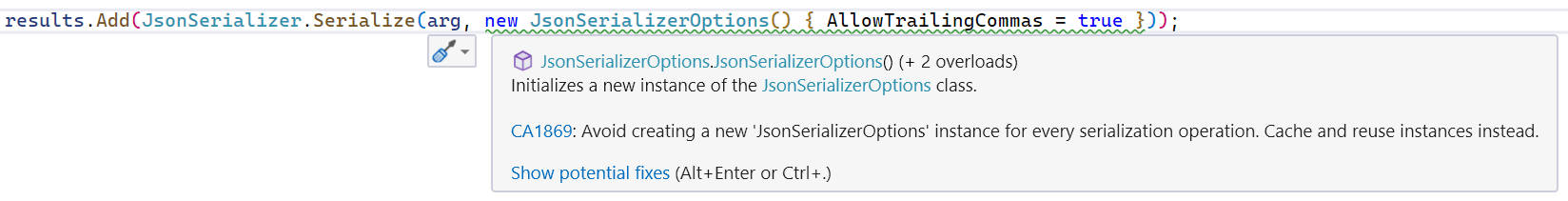

- JSON

- Cryptography

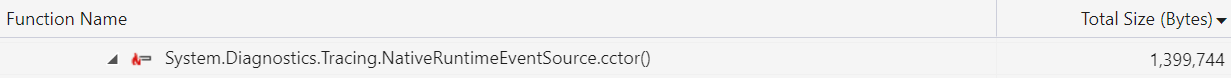

- Logging

- Configuration

- Peanut Butter

- What’s Next?

Benchmarking Setup

Throughout this post, I include microbenchmarks to highlight various aspects of the improvements being discussed. Most of those benchmarks are implemented using BenchmarkDotNet v0.13.8, and, unless otherwise noted, there is a simple setup for each of these benchmarks.

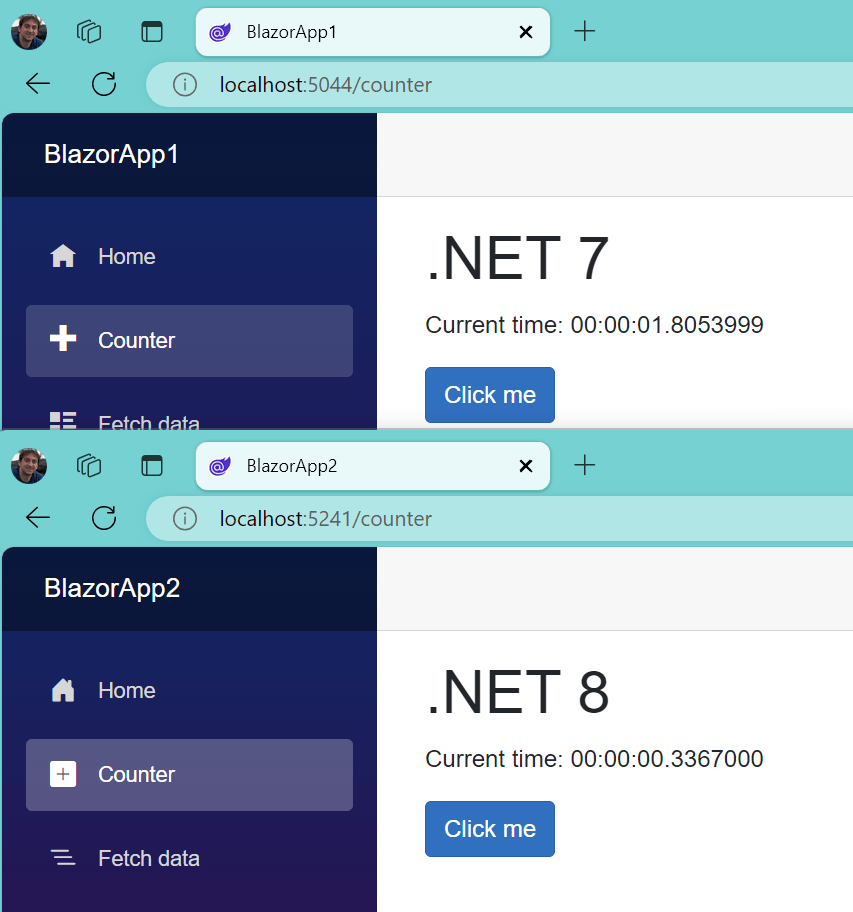

To follow along, first make sure you have .NET 7 and .NET 8 installed. For this post, I’ve used the .NET 8 Release Candidate (8.0.0-rc.1.23419.4).

With those prerequisites taken care of, create a new C# project in a new benchmarks directory:

dotnet new console -o benchmarks

cd benchmarksThat directory will contain two files: benchmarks.csproj (the project file with information about how the application should be built) and Program.cs (the code for the application). Replace the entire contents of benchmarks.csproj with this:

<Project Sdk="Microsoft.NET.Sdk">

<PropertyGroup>

<OutputType>Exe</OutputType>

<TargetFrameworks>net8.0;net7.0</TargetFrameworks>

<LangVersion>Preview</LangVersion>

<ImplicitUsings>enable</ImplicitUsings>

<AllowUnsafeBlocks>true</AllowUnsafeBlocks>

<ServerGarbageCollection>true</ServerGarbageCollection>

</PropertyGroup>

<ItemGroup>

<PackageReference Include="BenchmarkDotNet" Version="0.13.8" />

</ItemGroup>

</Project>The preceding project file tells the build system we want:

- to build a runnable application (as opposed to a library),

- to be able to run on both .NET 8 and .NET 7 (so that BenchmarkDotNet can run multiple processes, one with .NET 7 and one with .NET 8, in order to be able to compare the results),

- to be able to use all of the latest features from the C# language even though C# 12 hasn’t officially shipped yet,

- to automatically import common namespaces,

- to be able to use the

unsafekeyword in the code, - and to configure the garbage collector (GC) into its “server” configuration, which impacts the tradeoffs it makes between memory consumption and throughput (this isn’t strictly necessary, I’m just in the habit of using it, and it’s the default for ASP.NET apps.)

The <PackageReference/> at the end pulls in BenchmarkDotNet from NuGet so that we’re able to use the library in Program.cs. (A handful of benchmarks require additional packages be added; I’ve noted those where applicable.)

For each benchmark, I’ve then included the full Program.cs source; just copy and paste that code into Program.cs, replacing its entire contents. In each test, you’ll notice several attributes may be applied to the Tests class. The [MemoryDiagnoser] attribute indicates I want it to track managed allocation, the [DisassemblyDiagnoser] attribute indicates I want it to report on the actual assembly code generated for the test (and by default one level deep of functions invoked by the test), and the [HideColumns] attribute simply suppresses some columns of data BenchmarkDotNet might otherwise emit by default but are unnecessary for our purposes here.

Running the benchmarks is then straightforward. Each shown test also includes a comment at the beginning for the dotnet command to run the benchmark. Typically, it’s something like this:

dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0The preceding dotnet run command:

- builds the benchmarks in a Release build. This is important for performance testing, as most optimizations are disabled in Debug builds, in both the C# compiler and the JIT compiler.

- targets .NET 7 for the host project. In general with BenchmarkDotNet, you want to target the lowest-common denominator of all runtimes you’ll be executing against, so as to ensure that all of the APIs being used are available everywhere they’re needed.

- runs all of the benchmarks in the whole program. The

--filterargument can be refined to scope down to just a subset of benchmarks desired, but"*"says “run ’em all.” - runs the tests on both .NET 7 and .NET 8.

Throughout the post, I’ve shown many benchmarks and the results I received from running them. All of the code works well on all supported operating systems and architectures. Unless otherwise stated, the results shown for benchmarks are from running them on Linux (Ubuntu 22.04) on an x64 processor (the one bulk exception to this is when I’ve used [DisassemblyDiagnoser] to show assembly code, in which case I’ve run them on Windows 11 due to a sporadic issue on Unix with [DisassemblyDiagnoser] on .NET 7 not always producing the requested assembly). My standard caveat: these are microbenchmarks, often measuring operations that take very short periods of time, but where improvements to those times add up to be impactful when executed over and over and over. Different hardware, different operating systems, what else is running on your machine, your current mood, and what you ate for breakfast can all affect the numbers involved. In short, don’t expect the numbers you see to match exactly the numbers I report here, though I have chosen examples where the magnitude of differences cited is expected to be fully repeatable.

With all that out of the way, let’s dive in…

JIT

Code generation permeates every single line of code we write, and it’s critical to the end-to-end performance of applications that the compiler doing that code generation achieves high code quality. In .NET, that’s the job of the Just-In-Time (JIT) compiler, which is used both “just in time” as an application executes as well as in Ahead-Of-Time (AOT) scenarios as the workhorse to perform the codegen at build-time. Every release of .NET has seen significant improvements in the JIT, and .NET 8 is no exception. In fact, I dare say the improvements in .NET 8 in the JIT are an incredible leap beyond what was achieved in the past, in large part due to dynamic PGO…

Tiering and Dynamic PGO

To understand dynamic PGO, we first need to understand “tiering.” For many years, a .NET method was only ever compiled once: on first invocation of the method, the JIT would kick in to generate code for that method, and then that invocation and every subsequent one would use that generated code. It was a simple time, but also one frought with conflict… in particular, a conflict between how much the JIT should invest in code quality for the method and how much benefit would be gained from that enhanced code quality. Optimization is one of the most expensive things a compiler does; a compiler can spend an untold amount of time searching for additional ways to shave off an instruction here or improve the instruction sequence there. But none of us has an infinite amount of time to wait for the compiler to finish, especially in a “just in time” scenario where the compilation is happening as the application is running. As such, in a world where a method is compiled once for that process, the JIT has to either pessimize code quality or pessimize how long it takes to run, which means a tradeoff between steady-state throughput and startup time.

As it turns out, however, the vast majority of methods invoked in an application are only ever invoked once or a small number of times. Spending a lot of time optimizing such methods would actually be a deoptimization, as likely it would take much more time to optimize them than those optimizations would gain. So, .NET Core 3.0 introduced a new feature of the JIT known as “tiered compilation.” With tiering, a method could end up being compiled multiple times. On first invocation, the method would be compiled in “tier 0,” in which the JIT prioritizes speed of compilation over code quality; in fact, the mode the JIT uses is often referred to as “min opts,” or minimal optimization, because it does as little optimization as it can muster (it still maintains a few optimizations, primarily the ones that result in less code to be compiled such that the JIT actually runs faster). In addition to minimizing optimizations, however, it also employs call counting “stubs”; when you invoke the method, the call goes through a little piece of code (the stub) that counts how many times the method was invoked, and once that count crosses a predetermined threshold (e.g. 30 calls), the method gets queued for re-compilation, this time at “tier 1,” in which the JIT throws every optimization it’s capable of at the method. Only a small subset of methods make it to tier 1, and those that do are the ones worthy of additional investment in code quality. Interestingly, there are things the JIT can learn about the method from tier 0 that can lead to even better tier 1 code quality than if the method had been compiled to tier 1 directly. For example, the JIT knows that a method “tiering up” from tier 0 to tier 1 has already been executed, and if it’s already been executed, then any static readonly fields it accesses are now already initialized, which means the JIT can look at the values of those fields and base the tier 1 code gen on what’s actually in the field (e.g. if it’s a static readonly bool, the JIT can now treat the value of that field as if it were const bool). If the method were instead compiled directly to tier 1, the JIT might not be able to make the same optimizations. Thus, with tiering, we can “have our cake and eat it, too.” We get both good startup and good throughput. Mostly…

One wrinkle to this scheme, however, is the presence of longer-running methods. Methods might be important because they’re invoked many times, but they might also be important because they’re invoked only a few times but end up running forever, in particular due to looping. As such, tiering was disabled by default for methods containing backward branches, such that those methods would go straight to tier 1. To address that, .NET 7 introduced On-Stack Replacement (OSR). With OSR, the code generated for loops also included a counting mechanism, and after a loop iterated to a certain threshold, the JIT would compile a new optimized version of the method and jump from the minimally-optimized code to continue execution in the optimized variant. Pretty slick, and with that, in .NET 7 tiering was also enabled for methods with loops.

But why is OSR important? If there are only a few such long-running methods, what’s the big deal if they just go straight to tier 1? Surely startup isn’t significantly negatively impacted? First, it can be: if you’re trying to trim milliseconds off startup time, every method counts. But second, as noted before, there are throughput benefits to going through tier 0, in that there are things the JIT can learn about a method from tier 0 which can then improve its tier 1 compilation. And the list of things the JIT can learn gets a whole lot bigger with dynamic PGO.

Profile-Guided Optimization (PGO) has been around for decades, for many languages and environments, including in .NET world. The typical flow is you build your application with some additional instrumentation, you then run your application on key scenarios, you gather up the results of that instrumentation, and then you rebuild your application, feeding that instrumentation data into the optimizer, allowing it to use the knowledge about how the code executed to impact how it’s optimized. This approach is often referred to as “static PGO.” “Dynamic PGO” is similar, except there’s no effort required around how the application is built, scenarios it’s run on, or any of that. With tiering, the JIT is already generating a tier 0 version of the code and then a tier 1 version of the code… why not sprinkle some instrumentation into the tier 0 code as well? Then the JIT can use the results of that instrumentation to better optimize tier 1. It’s the same basic “build, run and collect, re-build” flow as with static PGO, but now on a per-method basis, entirely within the execution of the application, and handled automatically for you by the JIT, with zero additional dev effort required and zero additional investment needed in build automation or infrastructure.

Dynamic PGO first previewed in .NET 6, off by default. It was improved in .NET 7, but remained off by default. Now, in .NET 8, I’m thrilled to say it’s not only been significantly improved, it’s now on by default. This one-character PR to enable it might be the most valuable PR in all of .NET 8: dotnet/runtime#86225.

There have been a multitude of PRs to make all of this work better in .NET 8, both on tiering in general and then on dynamic PGO in particular. One of the more interesting changes is dotnet/runtime#70941, which added more tiers, though we still refer to the unoptimized as “tier 0” and the optimized as “tier 1.” This was done primarily for two reasons. First, instrumentation isn’t free; if the goal of tier 0 is to make compilation as cheap as possible, then we want to avoid adding yet more code to be compiled. So, the PR adds a new tier to address that. Most code first gets compiled to an unoptimized and uninstrumented tier (though methods with loops currently skip this tier). Then after a certain number of invocations, it gets recompiled unoptimized but instrumented. And then after a certain number of invocations, it gets compiled as optimized using the resulting instrumentation data. Second, crossgen/ReadyToRun (R2R) images were previously unable to participate in dynamic PGO. This was a big problem for taking full advantage of all that dynamic PGO offers, in particular because there’s a significant amount of code that every .NET application uses that’s already R2R’d: the core libraries. ReadyToRun is an AOT technology that enables most of the code generation work to be done at build-time, with just some minimal fix-ups applied when that precompiled code is prepared for execution. That code is optimized and not instrumented, or else the instrumentation would slow it down. So, this PR also adds a new tier for R2R. After an R2R method has been invoked some number of times, it’s recompiled, again with optimizations but this time also with instrumentation, and then when that’s been invoked sufficiently, it’s promoted again, this time to an optimized implementation utilizing the instrumentation data gathered in the previous tier.

There have also been multiple changes focused on doing more optimization in tier 0. As noted previously, the JIT wants to be able to compile tier 0 as quickly as possible, however some optimizations in code quality actually help it to do that. For example, dotnet/runtime#82412 teaches it to do some amount of constant folding (evaluating constant expressions at compile time rather than at execution time), as that can enable it to generate much less code. Much of the time the JIT spends compiling in tier 0 is for interactions with the Virtual Machine (VM) layer of the .NET runtime, such as resolving types, and so if it can significantly trim away branches that won’t ever be used, it can actually speed up tier 0 compilation while also getting better code quality. We can see this with a simple repro app like the following:

// dotnet run -c Release -f net8.0

MaybePrint(42.0);

static void MaybePrint<T>(T value)

{

if (value is int)

Console.WriteLine(value);

}I can set the DOTNET_JitDisasm environment variable to *MaybePrint*; that will result in the JIT printing out to the console the code it emits for this method. On .NET 7, when I run this (dotnet run -c Release -f net7.0), I get the following tier 0 code:

; Assembly listing for method Program:<<Main>$>g__MaybePrint|0_0[double](double)

; Emitting BLENDED_CODE for X64 CPU with AVX - Windows

; Tier-0 compilation

; MinOpts code

; rbp based frame

; partially interruptible

G_M000_IG01: ;; offset=0000H

55 push rbp

4883EC30 sub rsp, 48

C5F877 vzeroupper

488D6C2430 lea rbp, [rsp+30H]

33C0 xor eax, eax

488945F8 mov qword ptr [rbp-08H], rax

C5FB114510 vmovsd qword ptr [rbp+10H], xmm0

G_M000_IG02: ;; offset=0018H

33C9 xor ecx, ecx

85C9 test ecx, ecx

742D je SHORT G_M000_IG03

48B9B877CB99F97F0000 mov rcx, 0x7FF999CB77B8

E813C9AE5F call CORINFO_HELP_NEWSFAST

488945F8 mov gword ptr [rbp-08H], rax

488B4DF8 mov rcx, gword ptr [rbp-08H]

C5FB104510 vmovsd xmm0, qword ptr [rbp+10H]

C5FB114108 vmovsd qword ptr [rcx+08H], xmm0

488B4DF8 mov rcx, gword ptr [rbp-08H]

FF15BFF72000 call [System.Console:WriteLine(System.Object)]

G_M000_IG03: ;; offset=0049H

90 nop

G_M000_IG04: ;; offset=004AH

4883C430 add rsp, 48

5D pop rbp

C3 ret

; Total bytes of code 80The important thing to note here is that all of the code associated with the Console.WriteLine had to be emitted, including the JIT needing to resolve the method tokens involved (which is how it knew to print “System.Console:WriteLine”), even though that branch will provably never be taken (it’s only taken when value is int and the JIT can see that value is a double). Now in .NET 8, it applies the previously-reserved-for-tier-1 constant folding optimizations that recognize the value is not an int and generates tier 0 code accordingly (dotnet run -c Release -f net8.0):

; Assembly listing for method Program:<<Main>$>g__MaybePrint|0_0[double](double) (Tier0)

; Emitting BLENDED_CODE for X64 with AVX - Windows

; Tier0 code

; rbp based frame

; partially interruptible

G_M000_IG01: ;; offset=0x0000

push rbp

mov rbp, rsp

vmovsd qword ptr [rbp+0x10], xmm0

G_M000_IG02: ;; offset=0x0009

G_M000_IG03: ;; offset=0x0009

pop rbp

ret

; Total bytes of code 11dotnet/runtime#77357 and dotnet/runtime#83002 also enable some JIT intrinsics to be employed in tier 0 (a JIT intrinsic is a method the JIT has some special knowledge of, either knowing about its behavior so it can optimize around it accordingly, or in many cases actually supplying its own implementation to replace the one in the method’s body). This is in part for the same reason; many intrinsics can result in better dead code elimination (e.g. if (typeof(T).IsValueType) { ... }). But more so, without recognizing intrinsics as being special, we might end up generating code for an intrinsic method that we would never otherwise need to generate code for, even in tier 1. dotnet/runtime#88989 also eliminates some forms of boxing in tier 0.

Collecting all of this instrumentation in tier 0 instrumented code brings with it some of its own challenges. The JIT is augmenting a bunch of methods to track a lot of additional data; where and how does it track it? And how does it do so safely and correctly when multiple threads are potentially accessing all of this at the same time? For example, one of the things the JIT tracks in an instrumented method is which branches are followed and how frequently; that requires it to count each time code traverses that branch. You can imagine that happens, well, a lot. How can it do the counting in a thread-safe yet efficient way?

The answer previously was, it didn’t. It used racy, non-synchronized updates to a shared value, e.g. _branches[branchNum]++. This means that some updates might get lost in the presence of multithreaded access, but as the answer here only needs to be approximate, that was deemed ok. As it turns out, however, in some cases it was resulting in a lot of lost counts, which in turn caused the JIT to optimize for the wrong things. Another approach tried for comparison purposes in dotnet/runtime#82775 was to use interlocked operations (e.g. if this were C#, Interlocked.Increment); that results in perfect accuracy, but that explicit synchronization represents a huge potential bottleneck when heavily contended. dotnet/runtime#84427 provides the approach that’s now enabled by default in .NET 8. It’s an implementation of a scalable approximate counter that employs some amount of pseudo-randomness to decide how often to synchronize and by how much to increment the shared count. There’s a great description of all of this in the dotnet/runtime repo; here is a C# implementation of the counting logic based on that discussion:

static void Count(ref uint sharedCounter)

{

uint currentCount = sharedCounter, delta = 1;

if (currentCount > 0)

{

int logCount = 31 - (int)uint.LeadingZeroCount(currentCount);

if (logCount >= 13)

{

delta = 1u << (logCount - 12);

uint random = (uint)Random.Shared.NextInt64(0, uint.MaxValue + 1L);

if ((random & (delta - 1)) != 0)

{

return;

}

}

}

Interlocked.Add(ref sharedCounter, delta);

}For current count values less than 8192, it ends up just doing the equivalent of an Interlocked.Add(ref counter, 1). However, as the count increases to beyond that threshold, it starts only doing the add randomly half the time, and when it does, it adds 2. Then randomly a quarter of the time it adds 4. Then an eighth of the time it adds 8. And so on. In this way, as more and more increments are performed, it requires writing to the shared counter less and less frequently.

We can test this out with a little app like the following (if you want to try running it, just copy the above Count into the program as well):

// dotnet run -c Release -f net8.0

using System.Diagnostics;

uint counter = 0;

const int ItersPerThread = 100_000_000;

while (true)

{

Run("Interlock", _ => { for (int i = 0; i < ItersPerThread; i++) Interlocked.Increment(ref counter); });

Run("Racy ", _ => { for (int i = 0; i < ItersPerThread; i++) counter++; });

Run("Scalable ", _ => { for (int i = 0; i < ItersPerThread; i++) Count(ref counter); });

Console.WriteLine();

}

void Run(string name, Action<int> body)

{

counter = 0;

long start = Stopwatch.GetTimestamp();

Parallel.For(0, Environment.ProcessorCount, body);

long end = Stopwatch.GetTimestamp();

Console.WriteLine($"{name} => Expected: {Environment.ProcessorCount * ItersPerThread:N0}, Actual: {counter,13:N0}, Elapsed: {Stopwatch.GetElapsedTime(start, end).TotalMilliseconds}ms");

}When I run that, I get results like this:

Interlock => Expected: 1,200,000,000, Actual: 1,200,000,000, Elapsed: 20185.548ms

Racy => Expected: 1,200,000,000, Actual: 138,526,798, Elapsed: 987.4997ms

Scalable => Expected: 1,200,000,000, Actual: 1,193,541,836, Elapsed: 1082.8471msI find these results fascinating. The interlocked approach gets the exact right count, but it’s super slow, ~20x slower than the other approaches. The fastest is the racy additions one, but its count is also wildly inaccurate: it was off by a factor of 8x! The scalable counters solution was only a hair slower than the racy solution, but its count was only off the expected value by 0.5%. This scalable approach then enables the JIT to track what it needs with the efficiency and approximate accuracy it needs. Other PRs like dotnet/runtime#82014, dotnet/runtime#81731, and dotnet/runtime#81932 also went into improving the JIT’s efficiency around tracking this information.

As it turns out, this isn’t the only use of randomness in dynamic PGO. Another is used as part of determining which types are the most common targets of virtual and interface method calls. At a given call site, the JIT wants to know which type is most commonly used and by what percentage; if there’s a clear winner, it can then generate a fast path specific to that type. As in the previous example, tracking a count for every possible type that might come through is expensive. Instead, it uses an algorithm known as “reservoir sampling”. Let’s say I have a char[1_000_000] containing ~60% 'a's, ~30% 'b's, and ~10% 'c's, and I want to know which is the most common. With reservoir sampling, I might do so like this:

// dotnet run -c Release -f net8.0

// Create random input for testing, with 60% a, 30% b, 10% c

char[] chars = new char[1_000_000];

Array.Fill(chars, 'a', 0, 600_000);

Array.Fill(chars, 'b', 600_000, 300_000);

Array.Fill(chars, 'c', 900_000, 100_000);

Random.Shared.Shuffle(chars);

for (int trial = 0; trial < 5; trial++)

{

// Reservoir sampling

char[] reservoir = new char[32]; // same reservoir size as the JIT

int next = 0;

for (int i = 0; i < reservoir.Length && next < chars.Length; i++, next++)

{

reservoir[i] = chars[i];

}

for (; next < chars.Length; next++)

{

int r = Random.Shared.Next(next + 1);

if (r < reservoir.Length)

{

reservoir[r] = chars[next];

}

}

// Print resulting percentages

Console.WriteLine($"a: {reservoir.Count(c => c == 'a') * 100.0 / reservoir.Length}");

Console.WriteLine($"b: {reservoir.Count(c => c == 'b') * 100.0 / reservoir.Length}");

Console.WriteLine($"c: {reservoir.Count(c => c == 'c') * 100.0 / reservoir.Length}");

Console.WriteLine();

}When I run this, I get results like the following:

a: 53.125

b: 31.25

c: 15.625

a: 65.625

b: 28.125

c: 6.25

a: 68.75

b: 25

c: 6.25

a: 40.625

b: 31.25

c: 28.125

a: 59.375

b: 25

c: 15.625Note that in the above example, I actually had all the data in advance; in contrast, the JIT likely has multiple threads all running instrumented code and overwriting elements in the reservoir. I also happened to choose the same size reservoir the JIT is using as of dotnet/runtime#87332, which highlights how that value was chosen for its use case and why it needed to be tweaked.

On all five runs above, it correctly found there to be more 'a's than 'b's and more 'b's than 'c's, and it was often reasonably close to the actual percentages. But, importantly, randomness is involved here, and every run produced slightly different results. I mention this because that means the JIT compiler now incorporates randomness, which means that the produced dynamic PGO instrumentation data is very likely to be slightly different from run to run. However, even without explicit use of randomness, there’s already non-determinism in such code, and in general there’s enough data produced that the overall behavior is quite stable and repeatable.

Interestingly, the JIT’s PGO-based optimizations aren’t just based on the data gathered during instrumented tier 0 execution. With dotnet/runtime#82926 (and a handful of follow-on PRs like dotnet/runtime#83068, dotnet/runtime#83567, dotnet/runtime#84312, and dotnet/runtime#84741), the JIT will now create a synthetic profile based on statically analyzing the code and estimating a profile, such as with various approaches to static branch prediction. The JIT can then blend this data together with the instrumentation data, helping to fill in data where there are gaps (think “Jurassic Park” and using modern reptile DNA to plug the gaps in the recovered dinosaur DNA).

Beyond the mechanisms used to enable tiering and dynamic PGO getting better (and, did I mention, being on by default?!) in .NET 8, the optimizations it performs also get better. One of the main optimizations dynamic PGO feeds is the ability to devirtualize virtual and interface calls per call site. As noted, the JIT tracks what concrete types are used, and then can generate a fast path for the most common type; this is known as guarded devirtualization (GDV). Consider this benchmark:

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

[DisassemblyDiagnoser(maxDepth: 0)]

public class Tests

{

internal interface IValueProducer

{

int GetValue();

}

class Producer42 : IValueProducer

{

public int GetValue() => 42;

}

private IValueProducer _valueProducer;

private int _factor = 2;

[GlobalSetup]

public void Setup() => _valueProducer = new Producer42();

[Benchmark]

public int GetValue() => _valueProducer.GetValue() * _factor;

}The GetValue method is doing:

return _valueProducer.GetValue() * _factor;Without PGO, that’s just a normal interface dispatch. With PGO, however, the JIT will end up seeing that the actual type of _valueProducer is most commonly Producer42, and it will end up generating tier 1 code closer to if my benchmark was instead:

int result = _valueProducer.GetType() == typeof(Producer42) ?

Unsafe.As<Producer42>(_valueProducer).GetValue() :

_valueProducer.GetValue();

return result * _factor;It can then in turn see that the Producer42.GetValue() method is really simple, and so not only is the GetValue call devirtualized, it’s also inlined, such that the code effectively becomes:

int result = _valueProducer.GetType() == typeof(Producer42) ?

42 :

_valueProducer.GetValue();

return result * _factor;We can confirm this by running the above benchmark. The resulting numbers certainly show something going on:

| Method | Runtime | Mean | Ratio | Code Size |

|---|---|---|---|---|

| GetValue | .NET 7.0 | 1.6430 ns | 1.00 | 35 B |

| GetValue | .NET 8.0 | 0.0523 ns | 0.03 | 57 B |

We see it’s both faster (which we expected) and more code (which we also expected). Now for the assembly. On .NET 7, we get this:

; Tests.GetValue()

push rsi

sub rsp,20

mov rsi,rcx

mov rcx,[rsi+8]

mov r11,7FF999B30498

call qword ptr [r11]

imul eax,[rsi+10]

add rsp,20

pop rsi

ret

; Total bytes of code 35We can see it’s performing the interface call (the three movs followed by the call) and then multiplying the result by _factor (imul eax,[rsi+10]). Now on .NET 8, we get this:

; Tests.GetValue()

push rbx

sub rsp,20

mov rbx,rcx

mov rcx,[rbx+8]

mov rax,offset MT_Tests+Producer42

cmp [rcx],rax

jne short M00_L01

mov eax,2A

M00_L00:

imul eax,[rbx+10]

add rsp,20

pop rbx

ret

M00_L01:

mov r11,7FFA1FAB04D8

call qword ptr [r11]

jmp short M00_L00

; Total bytes of code 57We still see the call, but it’s buried in a cold section at the end. Instead, we see the type of the object being compared against MT_Tests+Producer42, and if it matches (the cmp [rcx],rax followed by the jne), we store 2A into eax; 2A is the hex representation of 42, so this is the entirety of the inlined body of the devirtualized Producer42.GetValue call. .NET 8 is also capable of doing multiple GDVs, meaning it can generate fast paths for more than 1 type, thanks in large part to dotnet/runtime#86551 and dotnet/runtime#86809. However, this is off by default and for now needs to be opted-into with a configuration setting (setting the DOTNET_JitGuardedDevirtualizationMaxTypeChecks environment variable to the desired maximum number of types for which to test). We can see the impact of that with this benchmark (note that because I’ve explicitly specified the configs to use in the code itself, I’ve omitted the --runtimes argument in the dotnet command):

// dotnet run -c Release -f net8.0 --filter "*"

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Configs;

using BenchmarkDotNet.Environments;

using BenchmarkDotNet.Jobs;

using BenchmarkDotNet.Running;

using System.Runtime.CompilerServices;

var config = DefaultConfig.Instance

.AddJob(Job.Default.WithId("ChecksOne").WithRuntime(CoreRuntime.Core80))

.AddJob(Job.Default.WithId("ChecksThree").WithRuntime(CoreRuntime.Core80).WithEnvironmentVariable("DOTNET_JitGuardedDevirtualizationMaxTypeChecks", "3"));

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args, config);

[HideColumns("Error", "StdDev", "Median", "RatioSD", "EnvironmentVariables")]

[DisassemblyDiagnoser]

public class Tests

{

private readonly A _a = new();

private readonly B _b = new();

private readonly C _c = new();

[Benchmark]

public void Multiple()

{

DoWork(_a);

DoWork(_b);

DoWork(_c);

}

[MethodImpl(MethodImplOptions.NoInlining)]

private static int DoWork(IMyInterface i) => i.GetValue();

private interface IMyInterface { int GetValue(); }

private class A : IMyInterface { public int GetValue() => 123; }

private class B : IMyInterface { public int GetValue() => 456; }

private class C : IMyInterface { public int GetValue() => 789; }

}| Method | Job | Mean | Code Size |

|---|---|---|---|

| Multiple | ChecksOne | 7.463 ns | 90 B |

| Multiple | ChecksThree | 5.632 ns | 133 B |

And in the assembly code with the environment variable set, we can indeed see it doing multiple checks for three types before falling back to the general interface dispatch:

; Tests.DoWork(IMyInterface)

sub rsp,28

mov rax,offset MT_Tests+A

cmp [rcx],rax

jne short M01_L00

mov eax,7B

jmp short M01_L02

M01_L00:

mov rax,offset MT_Tests+B

cmp [rcx],rax

jne short M01_L01

mov eax,1C8

jmp short M01_L02

M01_L01:

mov rax,offset MT_Tests+C

cmp [rcx],rax

jne short M01_L03

mov eax,315

M01_L02:

add rsp,28

ret

M01_L03:

mov r11,7FFA1FAC04D8

call qword ptr [r11]

jmp short M01_L02

; Total bytes of code 88(Interestingly, this optimization gets a bit better in Native AOT. There, with dotnet/runtime#87055, there can be no need for the fallback path. The compiler can see the entire program being optimized and can generate fast paths for all of the types that implement the target abstraction if it’s a small number.)

dotnet/runtime#75140 provides another really nice optimization, still related to GDV, but now for delegates and in relation to loop cloning. Take the following benchmark:

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

[DisassemblyDiagnoser]

public class Tests

{

private readonly Func<int, int> _func = i => i + 1;

[Benchmark]

public int Sum() => Sum(_func);

private static int Sum(Func<int, int> func)

{

int sum = 0;

for (int i = 0; i < 10_000; i++)

{

sum += func(i);

}

return sum;

}

}Dynamic PGO is capable of doing GDV with delegates just as it is with virtual and interface methods. The JIT’s profiling of this method will highlight that the function being invoked is always the same i => i + 1 lambda, and as we saw, that can then be transformed into a method something like the following pseudo-code:

private static int Sum(Func<int, int> func)

{

int sum = 0;

for (int i = 0; i < 10_000; i++)

{

sum += func.Method == KnownLambda ? i + 1 : func(i);

}

return sum;

}It’s not very visible that inside our loop we’re performing the same check over and over and over. We’re also branching based on it. One common compiler optimization is “hoisting,” where a computation that’s “loop invariant” (meaning it doesn’t change per iteration) can be pulled out of the loop to be above it, e.g.

private static int Sum(Func<int, int> func)

{

int sum = 0;

bool isAdd = func.Method == KnownLambda;

for (int i = 0; i < 10_000; i++)

{

sum += isAdd ? i + 1 : func(i);

}

return sum;

}but even with that, we still have the branch on each iteration. Wouldn’t it be nice if we could hoist that as well? What if we could “clone” the loop, duplicating it once for when the method is the known target and once for when it’s not. That’s “loop cloning,” an optimization the JIT is already capable of for other reasons, and now in .NET 8 the JIT is capable of that with this exact scenario, too. The code it’ll produce ends up then being very similar to this:

private static int Sum(Func<int, int> func)

{

int sum = 0;

if (func.Method == KnownLambda)

{

for (int i = 0; i < 10_000; i++)

{

sum += i + 1;

}

}

else

{

for (int i = 0; i < 10_000; i++)

{

sum += func(i);

}

}

return sum;

}Looking at the generated assembly on .NET 8 confirms this:

; Tests.Sum(System.Func`2<Int32,Int32>)

push rdi

push rsi

push rbx

sub rsp,20

mov rbx,rcx

xor esi,esi

xor edi,edi

test rbx,rbx

je short M01_L01

mov rax,7FFA2D630F78

cmp [rbx+18],rax

jne short M01_L01

M01_L00:

inc edi

mov eax,edi

add esi,eax

cmp edi,2710

jl short M01_L00

jmp short M01_L03

M01_L01:

mov rax,7FFA2D630F78

cmp [rbx+18],rax

jne short M01_L04

lea eax,[rdi+1]

M01_L02:

add esi,eax

inc edi

cmp edi,2710

jl short M01_L01

M01_L03:

mov eax,esi

add rsp,20

pop rbx

pop rsi

pop rdi

ret

M01_L04:

mov edx,edi

mov rcx,[rbx+8]

call qword ptr [rbx+18]

jmp short M01_L02

; Total bytes of code 103Focus just on the M01_L00 block: you can see it ends with a jl short M01_L00 to loop back around to M01_L00 if edi (which is storing i) is less than 0x2710, or 10,000 decimal, aka our loop’s upper bound. Note that there are just a few instructions in the middle, nothing at all resembling a call… this is the optimized cloned loop, where our lambda has been inlined. There’s another loop that alternates between M01_L02, M01_L01, and M01_L04, and that one does have a call… that’s the fallback loop. And if we run the benchmark, we see a huge resulting improvement:

| Method | Runtime | Mean | Ratio | Code Size |

|---|---|---|---|---|

| Sum | .NET 7.0 | 16.546 us | 1.00 | 55 B |

| Sum | .NET 8.0 | 2.320 us | 0.14 | 113 B |

As long as we’re discussing hoisting, it’s worth noting other improvements have also contributed. In particular, dotnet/runtime#81635 enables the JIT to hoist more code used in generic method dispatch. We can see that in action with a benchmark like this:

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

using System.Runtime.CompilerServices;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

public class Tests

{

[Benchmark]

public void Test() => Test<string>();

static void Test<T>()

{

for (int i = 0; i < 100; i++)

{

Callee<T>();

}

}

[MethodImpl(MethodImplOptions.NoInlining)]

static void Callee<T>() { }

}| Method | Runtime | Mean | Ratio |

|---|---|---|---|

| Test | .NET 7.0 | 170.8 ns | 1.00 |

| Test | .NET 8.0 | 147.0 ns | 0.86 |

Before moving on, one word of warning about dynamic PGO: it’s good at what it does, really good. Why is that a “warning?” Dynamic PGO is very good about seeing what your code is doing and optimizing for it, which is awesome when you’re talking about your production applications. But there’s a particular kind of coding where you might not want that to happen, or at least you need to be acutely aware of it happening, and you’re currently looking at it: benchmarks. Microbenchmarks are all about isolating a particular piece of functionality and running that over and over and over and over in order to get good measurements about its overhead. With dynamic PGO, however, the JIT will then optimize for the exact thing you’re testing. If the thing you’re testing is exactly how the code will execute in production, then awesome. But if your test isn’t fully representative, you can get a skewed understanding of the costs involved, which can lead to making less-than-ideal assumptions and decisions.

For example, consider this benchmark:

// dotnet run -c Release -f net8.0 --filter "*"

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Configs;

using BenchmarkDotNet.Environments;

using BenchmarkDotNet.Jobs;

using BenchmarkDotNet.Running;

var config = DefaultConfig.Instance

.AddJob(Job.Default.WithId("No PGO").WithRuntime(CoreRuntime.Core80).WithEnvironmentVariable("DOTNET_TieredPGO", "0"))

.AddJob(Job.Default.WithId("PGO").WithRuntime(CoreRuntime.Core80));

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args, config);

[HideColumns("Error", "StdDev", "Median", "RatioSD", "EnvironmentVariables")]

public class Tests

{

private static readonly Random s_rand = new();

private readonly IEnumerable<int> _source = Enumerable.Repeat(0, 1024);

[Params(1.0, 0.5)]

public double Probability { get; set; }

[Benchmark]

public bool Any() => s_rand.NextDouble() < Probability ?

_source.Any(i => i == 42) :

_source.Any(i => i == 43);

}This runs a benchmark with two different “Probability” values. Regardless of that value, the code that’s executed for the benchmark does exactly the same thing and should result in exactly the same assembly code (other than one path checking for the value 42 and the other for 43). In a world without PGO, there should be close to zero difference in performance between the runs, and if we set the DOTNET_TieredPGO environment variable to 0 (to disable PGO), that’s exactly what we see, but with PGO, we observe a larger difference:

| Method | Job | Probability | Mean |

|---|---|---|---|

| Any | No PGO | 0.5 | 5.354 us |

| Any | No PGO | 1 | 5.314 us |

| Any | PGO | 0.5 | 1.969 us |

| Any | PGO | 1 | 1.495 us |

When all of the calls use i == 42 (because we set the probability to 1, all of the random values are less than that, and we always take the first branch), we see throughput ends up being 25% faster than when half of the calls use i == 42 and half use i == 43. If your benchmark was only trying to measure the overhead of using Enumerable.Any, you might not realize that the resulting code was being optimized for calling Any with the same delegate every time, in which case you get different results than if Any is called with multiple delegates and all with reasonably equal chances of being used. (As an aside, the nice overall improvement between dynamic PGO being disabled and enabled comes in part from the use of Random, which internally makes a virtual call that dynamic PGO can help elide.)

Throughout the rest of this post, I’ve kept this in mind and tried hard to show benchmarks where the resulting wins are due primarily to the cited improvements in the relevant code; where dynamic PGO plays a larger role in the improvements, I’ve called that out, often showing the results with and without dynamic PGO. There are many more benchmarks I could have shown but have avoided where it would look like a particular method had massive improvements, yet in reality it’d all be due to dynamic PGO being its awesome self rather than some explicit change made to the method’s C# code.

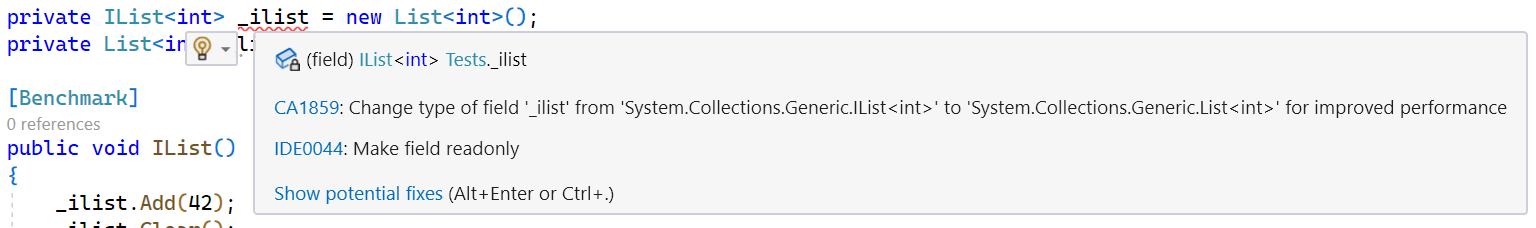

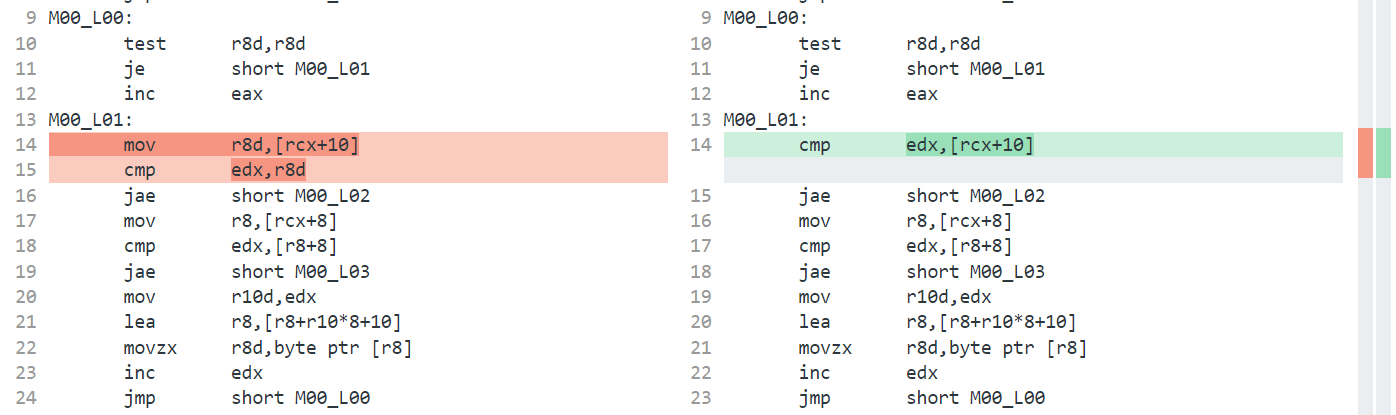

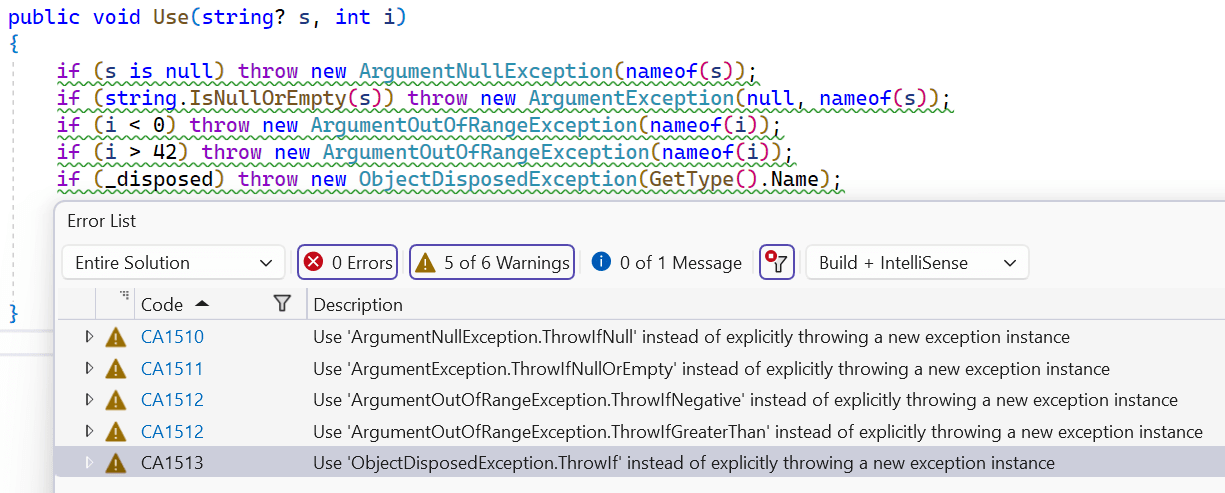

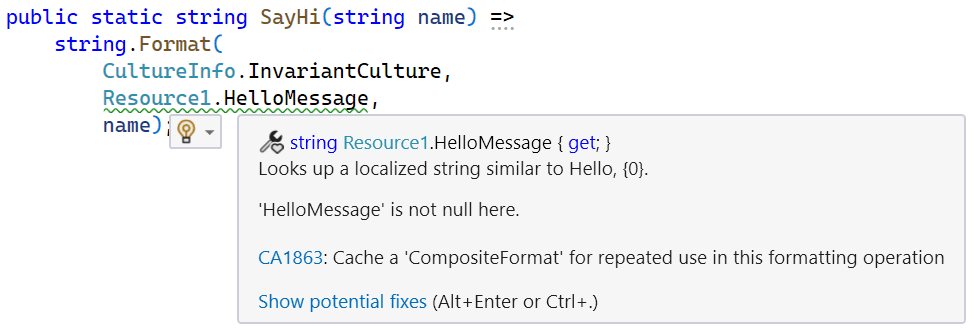

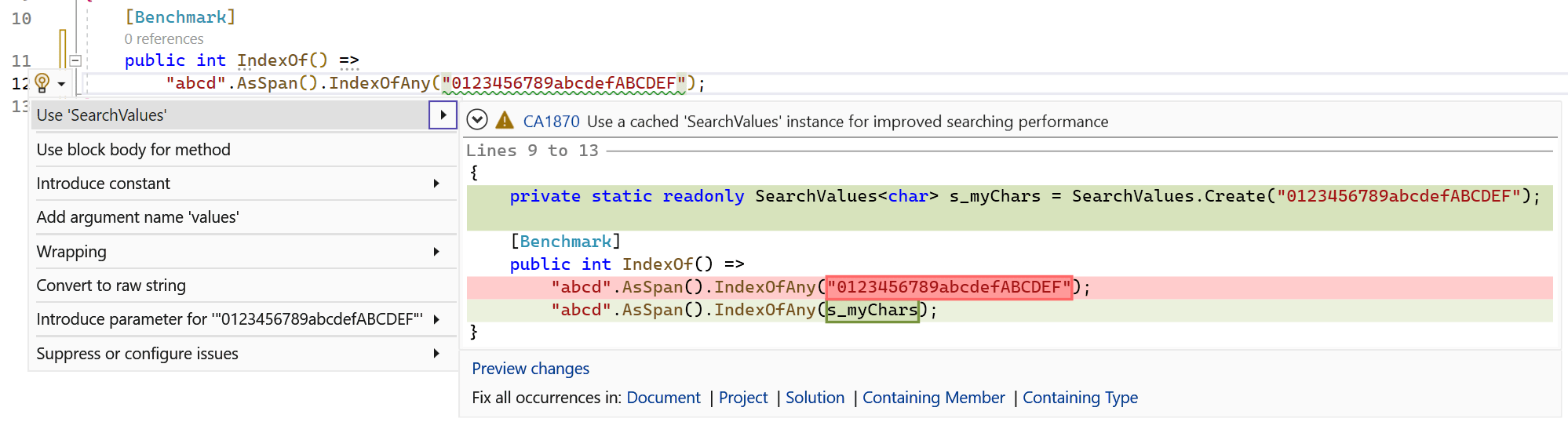

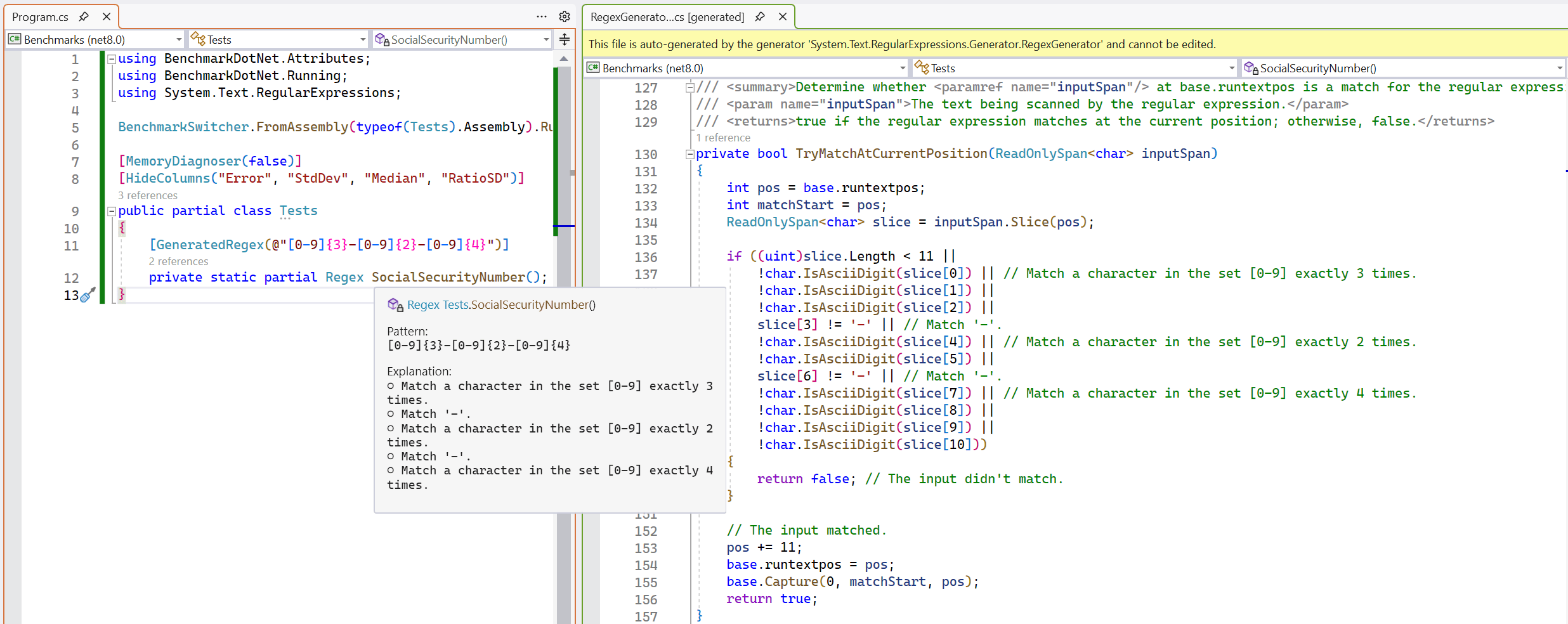

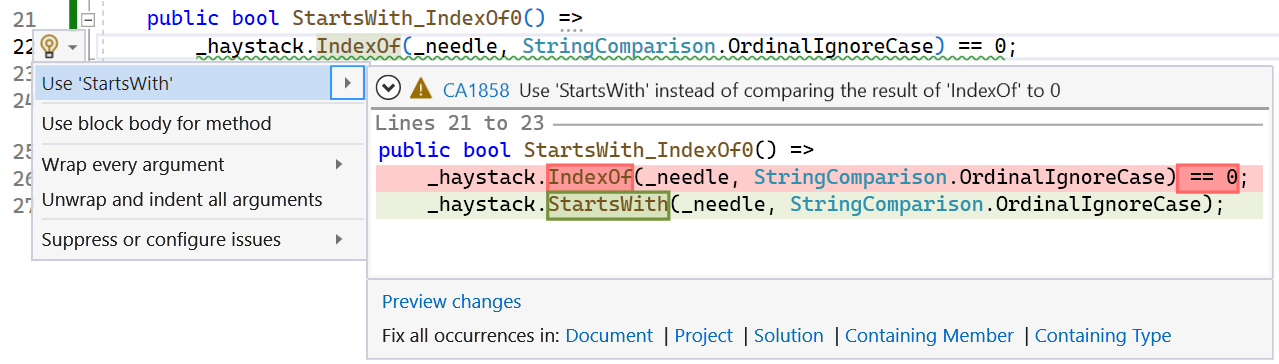

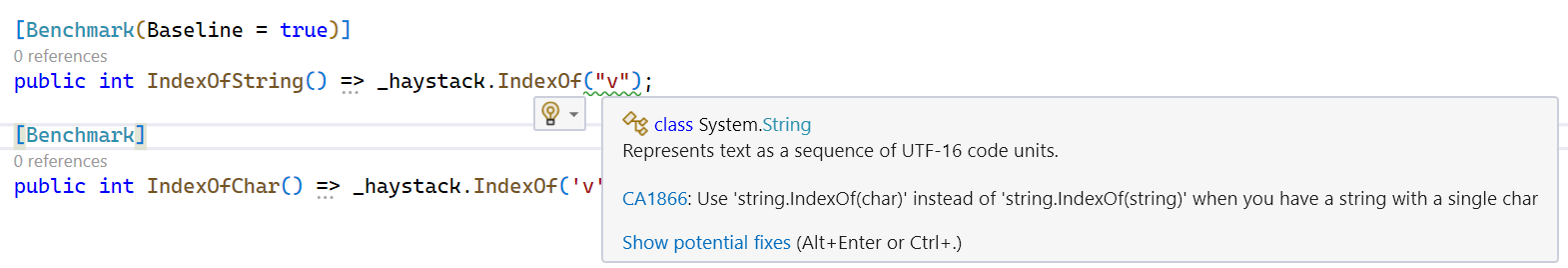

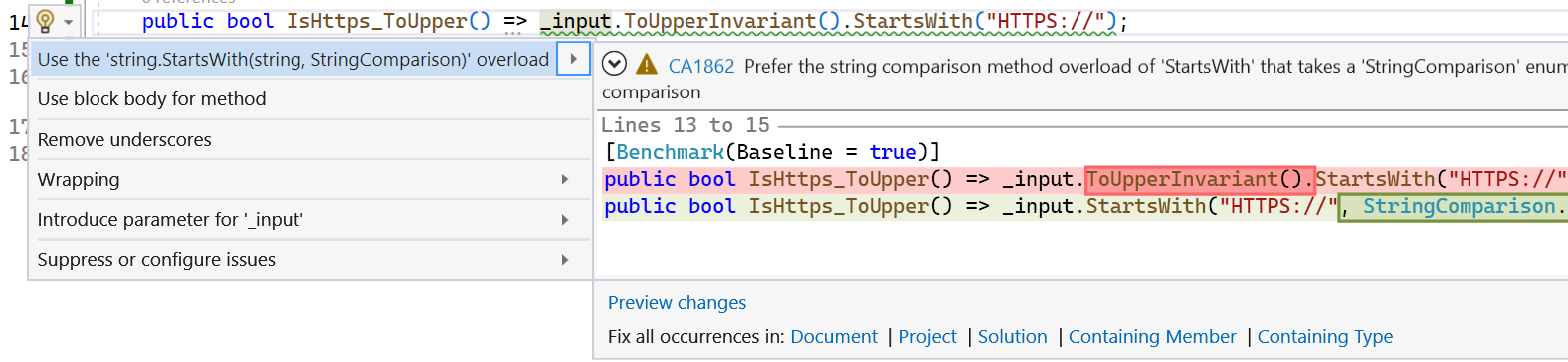

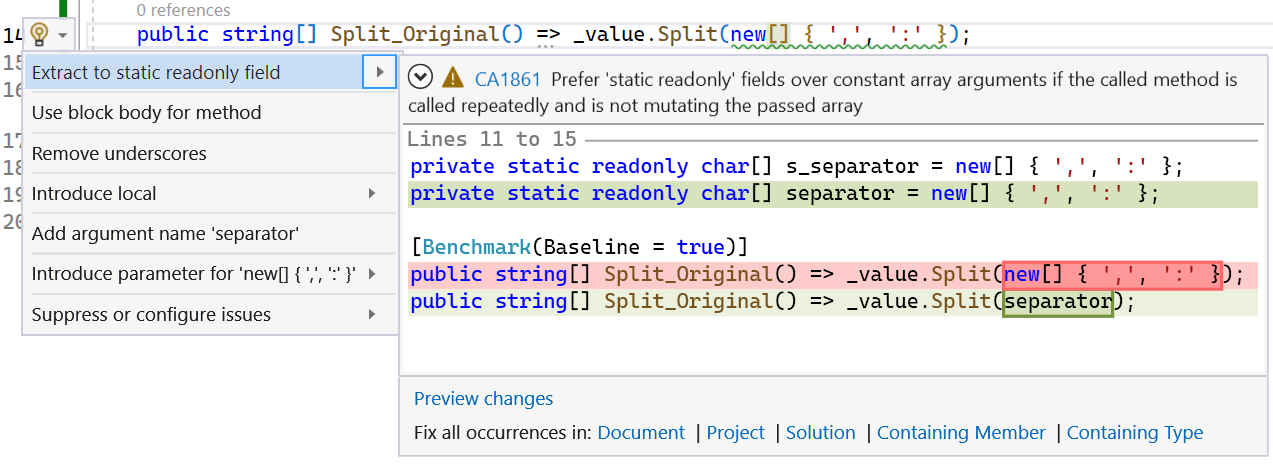

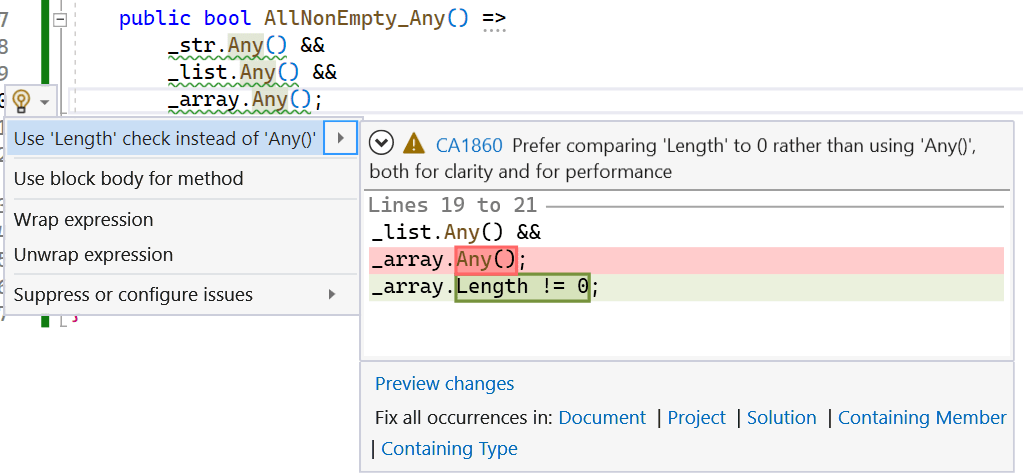

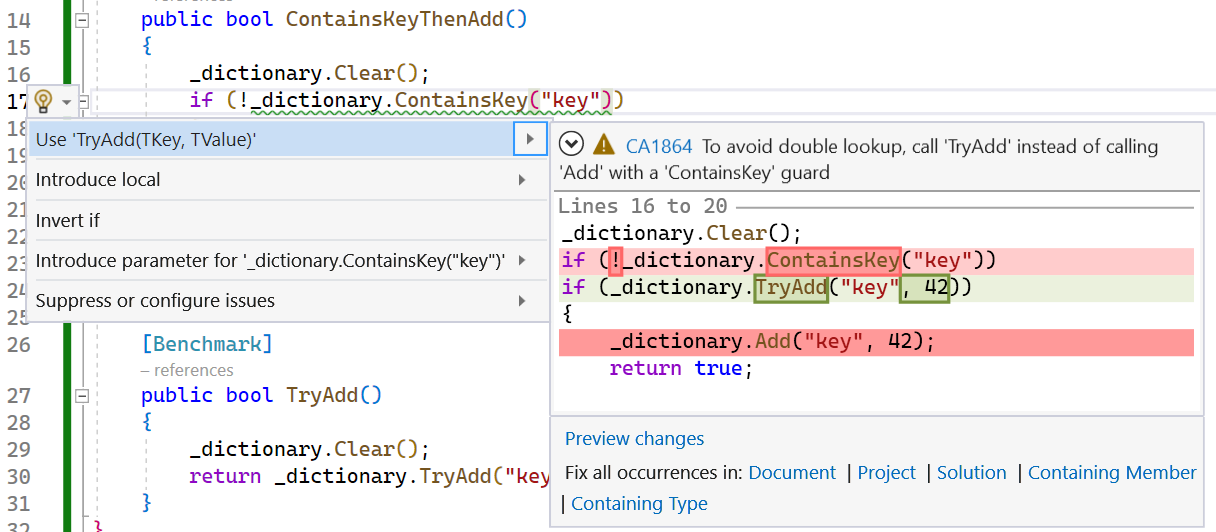

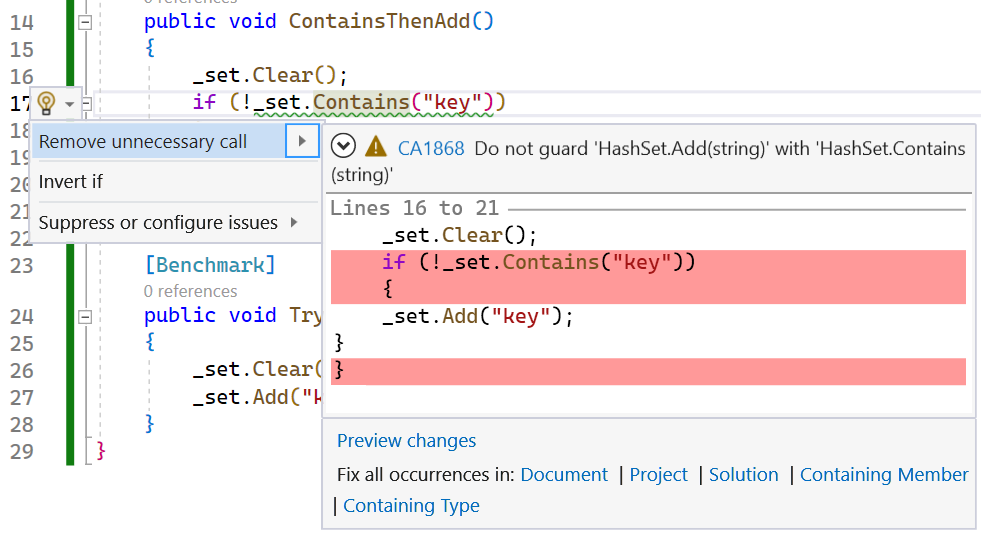

One final note about dynamic PGO: it’s awesome, but it doesn’t obviate the need for thoughtful coding. If you know and can use something’s concrete type rather than an abstraction, from a performance perspective it’s better to do so rather than hoping the JIT will be able to see through it and devirtualize. To help with this, a new analyzer, CA1859, was added to the .NET SDK in dotnet/roslyn-analyzers#6370. The analyzer looks for places where interfaces or base classes could be replaced by derived types in order to avoid interface and virtual dispatch.

dotnet/runtime#80335 and dotnet/runtime#80848 rolled this out across dotnet/runtime. As you can see from the first PR in particular, there were hundreds of places identified that with just an edit of one character (e.g. replacing

dotnet/runtime#80335 and dotnet/runtime#80848 rolled this out across dotnet/runtime. As you can see from the first PR in particular, there were hundreds of places identified that with just an edit of one character (e.g. replacing IList<T> with List<T>), we could possibly reduce overheads.

// dotnet run -c Release -f net8.0 --filter "*"

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Configs;

using BenchmarkDotNet.Environments;

using BenchmarkDotNet.Jobs;

using BenchmarkDotNet.Running;

var config = DefaultConfig.Instance

.AddJob(Job.Default.WithId("No PGO").WithRuntime(CoreRuntime.Core80).WithEnvironmentVariable("DOTNET_TieredPGO", "0"))

.AddJob(Job.Default.WithId("PGO").WithRuntime(CoreRuntime.Core80));

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args, config);

[HideColumns("Error", "StdDev", "Median", "RatioSD", "EnvironmentVariables")]

public class Tests

{

private readonly IList<int> _ilist = new List<int>();

private readonly List<int> _list = new();

[Benchmark]

public void IList()

{

_ilist.Add(42);

_ilist.Clear();

}

[Benchmark]

public void List()

{

_list.Add(42);

_list.Clear();

}

}| Method | Job | Mean |

|---|---|---|

| IList | No PGO | 2.876 ns |

| IList | PGO | 1.777 ns |

| List | No PGO | 1.718 ns |

| List | PGO | 1.476 ns |

Vectorization

Another huge area of investment in code generation in .NET 8 is around vectorization. This is a continuation of a theme that’s been going for multiple .NET releases. Almost a decade ago, .NET gained the Vector<T> type. .NET Core 3.0 and .NET 5 added thousands of intrinsic methods for directly targeting specific hardware instructions. .NET 7 provided hundreds of cross-platform operations for Vector128<T> and Vector256<T> to enable SIMD algorithms on fixed-width vectors. And now in .NET 8, .NET gains support for AVX512, both with new hardware intrinsics directly exposing AVX512 instructions and with the new Vector512 and Vector512<T> types.

There were a plethora of changes that went into improving existing SIMD support, such as dotnet/runtime#76221 that improves the handling of Vector256<T> when it’s not hardware accelerated by lowering it as two Vector128<T> operations. Or like dotnet/runtime#87283, which removed the generic constraint on the T in all of the vector types in order to make them easier to use in a larger set of contexts. But the bulk of the work in this area in this release is focused on AVX512.

Wikipedia has a good overview of AVX512, which provides instructions for processing 512-bits at a time. In addition to providing wider versions of the 256-bit instructions seen in previous instruction sets, it also adds a variety of new operations, almost all of which are exposed via one of the new types in System.Runtime.Intrinsics.X86, like Avx512BW, AVX512CD, Avx512DQ, Avx512F, and Avx512Vbmi. dotnet/runtime#83040 kicked things off by stubbing out the various files, followed by dozens of PRs that filled in the functionality, for example dotnet/runtime#84909 that added the 512-bit variants of the SSE through SSE4.2 intrinsics that already exist; like dotnet/runtime#75934 from @DeepakRajendrakumaran and dotnet/runtime#77419 from @DeepakRajendrakumaran that added support for the EVEX encoding used by AVX512 instructions; like dotnet/runtime#74113 from @DeepakRajendrakumaran that added the logic for detecting AVX512 support; like dotnet/runtime#80960 from @DeepakRajendrakumaran and dotnet/runtime#79544 from @anthonycanino that enlightened the register allocator and emitter about AVX512’s additional registers; and like dotnet/runtime#87946 from @Ruihan-Yin and dotnet/runtime#84937 from @jkrishnavs that plumbed through knowledge of various intrinsics.

Let’s take it for a spin. The machine on which I’m writing this doesn’t have AVX512 support, but my Dev Box does, so I’m using that for AVX512 comparisons (using WSL with Ubuntu). In last year’s Performance Improvements in .NET 7, we wrote a Contains method that used Vector256<T> if there was sufficient data available and it was accelerated, or else Vector128<T> if there was sufficient data available and it was accelerated, or else a scalar fallback. Tweaking that to also “light up” with AVX512 took me literally less than 30 seconds: copy/paste the code block for Vector256 and then search and replace in that copy from “Vector256” to “Vector512″… boom, done. Here it is in a benchmark, using environment variables to disable the JIT’s ability to use the various instruction sets so that we can try out this method with each acceleration path:

// dotnet run -c Release -f net8.0 --filter "*"

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Configs;

using BenchmarkDotNet.Jobs;

using BenchmarkDotNet.Running;

using System.Runtime.CompilerServices;

using System.Runtime.InteropServices;

using System.Runtime.Intrinsics;

var config = DefaultConfig.Instance

.AddJob(Job.Default.WithId("Scalar").WithEnvironmentVariable("DOTNET_EnableHWIntrinsic", "0").AsBaseline())

.AddJob(Job.Default.WithId("Vector128").WithEnvironmentVariable("DOTNET_EnableAVX2", "0").WithEnvironmentVariable("DOTNET_EnableAVX512F", "0"))

.AddJob(Job.Default.WithId("Vector256").WithEnvironmentVariable("DOTNET_EnableAVX512F", "0"))

.AddJob(Job.Default.WithId("Vector512"));

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args, config);

[HideColumns("Error", "StdDev", "Median", "RatioSD", "EnvironmentVariables", "value")]

public class Tests

{

private readonly byte[] _data = Enumerable.Repeat((byte)123, 999).Append((byte)42).ToArray();

[Benchmark]

[Arguments((byte)42)]

public bool Find(byte value) => Contains(_data, value);

private static unsafe bool Contains(ReadOnlySpan<byte> haystack, byte needle)

{

if (Vector128.IsHardwareAccelerated && haystack.Length >= Vector128<byte>.Count)

{

ref byte current = ref MemoryMarshal.GetReference(haystack);

if (Vector512.IsHardwareAccelerated && haystack.Length >= Vector512<byte>.Count)

{

Vector512<byte> target = Vector512.Create(needle);

ref byte endMinusOneVector = ref Unsafe.Add(ref current, haystack.Length - Vector512<byte>.Count);

do

{

if (Vector512.EqualsAny(target, Vector512.LoadUnsafe(ref current)))

return true;

current = ref Unsafe.Add(ref current, Vector512<byte>.Count);

}

while (Unsafe.IsAddressLessThan(ref current, ref endMinusOneVector));

if (Vector512.EqualsAny(target, Vector512.LoadUnsafe(ref endMinusOneVector)))

return true;

}

else if (Vector256.IsHardwareAccelerated && haystack.Length >= Vector256<byte>.Count)

{

Vector256<byte> target = Vector256.Create(needle);

ref byte endMinusOneVector = ref Unsafe.Add(ref current, haystack.Length - Vector256<byte>.Count);

do

{

if (Vector256.EqualsAny(target, Vector256.LoadUnsafe(ref current)))

return true;

current = ref Unsafe.Add(ref current, Vector256<byte>.Count);

}

while (Unsafe.IsAddressLessThan(ref current, ref endMinusOneVector));

if (Vector256.EqualsAny(target, Vector256.LoadUnsafe(ref endMinusOneVector)))

return true;

}

else

{

Vector128<byte> target = Vector128.Create(needle);

ref byte endMinusOneVector = ref Unsafe.Add(ref current, haystack.Length - Vector128<byte>.Count);

do

{

if (Vector128.EqualsAny(target, Vector128.LoadUnsafe(ref current)))

return true;

current = ref Unsafe.Add(ref current, Vector128<byte>.Count);

}

while (Unsafe.IsAddressLessThan(ref current, ref endMinusOneVector));

if (Vector128.EqualsAny(target, Vector128.LoadUnsafe(ref endMinusOneVector)))

return true;

}

}

else

{

for (int i = 0; i < haystack.Length; i++)

if (haystack[i] == needle)

return true;

}

return false;

}

}| Method | Job | Mean | Ratio |

|---|---|---|---|

| Find | Scalar | 461.49 ns | 1.00 |

| Find | Vector128 | 37.94 ns | 0.08 |

| Find | Vector256 | 22.98 ns | 0.05 |

| Find | Vector512 | 10.93 ns | 0.02 |

Numerous PRs elsewhere in the JIT then take advantage of AVX512 support when it’s available. For example, separate from AVX512, dotnet/runtime#83945 and dotnet/runtime#84530 taught the JIT how to unroll SequenceEqual operations, such that the JIT can emit optimized, vectorized replacements when it can see a constant length for at least one of the inputs. “Unrolling” means that rather than emitting a loop for N iterations, each of which does the loop body once, a loop is emitted for N / M iterations, where every iteration does the loop body M times (and if N == M, there is no loop at all). So for a benchmark like this:

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

[DisassemblyDiagnoser(maxDepth: 0)]

public class Tests

{

private byte[] _scheme = "Transfer-Encoding"u8.ToArray();

[Benchmark]

public bool SequenceEqual() => "Transfer-Encoding"u8.SequenceEqual(_scheme);

}we now get results like this:

| Method | Runtime | Mean | Ratio | Code Size |

|---|---|---|---|---|

| SequenceEqual | .NET 7.0 | 3.0558 ns | 1.00 | 65 B |

| SequenceEqual | .NET 8.0 | 0.8055 ns | 0.26 | 91 B |

For .NET 7, we see assembly code like this (note the call instruction to the underlying SequenceEqual helper):

; Tests.SequenceEqual()

sub rsp,28

mov r8,1D7BB272E48

mov rcx,[rcx+8]

test rcx,rcx

je short M00_L03

lea rdx,[rcx+10]

mov eax,[rcx+8]

M00_L00:

mov rcx,r8

cmp eax,11

je short M00_L02

xor eax,eax

M00_L01:

add rsp,28

ret

M00_L02:

mov r8d,11

call qword ptr [7FF9D33CF120]; System.SpanHelpers.SequenceEqual(Byte ByRef, Byte ByRef, UIntPtr)

jmp short M00_L01

M00_L03:

xor edx,edx

xor eax,eax

jmp short M00_L00

; Total bytes of code 65And now for .NET 8, we get assembly code like this:

; Tests.SequenceEqual()

vzeroupper

mov rax,1EBDDA92D38

mov rcx,[rcx+8]

test rcx,rcx

je short M00_L01

lea rdx,[rcx+10]

mov r8d,[rcx+8]

M00_L00:

cmp r8d,11

jne short M00_L03

vmovups xmm0,[rax]

vmovups xmm1,[rdx]

vmovups xmm2,[rax+1]

vmovups xmm3,[rdx+1]

vpxor xmm0,xmm0,xmm1

vpxor xmm1,xmm2,xmm3

vpor xmm0,xmm0,xmm1

vptest xmm0,xmm0

sete al

movzx eax,al

jmp short M00_L02

M00_L01:

xor edx,edx

xor r8d,r8d

jmp short M00_L00

M00_L02:

ret

M00_L03:

xor eax,eax

jmp short M00_L02

; Total bytes of code 91Now there’s no call, with the entire implementation provided by the JIT; we can see it making liberal use of the 128-bit xmm SIMD registers. However, those PRs only enabled the JIT to handle up to 64 bytes being compared (unrolling results in larger code, so at some length it no longer makes sense to unroll). With AVX512 support in the JIT, dotnet/runtime#84854 then extends that up to 128 bytes. This is easily visible in a benchmark like this, which is similar to the previous example, but with larger data:

// dotnet run -c Release -f net8.0 --filter "*"

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

[DisassemblyDiagnoser(maxDepth: 0)]

public class Tests

{

private byte[] _data1, _data2;

[GlobalSetup]

public void Setup()

{

_data1 = Enumerable.Repeat((byte)42, 200).ToArray();

_data2 = (byte[])_data1.Clone();

}

[Benchmark]

public bool SequenceEqual() => _data1.AsSpan(0, 128).SequenceEqual(_data2.AsSpan(128));

}On my Dev Box with AVX512 support, for .NET 8 we get:

; Tests.SequenceEqual()

sub rsp,28

vzeroupper

mov rax,[rcx+8]

test rax,rax

je short M00_L01

cmp dword ptr [rax+8],80

jb short M00_L01

add rax,10

mov rcx,[rcx+10]

test rcx,rcx

je short M00_L01

mov edx,[rcx+8]

cmp edx,80

jb short M00_L01

add rcx,10

add rcx,80

add edx,0FFFFFF80

cmp edx,80

je short M00_L02

xor eax,eax

M00_L00:

vzeroupper

add rsp,28

ret

M00_L01:

call qword ptr [7FF820745F08]

int 3

M00_L02:

vmovups zmm0,[rax]

vmovups zmm1,[rcx]

vmovups zmm2,[rax+40]

vmovups zmm3,[rcx+40]

vpxorq zmm0,zmm0,zmm1

vpxorq zmm1,zmm2,zmm3

vporq zmm0,zmm0,zmm1

vxorps ymm1,ymm1,ymm1

vpcmpeqq k1,zmm0,zmm1

kortestb k1,k1

setb al

movzx eax,al

jmp short M00_L00

; Total bytes of code 154Now instead of the 128-bit xmm registers, we see use of the 512-bit zmm registers from AVX512.

The JIT in .NET 8 also now unrolls memmoves (CopyTo, ToArray, etc.) for small-enough constant lengths, thanks to dotnet/runtime#83638 and dotnet/runtime#83740. And then with dotnet/runtime#84348 that unrolling takes advantage of AVX512 if it’s available. dotnet/runtime#85501 extends this to Span<T>.Fill, too.

dotnet/runtime#84885 extended the unrolling and vectorization done as part of string/ReadOnlySpan<char> Equals and StartsWith to utilize AVX512 when available, as well.

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

[DisassemblyDiagnoser(maxDepth: 0)]

public class Tests

{

private readonly string _str = "Let me not to the marriage of true minds admit impediments";

[Benchmark]

public bool Equals() => _str.AsSpan().Equals(

"LET ME NOT TO THE MARRIAGE OF TRUE MINDS ADMIT IMPEDIMENTS",

StringComparison.OrdinalIgnoreCase);

}| Method | Runtime | Mean | Ratio | Code Size |

|---|---|---|---|---|

| Equals | .NET 7.0 | 30.995 ns | 1.00 | 101 B |

| Equals | .NET 8.0 | 1.658 ns | 0.05 | 116 B |

It’s so fast in .NET 8 because, whereas with .NET 7 it ends up calling through to the underlying helper:

; Tests.Equals()

sub rsp,48

xor eax,eax

mov [rsp+28],rax

vxorps xmm4,xmm4,xmm4

vmovdqa xmmword ptr [rsp+30],xmm4

mov [rsp+40],rax

mov rcx,[rcx+8]

test rcx,rcx

je short M00_L03

lea rdx,[rcx+0C]

mov ecx,[rcx+8]

M00_L00:

mov r8,21E57C058A0

mov r8,[r8]

add r8,0C

cmp ecx,3A

jne short M00_L02

mov rcx,rdx

mov rdx,r8

mov r8d,3A

call qword ptr [7FF8194B1A08]; System.Globalization.Ordinal.EqualsIgnoreCase(Char ByRef, Char ByRef, Int32)

M00_L01:

nop

add rsp,48

ret

M00_L02:

xor eax,eax

jmp short M00_L01

M00_L03:

xor ecx,ecx

xor edx,edx

xchg rcx,rdx

jmp short M00_L00

; Total bytes of code 101in .NET 8, the JIT generates code for the operation directly, taking advantage of AVX512’s greater width and thus able to process a larger input without significantly increasing code size:

; Tests.Equals()

vzeroupper

mov rax,[rcx+8]

test rax,rax

jne short M00_L00

xor ecx,ecx

xor edx,edx

jmp short M00_L01

M00_L00:

lea rcx,[rax+0C]

mov edx,[rax+8]

M00_L01:

cmp edx,3A

jne short M00_L02

vmovups zmm0,[rcx]

vmovups zmm1,[7FF820495080]

vpternlogq zmm0,zmm1,[7FF8204950C0],56

vmovups zmm1,[rcx+34]

vporq zmm1,zmm1,[7FF820495100]

vpternlogq zmm0,zmm1,[7FF820495140],0F6

vxorps ymm1,ymm1,ymm1

vpcmpeqq k1,zmm0,zmm1

kortestb k1,k1

setb al

movzx eax,al

jmp short M00_L03

M00_L02:

xor eax,eax

M00_L03:

vzeroupper

ret

; Total bytes of code 116Even super simple operations get in on the action. Here we just have a cast from a ulong to a double:

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD", "val")]

[DisassemblyDiagnoser]

public class Tests

{

[Benchmark]

[Arguments(1234567891011121314ul)]

public double UIntToDouble(ulong val) => val;

}Thanks to dotnet/runtime#84384 from @khushal1996, the code for that shrinks from this:

; Tests.UIntToDouble(UInt64)

vzeroupper

vxorps xmm0,xmm0,xmm0

vcvtsi2sd xmm0,xmm0,rdx

test rdx,rdx

jge short M00_L00

vaddsd xmm0,xmm0,qword ptr [7FF819E776C0]

M00_L00:

ret

; Total bytes of code 26using the AVX vcvtsi2sd instruction, to this:

; Tests.UIntToDouble(UInt64)

vzeroupper

vcvtusi2sd xmm0,xmm0,rdx

ret

; Total bytes of code 10using the AVX512 vcvtusi2sd instruction.

As yet another example, with dotnet/runtime#87641 we see the JIT using AVX512 to accelerate various Math APIs:

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD", "left", "right")]

public class Tests

{

[Benchmark]

[Arguments(123456.789f, 23456.7890f)]

public float Max(float left, float right) => MathF.Max(left, right);

}| Method | Runtime | Mean | Ratio |

|---|---|---|---|

| Max | .NET 7.0 | 1.1936 ns | 1.00 |

| Max | .NET 8.0 | 0.2865 ns | 0.24 |

Branching

Branching is integral to all meaningful code; while some algorithms are written in a branch-free manner, branch-free algorithms typically are challenging to get right and complicated to read, and typically are isolated to only small regions of code. For everything else, branching is the name of the game. Loops, if/else blocks, ternaries… it’s hard to imagine any real code without them. Yet they can also represent one of the more significant costs in an application. Modern hardware gets big speed boosts from pipelining, for example from being able to start reading and decoding the next instruction while the previous ones are still processing. That, of course, relies on the hardware knowing what the next instruction is. If there’s no branching, that’s easy, it’s whatever instruction comes next in the sequence. For when there is branching, CPUs have built-in support in the form of branch predictors, used to determine what the next instruction most likely will be, and they’re often right… but when they’re wrong, the cost incurred from that incorrect branch prediction can be huge. Compilers thus strive to minimize branching.

One way the impact of branches is reduced is by removing them completely. Redundant branch optimizers look for places where the compiler can prove that all paths leading to that branch will lead to the same outcome, such that the compiler can remove the branch and everything in the path not taken. Consider the following example:

// dotnet run -c Release -f net8.0 --filter "*"

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

using System.Runtime.CompilerServices;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

[DisassemblyDiagnoser(maxDepth: 0)]

public class Tests

{

private static readonly Random s_rand = new();

private readonly string _text = "hello world!";

[Params(1.0, 0.5)]

public double Probability { get; set; }

[Benchmark]

public ReadOnlySpan<char> TrySlice() => SliceOrDefault(_text.AsSpan(), s_rand.NextDouble() < Probability ? 3 : 20);

[MethodImpl(MethodImplOptions.AggressiveInlining)]

public ReadOnlySpan<char> SliceOrDefault(ReadOnlySpan<char> span, int i)

{

if ((uint)i < (uint)span.Length)

{

return span.Slice(i);

}

return default;

}

}Running that on .NET 7, we can glimpse into the impact of failed branch prediction. When we always take the branch the same way, the throughput is 2.5x what it was when it was impossible for the branch predictor to determine where we were going next:

| Method | Probability | Mean | Code Size |

|---|---|---|---|

| TrySlice | 0.5 | 8.845 ns | 136 B |

| TrySlice | 1 | 3.436 ns | 136 B |

We can also use this example for a .NET 8 improvement. That guarded ReadOnlySpan<char>.Slice call has its own branch to ensure that i is within the bounds of the span; we can see that very clearly by looking at the disassembly generated on .NET 7:

; Tests.TrySlice()

push rdi

push rsi

push rbp

push rbx

sub rsp,28

vzeroupper

mov rdi,rcx

mov rsi,rdx

mov rcx,[rdi+8]

test rcx,rcx

je short M00_L01

lea rbx,[rcx+0C]

mov ebp,[rcx+8]

M00_L00:

mov rcx,1EBBFC01FA0

mov rcx,[rcx]

mov rcx,[rcx+8]

mov rax,[rcx]

mov rax,[rax+48]

call qword ptr [rax+20]

vmovsd xmm1,qword ptr [rdi+10]

vucomisd xmm1,xmm0

ja short M00_L02

mov eax,14

jmp short M00_L03

M00_L01:

xor ebx,ebx

xor ebp,ebp

jmp short M00_L00

M00_L02:

mov eax,3

M00_L03:

cmp eax,ebp

jae short M00_L04

cmp eax,ebp

ja short M00_L06

mov edx,eax

lea rdx,[rbx+rdx*2]

sub ebp,eax

jmp short M00_L05

M00_L04:

xor edx,edx

xor ebp,ebp

M00_L05:

mov [rsi],rdx

mov [rsi+8],ebp

mov rax,rsi

add rsp,28

pop rbx

pop rbp

pop rsi

pop rdi

ret

M00_L06:

call qword ptr [7FF999FEB498]

int 3

; Total bytes of code 136In particular, look at M00_L03:

M00_L03:

cmp eax,ebp

jae short M00_L04

cmp eax,ebp

ja short M00_L06

mov edx,eax

lea rdx,[rbx+rdx*2]At this point, either 3 or 20 (0x14) has been loaded into eax, and it’s being compared against ebp, which was loaded from the span’s Length earlier (mov ebp,[rcx+8]). There’s a very obvious redundant branch here, as the code does cmp eax,ebp, and then if it doesn’t jump as part of the jae, it does the exact same comparison again; the first is the one we wrote in TrySlice, the second is the one from Slice itself, which got inlined.

On .NET 8, thanks to dotnet/runtime#72979 and dotnet/runtime#75804, that branch (and many others of a similar ilk) is optimized away. We can run the exact same benchmark, this time on .NET 8, and if we look at the assembly at the corresponding code block (which isn’t numbered exactly the same because of other changes):

M00_L04:

cmp eax,ebp

jae short M00_L07

mov ecx,eax

lea rdx,[rdi+rcx*2]we can see that, indeed, the redundant branch has been eliminated.

Another way the overhead associated with branches (and branch misprediction) is removed is by avoiding them altogether. Sometimes simple bit manipulation tricks can be employed to avoid branches. dotnet/runtime#62689 from @pedrobsaila, for example, finds expressions like i >= 0 && j >= 0 for signed integers i and j, and rewrites them to the equivalent of (i | j) >= 0.

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD", "i", "j")]

[DisassemblyDiagnoser(maxDepth: 0)]

public class Tests

{

[Benchmark]

[Arguments(42, 84)]

public bool BothGreaterThanOrEqualZero(int i, int j) => i >= 0 && j >= 0;

}Here instead of code like we’d get on .NET 7, which involves a branch for the &&:

; Tests.BothGreaterThanOrEqualZero(Int32, Int32)

test edx,edx

jl short M00_L00

mov eax,r8d

not eax

shr eax,1F

ret

M00_L00:

xor eax,eax

ret

; Total bytes of code 16now on .NET 8, the result is branchless:

; Tests.BothGreaterThanOrEqualZero(Int32, Int32)

or edx,r8d

mov eax,edx

not eax

shr eax,1F

ret

; Total bytes of code 11Such bit tricks, however, only get you so far. To go further, both x86/64 and Arm provide conditional move instructions, like cmov on x86/64 and csel on Arm, that encapsulate the condition into the single instruction. For example, csel “conditionally selects” the value from one of two register arguments based on whether the condition is true or false and writes that value into the destination register. The instruction pipeline stays filled then because the instruction after the csel is always the next instruction; there’s no control flow that would result in a different instruction coming next.

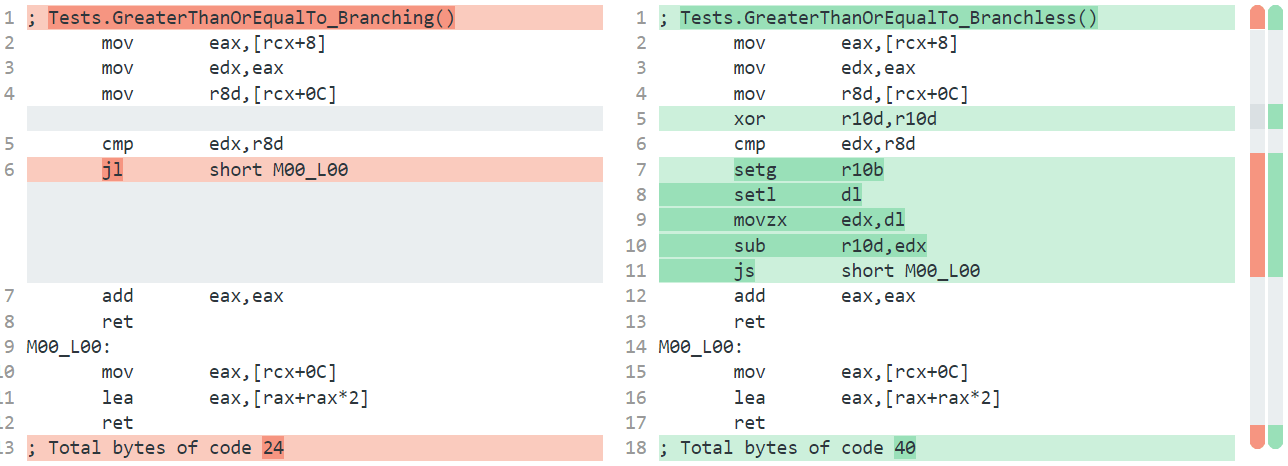

The JIT in .NET 8 is now capable of emitting conditional instructions, on both x86/64 and Arm. With PRs like dotnet/runtime#73472 from @a74nh and dotnet/runtime#77728 from @a74nh, the JIT gains an additional “if conversion” optimization phase, where various conditional patterns are recognized and morphed into conditional nodes in the JIT’s internal representation; these can then later be emitted as conditional instructions, as was done by dotnet/runtime#78879, dotnet/runtime#81267, dotnet/runtime#82235, dotnet/runtime#82766, and dotnet/runtime#83089. Other PRs, like dotnet/runtime#84926 from @SwapnilGaikwad and dotnet/runtime#82031 from @SwapnilGaikwad optimized which exact instructions would be employed, in these cases using the Arm cinv and cinc instructions.

We can see all this in a simple benchmark:

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

[DisassemblyDiagnoser(maxDepth: 0)]

public class Tests

{

private static readonly Random s_rand = new();

[Params(1.0, 0.5)]

public double Probability { get; set; }

[Benchmark]

public FileOptions GetOptions() => GetOptions(s_rand.NextDouble() < Probability);

private static FileOptions GetOptions(bool useAsync) => useAsync ? FileOptions.Asynchronous : FileOptions.None;

}| Method | Runtime | Probability | Mean | Ratio | Code Size |

|---|---|---|---|---|---|

| GetOptions | .NET 7.0 | 0.5 | 7.952 ns | 1.00 | 64 B |

| GetOptions | .NET 8.0 | 0.5 | 2.327 ns | 0.29 | 86 B |

| GetOptions | .NET 7.0 | 1 | 2.587 ns | 1.00 | 64 B |

| GetOptions | .NET 8.0 | 1 | 2.357 ns | 0.91 | 86 B |

Two things to notice:

- In .NET 7, the cost with a probability of 0.5 is 3x that of when it had a probability of 1.0, due to the branch predictor not being able to successfully predict which way the actual branch would go.

- In .NET 8, it doesn’t matter whether the probability is 0.5 or 1: the cost is the same (and cheaper than on .NET 7).

We can also look at the generated assembly to see the difference. In particular, on .NET 8, we see this for the generated assembly:

; Tests.GetOptions()

push rbx

sub rsp,20

vzeroupper

mov rbx,rcx

mov rcx,2C54EC01E40

mov rcx,[rcx]

mov rcx,[rcx+8]

mov rax,offset MT_System.Random+XoshiroImpl

cmp [rcx],rax

jne short M00_L01

call qword ptr [7FFA2D790C88]; System.Random+XoshiroImpl.NextDouble()

M00_L00:

vmovsd xmm1,qword ptr [rbx+8]

mov eax,40000000

xor ecx,ecx

vucomisd xmm1,xmm0

cmovbe eax,ecx

add rsp,20

pop rbx

ret

M00_L01:

mov rax,[rcx]

mov rax,[rax+48]

call qword ptr [rax+20]

jmp short M00_L00

; Total bytes of code 86That vucomisd; cmovbe sequence in there is the comparison between the randomly-generated floating-point value and the probability threshold followed by the conditional move (“conditionally move if below or equal”).

There are many methods that implicitly benefit from these transformations. Take even a simple method, like Math.Max, whose code I’ve copied here:

// dotnet run -c Release -f net7.0 --filter "*" --runtimes net7.0 net8.0

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

using System.Runtime.CompilerServices;

BenchmarkSwitcher.FromAssembly(typeof(Tests).Assembly).Run(args);

[HideColumns("Error", "StdDev", "Median", "RatioSD")]

[DisassemblyDiagnoser]

public class Tests

{

[Benchmark]

public int Max() => Max(1, 2);

[MethodImpl(MethodImplOptions.NoInlining)]

public static int Max(int val1, int val2)

{

return (val1 >= val2) ? val1 : val2;

}

}That pattern should look familiar. Here’s the assembly we get on .NET 7:

; Tests.Max(Int32, Int32)

cmp ecx,edx

jge short M01_L00

mov eax,edx

ret

M01_L00:

mov eax,ecx

ret

; Total bytes of code 10The two arguments come in via the ecx and edx registers. They’re compared, and if the first argument is greater than or equal to the second, it jumps down to the bottom where the first argument is moved into eax as the return value; if it wasn’t, then the second value is moved into eax. And on .NET 8:

; Tests.Max(Int32, Int32)

cmp ecx,edx

mov eax,edx

cmovge eax,ecx

ret

; Total bytes of code 8Again the two arguments come in via the ecx and edx registers, and they’re compared. The second argument is then moved into eax as the return value. If the comparison showed that the first argument was greater than the second, it’s then moved into eax (overwriting the second argument that was just moved there). Fun.

Note if you ever find yourself wanting to do a deeper-dive into this area, BenchmarkDotNet has some excellent additional tools at your disposal. On Windows, it enables you to collect hardware counters, which expose a wealth of information about how things actually executed on the hardware, whether it be number of instructions retired, cache misses, or branch mispredictions. To use it, add another package reference to your .csproj:

<PackageReference Include="BenchmarkDotNet.Diagnostics.Windows" Version="0.13.8" />and add an additional attribute to your tests class: