One of the most interesting ways to enhance your existing applications with AI is to enable more ways for your users to interact with them. Today you probably handle text input, and perhaps some touch gestures for your power users. Now it’s easier than ever to expand that to voice and vision, especially when your users’ primary input is a mobile device.

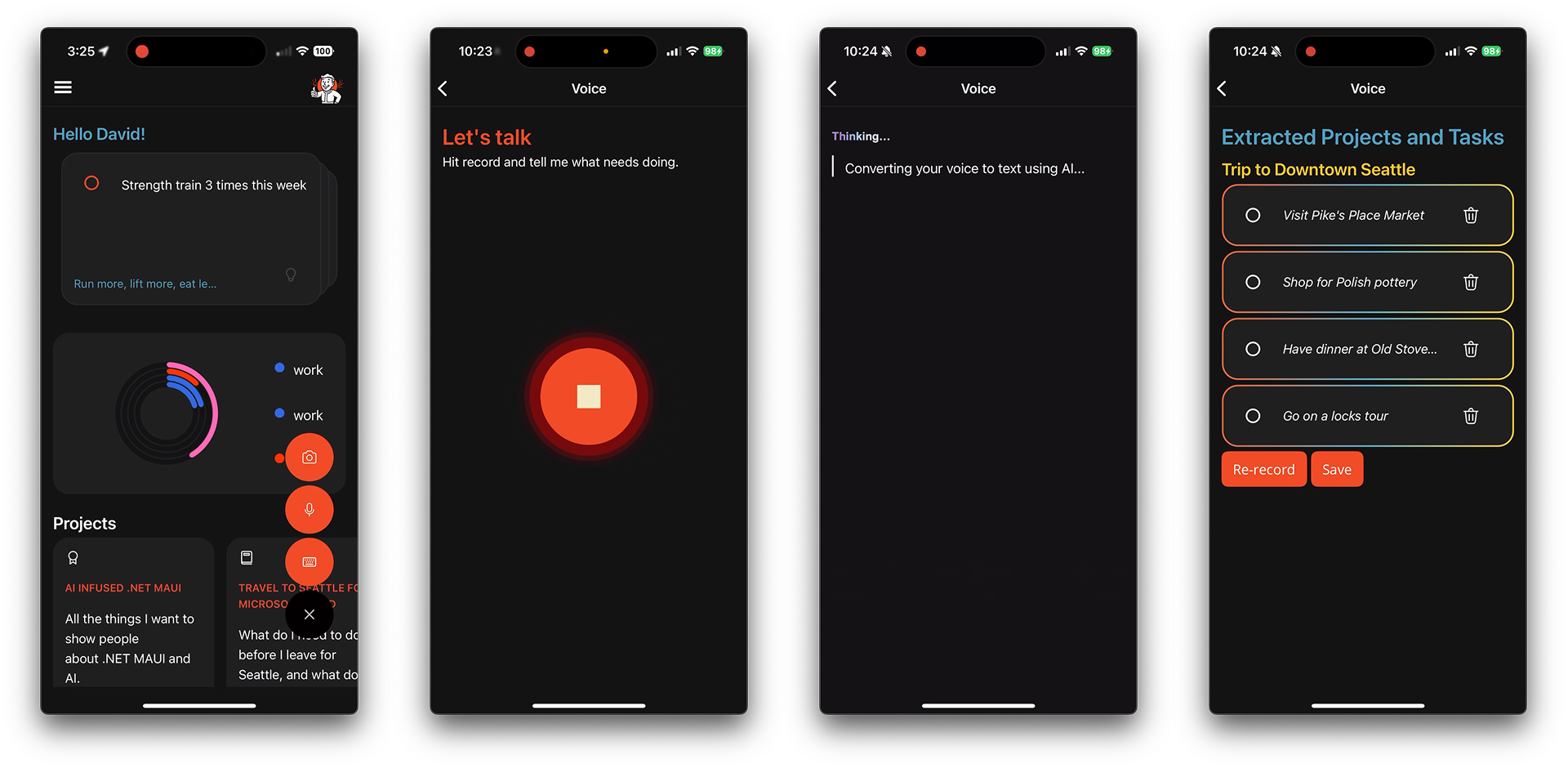

At Microsoft Build 2025 I demonstrated expanding the .NET MAUI sample “to do” app from text input to supporting voice and vision when those capabilities are detected. Let me show you how .NET MAUI and our fantastic ecosystem of plugins make this rather painless, with a single implementation that works across all platforms, starting with voice.

Talk to me

Being able to talk to an app isn’t anything revolutionary. We’ve all spoken to Siri, Alexa, and our dear Cortana a time or two, and the key is knowing the keywords and recipes they can comprehend and act on: “Start a timer”, “turn down the volume”, “tell me a joke”, and everyone’s favorite, “I wasn’t talking to you”.

The new and powerful capability large language models give us is taking our unstructured ramblings and making sense of them so they fit the structured format our apps expect and require.

Listening to audio

The first thing to do is add the Plugin.Maui.Audio NuGet package, which helps us request microphone permission and start capturing a stream. The plugin is also capable of playback.

```shell
dotnet add package Plugin.Maui.Audio --version 4.0.0
```

In MauiProgram.cs, configure the recording settings and register the IAudioService with the services container.

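```csharp
public static class MauiProgram
{
    public static MauiApp CreateMauiApp()
    {
        var builder = MauiApp.CreateBuilder();

        builder
            .UseMauiApp<App>()
            .AddAudio(
                // The first delegate configures playback and the second configures
                // recording, so pass an empty playback delegate to make sure the
                // settings below are applied to the recorder.
                playbackOptions => { },
                recordingOptions =>
                {
#if IOS || MACCATALYST
                    // Configure the AVAudioSession used while recording on Apple platforms.
                    recordingOptions.Category = AVFoundation.AVAudioSessionCategory.Record;
                    recordingOptions.Mode = AVFoundation.AVAudioSessionMode.Default;
                    recordingOptions.CategoryOptions = AVFoundation.AVAudioSessionCategoryOptions.MixWithOthers;
#endif
                });

        builder.Services.AddSingleton<IAudioService, AudioService>();

        // more code

        return builder.Build();
    }
}
```

Be sure to also review and implement any additional configuration steps in the documentation, such as declaring microphone permissions for each platform.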

Now the app is ready to capture some audio. In VoicePage the user will tap the microphone button, start speaking, and tap again to end the recording.

This is a trimmed version of the actual code for starting and stopping the recording.

```csharp
[RelayCommand]
private async Task ToggleRecordingAsync()
{
    if (!IsRecording)
    {
        // Make sure we are allowed to use the microphone before recording.
        var status = await Permissions.CheckStatusAsync<Permissions.Microphone>();
        if (status != PermissionStatus.Granted)
        {
            status = await Permissions.RequestAsync<Permissions.Microphone>();
            if (status != PermissionStatus.Granted)
            {
                // more code
                return;
            }
        }

        // Create a recorder from the audio manager and start capturing.
        _recorder = _audioManager.CreateRecorder();
        await _recorder.StartAsync();

        IsRecording = true;
        RecordButtonText = "⏹ Stop";
    }
    else
    {
        // Stopping returns the captured audio as an audio source.
        _audioSource = await _recorder.StopAsync();

        IsRecording = false;
        RecordButtonText = "🎤 Record";

        // more code

        // Await so transcription errors surface here instead of being lost.
        await TranscribeAsync();
    }
}
```

Once it has the audio stream, it can start transcribing and processing it.

```csharp
private async Task TranscribeAsync()
{
    // Save the recording as a timestamped WAV file in the app's cache directory.
    string audioFilePath = Path.Combine(FileSystem.CacheDirectory, $"recording_{DateTime.Now:yyyyMMddHHmmss}.wav");

    if (_audioSource != null)
    {
        await using (var fileStream = File.Create(audioFilePath))
        {
            var audioStream = _audioSource.GetAudioStream();
            await audioStream.CopyToAsync(fileStream);
        }

        // Send the file to the transcription service, then pull tasks out of the text.
        Transcript = await _transcriber.TranscribeAsync(audioFilePath, CancellationToken.None);
        await ExtractTasksAsync();
    }
}
```

In this sample app, I used Microsoft.Extensions.AI with OpenAI to perform the transcription with the whisper-1 model, which is trained specifically for speech-to-text. There are certainly other ways of doing this, including on-device recognition with SpeechToText in the .NET MAUI Community Toolkit.

By using Microsoft.Extensions.AI I can easily swap in another cloud-based AI service, use a local LLM with ONNX, or later choose another on-device solution.
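That flexibility depends on the page talking to an abstraction rather than a specific client. The sample's ITranscriptionService definition isn't shown here, so this sketch assumes it exposes the same TranscribeAsync(string, CancellationToken) signature as the WhisperTranscriptionService that follows. FakeTranscriptionService is a hypothetical stand-in that returns canned text, which can be handy for UI work or tests without an API key.

```csharp
using System.Threading;
using System.Threading.Tasks;

// Assumed shape of the sample's abstraction; it matches the signature of
// WhisperTranscriptionService.TranscribeAsync shown in this article.
public interface ITranscriptionService
{
    Task<string> TranscribeAsync(string path, CancellationToken ct);
}

// Hypothetical implementation that never touches the network: it ignores the
// audio file and returns a fixed transcript, so the rest of the pipeline
// (task extraction, review UI) can be exercised offline.
public sealed class FakeTranscriptionService : ITranscriptionService
{
    private readonly string _cannedTranscript;

    public FakeTranscriptionService(string cannedTranscript) =>
        _cannedTranscript = cannedTranscript;

    public Task<string> TranscribeAsync(string path, CancellationToken ct)
    {
        // Honor cancellation the same way a real service would.
        ct.ThrowIfCancellationRequested();
        return Task.FromResult(_cannedTranscript);
    }
}
```

Choosing an implementation then comes down to a single registration in MauiProgram.cs, for example builder.Services.AddSingleton<ITranscriptionService, WhisperTranscriptionService>(), assuming the page receives ITranscriptionService through constructor injection.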

```csharp
using Microsoft.Extensions.AI;
using OpenAI;

namespace Telepathic.Services;

public class WhisperTranscriptionService : ITranscriptionService
{
    public async Task<string> TranscribeAsync(string path, CancellationToken ct)
    {
        // Read the OpenAI API key saved in app preferences.
        var openAiApiKey = Preferences.Default.Get("openai_api_key", string.Empty);
        var client = new OpenAIClient(openAiApiKey);

        try
        {
            await using var stream = File.OpenRead(path);

            // whisper-1 is OpenAI's speech-to-text model; "file.wav" is the
            // file name sent along with the uploaded audio.
            var result = await client
                .GetAudioClient("whisper-1")
                .TranscribeAudioAsync(stream, "file.wav", cancellationToken: ct);

            return result.Value.Text.Trim();
        }
        catch (Exception ex)
        {
            // Will add better error handling in Phase 5
            throw new Exception($"Failed to transcribe audio: {ex.Message}", ex);
        }
    }
}
```

Making sense and structure

Once I have the transcript, I can have my AI service make sense of it and return projects and tasks using the same provider. This happens in the ExtractTasksAsync method referenced above; the key parts of it are below.

```csharp
private async Task ExtractTasksAsync()
{
    // Describe the structure we want; the transcript goes at the end.
    var prompt = $@"
Extract projects and tasks from this voice memo transcript.

Analyze the text to identify actionable tasks I need to keep track of. Use the following instructions:

1. Tasks are actionable items that can be completed, such as 'Buy groceries' or 'Call Mom'.
2. Projects are larger tasks that may contain multiple smaller tasks, such as 'Plan birthday party' or 'Organize closet'.
3. Tasks must be grouped under a project and cannot be grouped under multiple projects.
4. Any mentioned due dates use the YYYY-MM-DD format.

Here's the transcript: {Transcript}";

    var chatClient = _chatClientService.GetClient();

    // The generic overload asks the model for JSON matching ProjectsJson
    // and deserializes the reply into that type.
    var response = await chatClient.GetResponseAsync<ProjectsJson>(prompt);

    if (response?.Result != null)
    {
        Projects = response.Result.Projects;
    }
}
```

The _chatClientService is an injected service class that handles creating and retrieving the IChatClient instance provided by Microsoft.Extensions.AI. Here I call the generic GetResponseAsync<T> method with a strong type and a prompt, and the LLM (gpt-4o-mini in this case) returns a ProjectsJson response. Its Projects list is what I work with from here on.
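ProjectsJson itself isn't shown above. As a sketch, assuming each project has a name and a list of tasks, and each task has a title plus an optional due date string in the YYYY-MM-DD format the prompt asks for, the types could look like the following. Every name other than ProjectsJson and Projects is hypothetical. Because the model is only asked, not forced, to use that date format, the due date stays a string and is parsed defensively.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text.Json;

// Hypothetical shapes for the structured response. Only ProjectsJson and its
// Projects list come from the article; everything else is illustrative.
public class ProjectsJson
{
    public List<ProjectJson> Projects { get; set; } = new();
}

public class ProjectJson
{
    public string Name { get; set; } = string.Empty;
    public List<TaskJson> Tasks { get; set; } = new();
}

public class TaskJson
{
    public string Title { get; set; } = string.Empty;

    // Kept as a string because the model may not always follow YYYY-MM-DD.
    public string? DueDate { get; set; }

    // Returns true only when DueDate is exactly in yyyy-MM-dd form.
    public bool TryGetDueDate(out DateOnly dueDate) =>
        DateOnly.TryParseExact(DueDate, "yyyy-MM-dd", CultureInfo.InvariantCulture,
            DateTimeStyles.None, out dueDate);
}

public static class ProjectsJsonParser
{
    private static readonly JsonSerializerOptions Options = new()
    {
        // Accept "projects" as well as "Projects" in hand-written or logged JSON.
        PropertyNameCaseInsensitive = true
    };

    // Handy for unit tests or for replaying a logged model response.
    public static ProjectsJson Parse(string json) =>
        JsonSerializer.Deserialize<ProjectsJson>(json, Options) ?? new ProjectsJson();
}
```

Keeping these as plain serializable classes is what lets the generic GetResponseAsync<T> call work with a strong type, and the parser makes it easy to test the review UI against saved responses without calling the model.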

Co-creation

Now I’ve gone from an app that only took data entry input via a form to an app that can also take unstructured voice input and produce structured data. While I was tempted to just insert the results into the database and claim success, there was more to do to make this a truly satisfying experience.

There’s a reasonable chance that a project name needs to be adjusted for clarity, or that a task was misheard or, worse yet, omitted. To address this, I added an approval step where the user sees the projects and tasks as recommendations and can accept them as-is or with changes. This is not much different from the experience we have now in Copilot, where changes are made but we have the option to iterate further, keep, or discard them.
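One way to model that approval step, sketched here with hypothetical types (SuggestedTask, TaskReview) rather than the sample's actual view models, is to keep each recommendation editable and flagged for inclusion, and only hand the accepted items to the database once the user confirms.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical view-model item: one AI-suggested task the user can edit or drop.
public class SuggestedTask
{
    public SuggestedTask(string project, string title)
    {
        Project = project;
        Title = title;
    }

    public string Project { get; set; }
    public string Title { get; set; }

    // Suggestions start accepted; unchecking one discards it.
    public bool IsAccepted { get; set; } = true;
}

// Hypothetical review session: nothing is persisted until Commit is called.
public class TaskReview
{
    private readonly List<SuggestedTask> _suggestions;

    public TaskReview(IEnumerable<SuggestedTask> suggestions) =>
        _suggestions = suggestions.ToList();

    public IReadOnlyList<SuggestedTask> Suggestions => _suggestions;

    // Returns the accepted items (with any edits the user made) that still
    // have a non-blank title, ready to be saved; rejected items are left out.
    public IReadOnlyList<SuggestedTask> Commit() =>
        _suggestions
            .Where(s => s.IsAccepted && !string.IsNullOrWhiteSpace(s.Title))
            .ToList();

    // Drops every suggestion, mirroring a "discard" choice.
    public void DiscardAll() => _suggestions.Clear();
}
```

Keeping an accepted flag on each item maps directly onto the keep, edit, or discard choices, and it means a misheard task can be corrected in place instead of re-recorded.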

For more guidance like this for designing great AI experiences in your apps, consider checking out the HAX Toolkit and Microsoft AI Principles.

Resources

Here are key resources mentioned in this article to help you implement multimodal AI capabilities in your .NET MAUI apps:

- AI infused mobile & desktop app development with .NET MAUI

- Plugin.Maui.Audio – NuGet package for handling audio recording and playback in .NET MAUI apps

- Microsoft.Extensions.AI – Framework for integrating AI capabilities into .NET applications

- Whisper Model – OpenAI’s speech-to-text model used for audio transcription

- SpeechToText in .NET MAUI Community Toolkit – On-device alternative for speech recognition

- ONNX Runtime – For running local LLMs on mobile devices

- HAX Toolkit – Design guidance for AI experiences in applications

- Microsoft AI Principles – Guidelines for responsible AI implementation

- Telepathy Sample App Source Code – Complete implementation example referenced in this article

Summary

In this article, we explored how to enhance .NET MAUI applications with multimodal AI capabilities, focusing on voice interaction. We covered how to implement audio recording using Plugin.Maui.Audio, transcribe speech using Microsoft.Extensions.AI with OpenAI’s Whisper model, and extract structured data from unstructured voice input.

By combining these technologies, you can transform a traditional form-based app into one that accepts voice commands and intelligently processes them into actionable data. The implementation works across all platforms with a single codebase, making it accessible for any .NET MAUI developer.

With these techniques, you can significantly enhance user experience by supporting multiple interaction modes, making your applications more accessible and intuitive, especially on mobile devices where voice input can be much more convenient than typing.
