Expanding the many ways in which users can interact with our apps is one of the most exciting parts of working with modern AI models and device capabilities. With .NET MAUI, it’s easy to enhance your app from a text-based experience to one that supports voice, vision, and more.

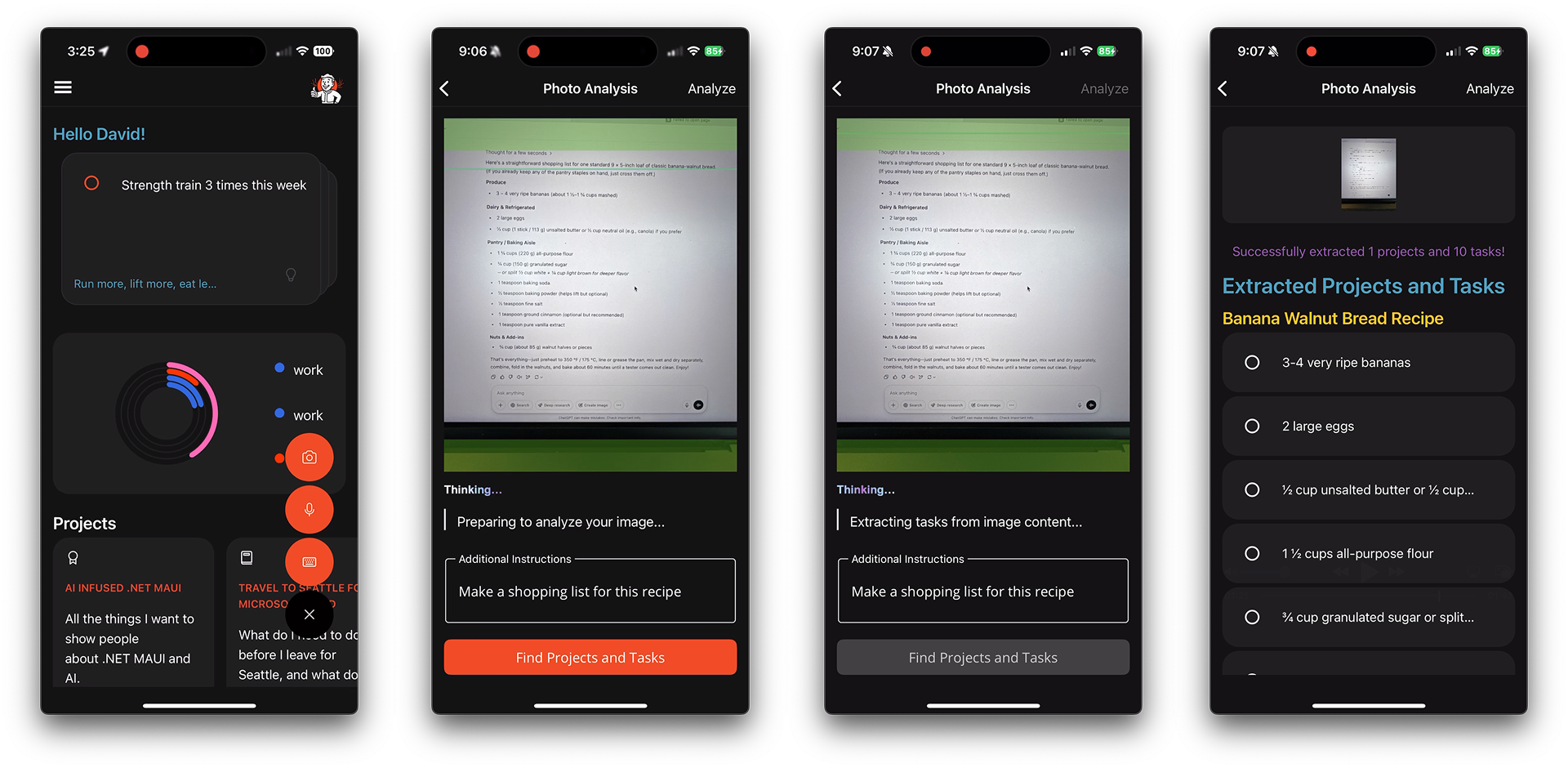

Previously I covered adding voice support to the “to do” app from our Microsoft Build 2025 session. Now I’ll review the vision side of multimodal intelligence. I want to let users capture or select an image and have AI extract actionable information from it to create a project and tasks in the Telepathic sample app. This goes well beyond OCR scanning: rather than only transcribing text, an AI agent uses context and prompting to turn what it sees into meaningful input.

See what I see

From the floating action button menu on MainPage, the user selects the camera button and is immediately taken to the PhotoPage, where MediaPicker takes over. MediaPicker provides a single cross-platform API for picking media from the photo gallery and for taking photos. It was recently modernized in .NET 10 Preview 4.

The PhotoPageModel handles both photo capture and file picking, starting from the PageAppearing lifecycle event that I’ve easily tapped into using the EventToCommandBehavior from the Community Toolkit for .NET MAUI.

<ContentPage.Behaviors>
    <toolkit:EventToCommandBehavior
        EventName="Appearing"
        Command="{Binding PageAppearingCommand}"/>
</ContentPage.Behaviors>

The PageAppearing method is decorated with [RelayCommand] which generates a command thanks to the Community Toolkit for MVVM (yes, toolkits are a recurring theme of adoration that you’ll hear from me). I then check for the type of device being used and choose to pick or take a photo. .NET MAUI’s cross-platform APIs for DeviceInfo and MediaPicker save me a ton of time navigating through platform-specific idiosyncrasies.

if (DeviceInfo.Idiom == DeviceIdiom.Desktop)
{
    result = await MediaPicker.PickPhotoAsync(new MediaPickerOptions
    {
        Title = "Select a photo"
    });
}
else
{
    if (!MediaPicker.IsCaptureSupported)
    {
        return;
    }

    result = await MediaPicker.CapturePhotoAsync(new MediaPickerOptions
    {
        Title = "Take a photo"
    });
}

Another advantage of using the built-in MediaPicker is giving users the native experience for photo input they are already accustomed to. When you’re implementing this, be sure to perform the necessary platform-specific setup as documented.
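The capture code hands back a result, while the processing step later reads from ImagePath and passes a media type to DataContent. How the sample app bridges the two is not shown here, so the following is only a minimal sketch under assumptions: PhotoStaging, SaveAsync, and GuessImageMediaType are hypothetical names, and the helper is written against plain streams so it can be tried outside a MAUI project. In an app, the stream would typically come from the picked file's OpenReadAsync method and the target folder from FileSystem.CacheDirectory.

```csharp
#nullable enable
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical helper (not from the sample app): stages a picked or captured
// photo so later steps can work with a plain file path and a media type.
public static class PhotoStaging
{
    // Copies the photo stream into targetDirectory and returns the new path.
    // Assumed usage in a .NET MAUI app: pass `await result.OpenReadAsync()`
    // as the stream and `FileSystem.CacheDirectory` as the directory.
    public static async Task<string> SaveAsync(
        Stream source, string fileName, string targetDirectory)
    {
        Directory.CreateDirectory(targetDirectory);

        // Path.GetFileName strips any directory parts from the supplied name.
        string path = Path.Combine(targetDirectory, Path.GetFileName(fileName));

        // File.Create truncates an existing file, so stale bytes never linger.
        await using FileStream target = File.Create(path);
        await source.CopyToAsync(target);
        return path;
    }

    // Maps a file extension to an image media type. Returns null when the
    // extension is not recognized so the caller can decide how to proceed.
    public static string? GuessImageMediaType(string path) =>
        Path.GetExtension(path).ToLowerInvariant() switch
        {
            ".png" => "image/png",
            ".jpg" or ".jpeg" => "image/jpeg",
            ".gif" => "image/gif",
            ".webp" => "image/webp",
            _ => null
        };
}
```

Deriving the media type from the file is worth considering because camera captures are often JPEG rather than PNG, and a mismatched media type may confuse some model endpoints.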

Processing the image

Once an image is received, it’s displayed on screen along with an optional Editor field to capture any additional context and instructions the user might want to provide. I build the prompt with StringBuilder (in other apps I like to use Scriban templates), grab an instance of IChatClient from Microsoft.Extensions.AI via a service, get the image bytes, and supply everything to the chat client using a ChatMessage that packs TextContent and DataContent.

private async Task ExtractTasksFromImageAsync()
{
    // more code

    var prompt = new System.Text.StringBuilder();
    prompt.AppendLine("# Image Analysis Task");
    prompt.AppendLine("Analyze the image for task lists, to-do items, notes, or any content that could be organized into projects and tasks.");
    prompt.AppendLine();
    prompt.AppendLine("## Instructions:");
    prompt.AppendLine("1. Identify any projects and tasks (to-do items) visible in the image");
    prompt.AppendLine("2. Format handwritten text, screenshots, or photos of physical notes into structured data");
    prompt.AppendLine("3. Group related tasks into projects when appropriate");

    if (!string.IsNullOrEmpty(AnalysisInstructions))
    {
        prompt.AppendLine($"4. {AnalysisInstructions}");
    }

    prompt.AppendLine();
    prompt.AppendLine("If no projects/tasks are found, return an empty projects array.");

    var client = _chatClientService.GetClient();
    byte[] imageBytes = File.ReadAllBytes(ImagePath);

    var msg = new Microsoft.Extensions.AI.ChatMessage(ChatRole.User,
    [
        new TextContent(prompt.ToString()),
        new DataContent(imageBytes, mediaType: "image/png")
    ]);

    var apiResponse = await client.GetResponseAsync<ProjectsJson>(msg);

    if (apiResponse?.Result?.Projects != null)
    {
        Projects = apiResponse.Result.Projects.ToList();
    }

    // more code
}

Human-AI Collaboration

Just like with the voice experience, the photo flow doesn’t blindly assume the agent got everything right. After processing, the user is shown a proposed set of projects and tasks for review and confirmation.

This ensures users remain in control while benefiting from AI-augmented assistance. You can learn more about designing these kinds of flows using best practices in the HAX Toolkit.
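To make the review step concrete, here is a minimal sketch of how confirmed items might be separated from rejected ones before anything is saved. The sample app's actual models and review UI are not shown in this post, so ProposedProject, ProposedTask, IsAccepted, and ReviewFilter are all hypothetical names chosen for illustration.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical review models; the sample app's real types are not shown here.
public sealed class ProposedTask
{
    public string Title { get; set; } = "";

    // Assumed to be bound to a checkbox in the review UI; accepted by default.
    public bool IsAccepted { get; set; } = true;
}

public sealed class ProposedProject
{
    public string Name { get; set; } = "";
    public List<ProposedTask> Tasks { get; set; } = new();
}

public static class ReviewFilter
{
    // Returns only what the user kept: accepted tasks, and projects that
    // still contain at least one accepted task. The input is not modified.
    public static List<ProposedProject> KeepAccepted(
        IEnumerable<ProposedProject> proposals) =>
        proposals
            .Select(p => new ProposedProject
            {
                Name = p.Name,
                Tasks = p.Tasks.Where(t => t.IsAccepted).ToList()
            })
            .Where(p => p.Tasks.Count > 0)
            .ToList();
}
```

Nothing the model proposed is persisted until the user has had a chance to untick it, which keeps the AI output as a suggestion rather than a decision.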

Resources

- Telepathic App Source Code

- Microsoft.Extensions.AI

- MediaPicker Documentation

- HAX Toolkit

- Microsoft AI Principles

- AI for .NET Developers

Summary

We’ve now extended our .NET MAUI app to see as well as hear. With just a few lines of code and a clear UX pattern, the app can take in images, analyze them using vision-capable AI models, and return structured, actionable data like tasks and projects.

Multimodal experiences are more accessible and powerful than ever. With cross-platform support from .NET MAUI and the modularity of Microsoft.Extensions.AI, you can rapidly evolve your apps to meet your users where they are, whether that’s typing, speaking, or snapping a photo.
