Azure Active Directory’s gateway service is a reverse proxy that fronts hundreds of services that make up Azure Active Directory (Azure AD). If you’ve used services such as office.com, outlook.com, azure.com or xbox.live.com, then you’ve used Azure AD’s gateway. The gateway provides features such as TLS termination, automatic failovers/retries, geo-proximity routing, throttling, and tarpitting to services in Azure AD. The gateway is present in more than 53 Azure datacenters worldwide and serves ~115 billion requests each day. Until recently, Azure AD’s gateway was running on .NET Framework 4.6.2. As of September 2020, it runs on .NET Core 3.1.

Motivation for porting to .NET Core

The gateway’s scale of execution results in significant consumption of compute resources, which in turn costs money. Finding ways to reduce the cost of executing the service has been a key goal for the team behind it. The buzz around .NET Core’s focus on performance caught our attention, especially since TechEmpower listed ASP.NET Core as one of the fastest web frameworks on the planet. We ran our own benchmarks on gateway prototypes on .NET Core, and the results made the decision very easy: we had to port our service to .NET Core.

Does .NET Core performance translate to real-life cost savings?

It absolutely does. In Azure AD gateway’s case, we were able to cut our CPU costs by 50%.

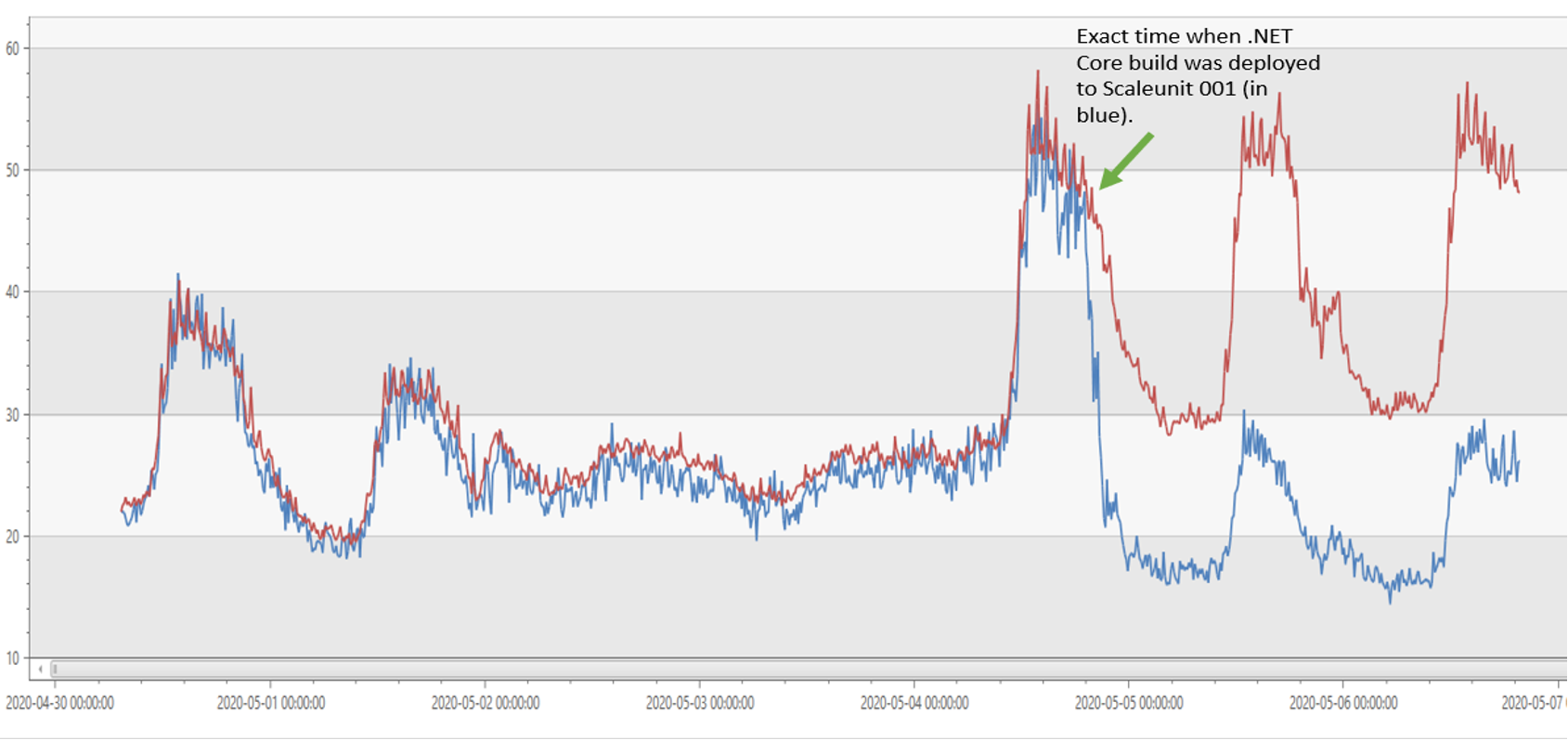

The gateway used to run on IIS with .NET Framework 4.6.2. Today, it runs on IIS with .NET Core 3.1. The image above shows that our CPU usage was reduced by half on .NET Core 3.1 compared to .NET Framework 4.6.2 (effectively doubling our throughput).

As a result of the gains in throughput, we were able to reduce our fleet size from ~40k cores to ~20k cores (50% reduction).
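For a rough sense of scale, the two figures quoted in this post (~115 billion requests per day and ~20k cores after the port) can be turned into a whole-day average rate per core. This is a back-of-the-envelope sketch using only those rounded numbers. A daily average is never higher than the rate during the busiest hour, so it understates per-core load at peak.

```csharp
using System;

// Rounded figures quoted in this post.
const double RequestsPerDay = 115e9; // ~115 billion requests per day
const double Cores = 20_000;         // ~20k cores after the port
const double SecondsPerDay = 24 * 60 * 60;

// Whole-day averages: fleet-wide first, then spread evenly across cores.
double fleetRequestsPerSecond = RequestsPerDay / SecondsPerDay;
double requestsPerSecondPerCore = fleetRequestsPerSecond / Cores;

Console.WriteLine($"Fleet-wide average: {fleetRequestsPerSecond:F0} requests/s");
Console.WriteLine($"Per-core average:   {requestsPerSecondPerCore:F2} requests/s");
```

The result is roughly 1.33 million requests per second fleet-wide, or about 66.5 requests per second per core, averaged over the day.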

How was the port to .NET Core achieved?

The porting was done in 3 phases.

Phase 1: Choosing an edge webserver.

When we started the porting effort, the first question we had to ask ourselves was which of the three web servers available in ASP.NET Core we should pick.

We ran our production scenarios on all three web servers and realized it all came down to TLS support. Given that the gateway is a reverse proxy, support for a wide range of TLS scenarios is critical.

Kestrel:

- When we started our migration (November 2019), Kestrel did not support client certificate negotiation or revocation checking on a per-hostname basis. Support for these features was added in .NET 5.0.

- As of .NET 5.0, Kestrel (via its reliance on SslStream) does not support CTL (certificate trust list) stores on a per-hostname basis. Support is expected in .NET 6.0.
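To make "per-hostname" concrete: the TLS settings a server uses can depend on the SNI hostname the client sends. The sketch below assumes .NET 5.0 and uses `SslServerAuthenticationOptions` from System.Net.Security. The hostnames and the policy (request a client certificate and check its revocation online only on one hostname) are hypothetical. It does not cover the CTL-store case, which the post expects in .NET 6.0.

```csharp
using System;
using System.Net.Security;
using System.Security.Cryptography.X509Certificates;

// Hypothetical per-hostname TLS policy: only certauth.example.com asks the client
// for a certificate and checks it for revocation online. Hostnames are made up.
static SslServerAuthenticationOptions OptionsForHost(string hostName)
{
    bool wantsClientCert = string.Equals(
        hostName, "certauth.example.com", StringComparison.OrdinalIgnoreCase);

    return new SslServerAuthenticationOptions
    {
        // A real server would also set ServerCertificate for this hostname.
        ClientCertificateRequired = wantsClientCert,
        CertificateRevocationCheckMode = wantsClientCert
            ? X509RevocationMode.Online
            : X509RevocationMode.NoCheck,
    };
}

foreach (var host in new[] { "certauth.example.com", "www.example.com" })
{
    var options = OptionsForHost(host);
    Console.WriteLine($"{host}: client cert = {options.ClientCertificateRequired}, " +
                      $"revocation = {options.CertificateRevocationCheckMode}");
}
```

In .NET 5.0, a function like this can sit behind a `ServerOptionsSelectionCallback`, which receives the SNI name as `SslClientHelloInfo.ServerName` and can be passed to `SslStream.AuthenticateAsServerAsync`; Kestrel accepts the same kind of callback through a `ListenOptions.UseHttps` overload.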

HTTP.sys:

- The HTTP.sys server had a disconnect between the TLS configuration at the HTTP.sys layer and the .NET implementation: even when a binding is configured not to negotiate client certificates, accessing the `ClientCertificate` property in .NET Core triggers an unwanted TLS renegotiation.

For example, performing a simple null check in C# renegotiates the TLS handshake:

```csharp
// On HTTP.sys (ASP.NET Core 2.2), even this null check triggers TLS renegotiation:
if (HttpContext.Connection.ClientCertificate != null)
```

This has been addressed in ASP.NET Core 3.1 (https://github.com/dotnet/aspnetcore/issues/14806). When we started the port in November 2019, we were on ASP.NET Core 2.2 and therefore did not pick this server.

IIS:

- IIS met all our requirements for TLS, so that’s the webserver we chose.

Phase 2: Migrating the application and dependencies.

As with many large services and applications, Azure AD’s gateway has many dependencies. Some were written specifically for the service, and some written by others inside and outside of Microsoft. In certain cases, those libraries were already targeting .NET Standard 2.0. For others, we updated them to support .NET Standard 2.0 or found alternative implementations, e.g. removing our legacy Dependency Injection library and instead using .NET Core’s built-in support for dependency injection. The .NET Portability Analyzer was of great help in this step.

For the application itself:

- Azure AD’s gateway used to have a dependency on `IHttpModule` and `IHttpHandler` from classic ASP.NET, which don’t exist in ASP.NET Core. So, we rewrote the application using the middleware constructs in ASP.NET Core.

- One of the things that really helped throughout the migration is Azure Profiler (a service that collects performance traces at runtime on Azure VMs). We deployed our nightly builds to test beds, used wrk2 as a load agent to test the scenarios under stress, and collected Azure Profiler traces. These traces would then inform us of the next tweak necessary to extract peak performance from our application.
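Classic ASP.NET modules hook request events such as `BeginRequest` and `EndRequest`; ASP.NET Core middleware instead wraps the rest of the pipeline in a single delegate, so "before" logic goes ahead of the call to the next component and "after" logic follows it. The sketch below is a framework-free model of that composition, not the gateway's code: the `Handler` alias stands in for `RequestDelegate`, a list of strings stands in for `HttpContext`, and the `Build` helper and the `logging`, `throttling`, and `proxy` components are hypothetical. In real ASP.NET Core code the same shape is usually written as `app.Use(async (context, next) => { ...; await next(); ... })`.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Handler = System.Func<System.Collections.Generic.List<string>, System.Threading.Tasks.Task>;

// Composes middleware so that the first component in the list runs outermost,
// the same ordering that app.Use(...) registrations follow in ASP.NET Core.
static Handler Build(IReadOnlyList<Func<Handler, Handler>> middleware, Handler terminal)
{
    Handler app = terminal;
    for (int i = middleware.Count - 1; i >= 0; i--)
        app = middleware[i](app);
    return app;
}

// Code before next() takes the place of a BeginRequest handler in an IHttpModule;
// code after next() takes the place of an EndRequest handler.
Func<Handler, Handler> logging = next => async log =>
{
    log.Add("logging: begin");
    await next(log);
    log.Add("logging: end");
};

Func<Handler, Handler> throttling = next => async log =>
{
    log.Add("throttling: check");
    await next(log);
};

// The terminal delegate plays the role an IHttpHandler used to play.
Handler proxy = log => { log.Add("proxy: forward"); return Task.CompletedTask; };

var trace = new List<string>();
await Build(new[] { logging, throttling }, proxy)(trace);
Console.WriteLine(string.Join(" -> ", trace));
// logging: begin -> throttling: check -> proxy: forward -> logging: end
```

The printed order shows the nesting: the outer component's "after" step runs only once everything inside it has finished, which is what a module's `BeginRequest`/`EndRequest` pair used to provide.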

Phase 3: Rolling out gradually.

The philosophy we adopted during rollout was to discover as many issues as possible with little or no production impact.

- We deployed our initial builds to test, integration and DogFood environments. This led to early discovery of bugs and helped in fixing them before hitting production.

- After code complete, we deployed the .NET Core build to a single production machine in a scale unit. A scale unit is a load balanced pool of machines.

- The scale unit had ~100 machines, where 99 machines were still running our existing .NET Framework build and only 1 machine was running the new .NET Core build.

- All ~100 machines in this scale unit receive the same type and amount of traffic. We then compared status codes, error rates, functional scenarios and performance of the single machine to the remaining 99 machines to detect anomalies.

- We wanted this single machine to behave functionally the same as the remaining 99 machines, but have much better performance/throughput and that’s what we observed.

- We also “forked” traffic from live production scale units (running .NET Framework build) to .NET Core scale units to compare and contrast as indicated above.

- Once we reached functional equivalence, we started expanding the number of scale units running .NET Core and gradually expanded to an entire datacenter.

- Once an entire datacenter was migrated, the last step was to gradually expand worldwide to all the Azure datacenters where Azure AD’s gateway service has a presence. Migration done!
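For a single metric, the one-machine comparison described above can be reduced to a check like the following. This is a hypothetical sketch, not the team's tooling: it compares only the canary machine's error rate with the pooled error rate of the other machines, and the absolute tolerance of 0.001 (0.1 percentage points) is an assumed value. According to the post, the real comparison also covered status codes, functional scenarios, and performance.

```csharp
using System;
using System.Linq;

// Error rate = failed requests / total requests.
static double ErrorRate(long errors, long requests) =>
    requests == 0 ? 0.0 : (double)errors / requests;

// Flags the canary if its error rate exceeds the pooled baseline rate by more
// than an absolute tolerance. The default tolerance is a hypothetical choice.
static bool IsAnomalous((long Requests, long Errors) canary,
                        (long Requests, long Errors)[] baseline,
                        double tolerance = 0.001)
{
    long baseRequests = baseline.Sum(m => m.Requests);
    long baseErrors = baseline.Sum(m => m.Errors);
    return ErrorRate(canary.Errors, canary.Requests)
         > ErrorRate(baseErrors, baseRequests) + tolerance;
}

// 99 baseline machines with identical (made-up) traffic and error counts.
var pool = Enumerable.Repeat((Requests: 1_000_000L, Errors: 200L), 99).ToArray();

Console.WriteLine(IsAnomalous((1_000_000, 250), pool));   // False: 0.025% vs 0.02%
Console.WriteLine(IsAnomalous((1_000_000, 5_000), pool)); // True: 0.5% vs 0.02%
```

Pooling the baseline (summing requests and errors across machines) weights each machine by its traffic, which matters less here because the scale unit spreads traffic evenly, but avoids skew if it did not.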

Learnings

- ASP.NET Core is strict about RFCs. This is a very good thing, as it drives good practices across the board. However, classic ASP.NET and .NET Framework were considerably more lenient, which caused some backward-compatibility issues:

- By default, the web server allows only ASCII values in HTTP headers. At our request, Latin1 support was added to IISHttpServer: https://github.com/dotnet/aspnetcore/pull/22798

- `HttpClient` on .NET Core used to support only ASCII values in HTTP headers.

- The .NET Core team added Latin1 support in .NET Core 3.1: https://github.com/dotnet/corefx/pull/42978

- The ability to select the header encoding scheme was added in .NET 5.0: https://github.com/dotnet/runtime/issues/38711

- Forms and cookies that are not RFC compliant result in validation exceptions. So, we built “fallback” parsers using classic ASP.NET source code to maintain backward compatibility for customers.

- There was a performance bottleneck in `FileBufferingReadStream`’s `CopyToAsync()` method due to multiple 1-byte copies of an n-byte stream. This has been addressed in .NET 5.0 by picking a default buffer size of 4K: https://github.com/dotnet/aspnetcore/issues/24032

- Be aware of classic ASP.NET quirks:

- Trailing whitespace is auto-trimmed in the path:

- foo.com/oauth ?client=abc is trimmed to foo.com/oauth?client=abc on classic ASP.NET.

- Over the years, customers and downstream services have come to depend on this trimming, and ASP.NET Core does not trim the path automatically. So, we had to trim trailing whitespace to mimic classic ASP.NET behavior.

- The `Content-Type` header is auto-generated if missing:

- When the response is larger than zero bytes but the `Content-Type` header is missing, classic ASP.NET generates a default `Content-Type: text/html` header. ASP.NET Core does not generate a default `Content-Type` header, so clients that assume the header is always sent in the response start having issues. We mimicked the classic ASP.NET behavior by adding a default `Content-Type` when it is missing from downstream services’ responses.

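On the `HttpClient` side of the header-encoding change, the encoding-selection feature added in .NET 5.0 is exposed on `SocketsHttpHandler` as `RequestHeaderEncodingSelector` (with a matching `ResponseHeaderEncodingSelector`). A minimal sketch, assuming .NET 5.0 or later: the header name `X-Display-Name` is hypothetical, and returning `null` for every other header is meant to leave those headers on the handler's default encoding. The last lines show why Latin1 matters: U+00E9 (é) is a single byte in Latin1 but has no ASCII encoding.

```csharp
using System;
using System.Net.Http;
using System.Text;

// .NET 5.0+: choose the encoding used for outgoing request header values.
// Latin1 for one hypothetical header; null keeps the default for the rest.
var handler = new SocketsHttpHandler
{
    RequestHeaderEncodingSelector = (headerName, request) =>
        string.Equals(headerName, "X-Display-Name", StringComparison.OrdinalIgnoreCase)
            ? Encoding.Latin1
            : null,
};
using var client = new HttpClient(handler);

// In Latin1, U+00E9 ("é") is the single byte 0xE9; ASCII cannot represent it.
byte[] bytes = Encoding.Latin1.GetBytes("\u00E9");
Console.WriteLine(BitConverter.ToString(bytes)); // E9
```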
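The `FileBufferingReadStream` bottleneck comes down to how many copy operations a stream copy performs for a given buffer size. The sketch below does not reproduce the ASP.NET Core code; it is a plain copy loop (the helper name `CopyCountingWrites` is hypothetical) that counts write calls when a 10,000-byte stream is copied with a 1-byte buffer versus a 4K buffer.

```csharp
using System;
using System.IO;

// Copies src to dst through a fixed-size buffer and returns the number of
// write calls. Illustrates the cost model only; not FileBufferingReadStream.
static int CopyCountingWrites(Stream src, Stream dst, int bufferSize)
{
    var buffer = new byte[bufferSize];
    int writes = 0, read;
    while ((read = src.Read(buffer, 0, buffer.Length)) > 0)
    {
        dst.Write(buffer, 0, read);
        writes++;
    }
    return writes;
}

var payload = new byte[10_000];
int oneByte = CopyCountingWrites(new MemoryStream(payload), Stream.Null, 1);
int fourK = CopyCountingWrites(new MemoryStream(payload), Stream.Null, 4096);
Console.WriteLine($"1-byte buffer: {oneByte} writes; 4K buffer: {fourK} writes");
// 1-byte buffer: 10000 writes; 4K buffer: 3 writes
```

With a 1-byte buffer the number of operations grows with the size of the body, while a 4K buffer needs one operation per 4,096 bytes; per-call overhead is what makes the former expensive for large bodies.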
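The two classic ASP.NET quirks above (path trimming and the default `Content-Type`) can be mimicked with small compatibility shims. A minimal sketch with hypothetical helper names, written as plain functions so the logic is easy to test; in ASP.NET Core they would run in middleware, for example by reassigning `context.Request.Path` and by setting `context.Response.ContentType` before the response starts. The path helper assumes the path arrives already separated from the query string and reproduces only the trailing-whitespace trimming described above. The `Content-Type` helper assumes the body length is known, matching the "larger than zero bytes" condition.

```csharp
using System;

// Hypothetical shim: classic ASP.NET trimmed trailing whitespace from the path,
// e.g. "/oauth " became "/oauth". Whitespace inside the path is left alone.
static string TrimTrailingPathWhitespace(string path) => path.TrimEnd();

// Hypothetical shim: classic ASP.NET sent "Content-Type: text/html" when a
// non-empty response had no Content-Type. Returns the value to send, or null
// when no header should be added.
static string? EffectiveContentType(long contentLength, string? contentType) =>
    string.IsNullOrEmpty(contentType) && contentLength > 0 ? "text/html" : contentType;

Console.WriteLine(TrimTrailingPathWhitespace("/oauth "));         // /oauth
Console.WriteLine(EffectiveContentType(512, null));               // text/html
Console.WriteLine(EffectiveContentType(512, "application/json")); // application/json
```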

Future

Porting to .NET Core doubled the throughput of our service, and moving was a great decision. Our .NET Core journey will not stop after porting. For the future, we are looking at:

- Upgrading to .NET 5.0 for better performance.

- Porting to Kestrel so that we can intercept connections at the TLS layer for better resiliency.

- Leveraging components and best practices from YARP (https://microsoft.github.io/reverse-proxy/) in our own reverse proxy, and contributing back.

Nice case!

But if I understand correctly, that is only 66.5 tps per core? Can you explain more about it?

(115,000,000,000 requests / 20,000 cores / 3,600 s / 24 h ≈ 66.5)

Thank you Avanindra

Is this “Azure Active Directory’s gateway” something I can setup/use in Azure? I mean, is it available as a resource that I can create in the Azure portal. Or does it exist as a product I can install on premise? Is the source on GitHub?

Hi Hans,

You cannot create Azure AD’s gateway as a resource in Azure Portal. It is a reverse proxy that is used by other public services that are part of Azure AD. When you use those services (Azure AD B2C, for example), the requests are automatically routed to the gateway.

Hi Avanindra

I understand. Thank you for your answer.

A very good read and a proper encouragement to port the applications under 2.1 straight to 5.0 because of the issues highlighted. Thank you for the insights

Great insights. But regarding the future, why do you consider .NET 5.0 when it is not an LTS version? Shouldn’t it be .NET 6?

Hi Amit, .NET 6.0 is 10 months out (expected release date: November 2021). While .NET 5.0 is not LTS, I expect .NET 5.1 to be LTS (assuming the .NET release convention is followed, where .NET Core 3.1 and .NET Core 2.1 were LTS releases, whereas .NET Core 3.0 and .NET Core 2.0 were not).

There is no 5.1 release planned. The progression will be 5.0 and then 6.0.

Got it. Will there be an LTS version of .NET 5, then? Also, will .NET 6.0 be LTS?

.NET 6 will be LTS. Every even-numbered version will be LTS going forward (at least according to this: https://devblogs.microsoft.com/dotnet/net-core-releases-and-support/)

I would guess that the hardest part was migrating from .NET Framework to .NET Core and upgrading to a newer version now is a far less risky and complicated endeavor. And you then get performance improvements yearly instead of only once every two years.

Avanindra, I really enjoyed this read. I'd like to ask how many break-fix cycles it took to get it right. How many times did you end up sending out one server in the scale set, and what tooling did you use to make that easier (K8s? Azure Pipelines?)

I ask because you note:

Once we reached functional equivalence, we started expanding the number of Scale units running .NET Core and gradually expanded to an entire datacenter.

Which implied to me that you had a fair number of releases before you felt confident you'd go to 100%

Also, enjoyed the acknowledgement that some assumed...

Hi Doug, we use a pipeline many teams within Microsoft use called Elastic Autopilot. This infrastructure makes it feasible to roll forward/backward with ease. Regarding K8s, we have plans on migrating there after we migrate to Kestrel.