Updated (2017): See .NET Framework Releases to learn about newer releases. This release is unsupported.

Updated (July 2015): See Announcing .NET Framework 4.6 to read about the latest version of the .NET Framework.

Update: The .NET Framework 4.5.1 RTM has been released and is available for download. The content below is still an accurate description of the .NET Framework 4.5.1 and a good way to learn about the features in the release.

Today, we are announcing the .NET Framework 4.5.1 Preview, which includes new features and improvements across the product. We’ve made it easier to build .NET apps in Visual Studio, with convenient and useful productivity improvements that are a direct response to your feedback and requests. .NET apps are now faster, because they make better use of your hardware. We’ve also laid groundwork that will enable you to more easily take advantage of updates to the .NET Framework.

You can download .NET Framework 4.5.1 Preview from the Microsoft Download Center.

Before we get into the details, we’d like to share some of the successes we’ve seen with the .NET Framework 4.5, which was released less than a year ago. Today, there are ~90M machines in active use globally that have the .NET Framework 4.5 installed, out of ~1B active total machines with the .NET Framework. We’ve seen Windows Azure and other cloud services add support for the .NET Framework 4.5. We’ve also heard from many ISVs who have moved their apps to the .NET Framework 4.5 and many more who explicitly support it. And we’ve seen enthusiastic acceptance of the asynchronous programming model (which was completed in the .NET Framework 4.5), both by you and more broadly across the technology industry. These all provide a great starting point for the .NET Framework 4.5.1 Preview, the first update to the .NET Framework 4.5.

This post is an overview of the .NET Framework 4.5.1. We will be posting more detailed information on the release in the coming weeks.

What’s in the box?

In the .NET Framework 4.5.1 Preview, you’ll find that we’ve delivered a valuable set of large and small improvements that build on top of the .NET Framework 4 and 4.5 releases. We had a much shorter ship schedule for the .NET Framework 4.5.1 Preview, so it was important to prioritize the set of scenarios and features that we aimed to tackle. Many of you have short ship schedules, too, so you’ll probably sympathize. We focused our effort on the following key areas:

- Developer productivity

- Application performance

- Continuous innovation

Within those three areas, we spent much of our effort on requests from uservoice and other feedback channels, as you’ll see in the sections below. You’ll really like those.

We know that most .NET development today is based on the .NET Framework 4 (or later), which will make broad adoption of the .NET Framework 4.5.1 update convenient and easy.

Developer productivity

We’ve got some fun ones to share here. We did a fair bit more to improve your experience of building apps in Visual Studio.

- x64 Edit and Continue

- Async-aware debugging

- Managed return value inspection

- ADO.NET idle connection resiliency

- Improvements in Windows Store app development

x64 Edit and Continue

Uservoice feature completed: x64 edit and continue (2600+ votes)

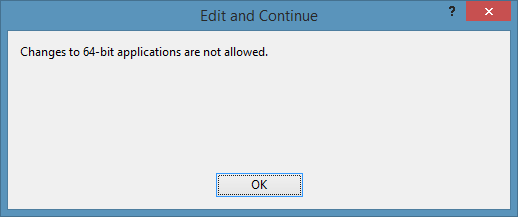

We now have x64 Edit and Continue (EnC) available in Visual Studio 2013 Preview. We’ve wanted to add that feature for a long time, but we always had at least two other features ahead of it in our priority list. This time, we decided that it really needed to get done and didn’t bother with any of the priority lists. That, of course, leads us to our fun celebratory image. It’s certainly a good description of how we feel about this feature.

x64 EnC doesn’t need a lot of explanation. We’ve had x86 EnC in the product since the Visual Studio 2005/.NET Framework 2.0 release, and we’re sure all of you have used this feature and that many of you rely on it daily. With Visual Studio 2013 Preview, you can now use EnC with x64, AnyCPU, and (of course) x86 projects.

In short, we removed this dialog for almost all project types in Visual Studio 2013 Preview. We know that it wasn’t your favorite.

We still have some more work to do to remove the dialog from Azure Cloud Services projects, and the team will tackle that in a later Azure SDK release. However, you will be able to use EnC on x64 dev machines as you build and diagnose ASP.NET web apps locally, no matter if you target on-premise servers, a 3rd-party hoster, Azure WAWS, or IaaS VMs.

This work brings x64 up to parity with x86. There are some language features that are not supported with EnC. Those remain in place for both x86 and x64. The additional work, to allow the modification of lambdas and anonymous methods, for example, is something that the team will consider for a future release. We needed to get x64 support out of the way first.

Async-aware debugging

You probably noticed that we made big investments in asynchronous programming in the last two versions of the .NET Framework. We believe that it is a game-changing language feature that enables .NET developers to achieve results that used to be quite challenging and less elegant. We hope that you enjoy Task, async, and await as much as we do.

For this release, we focused on improving the way the debugger enables you to interact with tasks in the debugger windows.

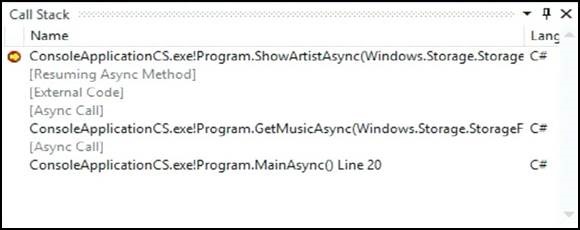

Debugging is all about understanding what your program does. One of the most important things is understanding the code flow, i.e., where did this code come from and what is the next line that executes. For this purpose, Visual Studio provides the Call Stack window. The async/await language feature is about providing an abstraction that allows you to think of the problem in a sequential fashion. The compilers do the heavy lifting to generate code that wires it up in order to run asynchronously. This means the runtime representation of the call stack doesn’t directly match your intuitive understanding of your code. In other words, the abstraction becomes leaky when debugging your code, which makes the Call Stack window less useful in Visual Studio 2012.

For example, the following shows a typical example of a call stack in Visual Studio 2012. As you can see, there is a lot of infrastructure code, but you can’t really determine the logical starting point, which might not even be on the call stack anymore.

Visual Studio 2012 – Call Stack Window

The experience in Visual Studio 2013 Preview has been greatly improved. We folded the infrastructure code into [External Code], and we also chained in the logical parent frames–in this example, GetMusicAsync. Note that the parent and child frames may have been run on different threads.

Visual Studio 2013 Preview – Call Stack Window

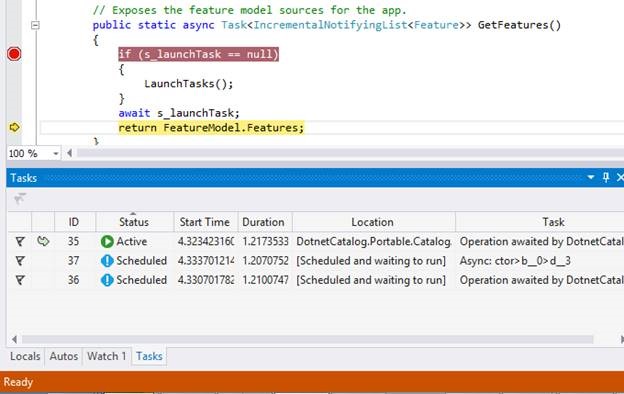

We also added a new window called Tasks, which replaces the Parallel Tasks window. This is a cross-language window that is used by .NET, C++, and JavaScript apps. In Windows 8.1 Preview, the OS has an understanding of asynchronous operations and the states that they can be in, and Visual Studio 2013 Preview uses that information in this new window. For .NET, this window is available for all app types (Windows Store, Windows desktop, …).

The Tasks window displays tasks that relate to the given breakpoint, and also any other tasks that are currently active or scheduled within the app. This extra information is very useful to help build an accurate model of what is going on at any given time within your app, and was quite difficult to gather before.
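As a rough illustration of the kind of code these debugger changes affect, here is a minimal console sketch with two chained async methods. GetMusicAsync echoes the name used in the call stack example above; DownloadTrackListAsync, the MusicDemo class, and the simulated delay are hypothetical and exist only for this sample.

```csharp
using System;
using System.Threading.Tasks;

public static class MusicDemo
{
    // Innermost async method (hypothetical). After the await completes, the
    // continuation is resumed by the async infrastructure and may run on a
    // different thread, so GetMusicAsync need not be on the physical stack.
    public static async Task<string[]> DownloadTrackListAsync()
    {
        await Task.Delay(100); // stands in for real network I/O

        // A breakpoint on the next line is a good place to inspect the
        // Call Stack and Tasks windows.
        return new[] { "Track 1", "Track 2" };
    }

    // Logical parent of DownloadTrackListAsync.
    public static async Task<int> GetMusicAsync()
    {
        string[] tracks = await DownloadTrackListAsync();
        return tracks.Length;
    }

    public static void Main()
    {
        // Blocking keeps the console sample short; UI or ASP.NET code
        // would await the task instead.
        int count = GetMusicAsync().GetAwaiter().GetResult();
        Console.WriteLine(count);
    }
}
```

With a breakpoint on the return statement in DownloadTrackListAsync, the physical stack at that point consists mostly of continuation plumbing, which is the situation the chained logical frames are meant to address.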

Managed return value inspection

Uservoice feature completed: Function return value in debugger (1096 votes)

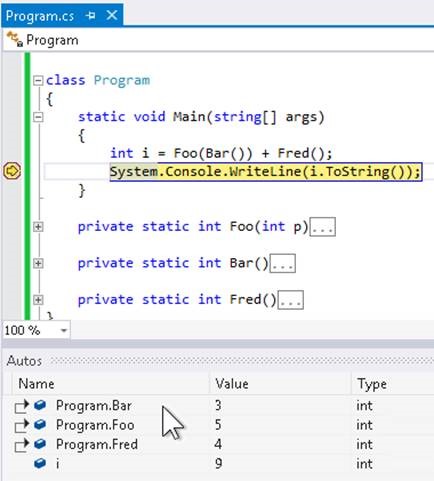

How many times have you written a method that included a return statement of a method call or called a method that evaluated expressions as arguments? It is pleasant and tempting to write code like that. It might look like this.

    public Task<HttpResponseMessage> GetDotNetTeamRSS()
    {
        // UriPartial.Authority returns the scheme and authority segments of the URI
        var server = ControllerContext.Request.RequestUri.GetLeftPart(UriPartial.Authority);
        var client = new HttpClient();
        return client.GetAsync(server + "/api/httpproxy?url=" + server + "/api/rss");
    }

Unfortunately, that code can be difficult to debug, since the debugger will not show you the final return value. In the worst case, you may find that the return value is immediately returned from the caller, requiring you to travel back a few frames to get the value you want. Instead, you may sometimes store these values as locals to ensure a better debugging experience later. In that case, the code above would look like the following:

    public Task<HttpResponseMessage> GetDotNetTeamRSS()
    {
        // UriPartial.Authority returns the scheme and authority segments of the URI
        var server = ControllerContext.Request.RequestUri.GetLeftPart(UriPartial.Authority);
        var client = new HttpClient();
        var message = client.GetAsync(server + "/api/httpproxy?url=" + server + "/api/rss");
        return message;
    }

We’ve all evaluated this trade-off many times and landed on both sides of it.

Fortunately, we’ve erased this trade-off in Visual Studio 2013 Preview. Going forward, you can write your code any way you want, and consult the Autos Window for return values.

You’ll see a new symbol in the Autos Window that displays return values, as illustrated below. This new feature displays both direct return values and the values of embedded methods (the arguments). This is similar to the existing Visual C++ version of this feature, which we consulted as part of our design effort.

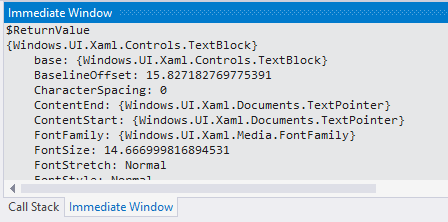

Different people use the debugger in different ways, in addition to having different coding styles. The visual representation that you see in Autos may be the perfect solution for many of you, but others may want to use the Watch window or the Immediate window, which provide different ways of interacting with the debugger. In both cases, you can use the new $ReturnValue keyword to inspect managed return values.

In this release, $ReturnValue represents just the direct return value and not any of the embedded return values. Please tell us in the comments or on uservoice if you feel that we should extend the feature to enable the same level of expressiveness for Immediate and Watch windows as you get with the Autos.
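To make the distinction between direct and embedded return values more concrete, here is a small sketch. The ReturnValueDemo class and its methods are hypothetical; the comments restate the behavior described above and are not taken from debugger output.

```csharp
using System;

public static class ReturnValueDemo
{
    public static int Square(int x) { return x * x; }

    public static int Add(int a, int b) { return a + b; }

    public static int SumOfSquares(int a, int b)
    {
        // The two Square calls are "embedded" calls whose results feed Add.
        // Per the description above, Autos lists the embedded values as
        // well, while $ReturnValue covers only the direct return value.
        return Add(Square(a), Square(b));
    }

    public static void Main()
    {
        Console.WriteLine(SumOfSquares(3, 4)); // prints 25
    }
}
```

Stepping over the return statement in SumOfSquares is one way to try the feature without introducing temporary locals.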

ADO.NET idle connection resiliency

Data is a big part of what your customers expect from an app. There are a variety of ways that an app’s connection to a SQL database can get broken. When that happens, it is necessary to re-connect the app to its data source. Doing so is easier said than done, and requires you to build and test a set of connection resiliency features. Instead, we’ve built those features for you.

The .NET Framework 4.5.1 Preview includes a new feature in ADO.NET that takes care of this experience. Idle connection resiliency adds the capability to re-build broken idle connections against SQL databases transparently, by recovering lightweight session state even after back-end failure. Under the covers, this new feature provides a more robust connectivity framework for recreating broken connections and re-trying transactions. It also handles a set of common error cases that can occur in these situations. Last, it supports both synchronous and asynchronous operations, so it can be used in a variety of code patterns.

There are many scenarios that will benefit from this new capability. Each one is a little bit different and potentially requires different behavior, but that is handled for you as part of the feature. We also see that recent industry trends have a greater need for connection resiliency than we saw just a few years ago. That’s the reason we added this feature to the product.

Many of you are moving your apps to the cloud. That frequently means that you are moving your on-premise infrastructure piece by piece, not all at once. It can be the case that an app and the databases it accesses are quite separate (accessed via VPN or in different data centers). The underlying remote connection can go down, even for just a second.

You don’t need a remote network to encounter resiliency challenges. Instead, you may have apps deployed on customer laptops or tablets. This is a scenario where we see both Microsoft SQL Server and Microsoft Access being used. Every time users close their laptops, there is a likelihood that your app’s database connection has been broken. As soon as they open their laptops, their machines will wake up, and they will expect to be able to continue their work.

Even in data centers with fast and reliable internal links, there are important scenarios. In particular, SQL Server can be configured for dynamic load balancing and failover. A database might be on server1 now and later on server2. That’s expected, as part of how SQL Server is configured in that particular environment. Still, apps that rely on that database need to be resilient to those changes, since connections can be broken at any time.

ADO.NET connection resiliency is a great answer to these connection scenarios. It is able to re-connect an app to the database and replay session state transparently when an app’s database connection is broken.
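The post does not show how an app tunes this behavior. As a hedged sketch, the SqlClient connection string keywords ConnectRetryCount and ConnectRetryInterval (exposed as properties on SqlConnectionStringBuilder in the .NET Framework 4.5.1 and later) control how many reconnection attempts are made and how long to wait between them. The server and database names below are placeholders, and whether reconnection actually happens also depends on the server you connect to.

```csharp
using System;
using System.Data.SqlClient;

public static class ResilientConnectionString
{
    // Builds a connection string that asks SqlClient to retry a broken
    // idle connection. No connection is opened by this method.
    public static string Build(int retryCount, int retryIntervalSeconds)
    {
        var builder = new SqlConnectionStringBuilder
        {
            DataSource = "tcp:example-server",           // hypothetical server
            InitialCatalog = "ExampleDb",                // hypothetical database
            IntegratedSecurity = true,
            ConnectRetryCount = retryCount,              // reconnect attempts
            ConnectRetryInterval = retryIntervalSeconds  // seconds between attempts
        };
        return builder.ConnectionString;
    }

    public static void Main()
    {
        Console.WriteLine(Build(3, 10));
    }
}
```

The resulting string can be passed to SqlConnection as usual; no other code changes are implied by this sketch.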

Windows Store app development

Windows Store development has moved forward with Windows 8.1 Preview. You can learn about the larger set of improvements on the Windows Store apps site. There are a few additional changes that we made to improve the productivity of Windows Store app development with the .NET Framework.

We filled in a key gap in this release, for interacting with Windows Runtime streams. Many of you have been wanting a way to convert a .NET Stream to a Windows Runtime IRandomAccessStream. Let’s just call it an AsRandomAccessStream extension method. We weren’t able to get this feature into Windows 8, but it was one of our first additions to Windows 8.1 Preview.

You can now write the following code to download an image with HttpClient, load it in a BitmapImage, and then set it as the source for a XAML Image control.

    // access image via networking i/o
    var imageUrl = "http://www.microsoft.com/global/en-us/news/publishingimages/logos/MSFT_logo_Web.jpg";
    var client = new HttpClient();
    Stream stream = await client.GetStreamAsync(imageUrl);
    var memStream = new MemoryStream();
    await stream.CopyToAsync(memStream);
    memStream.Position = 0;
    var bitmap = new BitmapImage();
    bitmap.SetSource(memStream.AsRandomAccessStream());
    image.Source = bitmap;

You can do the same thing, with a local file. In the case of files, there is no requirement to use .NET streams, since the Windows Runtime already exposes streams from StorageFile objects as IRandomAccessStream. However, you may need to read or update a stream before setting it as the source for an Image, for example. In that case, using AsRandomAccessStream makes sense. Note that the underlying Windows Runtime stream is preserved, enabling our implementation to avoid multiple copies between the different stream types. Here’s what the code would look like using files:

    // access image via file i/o
    var imagePath = "picture.png";
    StorageFolder folder = KnownFolders.PicturesLibrary;
    StorageFile file = await folder.GetFileAsync(imagePath);
    var bitmap = new BitmapImage();
    Stream stream = await file.OpenStreamForWriteAsync();
    // this is the point where you operate on the stream with .NET code
    // imagine applying an image transform
    bitmap.SetSource(stream.AsRandomAccessStream());
    image.Source = bitmap;

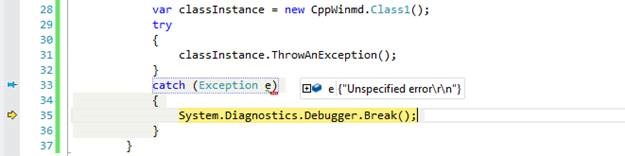

We also made a few other changes, to provide a better experience using the Windows Runtime from .NET. We are now able to provide better exception messages when exceptions are thrown from a Windows Runtime component. Here you see an exception thrown from a C++/CX component and being caught in .NET code, in Visual Studio 2012.

On Windows 8.1 Preview, using Visual Studio 2013 Preview, you can see that the text used in the exception object now passes across language and component boundaries. That can be very useful if you are consuming Windows Runtime components that other developers have written.

Another change is with structs. Nullable fields are now supported on structs defined in Windows Runtime components. You have the expected experience accessing those fields from .NET code and you can define a struct with a Nullable field when exporting a managed Windows Runtime component.

We added support so Intellisense can pick up on doc comments across language boundaries when a type projection occurs (e.g. IList<int> <-> IVector<int>). Here is an example of a doc comment on a managed method which has a projected type parameter.

    /// <summary>
    /// MyMethod has a summary
    /// </summary>
    /// <param name="myInts">Give me all your numbers</param>
    public void MyMethod(IList<int> myInts)
    {
        // do useful work
    }

On Windows 8.1 Preview with Visual Studio 2013 Preview, Intellisense will show these doc comments when you try to use this method from another language projection.

![clip_image004[1]](https://devblogs.microsoft.com/dotnet/wp-content/uploads/sites/10/2013/06/4846.clip_image0041_1C54A82C.jpg)

Please tell us if there are any other APIs that you need added, to make it easier to use Windows Runtime APIs.

Application performance

Looking back, we managed to achieve some really big performance wins in the .NET Framework 4.5 that continue to lighten the load of big services. This time around, we’ve got some similarly impressive results to share in the following areas:

- ASP.NET app suspension

- On-demand large object heap compaction

- Multi-core JIT improvements

- Consistent performance before and after servicing the .NET Framework

ASP.NET app suspension

Update: A more recent post on ASP.NET App Suspend is now available: ASP.NET App Suspend – responsive shared .NET web hosting.

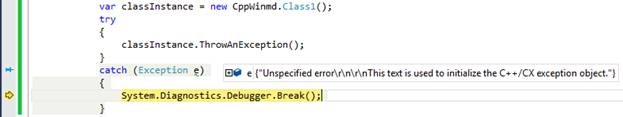

You’re probably used to hearing the phrases “low-latency” and “high-density”, but not often together unless they include another common (and less friendly) word, which is “expensive”. We’re excited to announce a major change for web hosters in Windows Server 2012 R2 Preview and the .NET Framework 4.5.1 Preview that enables a low-latency and high-density solution. In fact, this new solution is the preferred mode for ASP.NET on Windows Server 2012 R2 Preview.

ASP.NET can host many more sites with this new approach because idle sites are suspended from CPU activity and are paged to disk, making the CPU and more memory available to new site requests. Sites start up so much faster because they are suspended in a “ready to go” state. We call this new kind of startup, “resume from suspend”.

Windows Server 2012 R2 Preview includes a new feature called IIS Idle Worker Process Page-out. It suspends CPU activity to IIS sites after they have been idle for a given time. Sites that are suspended become candidates for paging to disk via the Windows Virtual Memory Manager, per the normal memory paging behavior in Windows Server. If you’ve seen app suspension for Windows Phone or Windows Store apps, this feature will be familiar, although the details are different on Windows Server.

IIS Idle Worker Process Page-out must be enabled by the server admin, so it is opt-in, per app-pool. The given idle time to wait before suspending a site can also be set by the admin. Once the feature is enabled in IIS, ASP.NET will use it without any additional configuration. You can think of ASP.NET app suspension as the .NET Framework implementation of IIS Idle Worker Process Page-out.
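As a sketch of what that opt-in can look like, the fragment below sets the app-pool process model in applicationHost.config. It assumes the idleTimeoutAction attribute of the processModel element, which the post does not name; the pool name ExamplePool and the 20-minute timeout are placeholders.

```xml
<applicationPools>
  <!-- ExamplePool is a hypothetical app-pool name -->
  <add name="ExamplePool">
    <!-- After 20 idle minutes, suspend the worker process instead of terminating it -->
    <processModel idleTimeout="00:20:00" idleTimeoutAction="Suspend" />
  </add>
</applicationPools>
```

The same setting can usually be changed through the app-pool advanced settings in IIS Manager, so editing the file by hand is only one option.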

In our performance labs, we’ve seen phenomenal improvements to site density and startup latency on Windows Server 2012 R2 Preview. We were able to host about 7 times more ASP.NET sites, with 90% reductions in startup time, on the same hardware, compared to Windows Server 2012. We believe that’s an amazing improvement! Our testing of ASP.NET app suspension was done on what we regard as relatively unspectacular server hardware (for example, 20 GB of RAM). The only change we made was to configure the Windows page file to be located on an SSD, which made resume from suspend very fast. This feature works on traditional HDDs too, and startup times will still be significantly improved, although not quite as much as we saw with our configuration.

ASP.NET app suspension performance numbers seen in the .NET lab on lab hardware

ASP.NET app suspension primarily targets servers that host a large number of sites. This is a common scenario for companies that run their own intranet and Internet operations and also commercial web hosting companies. If your situation matches that description, you will want to take advantage of this new feature. In fact, the Windows Azure Web Sites functionality in the Windows Azure Pack Preview is already using ASP.NET app suspension.

ASP.NET app suspension enables suspended sites to start up really fast, but requires sites to have been run at least once to get them into that state. Also, ASP.NET sites do not remain suspended if you recycle the app-pool or reboot the machine. Last, sites that do not get traffic for extended periods of time will eventually be terminated. As a result, existing ASP.NET caching features still remain relevant and useful for the initial startup case.

On-demand large object heap compaction

Uservoice feature completed: LOH compaction (18 votes)

We’ve had requests for a solution to large object heap (LOH) fragmentation for a while. In the .NET Framework 4.5, we took a first step towards resolving this issue by making better use of free blocks of contiguous memory within the LOH. We felt good about that solution since it was automatically provided by the GC and would therefore benefit a broad set of scenarios by reducing the amount of memory that managed apps use.

After we released the .NET Framework 4.5, we received positive feedback that LOH fragmentation had subsided quite a lot. That was great to hear and provided validation that addressing fragmentation with a smarter allocation algorithm was a good first step. We did, however, also hear from a small set of customers that more help was still needed with the LOH.

We’ve taken the next logical step, by including LOH compaction in the .NET Framework 4.5.1 Preview. You can now instruct the GC to compact the LOH, either as part of the next natural GC or a forced GC, using the GC.Collect API. We hope that this solution resolves the remaining LOH issues.

While on-demand LOH compaction is a great addition to the .NET Framework, it isn’t intended to be a feature that most apps will use. LOH compaction can be an expensive operation and should only be used after significant performance analysis, both to determine that LOH fragmentation is a problem and to decide when to request compaction. Even if you do decide that it is something that your app needs, you’ll still want to use it sparingly and intentionally. It certainly isn’t something you use every 5 minutes. For example, we’ve talked to some of the big services teams at Microsoft that make intentional and extensive use of the LOH. They tell us that they do not have LOH fragmentation problems, so likely will not adopt this feature. Other teams are in a different situation and will take advantage of the new feature.
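For reference, requesting a compaction looks roughly like the sketch below. It uses the GCSettings.LargeObjectHeapCompactionMode property together with GC.Collect; the LohCompaction class, the buffer sizes, and the loop are hypothetical and only serve to put some garbage on the LOH.

```csharp
using System;
using System.Runtime;

public static class LohCompaction
{
    // Requests a one-time LOH compaction and forces a blocking collection
    // so that it happens now rather than at the next natural full GC.
    public static void CompactNow()
    {
        GCSettings.LargeObjectHeapCompactionMode =
            GCLargeObjectHeapCompactionMode.CompactOnce;
        GC.Collect();
    }

    public static void Main()
    {
        // Arrays of 85,000 bytes or more are allocated on the LOH.
        for (int i = 0; i < 10; i++)
        {
            var buffer = new byte[200000];
            buffer[0] = 1;
        }

        CompactNow();

        // The mode is documented to revert to Default once the compaction
        // has run, so each compaction must be requested explicitly.
        Console.WriteLine(GCSettings.LargeObjectHeapCompactionMode);
    }
}
```

Omitting the GC.Collect call leaves the request pending until the next full blocking collection, which matches the "next natural GC" option mentioned above.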

In our planning, we talked to a number of companies, including Microsoft, about their specific needs for LOH compaction– for both client apps and websites. We wanted to make sure that our implementation worked for them out of the box. I’ll share one of the ways that we’ve seen the LOH used, and how LOH compaction will improve that approach.

As .NET workloads get larger, we continue to see the GC as a key place to invest. Please try out your workload on the .NET Framework 4.5.1 Preview, particularly if you haven’t yet tried the .NET Framework 4.5. We want to hear if LOH fragmentation in your app is resolved in the .NET Framework 4.5.1 Preview. That’s certainly our hope.

Multi-core JIT improvements

You may recall that we shipped multi-core JIT in the .NET Framework 4.5. In this release, we expanded the feature significantly, to work better with ASP.NET. In the .NET Framework 4.5.1 Preview, multi-core JIT has been extended to support dynamically loaded assemblies, loaded with the Assembly.LoadFrom API and from an AppDomain.AssemblyResolve event handler. These APIs are not specific to ASP.NET; however, we found that they were used quite commonly in ASP.NET apps.
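For readers who have not used these APIs, the following sketch shows the dynamic loading pattern in question. The PluginLoader class and the "plugins" folder are hypothetical; only Assembly.LoadFrom and the AppDomain.AssemblyResolve event come from the text above.

```csharp
using System;
using System.IO;
using System.Reflection;

public static class PluginLoader
{
    // Hypothetical folder holding assemblies that default probing will not find.
    public static readonly string PluginFolder =
        Path.Combine(AppDomain.CurrentDomain.BaseDirectory, "plugins");

    // Called by the runtime when it cannot resolve an assembly on its own.
    public static Assembly OnAssemblyResolve(object sender, ResolveEventArgs args)
    {
        string simpleName = new AssemblyName(args.Name).Name;
        string candidate = Path.Combine(PluginFolder, simpleName + ".dll");

        // Returning null tells the runtime that this handler could not help.
        return File.Exists(candidate) ? Assembly.LoadFrom(candidate) : null;
    }

    public static void Main()
    {
        AppDomain.CurrentDomain.AssemblyResolve += OnAssemblyResolve;
        Console.WriteLine("Resolver registered for " + PluginFolder);
    }
}
```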

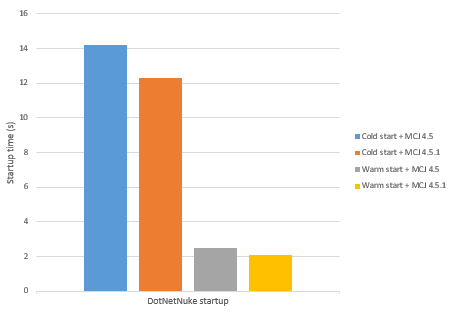

Last time, we demonstrated the use of multi-core JIT for client apps. This time, let’s look at server apps. Here, you’ll see that we tested multi-core JIT with DotNetNuke. You can see that we saw an ~15% speedup.

Multi-core JIT improvements with DotNetNuke

Multi-core JIT is enabled automatically for ASP.NET apps. For client apps, you need to opt in to the feature, which is only two lines of code.
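Those two lines are calls to the System.Runtime.ProfileOptimization class. The sketch below wraps them in a helper; the Startup class, the profile folder, and the profile file name are hypothetical choices for this example.

```csharp
using System;
using System.IO;
using System.Runtime;

public static class Startup
{
    public static void EnableMultiCoreJit(string profileFolder)
    {
        // The profile folder has to exist before profiles can be written to it.
        Directory.CreateDirectory(profileFolder);

        // The two opt-in lines: choose where profiles live, then start
        // recording (and, on later runs, replaying) the named profile.
        ProfileOptimization.SetProfileRoot(profileFolder);
        ProfileOptimization.StartProfile("Startup.profile"); // hypothetical file name
    }

    public static void Main()
    {
        EnableMultiCoreJit(Path.Combine(Path.GetTempPath(), "ExampleAppProfiles"));
        Console.WriteLine("Multi-core JIT profiling requested.");
    }
}
```

Calling the helper as early as possible in Main gives the background JIT the most startup work to overlap with.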

Consistent performance before and after servicing the .NET Framework

One of the ways that we deliver great performance, on both Windows and Windows Server, is by pre-compiling the core .NET Framework assemblies into native images, via a tool called NGEN. We’ve been doing that for over a decade now. It makes sense to pre-compile these assemblies since they are loaded by all managed apps. Requiring every app to JIT-compile core .NET Framework code at every launch doesn’t make good performance sense. On mobile devices such as laptops and tablets, NGEN also helps with battery life. A CPU cycle saved by not JITing is also a battery unit saved.

You may have noticed that we ship a handful of updates to the .NET Framework each year on Windows Update, typically on patch Tuesday. After an update, there may be a window of time where apps need to JIT core .NET Framework code, because NGEN images aren’t available yet. During that time window, apps may have noticeably slower startup times. This window is typically short, but it’s something that we wanted to improve.

In Windows 8.1 Preview, we have significantly improved the .NET Framework servicing system. As a result, app startup performance will be more consistent after a .NET Framework update. These servicing improvements will also help preserve battery life on laptops and tablets.

Continuous innovation

Release cadence is a really interesting topic. In aggregate, the .NET developer community is so large and broad that there is no one perfect release timeline. We talk to some (mega-large) companies who would prefer that we ship once every ten years. Here, think about ultra-reliable .NET apps running critical systems on massive commercial or military boats that only get significant systems maintenance once every one to five years and that are never hooked up to the Internet or Windows Update. On the other end of the spectrum, we have bleeding-edge early adopters who download and comment on each of our pre-release NuGet packages.

The work we’re delivering now in the .NET Framework 4.5.1 Preview, and which will continue to evolve, is setting ourselves up to deliver a product, almost a set of products, which meets the needs of this broad range of .NET developers. The following are a set of catch-phrases that we hear in many of the conversations we’ve had during the last couple years:

- Bleeding edge of ideas

- Modernized apps and services

- Proven features and approaches

- Battle-tested and hardened

These are the desired attributes that we are asked for. These are what we plan to deliver, although typically not in one package, all at the same time. That’s really the key difference, actually. We’ve always delivered these things, but not in a way that allowed you to selectively pick and choose the pieces that worked best for you. Going forward, you will have more choice. We’ll continue to update the .NET Framework, like we are doing with the .NET Framework 4.5.1, but we’ll also do more work outside, as you may have seen us doing via NuGet.org.

.NET Framework updates

The .NET Framework 4.5.1 Preview is the first update on top of the .NET Framework 4.5. It contains a roll-up of critical fixes, reliability and performance improvements, and opt-in features. It has undergone extensive compatibility testing at Microsoft and at over 70 external companies across a broad set of industries and app types. It is part of Windows 8.1 Preview and Windows Server 2012 R2 Preview, and is available for installation on the same platforms as the .NET Framework 4.5. It is also available for direct download, as a standalone installation, with no requirement to install the .NET Framework 4.5 first.

We expect that this release will be adopted very quickly, both because of its new features and the release vehicles we are using. You should feel confident taking a dependency on it, since it has satisfied a rigorous pre-release process and will achieve a broad global footprint quickly.

By the way, this is not the first time that we’ve delivered updates on top of a release. We also delivered platform updates on top of the .NET Framework 4. For example, we’ve seen a lot of activity around the most recent one, which was the .NET Framework 4.0.3.

That’s a brief description of the .NET Framework 4.5.1 Preview, which we consider a good template for subsequent .NET Framework updates. We’ve thought about it a lot, but we’re still early in its actual execution. Feel free to provide feedback in the comments below. If you want to engage in a longer conversation, you can always do that with us at dotnet@microsoft.com.

NuGet releases

In case you’re not familiar with NuGet, it is a software package manager and repository, largely intended for and composed of .NET Framework libraries. It is owned and managed by the Outercurve Foundation. You will see that NuGet is fully integrated into Visual Studio. There are libraries available on NuGet across a broad range of domains. You might find one that does just what you need.

The .NET Framework team has been publishing libraries to NuGet for a few years now. If you look, you’ll see that the broad range of releases include ASP.NET, Entity Framework, networking, languages, and the base class libraries. It is clear from the download numbers that many of you appreciate this approach for delivering both updates and whole new features. NuGet also enables a much tighter feedback loop, which allows us to respond to feedback much quicker. Thanks for your support on NuGet!

We see NuGet as a great way to ship opt-in updates to those of you who want updates at a faster pace, without needing to make changes to the actual .NET Framework product. We see this approach as something of a sweet spot where we keep both the developers building software for the big boats and those building the next startup happy. The trick isn’t just releasing different software to different audiences. That’s easy. Instead, we plan to release updates on NuGet and to the .NET Framework itself that nicely compose and layer together, although they might be on significantly different release cadences with different release process requirements. We are working with NuGet to make this experience even better moving forward.

We tweeted a few months ago to say “we may be a little silent right now, but we’re busy working on the next thing.” We loved the response we got back:

We couldn’t agree more, @robertmclaws. We quite like the Framework we already have, but more modular would indeed be better. In short, that’s exactly where we are headed with this release and going forward.

Better discoverability and support for NuGet in Visual Studio

Today, we are also announcing an easier way to discover the libraries outside of the .NET Framework that have been built by our team. Specifically, we have published a curated list of Microsoft .NET Framework NuGet Packages to help you discover these releases, published in OData format on the NuGet site. This list is integrated into Visual Studio 2013 Preview. You can also subscribe to it in Visual Studio 2010 and 2012 (register feed). We also have made an HTML version of the list available, with download numbers, which also might give you some hints on which libraries you should be taking a closer look at.

This list doesn’t include everything that the .NET Framework team has ever published, but it is the set of releases that meet a certain set of requirements. We’re still working through that process, so you may see a few more libraries added. Note that all versions of a given library are listed in the feed. That’s a characteristic that we’d like to change.

We are also releasing an update in Visual Studio 2013 Preview to provide better support for apps that indirectly depend on multiple versions of a single NuGet package. You can think of this as sane NuGet library versioning for desktop apps. This support is only enabled for .NET Framework desktop apps, and not for Windows Store or Windows Phone apps, which already have other features that achieve the same goal.

In cases where your application references different versions of the same library, this new feature will automatically generate binding redirects during the build and record them in the app.config file. The binding redirects will ensure that only the latest version of each library is loaded at runtime. In fact, this feature works with any library, not just NuGet libraries; however, NuGet was the primary motivator for the feature.
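To make this concrete, here is a rough sketch of what a binding redirect entry in app.config can look like. The assembly name, public key token, and version numbers are hypothetical placeholders chosen for illustration, and the entries that the build actually generates for your app may differ in detail.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <runtime>
    <assemblyBinding xmlns="urn:schemas-microsoft-com:asm.v1">
      <dependentAssembly>
        <!-- Hypothetical library: name, token, and versions are placeholders -->
        <assemblyIdentity name="Contoso.Utilities"
                          publicKeyToken="0123456789abcdef"
                          culture="neutral" />
        <!-- Requests for any version in the old range load 2.1.0.0 instead -->
        <bindingRedirect oldVersion="0.0.0.0-2.1.0.0" newVersion="2.1.0.0" />
      </dependentAssembly>
    </assemblyBinding>
  </runtime>
</configuration>
```

In this sketch, two libraries that were compiled against different versions of the placeholder assembly would both resolve to version 2.1.0.0 at runtime, which is the "only the latest version is loaded" behavior described above.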

Supporting large-scale deployment of .NET NuGet Packages

We have talked to a few large customers who have told us that they love what we’re doing on NuGet, but cannot adopt our work until we are able to service our NuGet libraries on Windows Update. They tell us that they have hundreds, if not thousands, of sites (intranet and internet) built on .NET, and that they cannot afford to service them outside of their regular update schedule. To put their feedback bluntly: if a NuGet library needs to be updated across their hosted sites due to a critical issue, Microsoft needs to deal with that via Windows Update, not the site admins. OK, that’s clear enough. Not a problem!

We can now service NuGet packages published by the .NET Framework team on Windows Update. It isn’t that we expect a steady stream of these updates. In fact, we don’t at all. Going forward, if we find a (very) severe bug or issue in one of our NuGet packages, we’ll use Windows Update as one of our release vehicles for servicing. Obviously, we’ll continue to publish updates (serious or otherwise) to NuGet.org as our first course of action, and expect that app authors primarily adopt our updates that way.

Since this new capability is very important to many of you, we want to clarify a few key points:

- We are enabling Windows Update servicing for the packages in our new curated list, announced above. Packages outside that list will not get serviced by Windows Update. If there is a Microsoft-published package that you want added to this list, please tell us.

- Your machines will get servicing updates only for NuGet packages that your apps actually use.

- This capability requires that you run your app on the .NET Framework 4.5.1 Preview. Windows Update servicing is not enabled when you run apps on earlier versions of the .NET Framework.

We see NuGet as a key enabler for shipping on a faster cadence, and features like this will bring it much closer to the mature technology that we know many of you have been waiting for. We’ll continue to push NuGet in that direction.

Summary

For this release, we focused primarily on your feedback and feature requests. We think that the features in this release should be an immediate help, both in how you use Visual Studio and in the experience that you can provide your customers.

The following are the user voice requests that we will be closing as completed:

- x64 edit and continue – 2600+ votes

- Function return value in debugger – 1094 votes

- LOH compaction – 18 votes

To recap, we focused our efforts on three key areas in this release:

- Developer productivity

- Application performance

- Continuous innovation

You are encouraged to install the .NET Framework 4.5.1 Preview, Visual Studio 2013 Preview, Windows 8.1 Preview, and Windows Server 2012 R2 Preview. These releases all work separately from one another; however, we’ve been able to provide additional benefits when you use them together.

These releases are all still in preview. Please send us your feedback, either in the comments, on Twitter (@dotnet), on Facebook (dotnet), or via email (dotnet@microsoft.com). We want to hear about your experiences with the new release, and what you think about the changes that we’ve made. What other features would you like to see in our next release?
