Using local AI models can be a great way to experiment on your own machine without needing to deploy resources to the cloud. In this post, we’ll look at how to use .NET Aspire with Ollama to run AI models locally, while using the Microsoft.Extensions.AI abstractions to make it easy to transition to cloud-hosted models on deployment.

Setting up Ollama in .NET Aspire

We’re going to need a way to use Ollama from our .NET Aspire application, and the easiest way to do that is using the Ollama hosting integration from the .NET Aspire Community Toolkit. You can install the Ollama hosting integration from NuGet via the Visual Studio tooling, VS Code tooling, or the .NET CLI. Let’s take a look at how to install the Ollama hosting integration via the command line into our app host project:

    dotnet add package CommunityToolkit.Aspire.Hosting.Ollama

Once you’ve installed the Ollama hosting integration, you can configure it in your Program.cs file. Here’s an example of how you might configure the Ollama hosting integration:

    var ollama =
        builder.AddOllama("ollama")
            .WithDataVolume()
            .WithOpenWebUI();

Here, we’ve used the AddOllama extension method to add the container to the app host. Since we’re going to download some models, we’re going to want to persist that data volume across container restarts (it means we don’t have to pull several gigabytes of data every time we start the container!). Also, so we’ve got a playground, we’ll add the OpenWebUI container, which will give us a web interface to interact with the model outside of our app.

Running a local AI model

The ollama resource that we created in the previous step only runs the Ollama server. We still need to add some models to it, which we can do with the AddModel method. Let’s use the Llama 3.2 model:

    var chat = ollama.AddModel("chat", "llama3.2");

If we wanted to use a variation of the model, or a specific tag, we could specify that in the AddModel method, such as ollama.AddModel("chat", "llama3.2:1b") for the 1b tag of the Llama 3.2 model. Alternatively, if the model you’re after isn’t in the Ollama library, you can use the AddHuggingFaceModel method to add a model from the Hugging Face model hub.

Now that we have our model, we can add it as a resource to any of the other services in the app host:

    builder.AddProject<Projects.MyApi>("api")
        .WithReference(chat);

When we run the app host project, the Ollama server will start up and download the model we specified (make sure you don’t stop the app host before the download completes), and then we can use the model in our application. If you want the resources that depend on the model to wait until the model is downloaded, you can use the WaitFor method with the model reference:

    builder.AddProject<Projects.MyApi>("api")
        .WithReference(chat)
        .WaitFor(chat);

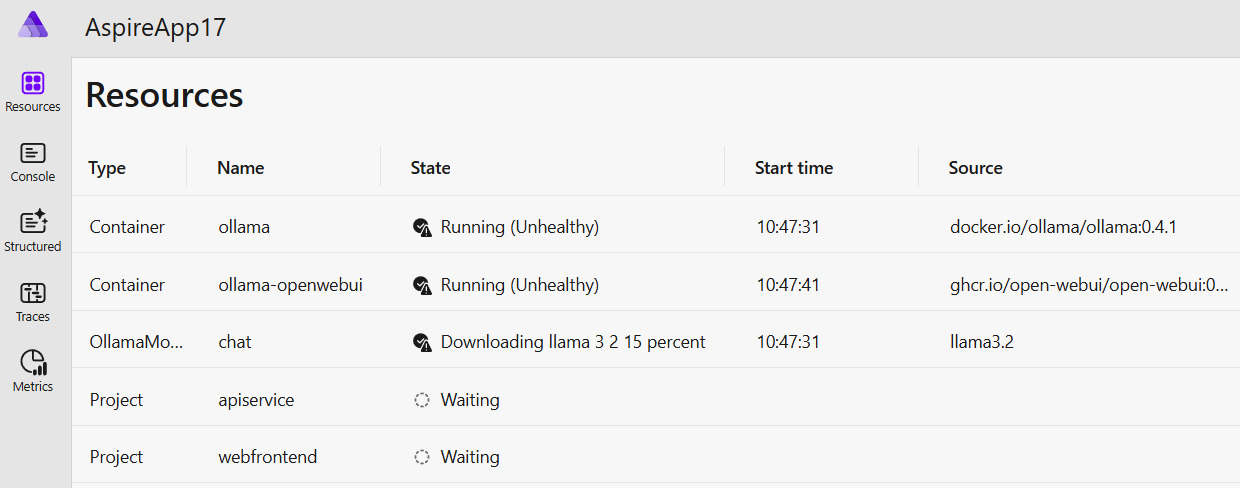

In the above screenshot of the dashboard, we can see that the model is being downloaded. The Ollama server is running but unhealthy because the model hasn’t been downloaded yet, and the api resource hasn’t started because it’s waiting for the model to download and become healthy.
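Putting the app host pieces from this section together, the Program.cs of the app host project might look roughly like the sketch below. It only combines the calls already shown above with the standard DistributedApplication.CreateBuilder and Build().Run() bootstrapping. It assumes the usual Aspire app host project setup (implicit usings for the hosting namespaces) and a referenced API project named MyApi, as in the earlier snippets.

```csharp
// AppHost/Program.cs: a consolidated sketch of the snippets above.
// Assumes the CommunityToolkit.Aspire.Hosting.Ollama package is installed
// and that the app host references a project called MyApi.
var builder = DistributedApplication.CreateBuilder(args);

// Ollama server container, with a persisted volume for downloaded models
// and the Open WebUI container as a playground.
var ollama = builder.AddOllama("ollama")
    .WithDataVolume()
    .WithOpenWebUI();

// The model resource; "chat" is the resource name the API refers to later.
var chat = ollama.AddModel("chat", "llama3.2");

// The API receives the model's connection information and waits until
// the model has been downloaded and reports healthy.
builder.AddProject<Projects.MyApi>("api")
    .WithReference(chat)
    .WaitFor(chat);

builder.Build().Run();
```

Keeping the model resource in its own variable makes it simple to reference the same model from more than one project.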

Using the model in your application

With our API project set up to use the chat model, we can now use the OllamaSharp library to connect to the Ollama server and interact with the model. To do this, we’ll use the OllamaSharp integration from the .NET Aspire Community Toolkit:

    dotnet add package CommunityToolkit.Aspire.OllamaSharp

This integration allows us to register the OllamaSharp client as the IChatClient or IEmbeddingGenerator service from the Microsoft.Extensions.AI package. That abstraction means we could switch out the local Ollama server for a cloud-hosted option such as Azure OpenAI Service without changing the code that uses the client:

    builder.AddOllamaSharpChatClient("chat");

Note: If you are using an embedding model and want to register the IEmbeddingGenerator service, you can use the AddOllamaSharpEmbeddingGenerator method instead.

To make full use of the Microsoft.Extensions.AI pipeline, we can provide that service to the ChatClientBuilder:

    builder.AddKeyedOllamaSharpChatClient("chat");

    builder.Services.AddChatClient(sp => sp.GetRequiredKeyedService<IChatClient>("chat"))
        .UseFunctionInvocation()
        .UseOpenTelemetry(configure: t => t.EnableSensitiveData = true)
        .UseLogging();

Lastly, we can inject the IChatClient into our route handler:

    app.MapPost("/chat", async (IChatClient chatClient, string question) =>
    {
        var response = await chatClient.CompleteAsync(question);
        return response.Message;
    });

Supporting cloud-hosted models

While Ollama is great as a local development tool, when it comes to deploying your application, you’ll likely want to use a cloud-based AI service like Azure OpenAI Service. To handle this, we’ll need to update the API project to register a different implementation of the IChatClient service when running in the cloud:

    if (builder.Environment.IsDevelopment())
    {
        builder.AddKeyedOllamaSharpChatClient("chat");
    }
    else
    {
        builder.AddKeyedAzureOpenAIClient("chat");
    }

    builder.Services.AddChatClient(sp => sp.GetRequiredKeyedService<IChatClient>("chat"))
        .UseFunctionInvocation()
        .UseOpenTelemetry(configure: t => t.EnableSensitiveData = true)
        .UseLogging();

Conclusion

In this post, we’ve seen how, with only a few lines of code, we can set up an Ollama server with .NET Aspire, specify a model that we want to use, have it downloaded for us, and then integrate it into a client application. We’ve also seen how we can use the Microsoft.Extensions.AI abstractions to make it easy to switch between local and cloud-hosted models. This is a powerful way to experiment with AI models on your local machine before deploying them to the cloud.

Check out the eShop sample application for a full example of how to use Ollama with .NET Aspire.

The piece of code with builder.Services.AddChatClient is not working:

Cannot resolve symbol ‘AddChatClient’

My dependencies:

    <PackageReference Include="CommunityToolkit.Aspire.Hosting.Ollama" Version="9.1.0" />

The AddChatClient method comes from Microsoft.Extensions.AI, and you’ll need to ensure that you have that namespace imported.

Hi Aaron,

I can’t get the sample above to work. Specifically around this code:

    builder.Services.AddChatClient(b => b
        .UseFunctionInvocation()
        .UseOpenTelemetry(configure: t => t.EnableSensitiveData = true)
        .UseLogging()
        // Use the OllamaSharp client
        .Use(b.Services.GetRequiredKeyedService<IChatClient>("chat")));

Can you share the full source code?

It appears that the Microsoft.Extensions.AI API has changed since I published the blog. I’ll get the sample updated, but this is the update:

    builder.Services.AddChatClient(b => b.GetRequiredKeyedService<IChatClient>("chat"))
        .UseFunctionInvocation()
        .UseOpenTelemetry(configure: t => t.EnableSensitiveData = true)
        .UseLogging();

The difference is that the “Use” methods when adding the chat client are now outside the callback.
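A related rename may also affect the /chat route handler from the post. As far as I know, later Microsoft.Extensions.AI releases renamed CompleteAsync to GetResponseAsync, which returns a ChatResponse whose Text property holds the reply text. The following is a minimal sketch under that assumption, not the post’s original code. Whether AddOllamaSharpChatClient is still the right registration call depends on the Community Toolkit version installed, so treat that line as an assumption too.

```csharp
// Program.cs of the API project: a sketch against a newer Microsoft.Extensions.AI.
using Microsoft.Extensions.AI;

var builder = WebApplication.CreateBuilder(args);

// Registration as shown in the post; assumed to still exist in the
// Community Toolkit version you are using.
builder.AddOllamaSharpChatClient("chat");

var app = builder.Build();

app.MapPost("/chat", async (IChatClient chatClient, string question) =>
{
    // GetResponseAsync replaces the older CompleteAsync call.
    var response = await chatClient.GetResponseAsync(question);

    // Text is the text content of the response.
    return response.Text;
});

app.Run();
```

If the project still targets the package versions from when the post was written, the original CompleteAsync handler is the one to use.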