Artificial intelligence, or AI, is a powerful tool for enhancing your applications: it can give customers a more personalized experience while improving the quality and efficiency of your internal operations. Although simple demo apps are often an easy and fast way to ramp up on new technology, the “real world” is far more complex, and you want to see more examples of robust, reality-inspired scenarios that use AI. To help answer not just the question “What is AI?” but also “How can I use AI in my applications?”, we created an application that illustrates how to infuse AI into a typical line-of-business application.

Introducing eShop “support with AI” edition

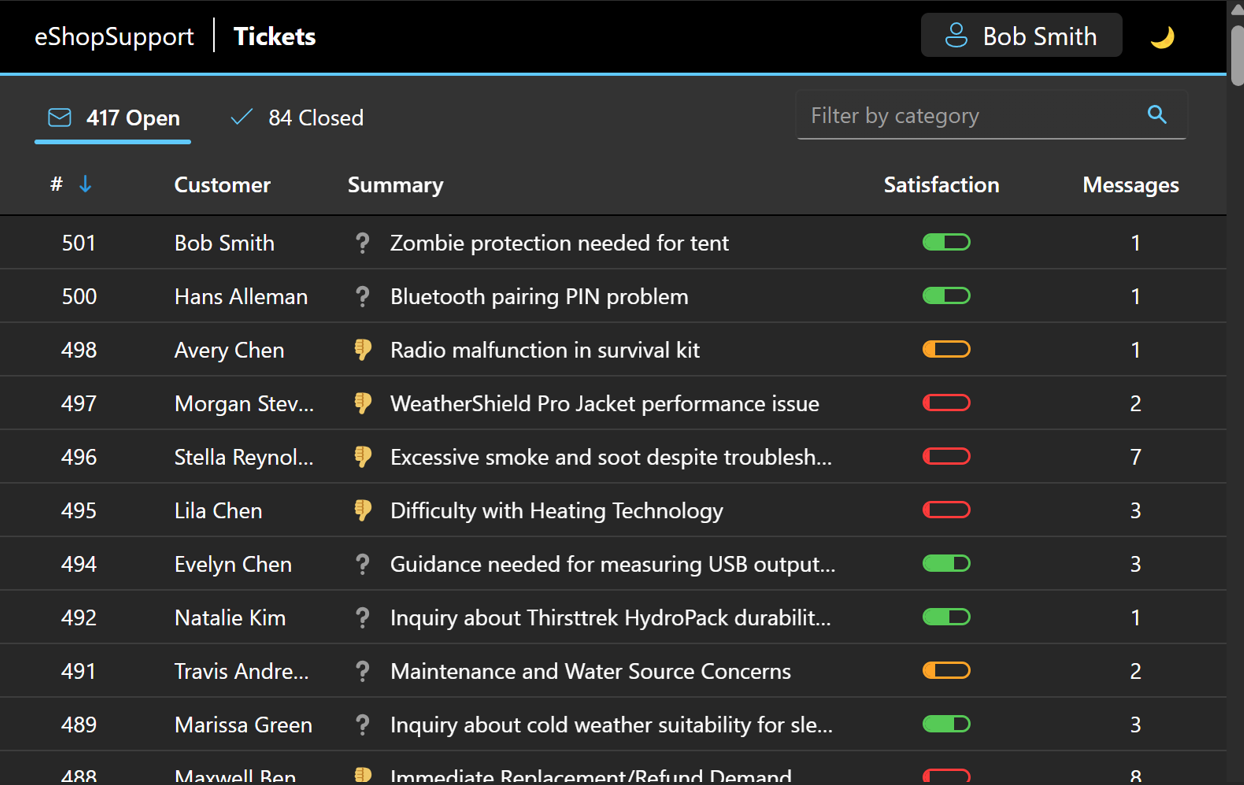

The AI-enhanced eShopSupport application is a support site that customers use to inquire about products. The eShop staff have a workflow for tracking these inquiries, conversing with the customers, and categorizing and eventually closing these inquiries. Through a variety of features, this sample moves past the popular “chatbot” scenario to illustrate several ways AI can boost your developer productivity while increasing the level of individualized customer support you are able to provide.

This demo illustrates how AI can be used to enhance a variety of features in an existing line-of-business application and is not just for “green field” or new apps. For example, why not enhance your search with semantic search that can find things even when the user doesn’t type the exact phrase or use proper spelling? Do you need to add new languages to your app? Large language models, or LLMs, are capable of handling multiple language inputs and outputs. Do you have a lot of data you sift through to find trends? Use the LLM to help summarize your data. If it is going into a pipeline, consider automating classification and sentiment analysis.

The demo also uses .NET Aspire to take advantage of its ability to orchestrate across services, data stores, containers, and even technologies. Python is a popular language in the data science world, but that doesn’t mean you have to switch everything to Python or even convert Python to C#. This example shows one approach to interoperability and hosts a Python microservice using .NET Aspire.

Features

AI-related features in the sample include:

| Feature | Description | Code implementation |
|---|---|---|
| Semantic search | Find things without knowing an exact phrase or description and even with improper spelling | src / Backend / Services / SemanticSeach |
| Summarization | Avoid “getting in the weeds” to re-read history to gain context and just focus on the relevant bits | src / Backend / Services / TicketSummarizer |
| Classification | Automate workflows without requiring human intervention | src / PythonInference / routers / classifier |
| Sentiment scoring | Help triage and prioritize feedback and discussions to understand how well a product or campaign is received | src / Backend / Services / TicketSummarizer |
| Internal Q&A chatbot | Help staff answer technical and business related questions with citations for proof, and auto-generate the draft of the reply | src / ServiceDefaults / Clients / Backend / StaffBackendClient |
| Test data generation | Generate large volumes of data based on the rules you provide | seeddata / DataGenerator / Generators |
| Evaluation tool | Objectively score the behavior of your bots/agents based on accuracy, speed, and cost, and systematically improve them over time | src / Evaluator / Program |
| E2E testing | An example (experimental) approach to providing deterministic test gates when the product itself is not deterministic | test / E2ETest |

.NET Aspire is used to manage the resources such as LLMs and databases. Microsoft.Extensions.AI is a set of common building blocks and primitives for intelligent apps. Developers benefit by using a standard set of APIs to perform common tasks, while library and framework providers can build on these common, standard interfaces and classes to provide a consistent experience throughout the .NET ecosystem. As the Semantic Kernel team announced in their blog, the Microsoft.Extensions.AI release does not replace Semantic Kernel. Instead, it provides a set of abstractions, APIs, and primitive building blocks that will be implemented by Semantic Kernel along with additional features like semantic chunking.

Instructions for cloning and running locally

Intelligent app samples typically require more resources and a more robust environment than a typical demo. The eShopSupport repo lists the minimum requirements for running the example, along with the latest step-by-step instructions to clone and run the sample locally.

Code tour

The “brain” behind the application is the Large Language Model (LLM) used to generate responses. Although it’s a common trend to think of LLMs in terms of chats or assistants, this sample demonstrates how you can use them to enhance a variety of features in a line-of-business application. The LLM is used to generate summaries, provide answers to questions, suggest replies to customer inquiries, and even generate test data. Using the standard primitives provided by Microsoft.Extensions.AI, you can use the same code to interact with the LLM regardless of whether it is a local model hosted by Ollama or an Azure OpenAI Service resource in the cloud.

Choose your model

Now that you know it’s possible to swap out your model, let’s take a look at the code to do this. The following code uses the Microsoft.Extensions.AI abstractions to interface with the client, and the only difference between local and remote models is the way the client is configured. The service and model you configure are passed to dependency injection and registered with the standard interfaces provided by Microsoft.Extensions.AI. Using this feature, you can easily swap from a local model using a tool like Ollama to a cloud-hosted OpenAI model.

Open Program.cs in the AppHost project to see how the model is configured and injected into the application. To use Ollama, for example, you configure the service like this:

    var chatCompletion = builder.AddOllama("chatcompletion").WithDataVolume();

Done testing, and ready to deploy? No problem. Set the OpenAI key in the appSettings.json configuration, then change the call to:

    var chatCompletion = builder.AddConnectionString("chatcompletion");

Notice that although the way you wire it up is different, the service name is the same in both instances (“chatcompletion”). Now, regardless of which model you are using, the code is the same:

    // Resolve the registered IChatClient (local or cloud, depending on configuration).
    var client = services.GetRequiredService<IChatClient>();

    // Send the prompt using the default chat options.
    var response = await client.CompleteAsync(
        prompt,
        new ChatOptions());

The ChatOptions class allows you to specify parameters like the maximum number of tokens to generate, the temperature or “creativity”, and more. It provides reasonable defaults out of the box, but you can customize it to suit your needs.
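The swap works because callers depend only on a shared interface and a service name, not on a concrete client. The following is a minimal sketch of that pattern, written in Python (the language of the sample's inference microservice) so that it runs without any packages. Everything in it is hypothetical and for illustration only: `ChatClient`, `LocalClient`, `CloudClient`, `register`, and `resolve` are stand-ins, not Microsoft.Extensions.AI or .NET Aspire APIs, and the clients return canned strings rather than calling a model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol


class ChatClient(Protocol):
    """Hypothetical stand-in for a shared chat interface."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class LocalClient:
    model: str

    def complete(self, prompt: str) -> str:
        # A real client would call a locally hosted model here.
        return f"[local:{self.model}] {prompt}"


@dataclass
class CloudClient:
    endpoint: str

    def complete(self, prompt: str) -> str:
        # A real client would call a cloud-hosted model here.
        return f"[cloud:{self.endpoint}] {prompt}"


# A tiny service registry keyed by name (assumption: one factory per name).
_registry: Dict[str, Callable[[], ChatClient]] = {}


def register(name: str, factory: Callable[[], ChatClient]) -> None:
    _registry[name] = factory


def resolve(name: str) -> ChatClient:
    return _registry[name]()


def summarize(client: ChatClient, text: str) -> str:
    # Application code sees only the interface, never the concrete client.
    return client.complete(f"Summarize: {text}")


# Development: register a local client under the shared name.
register("chatcompletion", lambda: LocalClient(model="demo-model"))
local_answer = summarize(resolve("chatcompletion"), "my TV won't turn on")

# Deployment: re-register a cloud client under the same name.
register("chatcompletion", lambda: CloudClient(endpoint="example.invalid"))
cloud_answer = summarize(resolve("chatcompletion"), "my TV won't turn on")

print(local_answer)
print(cloud_answer)
```

Only the registration lines change between the two runs; `summarize` is untouched, which is the property the shared service name is meant to provide.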

Generate test data

The seeddata folder contains a DataGenerator for creating test data. The various generators are in the Generators folder. Each prompt specifies:

- How many records

- Field creation rules and business logic

- The schema for the data (in this case, a JSON example is provided)

This method of constructing a prompt is useful for more than just data generation. Use the pattern anytime you wish to receive a response in a well-known structure or format so that you can parse it. This is the key to automating some of your workflows and tasks.
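The three parts listed above can be assembled programmatically. The following is a minimal Python sketch of one way to do that; `build_generation_prompt` and its parameters are hypothetical names chosen for illustration and do not come from the sample's DataGenerator.

```python
import json
from typing import Any, Dict, List


def build_generation_prompt(
    count: int, subject: str, rules: List[str], example: Dict[str, Any]
) -> str:
    """Assemble a prompt from a record count, business rules, and a JSON example."""
    parts = [f"Generate {count} {subject}."]
    parts.extend(rules)  # field creation rules and business logic
    parts.append("The response should be a JSON object of the form:")
    parts.append(json.dumps(example, indent=2))  # the schema, given by example
    return "\n".join(parts)


prompt = build_generation_prompt(
    count=100,
    subject="product category names for an online retailer",
    rules=[
        "Each category name is a single descriptive term.",
        "All brand names are purely fictional.",
    ],
    example={
        "categories": [{"name": "Tents", "brands": ["Rosewood", "Summit Kings"]}]
    },
)
print(prompt)
```

Keeping the schema example as data rather than hand-written text means the same object can be reused when validating the response.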

Here is an example prompt to generate categories:

Generate 100 product category names for an online retailer of high-tech outdoor adventure goods and related clothing/electronics/etc.

Each category name is a single descriptive term, so it does not use the word 'and'.

Category names should be interesting and novel, e.g., "Mountain Unicycles", "AI Boots", or "High-volume Water Filtration Plants", not simply "Tents".

This retailer sells relatively technical products.

Each category has a list of up to 8 brand names that make products in that category.

All brand names are purely fictional.

Brand names are usually multiple words with spaces and/or special characters, e.g. "Orange Gear", "Aqua Tech US", "Livewell", "E & K", "JAXⓇ".

Many brand names are used in multiple categories. Some categories have only 2 brands.

The response should be a JSON object of the form:

    {
      "categories": [
        {
          "name": "Tents",
          "brands": [
            "Rosewood",
            "Summit Kings"
          ]
        }
      ]
    }

The response is then easily deserialized and inserted into the database.
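As a rough illustration of the deserialization step, the following Python sketch parses a response of the form requested in the prompt into typed records and rejects anything malformed. The `Category` class, the `parse_categories` function, and the sample response string are assumptions made for this example; the sample itself does this in C#, and the database insert is omitted.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Category:
    name: str
    brands: List[str]


def parse_categories(response_text: str) -> List[Category]:
    """Parse the model's JSON reply, failing loudly on an unexpected shape."""
    payload = json.loads(response_text)
    categories = []
    for item in payload["categories"]:
        name = item["name"]
        brands = item["brands"]
        if not isinstance(name, str) or not isinstance(brands, list):
            raise ValueError(f"Malformed category entry: {item!r}")
        categories.append(Category(name=name, brands=[str(b) for b in brands]))
    return categories


# Hypothetical model reply that follows the form given in the prompt.
sample_response = (
    '{"categories": [{"name": "Tents", "brands": ["Rosewood", "Summit Kings"]}]}'
)
parsed = parse_categories(sample_response)
print(parsed)
```

Because a model can return text that does not match the requested form, validating before inserting is a reasonable precaution.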

Evaluate the model

LLMs are not deterministic, so it’s possible to have varied results with the exact same inputs. This poses a few challenges. You need a way to test that your prompts are generating the expected responses so that you can iteratively improve the prompts by evaluating the quality of the responses. You also want a baseline that you can automate against and triage when you experience a regression because of changes to the app.

The code provides an example of “evaluations”, which are sets of objective questions that are put to a model to determine whether it is grounded. The score is based on how close the model’s answers are to the expected answers. A perfect score must include all facts provided by the manual and be free of errors, unrelated statements, or contradictions. The questions are generated by a model based on the product manual, and the answers are compared to the expected answers.

This approach can help you and your team feel confident that updates to models or tweaks to prompts will achieve the desired results.
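To show the shape of such an evaluation loop, here is a small Python sketch under stated assumptions. It does not reproduce the sample's Evaluator: `EvalCase`, `fact_coverage`, `run_evaluation`, and `is_regression` are hypothetical, the answers are canned, and the scorer is a deliberately naive stand-in that only checks whether each expected fact appears verbatim in the answer. A real scorer would also need to penalize errors and contradictions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    question: str
    expected_facts: List[str]


def fact_coverage(answer: str, expected_facts: List[str]) -> float:
    """Naive stand-in scorer: fraction of expected facts found in the answer."""
    if not expected_facts:
        return 1.0
    lowered = answer.lower()
    hits = sum(1 for fact in expected_facts if fact.lower() in lowered)
    return hits / len(expected_facts)


def run_evaluation(cases: List[EvalCase], ask: Callable[[str], str]) -> float:
    """Ask every question and return the mean score across all cases."""
    if not cases:
        raise ValueError("At least one evaluation case is required")
    scores = [fact_coverage(ask(c.question), c.expected_facts) for c in cases]
    return sum(scores) / len(scores)


def is_regression(score: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag a run whose score falls more than `tolerance` below the baseline."""
    return score < baseline - tolerance


# Canned answers standing in for a model, so the sketch is repeatable.
canned_answers = {
    "How do I reset the TV?": "Hold the power button for 10 seconds.",
    "What powers the remote?": "The remote needs batteries.",
}
cases = [
    EvalCase("How do I reset the TV?", ["hold the power button", "10 seconds"]),
    EvalCase("What powers the remote?", ["2 AA batteries", "remote"]),
]

score = run_evaluation(cases, lambda q: canned_answers[q])
print(score, is_regression(score, baseline=0.9))
```

Comparing each run against a stored baseline is what turns a score into an automated gate for prompt or model changes.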

Summarize and classify information

One feature of LLMs that can be an incredible time-saver and boost both efficiency and productivity is the ability to summarize and classify information. Imagine you are a customer support rep and have hundreds of requests coming in daily. You need to triage each request so that you are able to prioritize unhappy customers and critical requests. The LLM can help by summarizing and classifying the request. It can also provide a sentiment score. The sentiment, summary, and classification all provide the right context “at a glance” you need to prioritize the tickets appropriately. You can always read the original ticket for clarifications or to double-check the LLM’s work.

Here’s the best part: this can all be achieved in a single call to the LLM. The following is an example of a prompt to summarize, classify, and analyze the sentiment of a ticket:

You are part of a customer support ticketing system.

Your job is to write brief summaries of customer support interactions.

This is to help support agents understand the context quickly so they can help the customer efficiently.

Here are details of a support ticket.

Product: "Television Set"

Brand: "Contoso"

The message log so far is:

(customer feedback goes here, such as: "my TV won't turn on" or "it's stuck on a channel that plays nothing but 80s sitcoms")

Write these summaries:

1. A longer summary that is up to 30 words long, condensing as much distinctive information as possible. Do NOT repeat the customer or product name, since this is known anyway. Try to include what SPECIFIC questions/info were given, not just stating in general that questions/info were given. Always cite specifics of the questions or answers. For example, if there is pending question, summarize it in a few words. FOCUS ON THE CURRENT STATUS AND WHAT KIND OF RESPONSE (IF ANY) WOULD BE MOST USEFUL FROM THE NEXT SUPPORT AGENT.

2. A shorter summary that is up to 8 words long. This functions as a title for the ticket, so the goal is to distinguish what's unique about this ticket.

3. A 10-word summary of the latest thing the CUSTOMER has said, ignoring any agent messages. Then, based ONLY on that summary, score the customer's satisfaction using one of the following phrases ranked from worst to best: (insert list of terms like "angry" to "ecstatic" here) Pay particular attention to the TONE of the customer's messages, as we are most interested in their emotional state.

Both summaries will only be seen by customer support agents.

Respond as JSON in the following form:

    {
      "LongSummary": "string",
      "ShortSummary": "string",
      "TenWordsSummarizingOnlyWhatCustomerSaid": "string",
      "CustomerSatisfaction": "string"
    }

As you can see, the result is again a parsable JSON object that can be used to update the ticket in the database.
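The following Python sketch suggests how a reply in that form might be parsed and used for triage. It is illustrative only: `TicketSummary` and `parse_ticket_summary` are hypothetical names, the sample replies are invented, and `SATISFACTION_SCALE` is a placeholder list, since the prompt above elides the actual terms.

```python
import json
from dataclasses import dataclass
from typing import List

# Placeholder scale, ordered worst to best. The real list is not shown above.
SATISFACTION_SCALE: List[str] = [
    "angry", "frustrated", "neutral", "satisfied", "ecstatic",
]


@dataclass
class TicketSummary:
    long_summary: str
    short_summary: str
    satisfaction: str
    satisfaction_rank: int  # 0 is the unhappiest customer


def parse_ticket_summary(response_text: str) -> TicketSummary:
    """Parse the model's JSON reply and convert the label to a sortable rank."""
    data = json.loads(response_text)
    label = str(data["CustomerSatisfaction"]).strip().lower()
    if label not in SATISFACTION_SCALE:
        raise ValueError(f"Unexpected satisfaction label: {label!r}")
    return TicketSummary(
        long_summary=data["LongSummary"],
        short_summary=data["ShortSummary"],
        satisfaction=label,
        satisfaction_rank=SATISFACTION_SCALE.index(label),
    )


# Invented replies in the requested form.
sample_responses = [
    json.dumps({
        "LongSummary": "Asked how to pair the remote; agent sent steps.",
        "ShortSummary": "Remote pairing question",
        "TenWordsSummarizingOnlyWhatCustomerSaid": "Thanks, the pairing steps worked.",
        "CustomerSatisfaction": "Satisfied",
    }),
    json.dumps({
        "LongSummary": "TV will not power on after update; awaiting agent reply.",
        "ShortSummary": "TV dead after update",
        "TenWordsSummarizingOnlyWhatCustomerSaid": "TV still will not turn on.",
        "CustomerSatisfaction": "angry",
    }),
]

summaries = [parse_ticket_summary(r) for r in sample_responses]
# Sort so that the unhappiest customers come first.
triage_order = sorted(summaries, key=lambda s: s.satisfaction_rank)
print([s.short_summary for s in triage_order])
```

Mapping the label to a rank keeps the model's output as a fixed vocabulary while still giving the application a value it can sort on.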

Semantic search

Semantic search ranks results by meaning rather than by exact wording. This is especially useful when you have a large database of items, and users who may not know the exact words or phrases they are looking for. The search works by converting the search text into an “embedding”, a numerical vector that represents the meaning of the text. Comparing two embeddings gives a “distance” between phrases based on how closely their meanings align, so results can be ordered by how near they are to the search terms. For example, consider a product catalog with:

- Microscope – a powerful 200x magnification reveals the world’s tiniest wonders

- Telescope – a focal length of 2,350mm is enough to view a distant galaxy

- Gloves – don’t let your hands get too cold

In a traditional search, if the customer typed “micrscope” (purposefully misspelled), the search would return no results because there is no exact match. With semantic search, the search will consider this string to most closely match the entry for the microscope. Similarly, if the user searched for “skiing”, it would pick the entry for “gloves” as being a closer match than the ones for “microscope” or “telescope”, even though there’s no similarity in spelling.
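The ranking step can be sketched with cosine similarity, one common way to compare embeddings. The Python example below is a toy under loud assumptions: the three-dimensional vectors are invented by hand to make the arithmetic visible, whereas a real system obtains high-dimensional vectors from an embedding model, and `cosine_similarity` and `search` are hypothetical helpers rather than the sample's implementation.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query: List[float], catalog: Dict[str, List[float]]) -> List[str]:
    """Return catalog entries ordered from most to least similar to the query."""
    return sorted(
        catalog,
        key=lambda name: cosine_similarity(query, catalog[name]),
        reverse=True,
    )


# Hand-made toy embeddings (not produced by any model).
catalog = {
    "Microscope": [0.9, 0.1, 0.0],
    "Telescope": [0.7, 0.0, 0.6],
    "Gloves": [0.0, 0.9, 0.2],
}

# Invented query vectors: a misspelling lands near the word it resembles
# in meaning, and "skiing" lands near cold-weather gear.
query_micrscope = [0.85, 0.15, 0.05]
query_skiing = [0.05, 0.8, 0.4]

print(search(query_micrscope, catalog))
print(search(query_skiing, catalog))
```

With these made-up vectors, the misspelled query ranks the microscope first and the skiing query ranks the gloves first, mirroring the behavior described above.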

The repo contains several classes that provide implementations of semantic search in the Services folder.

A note on end-to-end testing

An approach to end-to-end testing has been included for completeness. The example in eShopSupport is entirely experimental and should not be considered guidance for production. There are many challenges to conducting automated tests against intelligent apps, from resource constraints and cost controls to the fact that the model is not deterministic. The example provided is a way to gate changes to the model and ensure that the behavior of the app is consistent with the expected results.

Conclusion

The era of intelligent apps is here, and .NET provides the tools you need to integrate AI-powered functionality into your projects. It’s crucial to continuously evaluate the responsible use of AI. For example, don’t assume the AI is always right. That’s why the app is designed so that the actual customer response is not automatic. A suggested response is generated, but it’s still up to a human to review and send it. The AI accelerates the process, but the human is still in control.

In a similar fashion, the workflow to automate support tickets makes a “best guess” at a category, but this can always be overridden by a human if it’s incorrect. The app also quantifies the quality of the responses so you can be systematic about improving them. These are just a few examples of how AI can work with, not in place of, humans to enhance your app experience and boost productivity.

See eShopSupport in action at .NET Conf!

Join us at .NET Conf November 12-14, where you’ll see eShopSupport featured in keynote demos and in some deep dive breakout sessions. For an even deeper dive, check out Jason Haley’s eShopSupport blog series.

Go ahead, it’s ready for you! Pull down the eShopSupport intelligent .NET app sample and start exploring the code today!
