My name is Mauricio Avilés and I am a Test Consultant in the Microsoft Enterprise Services / IT Service Management Practice. I am part of a team of experienced consultants who specialize in all things testing-related. In this post we will walk you through how we used the Visual Studio Online (VSO) Load Testing Service to test the capacity of the NORAD Tracks Santa (NTS) website.

This site allows children to track Santa Claus while he delivers gifts throughout the world. Spearheaded by Internet Explorer, the NTS experience combined multiple products and services across Microsoft, including Windows, Windows Phone, Bing, Skype and Azure. The website had over 14 million visits on Christmas Eve!

Brief description of the service:

The NORAD Tracks Santa website is composed of three cloud services:

- Website: A web role hosting all of the website resources

- Map Service: A worker role serving all the map images

- Timer Service: A worker role used to synchronize Santa’s location

All of these cloud services were hosted in Azure, so Visual Studio Online load testing was the perfect match for our load testing needs. The focus for this post will be the Timer Service. The Website and Map services serve static content and are protected by a CDN, hence they had a lower priority and will be ignored for the rest of the post. The Timer Service, however, was used by the website as well as by all the applications (Windows 8, Windows Phone, iPhone, and Android).

Load Testing Objective

We had three objectives for the traffic simulation: test the capacity of a single server, uncover any serious performance issues, and identify opportunities to improve the capacity or performance of the service.

We knew that in previous years we had approximately 16 million visitors on Christmas Eve. However, we did not have IIS logs or any other data to hint at the user distribution on that day (that has changed for next year). Hence, we decided to focus on finding the capacity of a service with a single instance. To do this we ran a step load test, increasing the load on the system until we saw a degradation in performance or resource exhaustion. We had the advantage that this is a stateless system with no shared resources, so if we could find the capacity of a single server we could assume linear growth by increasing the number of instances.
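The linear-growth assumption above turns capacity planning into simple arithmetic. Here is a minimal sketch of that calculation; the function name, the headroom parameter, and the peak traffic figure are illustrative assumptions, not numbers from our project:

```python
import math


def instances_needed(peak_rps: float, per_instance_rps: float,
                     headroom: float = 0.0) -> int:
    """Estimate the instance count for a stateless service that scales linearly.

    peak_rps         -- expected peak requests per second (hypothetical input)
    per_instance_rps -- capacity of one instance, as measured by a step load test
    headroom         -- fraction of each instance's capacity held in reserve
    """
    if per_instance_rps <= 0:
        raise ValueError("per_instance_rps must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable_rps = per_instance_rps * (1.0 - headroom)
    return math.ceil(peak_rps / usable_rps)


if __name__ == "__main__":
    # Hypothetical peak of 20,000 RPS against instances that each handle 5,000 RPS.
    print(instances_needed(20_000, 5_000))                 # 4
    print(instances_needed(20_000, 5_000, headroom=0.25))  # 6
```

This only holds while the service stays stateless and shares no resources between instances; any shared dependency would break the linear assumption.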

Creating the web and load test

The Timer service has a single method: Get Time. This is a simple WCF service in charge of ensuring that Santa’s location is synchronized throughout the world. This method takes no arguments and returns a JSON body containing the current UTC time. This ensures that all the client code that is in charge of displaying Santa’s journey will display the correct location.

One of the great things about the Visual Studio Online Load Testing Service is that you can take the same web tests that you created and used on premises and execute them on the cloud with no additional effort!
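To make the Timer Service contract described above more concrete, here is a rough sketch of the idea: a body carrying the current UTC time, and a client that uses it to work out how far its own clock is off. The real service is a WCF service; the `utc` field name, the ISO 8601 format, and both function names are assumptions made purely for illustration.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def get_time_body(now: Optional[datetime] = None) -> str:
    """Build a JSON body with the current UTC time (field name is hypothetical)."""
    now = now or datetime.now(timezone.utc)
    return json.dumps({"utc": now.isoformat()})


def clock_offset_seconds(body: str, local_now: datetime) -> float:
    """Return how many seconds the server clock is ahead of the local clock."""
    server_now = datetime.fromisoformat(json.loads(body)["utc"])
    return (server_now - local_now).total_seconds()


if __name__ == "__main__":
    server = datetime(2013, 12, 24, 12, 0, 0, tzinfo=timezone.utc)
    local = datetime(2013, 12, 24, 11, 59, 58, tzinfo=timezone.utc)
    # The client would shift its clock by this offset before placing Santa on the map.
    print(clock_offset_seconds(get_time_body(server), local))  # 2.0
```

Because every client corrects against the same server clock, they all agree on where Santa is regardless of local clock drift.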

You could take a web test created with Visual Studio 2010 (or 2008), open it with VS2013, add it to a load test, and run it in the cloud with no additional modifications! Isn’t that cool?

To create the web test I deployed a local instance of the Timer Service using the Azure Compute Emulator (a.k.a. DevFabric), submitted a request to the service using the WCF Test Client, recorded the request with Fiddler, and exported it as a web test (note: I had to do this because I am testing a web service. If this had been a website I could have used the out-of-the-box Visual Studio Web Test Recorder).

Since the web test consisted of a single request, there was no need to correlate parameters so our web test was done:

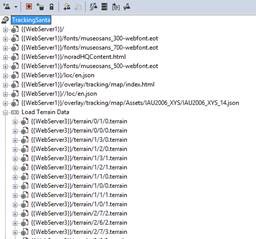

Side note: even though this is a very simple web test, VSO Load Testing has the capacity to handle more complex web tests, like this one we used to stress the NORAD website:

You can even use web test and web test request plug-ins!

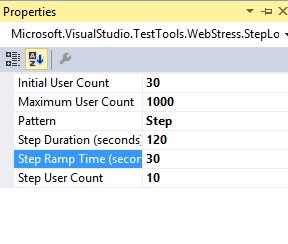

Generating the load

Once we had created a web test, we added it to a load test the same way you would with any other load test. Here is a screenshot of the test we used to simulate the traffic on the Timer Service:

Since we were running a stateless application, we decided not to use think times in our test. We do not keep user state on the service, hence we are able to simulate many “real life” users with fewer virtual users without worrying about a technical impact on our results.

This is an important point because VSO Load Testing charges by the Virtual User Minute (refer to the link for current rates). It does not matter if the user sends 100 requests per minute or if it sleeps for the whole minute, you will still be charged the same amount. Keep in mind, a Virtual User does not necessarily correspond to a “real life user”. For example, a requirement to simulate 10,000 users does not imply that you will need to use 10,000 virtual users. By removing think times from your web test, you can simulate dozens or hundreds of users with a single virtual user (depending on the nature of your test). At this point you will want to shift your focus from users as a key performance metric and start focusing on Requests per Second (RPS). However, if your system is stateful (that is, it keeps session state on the server), then at some point you will want to run tests where the number of virtual users equals the number of expected concurrent users to ensure the system can handle the complexities associated with keeping session state on the server. For our stress test we decided to start with 30 virtual users (remember, no think time), adding 5 users every 2 minutes:

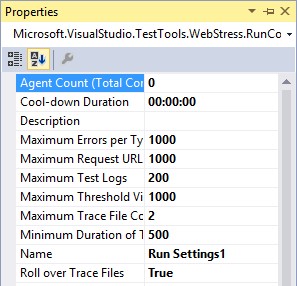
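Since billing is per Virtual User Minute, it helps to estimate how many of them a step pattern consumes before you run it. The sketch below models the pattern we described (start at 30 users, add 5 every 2 minutes). The function names and the 20-minute duration are illustrative assumptions, and per-minute rounding is a simplification of however the service actually meters usage:

```python
from typing import Optional


def users_at(minute: int, initial: int = 30, step: int = 5,
             step_minutes: int = 2, max_users: Optional[int] = None) -> int:
    """Virtual users active during the given minute of a step load pattern."""
    users = initial + step * (minute // step_minutes)
    if max_users is not None:
        users = min(users, max_users)
    return users


def virtual_user_minutes(duration_minutes: int, **pattern) -> int:
    """Total Virtual User Minutes consumed over the run, summed minute by minute."""
    return sum(users_at(m, **pattern) for m in range(duration_minutes))


if __name__ == "__main__":
    print(users_at(0))               # 30
    print(users_at(18))              # 75
    # Hypothetical 20-minute run with the pattern above.
    print(virtual_user_minutes(20))  # 1050
```

Multiply the result by the current per-minute rate to get a rough cost for the run.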

Whenever running in the cloud, make sure to pay attention to the resource utilization of your test agents. By default, the load testing service will try to allocate the correct number of agent cores based on the load you are trying to generate. However, there are instances in which your tests will have higher resource requirements (as in the case of “no think times” tests) and hence you will want to increase the number of cores (and hence agents) allocated to your run. You can do that from the run settings of your load test:

The last thing we had to do to generate the load was to open our test settings (make sure you are using Visual Studio 2013) and select the “Run tests using Visual Studio Team Foundation Service” option:

That is it! One click and you have the power of the cloud to load test your applications!

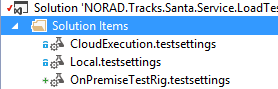

If you happen to have a test rig on premises, you can create multiple test settings files: one for your on-premises test rig, one for the cloud, and one for a local run. With this setup you can leverage the same load test across three different generation engines (test rigs):

During this stress test our system was able to handle a maximum of 75 virtual users and a little under 5,000 RPS. This load resulted in an average CPU utilization of over 75% on the one instance of our Timer Service.

Referring to our earlier point: in this run we were able to generate 5,000 RPS with only 75 users. Keep in mind that your mileage will vary (it will be affected by the complexity of your web tests and the performance of your System Under Test).
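The relationship between virtual users and RPS follows from a simple rule of thumb: each virtual user completes one request per cycle, where a cycle is the response time plus any think time. The sketch below applies that to the figures above (75 users, roughly 5,000 RPS). The function names and the 10-second think time for a "real life" user are assumptions for illustration only:

```python
def implied_cycle_time_s(virtual_users: int, observed_rps: float) -> float:
    """Average seconds each virtual user spends per request (response + think time)."""
    return virtual_users / observed_rps


def equivalent_real_users(observed_rps: float, response_time_s: float,
                          think_time_s: float) -> float:
    """Number of users with the given think time that would produce the same RPS."""
    return observed_rps * (response_time_s + think_time_s)


if __name__ == "__main__":
    cycle = implied_cycle_time_s(75, 5_000)
    print(f"{cycle * 1000:.0f} ms per request")   # 15 ms per request
    print(f"{5_000 / 75:.1f} RPS per virtual user")  # 66.7 RPS per virtual user
    # Hypothetical: a real user who polls the service once every 10 seconds.
    print(round(equivalent_real_users(5_000, cycle, think_time_s=10.0)))  # 50075
```

In other words, under that assumed think time, 75 virtual users with no think time stand in for roughly fifty thousand real users, which is why RPS is the more useful metric for a stateless service.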

Analyzing the results

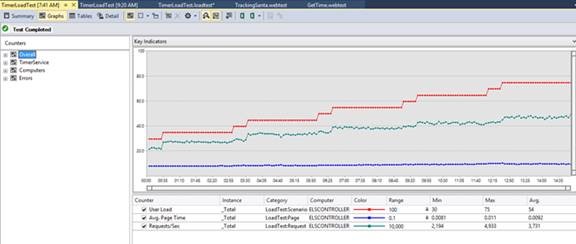

While the test is running, Visual Studio will provide high-level metrics of your test to ensure it is running as expected:

Once your test is completed, you are able to download the full report to your machine and access it like you would any other load test report from an on-premises test:

Thanks to Visual Studio Online Load Testing we were able to make informed decisions about the number of instances we needed to allocate in our production environment, and we were able to identify opportunities for performance and throughput improvements in the system. We were able to increase the throughput of each service instance while reducing the CPU utilization. This had a direct impact on the number of instances we had to use during Christmas Eve and hence on the cost of the project. The following graph summarizes the impact of our efforts:

In conclusion, Visual Studio Online Load Testing was a key factor in the success of the NORAD Tracks Santa website. It was easy to get started with (our on-premises knowledge translated to it), it was reliable, and it was able to simulate the high-volume traffic that we needed for this system (over 6,000 requests per second). We were able to have a direct cost-saving and performance-improvement impact on the service with a relatively small effort.
