The teams that contribute to VSTS (TFS and other microservices such as Release Management, Package Management, etc.) began using Release Management (RM) to deploy to production, as outlined by Buck Hodges in this blog. However, in February this year, we received feedback that failed deployments were difficult to debug in RM and that engineers were being forced into unnatural workarounds.

We (the RM team) used that as an opportunity to take a fresh look at how we use RM and to make it easier to use. Along the way, we fixed some things in the product and some things in the way we use the product. In this two-part blog, I will walk you through what we did. The first part covers the issues we faced and the fixes that have already been rolled out. I will publish the second part once the remaining fixes, which are still in flight, have been rolled out.

The various things that didn’t work very well in RM

<

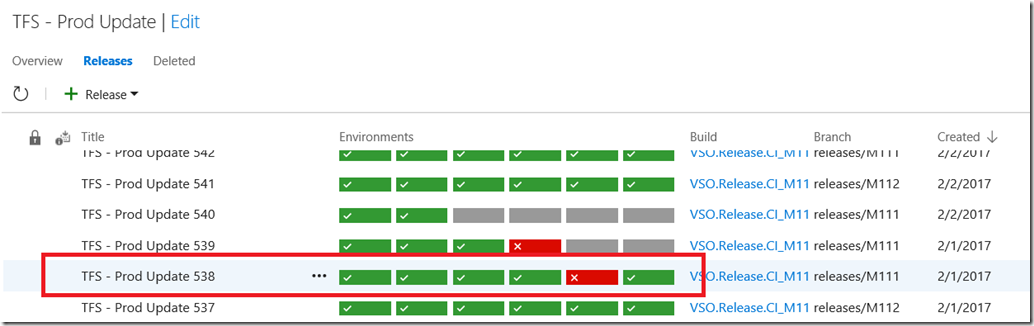

p>As we walk through the issues, we will use the release “TFS – Prod Update 538” as an example of what didn’t work very well in RM:

<

<

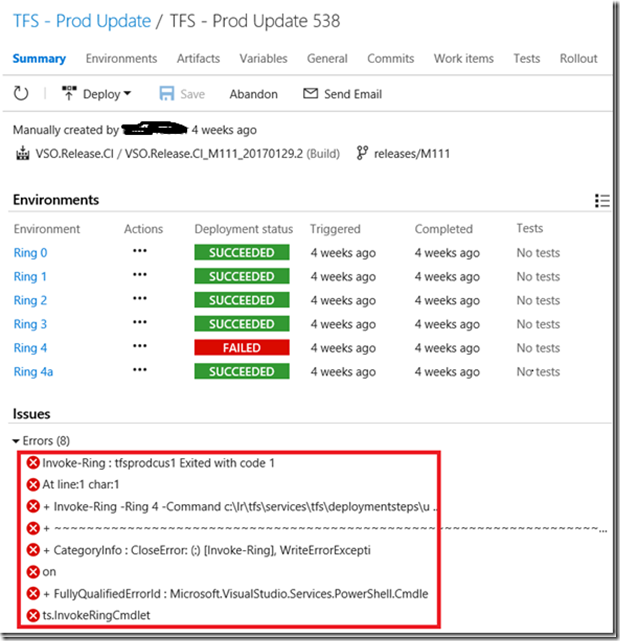

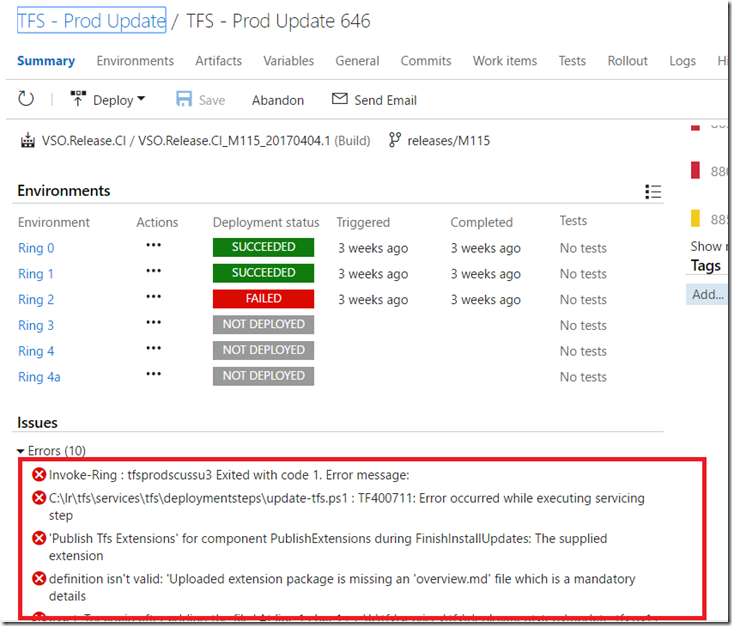

p>Double-clicking on the release showed a pretty un-actionable set of Issues. We used to see this “wrapper script” text for all errors, and it wasn’t very useful:

<

<

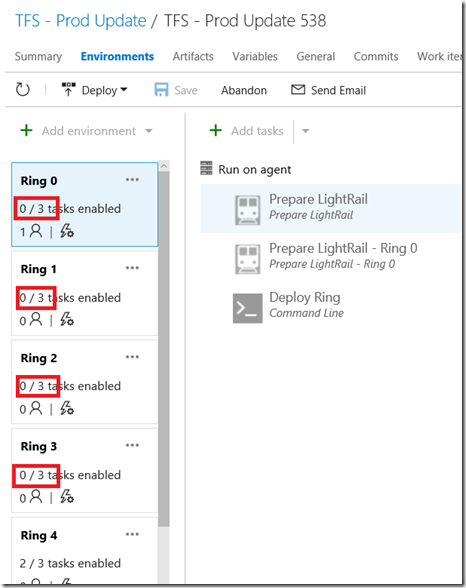

p>Further, the Release Summary indicated that the deployments to Ring 0, Ring 1, Ring 2, and Ring 3 succeeded. However, clicking on the Environments tab and looking closely at the number of tasks enabled in these rings told a different story.

There were, in fact, zero tasks enabled in Rings 0, 1, 2 and 3! The bits needed to be deployed only to Ring 4, but the release was taken through Rings 0, 1, 2 and 3 without doing anything meaningful in those environments. This broke traceability in the product: RM believed that the current release on Ring 0 (and on Rings 1, 2 and 3) was “TFS – Prod Update 538” (at least until the next release rolled out), whereas the bits on Ring 0 actually corresponded to an older release. The “current release in an environment” concept is important to RM: the product surfaces it in several views and also relies on it for internal operations such as release retention. For example, RM won’t delete a release that is the current release on some environment.
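To make the traceability problem concrete, here is a minimal Python sketch. The `Environment` model, the `deploy` and `can_delete` helpers, the task count and the earlier release number 537 are all hypothetical simplifications rather than RM’s actual data model. The sketch assumes only what is described above: a successful deployment makes a release current on an environment even if no tasks ran, and retention keeps any release that is current somewhere.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Environment:
    """Hypothetical, simplified stand-in for an RM environment."""
    name: str
    current_release: Optional[int] = None  # release RM believes is current here
    deployed_bits: Optional[int] = None    # release whose bits are actually running


def deploy(env: Environment, release_id: int, enabled_tasks: int) -> None:
    # A successful deployment makes the release "current", even with zero tasks.
    env.current_release = release_id
    # The bits only change if at least one task actually deployed something.
    if enabled_tasks > 0:
        env.deployed_bits = release_id


def can_delete(release_id: int, environments: List[Environment]) -> bool:
    # Retention guard: never delete a release that is current on any environment.
    return all(env.current_release != release_id for env in environments)


ring0 = Environment("Ring 0")
deploy(ring0, 537, enabled_tasks=12)  # hypothetical earlier release, really deployed
deploy(ring0, 538, enabled_tasks=0)   # release 538, dragged through with no tasks

print(ring0.current_release, ring0.deployed_bits)  # 538 537
print(can_delete(537, [ring0]))  # True, even though 537's bits still run on Ring 0
```

In this model, once RM’s notion of the current release drifts away from what is actually deployed, a retention rule built on that notion considers the older, still-running release deletable.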

<

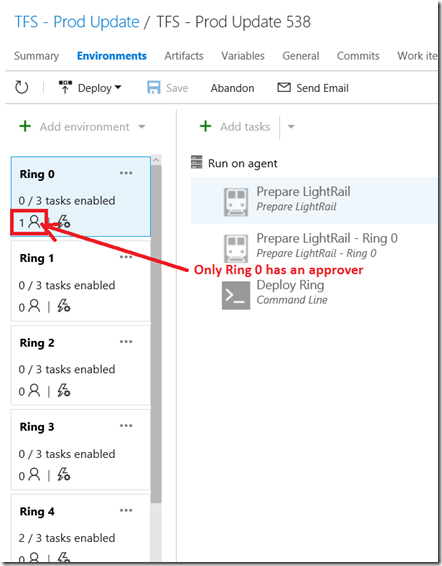

p>The above design – of dragging the release through Rings 0, 1, 2 and 3 unnecessarily – begs the question, “Why? Why couldn’t the release be created so that zero environments started automatically ‘after release creation’, and then Ring 4 was started manually?” The reason for this was the requirement that there should be only 1 approval for the entire release, across all the environments. When the release was created, the approver wanted to check that everything was in order across all the environments, and then didn’t want to be asked to approve again. Therefore, this requirement was modeled as an approval on Ring 0, and every release had to go through this environment before it reached its real destination.

<

<

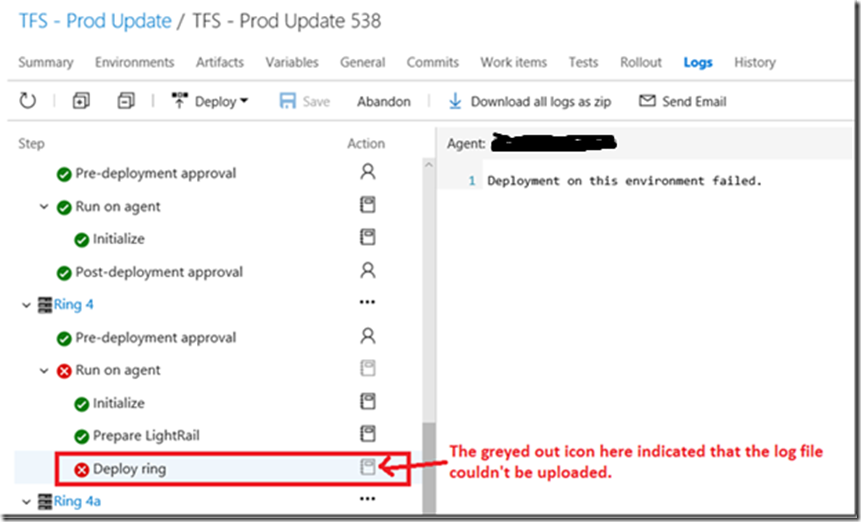

p>Moving on … going to the Logs tab showed that the log file corresponding to the failed task wasn’t even available in the browser. This used to sometimes happen when the log file was very large. The workaround was that we used to have to log into the agent box and view the logs on the agent.

Once we got to the logs, we found that they were not easy to understand. The reason: each environment corresponded to multiple scale units (SUs). For example, Ring 3 corresponds to three scale units (WEU2, SU6 and WUS22), so the logs for those three scale units were interleaved.

<

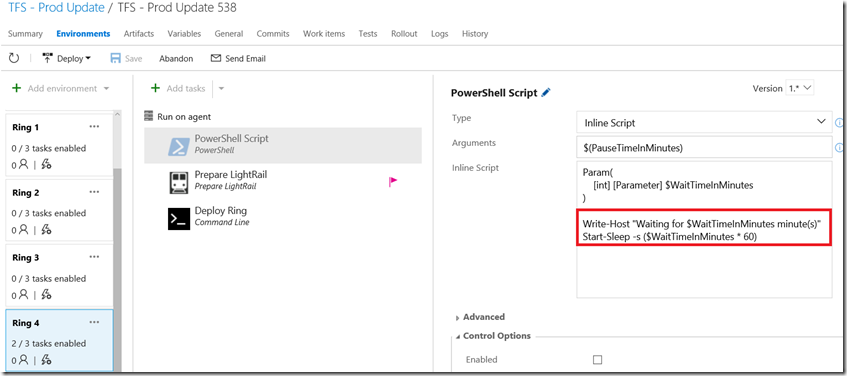

p>Finally, as part of aligning to Azure’s Safe Deployment practices, there was a requirement to have each Ring “bake” for some time before proceeding to the next ring, so that issues were flushed out in the inner rings before moving to the outer (and more public) rings. The bake time was modeled as a “sleep” task. This was less-than-ideal because it used up an agent unnecessarily while sleeping.

In the screenshot above, the Sleep task was disabled for this particular hotfix deployment, probably to get a fix out to production early, but the task is typically enabled.

Fixes that we have rolled out

We fixed the following issues either by enhancing RM or by changing the way we used the product.

Problem statement: The Issues list in the release was un-actionable.

Solution: [Change in the way we use RM] We changed the PowerShell script so that it logs the inner exception as the Error, rather than the outer exception.
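Our actual fix was made in PowerShell. The following Python sketch is only a language-neutral illustration of the same idea, not the script we use: it walks an exception chain down to its root cause and reports that instead of the generic wrapper error. The `WrapperScriptError` class, the failing `deploy_scale_unit` step and its message are hypothetical. Emitting the result through the `##vso[task.logissue type=error]` logging command is an assumption about how a script surfaces an error in the Issues list.

```python
class WrapperScriptError(Exception):
    """Hypothetical generic error raised by a deployment wrapper script."""


def deploy_scale_unit(name: str) -> None:
    # Hypothetical inner failure, for illustration only.
    raise TimeoutError(f"{name}: database upgrade timed out")


def run_wrapper(name: str) -> None:
    try:
        deploy_scale_unit(name)
    except Exception as inner:
        # The wrapper raises a generic error but keeps the inner one as its cause.
        raise WrapperScriptError("The wrapper script failed") from inner


def root_cause(exc: BaseException) -> BaseException:
    """Follow __cause__/__context__ links down to the innermost exception."""
    seen = {id(exc)}
    while True:
        nxt = exc.__cause__ or exc.__context__
        if nxt is None or id(nxt) in seen:  # end of the chain, or a cycle
            return exc
        seen.add(id(nxt))
        exc = nxt


def issue_line(exc: BaseException) -> str:
    # Report the inner exception, not the outer wrapper, as the error.
    cause = root_cause(exc)
    return f"##vso[task.logissue type=error]{type(cause).__name__}: {cause}"


try:
    run_wrapper("SU6")
except WrapperScriptError as err:
    print(issue_line(err))
    # ##vso[task.logissue type=error]TimeoutError: SU6: database upgrade timed out
```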

<

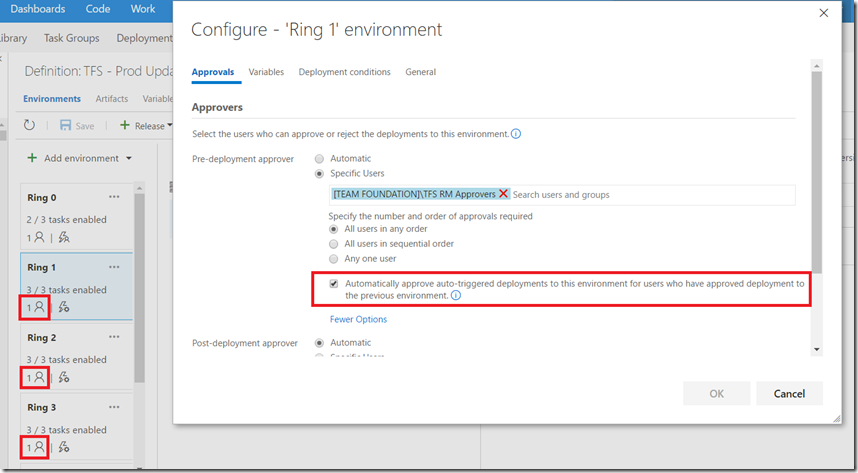

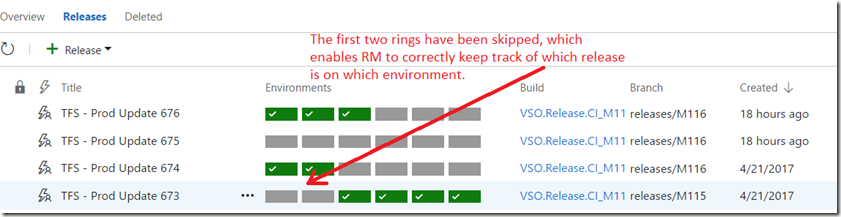

p>Problem statement: Incorrect traceability in the product caused by taking each release through Ring 0 even if the bits were meant for a different Ring

Solution: [Enhanced RM] We enabled correct traceability by adding support for a new feature, “Release-level-approvals”, and then using it in the “TFS – Prod Update” release definition. This feature ensures that approval is required only once per release, no matter which ring is deployed first, as long as the approvers for all the rings are the same:

As you can see above, every ring except the first has the following option selected: “Automatically approve auto-triggered deployments to this environment for users who have approved deployment to the previous environment”. (That’s quite a mouthful!) Ring 0 doesn’t need this option, since there is no previous environment.

This ensures clean traceability of the release: the bits are no longer dragged through rings where they are not meant to be deployed.
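The option quoted above can be read as a small decision rule. Combined with a caveat from this post (Release-level-approvals kick in only if the previous approval has completed by the time the next deployment starts), it looks roughly like the Python function below. This is a hedged reading for illustration only. The names `needs_approval` and `PreviousApproval`, the integer timestamps and the user names are hypothetical and do not reflect RM’s internal implementation.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class PreviousApproval:
    """Approval granted on the previous environment (hypothetical model)."""
    approver: str
    completed_at: int  # when the approval completed, e.g. seconds since release start


def needs_approval(
    approvers: Set[str],
    auto_approve_option: bool,
    auto_triggered: bool,
    previous: Optional[PreviousApproval],
    deployment_starts_at: int,
) -> bool:
    """Return True if someone must approve this environment's deployment again."""
    if not (auto_approve_option and auto_triggered):
        return True  # the option only covers auto-triggered deployments
    if previous is None or previous.completed_at > deployment_starts_at:
        return True  # previous approval was not complete when this deployment started
    # Carry the approval forward only if that user is also an approver here.
    return previous.approver not in approvers


ring0 = PreviousApproval(approver="alice", completed_at=10)
print(needs_approval({"alice"}, True, True, ring0, deployment_starts_at=20))   # False
print(needs_approval({"alice"}, True, False, ring0, deployment_starts_at=20))  # True
```

The last condition is why the feature relies on the same approvers for every ring: a user who is not an approver on the next environment has no approval to carry forward.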


Fixes still to be rolled out

The issues in this section have not been completely addressed. Once we address them, I will write up the details in part 2 of this blog.

<

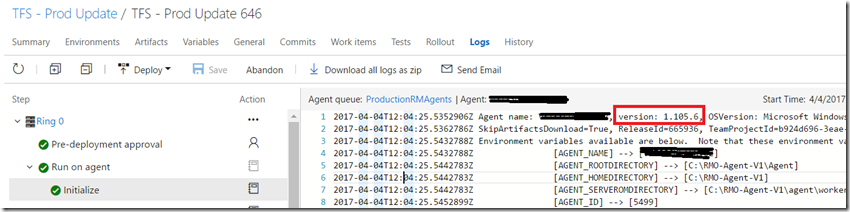

p>Problem statement: The log file was sometimes not available in the browser – typically when it was very large.

Solution: [TBD: Change in the way we use RM] We will fix the log upload reliability issue by moving from the 1.x agent to the 2.x agent. The 1.x agent has a known reliability issue with uploading large log files.

The agent upgrade has been delayed because we used a legacy variable, $(Agent.WorkingDirectory), which is available in the 1.x agent but not in the 2.x agent. We therefore need to rewrite the PowerShell scripts that use this variable and replace it with $(System.DefaultWorkingDirectory).
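A migration like this can be partly scripted. The following Python sketch rewrites every occurrence of the legacy macro in `.ps1` files under a folder. It assumes the scripts are UTF-8 text that references the variable with the `$(...)` macro syntax quoted above (other forms, such as environment-variable access, are not handled), and it matches the name case-insensitively. The function names are hypothetical. A textual replacement is only a starting point: each changed script still needs a review to confirm that the new variable points at the directory the script expects.

```python
import re
from pathlib import Path
from typing import Dict, Tuple

# Legacy 1.x-agent variable and its replacement, as named in the text above.
OLD_MACRO = "$(Agent.WorkingDirectory)"
NEW_MACRO = "$(System.DefaultWorkingDirectory)"
# Match the old macro regardless of letter case.
_OLD_PATTERN = re.compile(re.escape(OLD_MACRO), re.IGNORECASE)


def migrate_script(text: str) -> Tuple[str, int]:
    """Return the rewritten text and the number of replacements made."""
    # A function replacement avoids any escape processing of NEW_MACRO.
    return _OLD_PATTERN.subn(lambda _match: NEW_MACRO, text)


def migrate_tree(root: Path) -> Dict[Path, int]:
    """Rewrite every .ps1 file under root in place (assumes UTF-8 files).

    Returns a mapping from each changed script to its replacement count.
    """
    changed: Dict[Path, int] = {}
    for script in sorted(root.rglob("*.ps1")):
        text = script.read_text(encoding="utf-8")
        new_text, count = migrate_script(text)
        if count:
            script.write_text(new_text, encoding="utf-8")
            changed[script] = count
    return changed
```

For example, `migrate_tree(Path("deployment-scripts"))` (the folder name is hypothetical) would rewrite the scripts in place and report how many replacements were made in each file.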

Problem statement: The logs were difficult to understand, since each log file interleaved information from multiple scale units.

Solution: [TBD: Change in the way we use RM] We will improve traceability by giving each scale unit its own environment.

This design, however, raises several new issues:

<

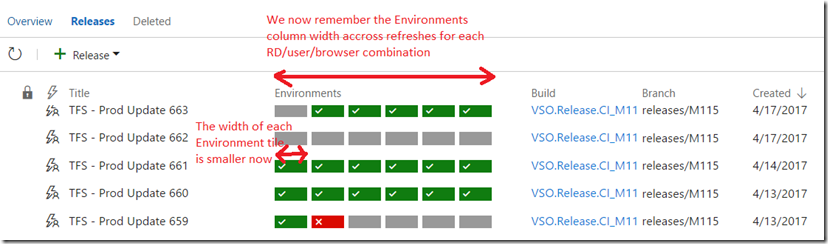

p>Sub-problem statement: The number of environments will go up from 5 to more than 15. Viewing the list of Releases will become a pain because of the need to constantly re-size the Environments column. In addition, even with the Release-level-approval feature, approvals will still be problematic. Reason: Each Ring will blow up into several Environments e.g. Ring 3 – which used to correspond to three scale units WEU2, SU6 and WUS22 – would now correspond to three environments. Hence, starting Ring 3 would correspond to deploying three environments manually, and approving three times – once for each environment (since Release-level-approvals kick in only if the previous approval is completed by the time the next deployment starts).

Solution: We have done a few things to make this better, and more work is pending:

- [Enhanced RM] We added support for “remembering” the width of the environments column per [User, Release Definition] combination per browser

- [Enhanced RM] We also made the environments smaller so that they take up less real estate

- [TBD: Enhanced RM] Over the next few sprints, we will add support for bulk deployments and bulk approvals. After that, we hope to be able to move to one environment per scale unit.
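The first item amounts to keying a stored preference by user and release definition, in storage that is local to each browser. The Python class below is a hypothetical sketch of that keying scheme only (in the product, this state lives in the browser); the key format and the 300-pixel default are assumptions, not RM’s actual values.

```python
from typing import Dict


class ColumnWidthStore:
    """Hypothetical stand-in for one browser's local storage.

    Each browser has its own store, so a width saved in one browser does not
    follow the user to another browser.
    """

    DEFAULT_WIDTH_PX = 300  # assumed default, for illustration only

    def __init__(self) -> None:
        self._items: Dict[str, str] = {}  # browser storage holds strings

    @staticmethod
    def _key(user_id: str, definition_id: int) -> str:
        # One entry per [User, Release Definition] combination.
        return f"environmentsColumnWidth/{user_id}/{definition_id}"

    def save(self, user_id: str, definition_id: int, width_px: int) -> None:
        self._items[self._key(user_id, definition_id)] = str(width_px)

    def load(self, user_id: str, definition_id: int) -> int:
        raw = self._items.get(self._key(user_id, definition_id))
        return self.DEFAULT_WIDTH_PX if raw is None else int(raw)


browser = ColumnWidthStore()
browser.save("alice", 42, 640)
print(browser.load("alice", 42))  # 640
print(browser.load("alice", 7))   # 300: a different definition keeps the default
```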

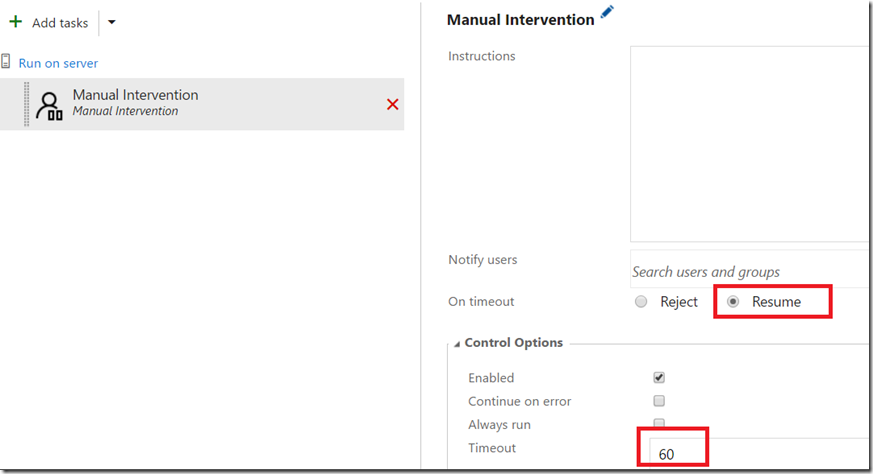

Problem statement: How do you model the scenario of “waiting for an environment to bake” without using up an agent which sleeps?

Solution:

(a) [Enhanced RM] We introduced the “Resume task on timeout” feature in the Manual Intervention task. When the task is set to “Resume on timeout”, it acts like a sleep, without using up any agent resources.

(b) [TBD: Enhanced RM] However, there is an additional requirement to make the timeout of the Manual Intervention task configurable through a variable, so that each environment’s timeout can be managed easily through environment variables. Once we do that over the next few sprints, we will be able to replace the Sleep task in the release definition with a Manual Intervention task set to “Resume on timeout”.
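Taken together, (a) and (b) describe a bake step whose duration comes from an environment-scoped variable and which simply resumes when the time is up. The Python sketch below models those intended semantics; the `BakeTimeMinutes` variable name, the use of minutes and the helper names are hypothetical, and the code does not describe how RM implements the Manual Intervention task. Nothing in the model holds an agent while it waits: the state is just re-evaluated against the clock.

```python
import re
from datetime import datetime, timedelta
from typing import Dict, Optional

# Matches $(Name) macros in a task field.
_MACRO = re.compile(r"\$\(([^)]+)\)")


def expand(value: str, variables: Dict[str, str]) -> str:
    """Substitute $(Name) macros; variable names are matched case-insensitively."""
    lookup = {name.lower(): val for name, val in variables.items()}
    # Unknown macros are left untouched.
    return _MACRO.sub(lambda m: lookup.get(m.group(1).lower(), m.group(0)), value)


def bake_state(
    timeout_field: str,
    env_variables: Dict[str, str],
    started_at: datetime,
    now: datetime,
    response: Optional[str] = None,
) -> str:
    """State of a 'resume on timeout' wait: 'waiting', 'resume', or the response."""
    if response is not None:
        return response  # someone explicitly resumed or rejected
    minutes = int(expand(timeout_field, env_variables))
    if now >= started_at + timedelta(minutes=minutes):
        return "resume"  # bake period over: continue instead of rejecting
    return "waiting"     # no agent is tied up while in this state


ring1_vars = {"BakeTimeMinutes": "120"}  # hypothetical per-environment value
start = datetime(2017, 1, 1, 9, 0)
print(bake_state("$(BakeTimeMinutes)", ring1_vars, start, start + timedelta(minutes=30)))   # waiting
print(bake_state("$(BakeTimeMinutes)", ring1_vars, start, start + timedelta(minutes=120)))  # resume
```

Keeping the duration in a per-environment variable means the inner rings can bake for less time than the outer rings without editing the task itself.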

Conclusion

VSTS relies heavily on RM for its production deployments, and now you have some insight into how we use RM, and the improvements we are making in RM as we fine-tune this experience. Stay tuned for part 2 of this blog, as we iron out more of the issues that have come up during this dogfooding.

We hope some of the techniques we used will apply to your releases too.
